Mirror of https://github.com/nod-ai/SHARK-Studio.git, synced 2026-01-09 22:07:55 -05:00

4

.github/workflows/gh-pages-releases.yml

vendored

@@ -10,7 +10,7 @@ jobs:

|

|||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

|

|

||||||

# Don't run this in everyone's forks.

|

# Don't run this in everyone's forks.

|

||||||

if: github.repository == 'nod-ai/AMDSHARK'

|

if: github.repository == 'nod-ai/AMD-SHARK-Studio'

|

||||||

|

|

||||||

steps:

|

steps:

|

||||||

- name: Checking out repository

|

- name: Checking out repository

|

||||||

@@ -18,7 +18,7 @@ jobs:

|

|||||||

with:

|

with:

|

||||||

token: ${{ secrets.NODAI_INVOCATION_TOKEN }}

|

token: ${{ secrets.NODAI_INVOCATION_TOKEN }}

|

||||||

- name: Run scrape releases script

|

- name: Run scrape releases script

|

||||||

run: python ./build_tools/scrape_releases.py nod-ai AMDSHARK > /tmp/index.html

|

run: python ./build_tools/scrape_releases.py nod-ai AMD-SHARK-Studio > /tmp/index.html

|

||||||

shell: bash

|

shell: bash

|

||||||

- run: git fetch --all

|

- run: git fetch --all

|

||||||

- run: git switch github-pages

|

- run: git switch github-pages

|

||||||

|

|||||||

2

.gitmodules

vendored

@@ -1,4 +1,4 @@

|

|||||||

[submodule "inference/thirdparty/amdshark-runtime"]

|

[submodule "inference/thirdparty/amdshark-runtime"]

|

||||||

path = inference/thirdparty/amdshark-runtime

|

path = inference/thirdparty/amdshark-runtime

|

||||||

url =https://github.com/nod-ai/SRT.git

|

url =https://github.com/nod-ai/SRT.git

|

||||||

branch = amdshark-06032022

|

branch = shark-06032022

|

||||||

|

|||||||

24

README.md

@@ -6,7 +6,7 @@ High Performance Machine Learning Distribution

|

|||||||

|

|

||||||

*The latest versions of this project are developments towards a refactor on top of IREE-Turbine. Until further notice, make sure you use an .exe release or a checkout of the `AMDSHARK-1.0` branch, for a working AMDSHARK-Studio*

|

*The latest versions of this project are developments towards a refactor on top of IREE-Turbine. Until further notice, make sure you use an .exe release or a checkout of the `AMDSHARK-1.0` branch, for a working AMDSHARK-Studio*

|

||||||

|

|

||||||

[](https://github.com/nod-ai/AMDSHARK-Studio/actions/workflows/nightly.yml)

|

[](https://github.com/nod-ai/AMD-SHARK-Studio/actions/workflows/nightly.yml)

|

||||||

|

|

||||||

<details>

|

<details>

|

||||||

<summary>Prerequisites - Drivers </summary>

|

<summary>Prerequisites - Drivers </summary>

|

||||||

@@ -27,9 +27,9 @@ Other users please ensure you have your latest vendor drivers and Vulkan SDK fro

|

|||||||

|

|

||||||

### Quick Start for AMDSHARK Stable Diffusion for Windows 10/11 Users

|

### Quick Start for AMDSHARK Stable Diffusion for Windows 10/11 Users

|

||||||

|

|

||||||

Install the Driver from [Prerequisites](https://github.com/nod-ai/AMDSHARK-Studio#install-your-hardware-drivers) above

|

Install the Driver from [Prerequisites](https://github.com/nod-ai/AMD-SHARK-Studio#install-your-hardware-drivers) above

|

||||||

|

|

||||||

Download the [stable release](https://github.com/nod-ai/AMDSHARK-Studio/releases/latest) or the most recent [AMDSHARK 1.0 pre-release](https://github.com/nod-ai/AMDSHARK-Studio/releases).

|

Download the [stable release](https://github.com/nod-ai/AMD-SHARK-Studio/releases/latest) or the most recent [AMDSHARK 1.0 pre-release](https://github.com/nod-ai/AMD-SHARK-Studio/releases).

|

||||||

|

|

||||||

Double click the .exe, or [run from the command line](#running) (recommended), and you should have the [UI](http://localhost:8080/) in the browser.

|

Double click the .exe, or [run from the command line](#running) (recommended), and you should have the [UI](http://localhost:8080/) in the browser.

|

||||||

|

|

||||||

@@ -67,8 +67,8 @@ Enjoy.

|

|||||||

## Check out the code

|

## Check out the code

|

||||||

|

|

||||||

```shell

|

```shell

|

||||||

git clone https://github.com/nod-ai/AMDSHARK.git

|

git clone https://github.com/nod-ai/AMD-SHARK-Studio.git

|

||||||

cd AMDSHARK

|

cd AMD-SHARK-Studio

|

||||||

```

|

```

|

||||||

|

|

||||||

## Switch to the Correct Branch (IMPORTANT!)

|

## Switch to the Correct Branch (IMPORTANT!)

|

||||||

@@ -179,12 +179,12 @@ python -m pip install --upgrade pip

|

|||||||

|

|

||||||

*macOS Metal* users please install https://sdk.lunarg.com/sdk/download/latest/mac/vulkan-sdk.dmg and enable "System wide install"

|

*macOS Metal* users please install https://sdk.lunarg.com/sdk/download/latest/mac/vulkan-sdk.dmg and enable "System wide install"

|

||||||

|

|

||||||

### Install AMDSHARK

|

### Install AMD-SHARK

|

||||||

|

|

||||||

This step pip installs AMDSHARK and related packages on Linux Python 3.8, 3.10 and 3.11 and macOS / Windows Python 3.11

|

This step pip installs AMD-SHARK and related packages on Linux Python 3.8, 3.10 and 3.11 and macOS / Windows Python 3.11

|

||||||

|

|

||||||

```shell

|

```shell

|

||||||

pip install nodai-amdshark -f https://nod-ai.github.io/AMDSHARK/package-index/ -f https://llvm.github.io/torch-mlir/package-index/ -f https://nod-ai.github.io/SRT/pip-release-links.html --extra-index-url https://download.pytorch.org/whl/nightly/cpu

|

pip install nodai-amdshark -f https://nod-ai.github.io/AMD-SHARK-Studio/package-index/ -f https://llvm.github.io/torch-mlir/package-index/ -f https://nod-ai.github.io/SRT/pip-release-links.html --extra-index-url https://download.pytorch.org/whl/nightly/cpu

|

||||||

```

|

```

|

||||||

|

|

||||||

### Run amdshark tank model tests.

|

### Run amdshark tank model tests.

|

||||||

@@ -196,7 +196,7 @@ See tank/README.md for a more detailed walkthrough of our pytest suite and CLI.

|

|||||||

### Download and run Resnet50 sample

|

### Download and run Resnet50 sample

|

||||||

|

|

||||||

```shell

|

```shell

|

||||||

curl -O https://raw.githubusercontent.com/nod-ai/AMDSHARK/main/amdshark/examples/amdshark_inference/resnet50_script.py

|

curl -O https://raw.githubusercontent.com/nod-ai/AMD-SHARK-Studio/main/amdshark/examples/amdshark_inference/resnet50_script.py

|

||||||

#Install deps for test script

|

#Install deps for test script

|

||||||

pip install --pre torch torchvision torchaudio tqdm pillow gsutil --extra-index-url https://download.pytorch.org/whl/nightly/cpu

|

pip install --pre torch torchvision torchaudio tqdm pillow gsutil --extra-index-url https://download.pytorch.org/whl/nightly/cpu

|

||||||

python ./resnet50_script.py --device="cpu" #use cuda or vulkan or metal

|

python ./resnet50_script.py --device="cpu" #use cuda or vulkan or metal

|

||||||

@@ -204,7 +204,7 @@ python ./resnet50_script.py --device="cpu" #use cuda or vulkan or metal

|

|||||||

|

|

||||||

### Download and run BERT (MiniLM) sample

|

### Download and run BERT (MiniLM) sample

|

||||||

```shell

|

```shell

|

||||||

curl -O https://raw.githubusercontent.com/nod-ai/AMDSHARK/main/amdshark/examples/amdshark_inference/minilm_jit.py

|

curl -O https://raw.githubusercontent.com/nod-ai/AMD-SHARK-Studio/main/amdshark/examples/amdshark_inference/minilm_jit.py

|

||||||

#Install deps for test script

|

#Install deps for test script

|

||||||

pip install transformers torch --extra-index-url https://download.pytorch.org/whl/nightly/cpu

|

pip install transformers torch --extra-index-url https://download.pytorch.org/whl/nightly/cpu

|

||||||

python ./minilm_jit.py --device="cpu" #use cuda or vulkan or metal

|

python ./minilm_jit.py --device="cpu" #use cuda or vulkan or metal

|

||||||

@@ -358,12 +358,12 @@ AMDSHARK is maintained to support the latest innovations in ML Models:

|

|||||||

| Vision Transformer | :green_heart: | :green_heart: | :green_heart: |

|

| Vision Transformer | :green_heart: | :green_heart: | :green_heart: |

|

||||||

| ResNet50 | :green_heart: | :green_heart: | :green_heart: |

|

| ResNet50 | :green_heart: | :green_heart: | :green_heart: |

|

||||||

|

|

||||||

For a complete list of the models supported in AMDSHARK, please refer to [tank/README.md](https://github.com/nod-ai/AMDSHARK-Studio/blob/main/tank/README.md).

|

For a complete list of the models supported in AMDSHARK, please refer to [tank/README.md](https://github.com/nod-ai/AMD-SHARK-Studio/blob/main/tank/README.md).

|

||||||

|

|

||||||

## Communication Channels

|

## Communication Channels

|

||||||

|

|

||||||

* [AMDSHARK Discord server](https://discord.gg/RUqY2h2s9u): Real time discussions with the AMDSHARK team and other users

|

* [AMDSHARK Discord server](https://discord.gg/RUqY2h2s9u): Real time discussions with the AMDSHARK team and other users

|

||||||

* [GitHub issues](https://github.com/nod-ai/AMDSHARK-Studio/issues): Feature requests, bugs etc

|

* [GitHub issues](https://github.com/nod-ai/AMD-SHARK-Studio/issues): Feature requests, bugs etc

|

||||||

|

|

||||||

## Related Projects

|

## Related Projects

|

||||||

|

|

||||||

|

|||||||

@@ -14,7 +14,7 @@ def amdshark(model, inputs, *, options):

|

|||||||

log.exception(

|

log.exception(

|

||||||

"Unable to import AMDSHARK - High Performance Machine Learning Distribution"

|

"Unable to import AMDSHARK - High Performance Machine Learning Distribution"

|

||||||

"Please install the right version of AMDSHARK that matches the PyTorch version being used. "

|

"Please install the right version of AMDSHARK that matches the PyTorch version being used. "

|

||||||

"Refer to https://github.com/nod-ai/AMDSHARK-Studio/ for details."

|

"Refer to https://github.com/nod-ai/AMD-SHARK-Studio/ for details."

|

||||||

)

|

)

|

||||||

raise

|

raise

|

||||||

return AMDSharkBackend(model, inputs, options)

|

return AMDSharkBackend(model, inputs, options)

|

||||||

|

|||||||
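The hunk above shows a guarded import: log a pointer to the project page, then re-raise so the caller still sees the original failure. A minimal sketch of that pattern follows. The helper name `load_backend` is hypothetical, and it uses the standard `importlib` and `logging` modules rather than the project's own backend code, which the diff does not show in full.

```python
import importlib
import logging

log = logging.getLogger(__name__)

# Taken from the new side of the diff above.
PROJECT_URL = "https://github.com/nod-ai/AMD-SHARK-Studio/"


def load_backend(module_name: str):
    """Import an optional backend module (hypothetical helper).

    On failure, log the traceback together with a pointer to the project
    page, then re-raise the original ImportError unchanged.
    """
    try:
        return importlib.import_module(module_name)
    except ImportError:
        log.exception(
            "Unable to import %s. Refer to %s for details.",
            module_name,
            PROJECT_URL,
        )
        raise
```

Re-raising with a bare `raise` keeps the original traceback, so the log message adds guidance without hiding the root cause.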

@@ -23,7 +23,7 @@ pip install accelerate transformers ftfy

|

|||||||

|

|

||||||

Please cherry-pick this branch of torch-mlir: https://github.com/vivekkhandelwal1/torch-mlir/tree/sd-ops

|

Please cherry-pick this branch of torch-mlir: https://github.com/vivekkhandelwal1/torch-mlir/tree/sd-ops

|

||||||

and build it locally. You can find the instructions for using a locally built Torch-MLIR,

|

and build it locally. You can find the instructions for using a locally built Torch-MLIR,

|

||||||

here: https://github.com/nod-ai/AMDSHARK-Studio#how-to-use-your-locally-built-iree--torch-mlir-with-amdshark

|

here: https://github.com/nod-ai/AMD-SHARK-Studio#how-to-use-your-locally-built-iree--torch-mlir-with-amdshark

|

||||||

|

|

||||||

## Run the Stable diffusion fine tuning

|

## Run the Stable diffusion fine tuning

|

||||||

|

|

||||||

|

|||||||

@@ -157,7 +157,7 @@ def device_driver_info(device):

|

|||||||

f"Required drivers for {device} not found. {device_driver_err_map[device]['debug']} "

|

f"Required drivers for {device} not found. {device_driver_err_map[device]['debug']} "

|

||||||

f"Please install the required drivers{device_driver_err_map[device]['solution']} "

|

f"Please install the required drivers{device_driver_err_map[device]['solution']} "

|

||||||

f"For further assistance please reach out to the community on discord [https://discord.com/invite/RUqY2h2s9u]"

|

f"For further assistance please reach out to the community on discord [https://discord.com/invite/RUqY2h2s9u]"

|

||||||

f" and/or file a bug at https://github.com/nod-ai/AMDSHARK-Studio/issues"

|

f" and/or file a bug at https://github.com/nod-ai/AMD-SHARK-Studio/issues"

|

||||||

)

|

)

|

||||||

return err_msg

|

return err_msg

|

||||||

else:

|

else:

|

||||||

|

|||||||
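For readers who want to see how the message in this hunk is assembled, here is a small self-contained sketch. The contents of `device_driver_err_map` are not part of the diff, so the single entry below is a made-up placeholder, and `driver_error_message` is a hypothetical name used only for illustration.

```python
# Hypothetical entry: the real device_driver_err_map is not shown in the diff.
device_driver_err_map = {
    "vulkan": {
        "debug": "No Vulkan loader was detected.",
        "solution": " from your GPU vendor.",
    },
}

# URL taken from the new side of the diff above.
ISSUES_URL = "https://github.com/nod-ai/AMD-SHARK-Studio/issues"


def driver_error_message(device: str) -> str:
    """Build the 'drivers not found' message for one device key."""
    entry = device_driver_err_map[device]
    return (
        f"Required drivers for {device} not found. {entry['debug']} "
        f"Please install the required drivers{entry['solution']} "
        f"For further assistance please reach out to the community on discord "
        f"[https://discord.com/invite/RUqY2h2s9u]"
        f" and/or file a bug at {ISSUES_URL}"
    )
```

Keeping the issue tracker URL in one constant means a future repository rename touches a single line instead of every message string.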

@@ -192,7 +192,7 @@ def get_rocm_target_chip(device_str):

|

|||||||

if key in device_str:

|

if key in device_str:

|

||||||

return rocm_chip_map[key]

|

return rocm_chip_map[key]

|

||||||

raise AssertionError(

|

raise AssertionError(

|

||||||

f"Device {device_str} not recognized. Please file an issue at https://github.com/nod-ai/AMDSHARK-Studio/issues."

|

f"Device {device_str} not recognized. Please file an issue at https://github.com/nod-ai/AMD-SHARK-Studio/issues."

|

||||||

)

|

)

|

||||||

|

|

||||||

|

|

||||||

|

|||||||
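The lookup in this hunk is a substring match over a chip map, with an `AssertionError` as the fallback. The sketch below reproduces that control flow under stated assumptions: the two map entries are illustrative placeholders (the real `rocm_chip_map` is not visible in the diff), and the loop header is inferred from the `if key in device_str` line.

```python
# Illustrative placeholder entries; the real rocm_chip_map is not in the diff.
rocm_chip_map = {
    "RX 7900": "gfx1100",
    "MI250": "gfx90a",
}

# URL taken from the new side of the diff above.
ISSUES_URL = "https://github.com/nod-ai/AMD-SHARK-Studio/issues"


def get_rocm_target_chip(device_str: str) -> str:
    """Return the target chip for the first map key found in device_str."""
    for key in rocm_chip_map:
        if key in device_str:
            return rocm_chip_map[key]
    raise AssertionError(
        f"Device {device_str} not recognized. "
        f"Please file an issue at {ISSUES_URL}."
    )
```

Because the match is by substring and dictionaries preserve insertion order, a more specific key should be listed before a shorter key that it contains.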

@@ -1,9 +1,9 @@

|

|||||||

# Overview

|

# Overview

|

||||||

|

|

||||||

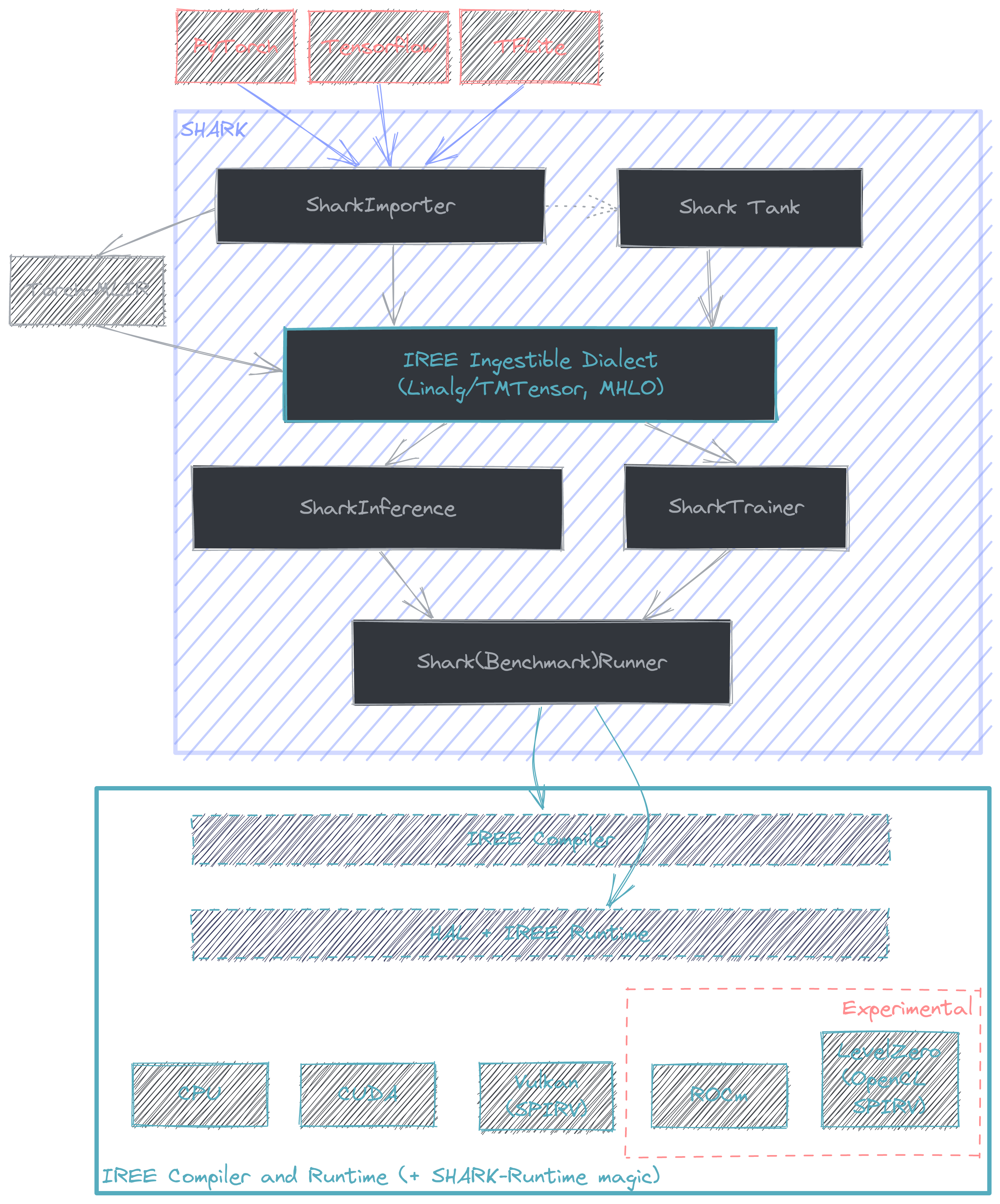

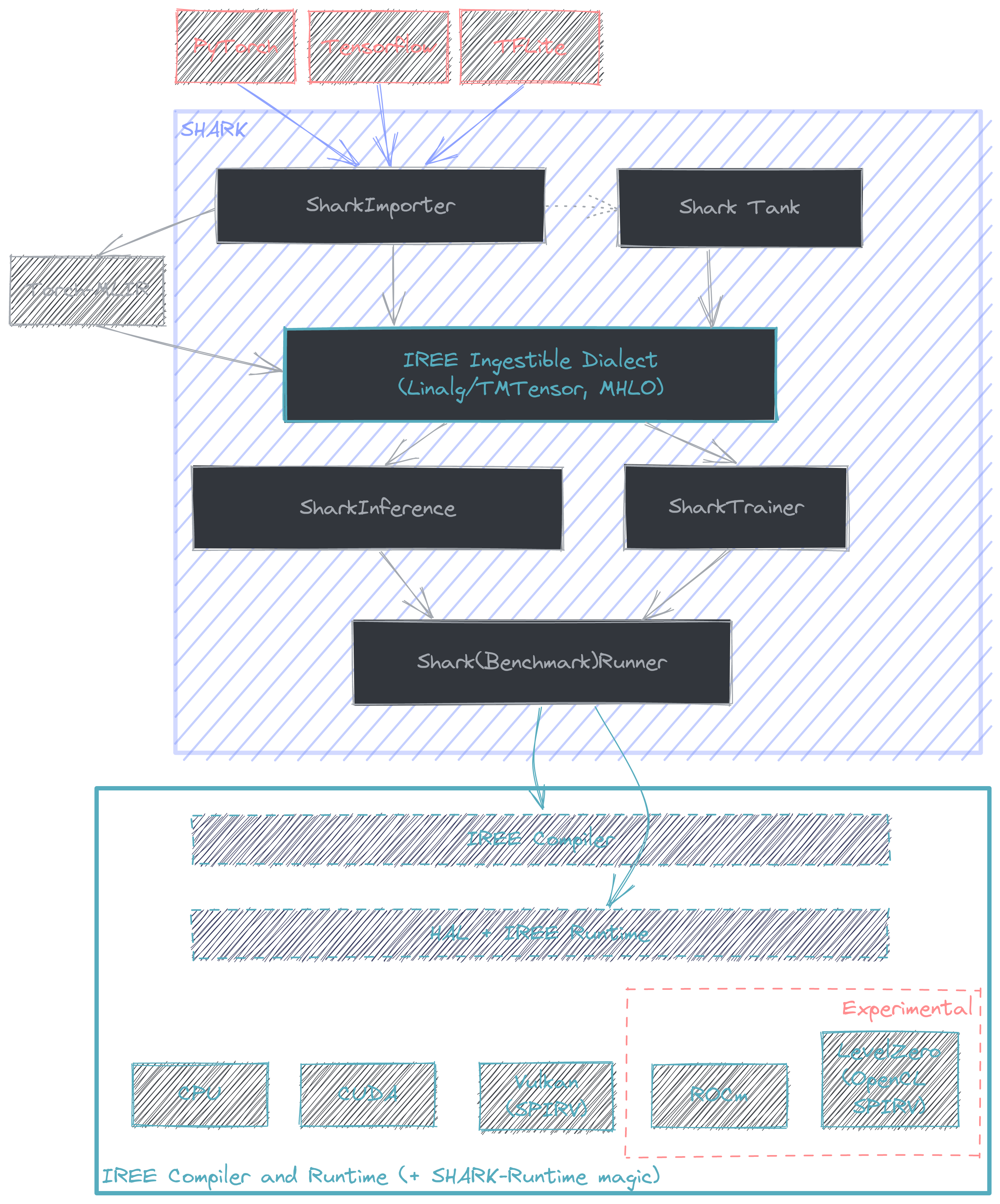

This document is intended to provide a starting point for profiling with AMDSHARK/IREE. At its core

|

This document is intended to provide a starting point for profiling with AMDSHARK/IREE. At its core

|

||||||

[AMDSHARK](https://github.com/nod-ai/AMDSHARK-Studio/tree/main/tank) is a python API that links the MLIR lowerings from various

|

[AMDSHARK](https://github.com/nod-ai/AMD-SHARK-Studio/tree/main/tank) is a python API that links the MLIR lowerings from various

|

||||||

frameworks + frontends (e.g. PyTorch -> Torch-MLIR) with the compiler + runtime offered by IREE. More information

|

frameworks + frontends (e.g. PyTorch -> Torch-MLIR) with the compiler + runtime offered by IREE. More information

|

||||||

on model coverage and framework support can be found [here](https://github.com/nod-ai/AMDSHARK-Studio/tree/main/tank). The intended

|

on model coverage and framework support can be found [here](https://github.com/nod-ai/AMD-SHARK-Studio/tree/main/tank). The intended

|

||||||

use case for AMDSHARK is for compilation and deployment of performant state of the art AI models.

|

use case for AMDSHARK is for compilation and deployment of performant state of the art AI models.

|

||||||

|

|

||||||

|

|

||||||

|

|||||||

@@ -6,7 +6,7 @@ We currently make use of the [AI-Render Plugin](https://github.com/benrugg/AI-Re

|

|||||||

|

|

||||||

## Setup AMDSHARK and prerequisites:

|

## Setup AMDSHARK and prerequisites:

|

||||||

|

|

||||||

* Download the latest AMDSHARK SD webui .exe from [here](https://github.com/nod-ai/AMDSHARK-Studio/releases) or follow instructions on the [README](https://github.com/nod-ai/AMDSHARK-Studio#readme)

|

* Download the latest AMDSHARK SD webui .exe from [here](https://github.com/nod-ai/AMD-SHARK-Studio/releases) or follow instructions on the [README](https://github.com/nod-ai/AMD-SHARK-Studio#readme)

|

||||||

* Once you have the .exe where you would like AMDSHARK to install, run the .exe from terminal/PowerShell with the `--api` flag:

|

* Once you have the .exe where you would like AMDSHARK to install, run the .exe from terminal/PowerShell with the `--api` flag:

|

||||||

```

|

```

|

||||||

## Run the .exe in API mode:

|

## Run the .exe in API mode:

|

||||||

|

|||||||

@@ -20,8 +20,8 @@ This does mean however, that on a brand new fresh install of AMDSHARK that has n

|

|||||||

|

|

||||||

## Setup AMDSHARK and prerequisites:

|

## Setup AMDSHARK and prerequisites:

|

||||||

|

|

||||||

* Make sure you have suitable drivers for your graphics card installed. See the prerequisites section of the [README](https://github.com/nod-ai/AMDSHARK-Studio#readme).

|

* Make sure you have suitable drivers for your graphics card installed. See the prerequisites section of the [README](https://github.com/nod-ai/AMD-SHARK-Studio#readme).

|

||||||

* Download the latest AMDSHARK studio .exe from [here](https://github.com/nod-ai/AMDSHARK-Studio/releases) or follow the instructions in the [README](https://github.com/nod-ai/AMDSHARK-Studio#readme) for an advanced, Linux or Mac install.

|

* Download the latest AMDSHARK studio .exe from [here](https://github.com/nod-ai/AMD-SHARK-Studio/releases) or follow the instructions in the [README](https://github.com/nod-ai/AMD-SHARK-Studio#readme) for an advanced, Linux or Mac install.

|

||||||

* Run AMDSHARK from terminal/PowerShell with the `--api` flag. Since koboldcpp also expects both CORS support and the image generator to be running on port `7860` rather than AMDSHARK default of `8080`, also include both the `--api_accept_origin` flag with a suitable origin (use `="*"` to enable all origins) and `--server_port=7860` on the command line. (See the if you want to run AMDSHARK on a different port)

|

* Run AMDSHARK from terminal/PowerShell with the `--api` flag. Since koboldcpp also expects both CORS support and the image generator to be running on port `7860` rather than AMDSHARK default of `8080`, also include both the `--api_accept_origin` flag with a suitable origin (use `="*"` to enable all origins) and `--server_port=7860` on the command line. (See the if you want to run AMDSHARK on a different port)

|

||||||

|

|

||||||

```powershell

|

```powershell

|

||||||

|

|||||||

@@ -8,7 +8,7 @@ wheel

|

|||||||

|

|

||||||

torch==2.3.0

|

torch==2.3.0

|

||||||

iree-turbine @ git+https://github.com/iree-org/iree-turbine.git@main

|

iree-turbine @ git+https://github.com/iree-org/iree-turbine.git@main

|

||||||

turbine-models @ git+https://github.com/nod-ai/AMDSHARK-ModelDev.git@main#subdirectory=models

|

turbine-models @ git+https://github.com/nod-ai/AMD-SHARK-ModelDev.git@main#subdirectory=models

|

||||||

diffusers @ git+https://github.com/nod-ai/diffusers@0.29.0.dev0-amdshark

|

diffusers @ git+https://github.com/nod-ai/diffusers@0.29.0.dev0-amdshark

|

||||||

brevitas @ git+https://github.com/Xilinx/brevitas.git@6695e8df7f6a2c7715b9ed69c4b78157376bb60b

|

brevitas @ git+https://github.com/Xilinx/brevitas.git@6695e8df7f6a2c7715b9ed69c4b78157376bb60b

|

||||||

|

|

||||||

|

|||||||

4

setup.py

@@ -20,8 +20,8 @@ setup(

|

|||||||

long_description=long_description,

|

long_description=long_description,

|

||||||

long_description_content_type="text/markdown",

|

long_description_content_type="text/markdown",

|

||||||

project_urls={

|

project_urls={

|

||||||

"Code": "https://github.com/nod-ai/AMDSHARK",

|

"Code": "https://github.com/nod-ai/AMD-SHARK-Studio",

|

||||||

"Bug Tracker": "https://github.com/nod-ai/AMDSHARK-Studio/issues",

|

"Bug Tracker": "https://github.com/nod-ai/AMD-SHARK-Studio/issues",

|

||||||

},

|

},

|

||||||

classifiers=[

|

classifiers=[

|

||||||

"Programming Language :: Python :: 3",

|

"Programming Language :: Python :: 3",

|

||||||

|

|||||||

@@ -142,7 +142,7 @@ For more information refer to [MODEL TRACKING SHEET](https://docs.google.com/spr

|

|||||||

|

|

||||||

### Run all model tests on CPU/GPU/VULKAN/Metal

|

### Run all model tests on CPU/GPU/VULKAN/Metal

|

||||||

|

|

||||||

For a list of models included in our pytest model suite, see https://github.com/nod-ai/AMDSHARK-Studio/blob/main/tank/all_models.csv

|

For a list of models included in our pytest model suite, see https://github.com/nod-ai/AMD-SHARK-Studio/blob/main/tank/all_models.csv

|

||||||

|

|

||||||

```shell

|

```shell

|

||||||

pytest tank/test_models.py

|

pytest tank/test_models.py

|

||||||

|

|||||||

@@ -1,19 +1,19 @@

|

|||||||

bert-base-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""

|

bert-base-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""

|

||||||

bert-large-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""

|

bert-large-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""

|

||||||

facebook/deit-small-distilled-patch16-224,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"Fails during iree-compile.",""

|

facebook/deit-small-distilled-patch16-224,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"Fails during iree-compile.",""

|

||||||

google/vit-base-patch16-224,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,True,True,True,"https://github.com/nod-ai/AMDSHARK/issues/311",""

|

google/vit-base-patch16-224,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,True,True,True,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/311",""

|

||||||

microsoft/beit-base-patch16-224-pt22k-ft22k,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"https://github.com/nod-ai/AMDSHARK/issues/390","macos"

|

microsoft/beit-base-patch16-224-pt22k-ft22k,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/390","macos"

|

||||||

microsoft/MiniLM-L12-H384-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""

|

microsoft/MiniLM-L12-H384-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""

|

||||||

google/mobilebert-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"https://github.com/nod-ai/AMDSHARK/issues/344","macos"

|

google/mobilebert-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/344","macos"

|

||||||

mobilenet_v3_small,linalg,torch,1e-1,1e-2,default,nhcw-nhwc,False,False,False,"https://github.com/nod-ai/AMDSHARK/issues/388, https://github.com/nod-ai/AMDSHARK/issues/1487","macos"

|

mobilenet_v3_small,linalg,torch,1e-1,1e-2,default,nhcw-nhwc,False,False,False,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/388, https://github.com/nod-ai/AMD-SHARK-Studio/issues/1487","macos"

|

||||||

nvidia/mit-b0,linalg,torch,1e-2,1e-3,default,None,True,True,True,"https://github.com/nod-ai/AMDSHARK/issues/343,https://github.com/nod-ai/AMDSHARK/issues/1487","macos"

|

nvidia/mit-b0,linalg,torch,1e-2,1e-3,default,None,True,True,True,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/343,https://github.com/nod-ai/AMD-SHARK-Studio/issues/1487","macos"

|

||||||

resnet101,linalg,torch,1e-2,1e-3,default,nhcw-nhwc/img2col,True,True,True,"","macos"

|

resnet101,linalg,torch,1e-2,1e-3,default,nhcw-nhwc/img2col,True,True,True,"","macos"

|

||||||

resnet18,linalg,torch,1e-2,1e-3,default,None,True,True,True,"","macos"

|

resnet18,linalg,torch,1e-2,1e-3,default,None,True,True,True,"","macos"

|

||||||

resnet50,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"","macos"

|

resnet50,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"","macos"

|

||||||

squeezenet1_0,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"","macos"

|

squeezenet1_0,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"","macos"

|

||||||

wide_resnet50_2,linalg,torch,1e-2,1e-3,default,nhcw-nhwc/img2col,True,True,True,"","macos"

|

wide_resnet50_2,linalg,torch,1e-2,1e-3,default,nhcw-nhwc/img2col,True,True,True,"","macos"

|

||||||

mnasnet1_0,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"","macos"

|

mnasnet1_0,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,False,False,False,"","macos"

|

||||||

efficientnet_b0,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,True,True,True,"https://github.com/nod-ai/AMDSHARK/issues/1487","macos"

|

efficientnet_b0,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,True,True,True,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/1487","macos"

|

||||||

efficientnet_b7,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,True,True,True,"https://github.com/nod-ai/AMDSHARK/issues/1487","macos"

|

efficientnet_b7,linalg,torch,1e-2,1e-3,default,nhcw-nhwc,True,True,True,"https://github.com/nod-ai/AMD-SHARK-Studio/issues/1487","macos"

|

||||||

t5-base,linalg,torch,1e-2,1e-3,default,None,True,True,True,"","macos"

|

t5-base,linalg,torch,1e-2,1e-3,default,None,True,True,True,"","macos"

|

||||||

t5-large,linalg,torch,1e-2,1e-3,default,None,True,True,True,"","macos"

|

t5-large,linalg,torch,1e-2,1e-3,default,None,True,True,True,"","macos"

|

||||||

|

|||||||
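The rows in this hunk put the issue links inside a quoted field that can itself contain commas, so a plain `split(",")` on the whole line would miscount columns. A minimal sketch using the standard `csv` module is shown below. The diff does not name the columns, so the code addresses the link field by position (the eleventh field, index 10), which is an assumption read off the sample rows. `issue_links` is a hypothetical helper.

```python
import csv
import io

# Two rows copied from the new side of the diff above.
SAMPLE = (
    'bert-base-uncased,linalg,torch,1e-2,1e-3,default,None,False,False,False,"",""\n'
    'mobilenet_v3_small,linalg,torch,1e-1,1e-2,default,nhcw-nhwc,False,False,False,'
    '"https://github.com/nod-ai/AMD-SHARK-Studio/issues/388, '
    'https://github.com/nod-ai/AMD-SHARK-Studio/issues/1487","macos"\n'
)


def issue_links(row):
    """Return the issue URLs found in the eleventh field (assumed position)."""
    note = row[10]
    parts = (part.strip() for part in note.split(","))
    return [part for part in parts if part.startswith("https://")]


# csv.reader honours the double quotes, so the embedded comma stays in one field.
rows = list(csv.reader(io.StringIO(SAMPLE)))
```

Rows whose eleventh field holds free text such as "Fails during iree-compile." yield an empty list, since only parts starting with `https://` are kept.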

|

@@ -1,5 +1,5 @@

|

|||||||

# Lint as: python3

|

# Lint as: python3

|

||||||

"""AMDSHARK Tank"""

|

"""AMD-SHARK Tank"""

|

||||||

# python generate_amdsharktank.py, you have to give a csv file with [model_name, model_download_url]

|

# python generate_amdsharktank.py, you have to give a csv file with [model_name, model_download_url]

|

||||||

# will generate local amdshark tank folder like this:

|

# will generate local amdshark tank folder like this:

|

||||||

# /AMDSHARK

|

# /AMDSHARK

|

||||||

@@ -191,7 +191,7 @@ if __name__ == "__main__":

|

|||||||

# type=lambda x: is_valid_file(x),

|

# type=lambda x: is_valid_file(x),

|

||||||

# default="./tank/torch_model_list.csv",

|

# default="./tank/torch_model_list.csv",

|

||||||

# help="""Contains the file with torch_model name and args.

|

# help="""Contains the file with torch_model name and args.

|

||||||

# Please see: https://github.com/nod-ai/AMDSHARK-Studio/blob/main/tank/torch_model_list.csv""",

|

# Please see: https://github.com/nod-ai/AMD-SHARK-Studio/blob/main/tank/torch_model_list.csv""",

|

||||||

# )

|

# )

|

||||||

# parser.add_argument(

|

# parser.add_argument(

|

||||||

# "--ci_tank_dir",

|

# "--ci_tank_dir",

|

||||||

|

|||||||

@@ -396,7 +396,7 @@ class AMDSharkModuleTest(unittest.TestCase):

|

|||||||

and device == "rocm"

|

and device == "rocm"

|

||||||

):

|

):

|

||||||

pytest.xfail(

|

pytest.xfail(

|

||||||

reason="iree-compile buffer limit issue: https://github.com/nod-ai/AMDSHARK-Studio/issues/475"

|

reason="iree-compile buffer limit issue: https://github.com/nod-ai/AMD-SHARK-Studio/issues/475"

|

||||||

)

|

)

|

||||||

if (

|

if (

|

||||||

config["model_name"]

|

config["model_name"]

|

||||||

@@ -407,7 +407,7 @@ class AMDSharkModuleTest(unittest.TestCase):

|

|||||||

and device == "rocm"

|

and device == "rocm"

|

||||||

):

|

):

|

||||||

pytest.xfail(

|

pytest.xfail(

|

||||||

reason="Numerics issues: https://github.com/nod-ai/AMDSHARK-Studio/issues/476"

|

reason="Numerics issues: https://github.com/nod-ai/AMD-SHARK-Studio/issues/476"

|

||||||

)

|

)

|

||||||

if config["framework"] == "tf" and self.module_tester.batch_size != 1:

|

if config["framework"] == "tf" and self.module_tester.batch_size != 1:

|

||||||

pytest.xfail(

|

pytest.xfail(

|

||||||

|

|||||||
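Most hunks in this commit apply the same substitution: references to `nod-ai/AMDSHARK` or `nod-ai/AMDSHARK-Studio` become `nod-ai/AMD-SHARK-Studio`. The sketch below shows one way such a rewrite could be expressed as a single regular expression. It is an illustration only, not the tool that produced this commit. `rewrite_repo_urls` is a hypothetical name, and the pattern deliberately leaves other repositories such as `AMDSHARK-ModelDev` untouched.

```python
import re

# Match the old repo name after "nod-ai/" or "nod-ai.github.io/".
# The negative lookahead stops the match from firing on longer names
# such as "AMDSHARK-ModelDev".
_OLD_REPO = re.compile(
    r"(nod-ai(?:\.github\.io)?/)AMDSHARK(?:-Studio)?(?![\w-])"
)


def rewrite_repo_urls(text: str) -> str:
    """Rewrite old repository references to nod-ai/AMD-SHARK-Studio."""
    return _OLD_REPO.sub(r"\g<1>AMD-SHARK-Studio", text)
```

The rewrite is idempotent: text that already uses `AMD-SHARK-Studio` no longer contains the literal `AMDSHARK`, so a second pass changes nothing.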
