diff --git a/.github/workflows/ci.yml b/.github/workflows/ci.yml

new file mode 100644

index 00000000..5a6f6a3e

--- /dev/null

+++ b/.github/workflows/ci.yml

@@ -0,0 +1,35 @@

+name: Run unit tests

+

+on:

+ push:

+ branches: [ "main" ]

+ pull_request:

+ branches: [ "main" ]

+

+jobs:

+ build:

+

+ runs-on: ${{ matrix.os }}

+ timeout-minutes: 10

+ strategy:

+ fail-fast: false

+ matrix:

+ python-version: ["3.9", "3.12"]

+ os: [ubuntu-latest, macos-latest, windows-latest]

+

+ steps:

+ - uses: actions/checkout@v4

+ - name: Set up Python ${{ matrix.python-version }}

+ uses: actions/setup-python@v4

+ with:

+ python-version: ${{ matrix.python-version }}

+ - name: Install dependencies

+ run: |

+ python -m pip install poetry

+ poetry install --with=dev

+ - name: Lint with ruff

+ run: poetry run ruff check --output-format github

+ - name: Check code style with ruff

+ run: poetry run ruff format --check --diff

+ - name: Test with pytest

+ run: poetry run pytest
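The `strategy.matrix` above expands into one job per combination of `python-version` and `os` (2 × 3 = 6 jobs). Because `fail-fast` is `false`, a failing job does not cancel the others. Below is a minimal Python sketch of that expansion. It assumes plain cross-product semantics; the workflow has no `include` or `exclude` entries, so the sketch does not model them.

```python
from itertools import product

# Values copied from the workflow's strategy.matrix
MATRIX = {
    "python-version": ["3.9", "3.12"],
    "os": ["ubuntu-latest", "macos-latest", "windows-latest"],
}


def expand_matrix(matrix: dict[str, list[str]]) -> list[dict[str, str]]:
    """Return one job configuration per combination of matrix values (cross product)."""
    keys = list(matrix)
    return [dict(zip(keys, combo)) for combo in product(*(matrix[key] for key in keys))]


jobs = expand_matrix(MATRIX)
for job in jobs:
    print(f"Python {job['python-version']} on {job['os']}")
print(f"{len(jobs)} jobs")
```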

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 00000000..8806b02f

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,18 @@

+__pycache__/

+.venv/

+.vscode/

+.idea/

+htmlcov/

+dist/

+workspace/

+

+.coverage

+*.code-workspace

+.*_cache

+.env

+*.pyc

+*.db

+config.json

+poetry.lock

+.DS_Store

+*.log
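Most entries above are simple glob patterns. For example, `.*_cache` covers tool caches such as `.ruff_cache` and `.pytest_cache`. The sketch below shows roughly how such patterns apply to a single file or directory name. It uses Python's `fnmatch`, which only approximates gitignore semantics: negation (`!`), anchoring with `/` and `**` are not handled. A trailing `/` is treated here as "directories only".

```python
from fnmatch import fnmatch

# A subset of the patterns from the .gitignore above
PATTERNS = ["__pycache__/", ".venv/", "workspace/", ".*_cache", "*.pyc", "*.db", "config.json", "*.log"]


def is_ignored(name: str, is_dir: bool = False) -> bool:
    """Approximate check of whether a single (base)name is ignored.

    Simplification: only basename patterns are handled; a trailing "/" restricts
    a pattern to directories, mirroring gitignore's directory-only rule.
    """
    for pattern in PATTERNS:
        if pattern.endswith("/"):
            if is_dir and fnmatch(name, pattern.rstrip("/")):
                return True
        elif fnmatch(name, pattern):
            return True
    return False


print(is_ignored(".ruff_cache", is_dir=True))  # True
print(is_ignored("pythagora.db"))  # True
print(is_ignored("example-config.json"))  # False
print(is_ignored("workspace"))  # False: "workspace/" only matches directories
```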

diff --git a/.pre-commit-config.yaml b/.pre-commit-config.yaml

new file mode 100644

index 00000000..1d395e98

--- /dev/null

+++ b/.pre-commit-config.yaml

@@ -0,0 +1,21 @@

+fail_fast: true

+repos:

+ - repo: https://github.com/astral-sh/ruff-pre-commit

+ # Ruff version.

+ rev: v0.3.5

+ hooks:

+ # Run the linter.

+ - id: ruff

+ args: [ --fix ]

+ # Run the formatter.

+ - id: ruff-format

+ - repo: local

+ hooks:

+ # Run the tests

+ - id: pytest

+ name: pytest

+ stages: [commit]

+ types: [python]

+ entry: pytest

+ language: system

+ pass_filenames: false
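With `fail_fast: true` at the top level, pre-commit stops running the remaining hooks after the first failure, so a ruff failure prevents pytest from running. Below is a minimal sketch of that ordering using `subprocess`. The three commands are hypothetical stand-ins for the ruff, ruff-format and pytest hooks. The sketch does not model pre-commit's own file selection (`types`, `pass_filenames`) or its check for files modified by a hook.

```python
import subprocess
import sys


def run_hooks(commands: list[list[str]]) -> list[tuple[str, int]]:
    """Run commands in order, stopping after the first non-zero exit code (fail-fast)."""
    results = []
    for cmd in commands:
        code = subprocess.run(cmd).returncode
        results.append((" ".join(cmd), code))
        if code != 0:
            break  # fail_fast: skip the remaining hooks
    return results


# Hypothetical stand-ins for the hooks: the second one fails, so the third never runs
hooks = [
    [sys.executable, "-c", "print('ruff: ok')"],
    [sys.executable, "-c", "import sys; sys.exit(1)"],
    [sys.executable, "-c", "print('pytest: ok')"],
]
results = run_hooks(hooks)
print([code for _, code in results])  # [0, 1]
```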

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 00000000..74887def

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,110 @@

+# Functional Source License, Version 1.1, MIT Future License

+

+## Abbreviation

+

+FSL-1.1-MIT

+

+## Notice

+

+Copyright 2024 Pythagora Technologies, Inc.

+

+## Terms and Conditions

+

+### Licensor ("We")

+

+The party offering the Software under these Terms and Conditions.

+

+### The Software

+

+The "Software" is each version of the software that we make available under

+these Terms and Conditions, as indicated by our inclusion of these Terms and

+Conditions with the Software.

+

+### License Grant

+

+Subject to your compliance with this License Grant and the Patents,

+Redistribution and Trademark clauses below, we hereby grant you the right to

+use, copy, modify, create derivative works, publicly perform, publicly display

+and redistribute the Software for any Permitted Purpose identified below.

+

+### Permitted Purpose

+

+A Permitted Purpose is any purpose other than a Competing Use. A Competing Use

+means making the Software available to others in a commercial product or

+service that:

+

+1. substitutes for the Software;

+

+2. substitutes for any other product or service we offer using the Software

+ that exists as of the date we make the Software available; or

+

+3. offers the same or substantially similar functionality as the Software.

+

+Permitted Purposes specifically include using the Software:

+

+1. for your internal use and access;

+

+2. for non-commercial education;

+

+3. for non-commercial research; and

+

+4. in connection with professional services that you provide to a licensee

+ using the Software in accordance with these Terms and Conditions.

+

+### Patents

+

+To the extent your use for a Permitted Purpose would necessarily infringe our

+patents, the license grant above includes a license under our patents. If you

+make a claim against any party that the Software infringes or contributes to

+the infringement of any patent, then your patent license to the Software ends

+immediately.

+

+### Redistribution

+

+The Terms and Conditions apply to all copies, modifications and derivatives of

+the Software.

+

+If you redistribute any copies, modifications or derivatives of the Software,

+you must include a copy of or a link to these Terms and Conditions and not

+remove any copyright notices provided in or with the Software.

+

+### Disclaimer

+

+THE SOFTWARE IS PROVIDED "AS IS" AND WITHOUT WARRANTIES OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING WITHOUT LIMITATION WARRANTIES OF FITNESS FOR A PARTICULAR

+PURPOSE, MERCHANTABILITY, TITLE OR NON-INFRINGEMENT.

+

+IN NO EVENT WILL WE HAVE ANY LIABILITY TO YOU ARISING OUT OF OR RELATED TO THE

+SOFTWARE, INCLUDING INDIRECT, SPECIAL, INCIDENTAL OR CONSEQUENTIAL DAMAGES,

+EVEN IF WE HAVE BEEN INFORMED OF THEIR POSSIBILITY IN ADVANCE.

+

+### Trademarks

+

+Except for displaying the License Details and identifying us as the origin of

+the Software, you have no right under these Terms and Conditions to use our

+trademarks, trade names, service marks or product names.

+

+## Grant of Future License

+

+We hereby irrevocably grant you an additional license to use the Software under

+the MIT license that is effective on the second anniversary of the date we make

+the Software available. On or after that date, you may use the Software under

+the MIT license, in which case the following will apply:

+

+Permission is hereby granted, free of charge, to any person obtaining a copy of

+this software and associated documentation files (the "Software"), to deal in

+the Software without restriction, including without limitation the rights to

+use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies

+of the Software, and to permit persons to whom the Software is furnished to do

+so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/README.md b/README.md

new file mode 100644

index 00000000..a7e496bb

--- /dev/null

+++ b/README.md

@@ -0,0 +1,238 @@

+

+

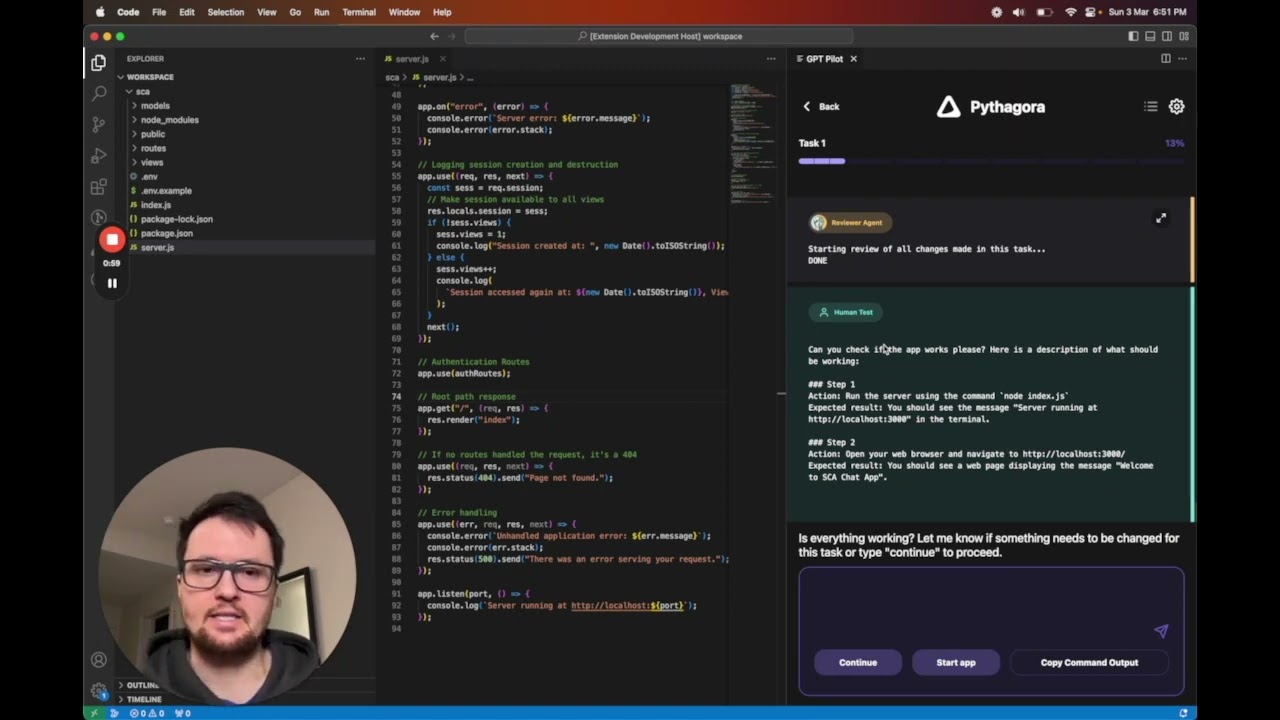

+### GPT Pilot doesn't just generate code, it builds apps!

+

+

+

+---

+

+

+[Watch the GPT Pilot demo video](https://youtu.be/4g-1cPGK0GA)

+

+(click to open the video on YouTube) (1:40 min)

+

+

+

+---

+

+

+

+

+

+

+

+GPT Pilot is the core technology for the [Pythagora VS Code extension](https://bit.ly/3IeZxp6) that aims to provide **the first real AI developer companion**. Not just an autocomplete or a helper for PR messages but rather a real AI developer that can write full features, debug them, talk to you about issues, ask for review, etc.

+

+---

+

+📫 If you would like to get updates on future releases or just get in touch, join our [Discord server](https://discord.gg/HaqXugmxr9) or you [can add your email here](http://eepurl.com/iD6Mpo). 📬

+

+---

+

+

+* [🔌 Requirements](#-requirements)

+* [🚦How to start using gpt-pilot?](#how-to-start-using-gpt-pilot)

+* [🔎 Examples](#-examples)

+* [🐳 How to start gpt-pilot in docker?](#-how-to-start-gpt-pilot-in-docker)

+* [🧑💻️ CLI arguments](#-cli-arguments)

+* [🏗 How GPT Pilot works?](#-how-gpt-pilot-works)

+* [🕴How's GPT Pilot different from _Smol developer_ and _GPT engineer_?](#hows-gpt-pilot-different-from-smol-developer-and-gpt-engineer)

+* [🍻 Contributing](#-contributing)

+* [🔗 Connect with us](#-connect-with-us)

+* [🌟 Star history](#-star-history)

+

+

+---

+

+GPT Pilot aims to research how much LLMs can be utilized to generate fully working, production-ready apps while the developer oversees the implementation.

+

+**The main idea is that AI can write most of the code for an app (maybe 95%), but for the remaining 5%, a developer is and will be needed until we get full AGI**.

+

+If you are interested in our learnings during this project, you can check [our latest blog posts](https://blog.pythagora.ai/2024/02/19/gpt-pilot-what-did-we-learn-in-6-months-of-working-on-a-codegen-pair-programmer/).

+

+---

+

+

+

+

+

+### **[👉 Examples of apps written by GPT Pilot 👈](https://github.com/Pythagora-io/gpt-pilot/wiki/Apps-created-with-GPT-Pilot)**

+

+

+

+

+---

+

+# 🔌 Requirements

+

+- **Python 3.9+**

+

+# 🚦How to start using gpt-pilot?

+👉 If you are using VS Code as your IDE, the easiest way to start is by downloading [GPT Pilot VS Code extension](https://bit.ly/3IeZxp6). 👈

+

+Otherwise, you can use the CLI tool.

+

+### If you're new to GPT Pilot:

+

+After you have Python and (optionally) PostgreSQL installed, follow these steps:

+

+1. `git clone https://github.com/Pythagora-io/gpt-pilot.git` (clone the repo)

+2. `cd gpt-pilot` (go to the repo folder)

+3. `python -m venv venv` (create a virtual environment)

+4. `source venv/bin/activate` (or on Windows `venv\Scripts\activate`) (activate the virtual environment)

+5. `pip install -r requirements.txt` (install the dependencies)

+6. `cp example-config.json config.json` (create `config.json` file)

+7. Set your key and other settings in `config.json` file:

+ - LLM provider (`openai`, `anthropic` or `groq`), key and endpoints (leave `null` for defaults); note that Azure and OpenRouter are supported via the `openai` setting

+ - Your API key (if `null`, it will be read from environment variables)

+ - Database settings: SQLite is used by default; PostgreSQL should also work

+ - Optionally, update `fs.ignore_paths` with files or folders in the workspace that GPT Pilot shouldn't track (useful for ignoring folders created by compilers)

+8. `python main.py` (start GPT Pilot)

+

+All generated code is stored in the `workspace` folder, inside a subfolder named after the app name you enter when starting GPT Pilot.

+

+### If you're upgrading from GPT Pilot v0.1

+

+Assuming you already have the git repository with an earlier version:

+

+1. `git pull` (update the repo)

+2. `source pilot-env/bin/activate` (or on Windows `pilot-env\Scripts\activate`) (activate the virtual environment)

+3. `pip install -r requirements.txt` (install the new dependencies)

+4. `python main.py --import-v0 pilot/gpt-pilot` (this should import your settings and existing projects)

+

+This will create a new database `pythagora.db` and import all apps from the old database. For each app,

+it will import the start of the latest task you were working on.

+

+To verify that the import was successful, you can run `python main.py --list` to see all the apps you have created,

+and inspect `config.json` to confirm that the settings were correctly converted to the new config file format (and make

+any adjustments if needed).

+

+# 🔎 [Examples](https://github.com/Pythagora-io/gpt-pilot/wiki/Apps-created-with-GPT-Pilot)

+

+[Click here](https://github.com/Pythagora-io/gpt-pilot/wiki/Apps-created-with-GPT-Pilot) to see all example apps created with GPT Pilot.

+

+## 🐳 How to start gpt-pilot in docker?

+1. `git clone https://github.com/Pythagora-io/gpt-pilot.git` (clone the repo)

+2. Update the environment variables in `docker-compose.yml` (you can inspect the resolved configuration with `docker compose config`). If you wish to use a local model, please go to [https://localai.io/basics/getting_started/](https://localai.io/basics/getting_started/).

+3. By default, GPT Pilot will read and write to `~/gpt-pilot-workspace` on your machine; you can change this in `docker-compose.yml`.

+4. Run `docker compose build` to build the gpt-pilot image.

+5. Run `docker compose up`.

+6. Access the web terminal on port `7681`.

+7. `python main.py` (start GPT Pilot)

+

+This starts two containers: one from a new image built from the `Dockerfile`, and a Postgres database. The new image also has [ttyd](https://github.com/tsl0922/ttyd) installed so that you can easily interact with gpt-pilot. Node is also installed in the image, and port 3000 is exposed.

+

+

+# 🧑💻️ CLI arguments

+

+### List created projects (apps)

+

+```bash

+python main.py --list

+```

+

+Note: for each project (app), this also lists "branches". Currently we only support having one branch (called "main"), and in the future we plan to add support for multiple project branches.

+

+### Load and continue from the latest step in a project (app)

+

+```bash

+python main.py --project <app_id>

+```

+

+### Load and continue from a specific step in a project (app)

+

+```bash

+python main.py --project <app_id> --step <step>

+```

+

+Warning: this will delete all progress after the specified step!

+

+### Delete project (app)

+

+```bash

+python main.py --delete <app_id>

+```

+

+Delete project with the specified `app_id`. Warning: this cannot be undone!

+

+### Import projects from v0.1

+

+```bash

+python main.py --import-v0 <path>

+```

+

+This imports projects from the old GPT Pilot v0.1 database; the path argument should point to that database. For each project, it imports the start of the latest task you were working on. If a project was already imported, it is skipped (the existing project in the database is not overwritten).

+

+### Other command-line options

+

+There are several other command-line options, mostly for calling GPT Pilot from our VS Code extension. To see all the available options, use the `--help` flag:

+

+```bash

+python main.py --help

+```

+

+# 🏗 How GPT Pilot works?

+Here are the steps GPT Pilot takes to create an app:

+

+1. You enter the app name and the description.

+2. **Product Owner agent**, like in real life, does nothing. :)

+3. **Specification Writer agent** asks a couple of questions to better understand the requirements if the project description is not detailed enough.

+4. **Architect agent** writes up the technologies that will be used for the app, checks whether they are installed on the machine, and asks you to install any that are missing.

+5. **Tech Lead agent** writes up development tasks that the Developer must implement.

+6. **Developer agent** takes each task and writes up what needs to be done to implement it. The description is in human-readable form.

+7. **Code Monkey agent** takes the Developer's description and the existing file and implements the changes.

+8. **Reviewer agent** reviews every step of the task and, if something is done wrong, sends it back to Code Monkey.

+9. **Troubleshooter agent** helps you give good feedback to GPT Pilot when something is wrong.

+10. **Debugger agent**: we hate to see him, but he is your best friend when things go south.

+11. **Technical Writer agent** writes documentation for the project.

+

+

+

+# 🕴How's GPT Pilot different from _Smol developer_ and _GPT engineer_?

+

+- **GPT Pilot works with the developer to create a fully working production-ready app** - I don't think AI can (at least in the near future) create apps without a developer being involved. So, **GPT Pilot codes the app step by step** just like a developer would in real life. This way, it can debug issues as they arise throughout the development process. If it gets stuck, you, the developer in charge, can review the code and fix the issue. Other similar tools give you the entire codebase at once - this way, bugs are much harder to fix for AI and for you as a developer.

+

+- **Works at scale** - GPT Pilot isn't meant only for simple apps; it is designed to work at any scale. It filters the code so that each LLM conversation doesn't need the entire codebase in context; instead, the LLM sees only the code relevant to the current task. Once an app is finished, you can continue working on it by writing instructions for the features you want to add.

+

+# 🍻 Contributing

+If you are interested in contributing to GPT Pilot, join [our Discord server](https://discord.gg/HaqXugmxr9), check out open [GitHub issues](https://github.com/Pythagora-io/gpt-pilot/issues), and see if anything interests you. We would be happy to get help in resolving any of those. The best place to start is by reading the blog posts mentioned above to understand how the architecture works before diving into the codebase.

+

+## 🖥 Development

+Other than the research, GPT Pilot needs to be debugged to work in different scenarios. For example, we realized that the quality of the generated code is very sensitive to the size of the development task. When the task is too broad, the code has too many bugs that are hard to fix, but when the task is too narrow, GPT also seems to struggle to integrate it into the existing code.

+

+## 📊 Telemetry

+To improve GPT Pilot, we are tracking some events from which you can opt out at any time. You can read more about it [here](./docs/TELEMETRY.md).

+

+# 🔗 Connect with us

+🌟 As an open-source tool, it would mean the world to us if you starred the GPT Pilot repo 🌟

+

+💬 Join [the Discord server](https://discord.gg/HaqXugmxr9) to get in touch.
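Step 7 of the README's setup instructions says that a `null` API key in `config.json` is read from environment variables instead. The sketch below shows that fallback. It assumes a hypothetical config shape of `{"llm": {"<provider>": {"api_key": ...}}}` and a hypothetical variable name `<PROVIDER>_API_KEY` (e.g. `OPENAI_API_KEY`). The actual keys and variable names are defined by `example-config.json` and the project's config code.

```python
import json
import os
from collections.abc import Mapping
from typing import Optional


def resolve_api_key(config_text: str, provider: str, env: Optional[Mapping[str, str]] = None) -> Optional[str]:
    """Return the provider's API key from config.json text, falling back to the environment.

    Hypothetical layout: {"llm": {"<provider>": {"api_key": "..." or null}}}
    Hypothetical variable name: "<PROVIDER>_API_KEY", e.g. OPENAI_API_KEY.
    """
    env = os.environ if env is None else env
    config = json.loads(config_text)
    key = config.get("llm", {}).get(provider, {}).get("api_key")
    if key is None:  # JSON null (or a missing entry) -> fall back to the environment
        key = env.get(f"{provider.upper()}_API_KEY")
    return key


config_text = '{"llm": {"openai": {"api_key": null, "base_url": null}}}'
print(resolve_api_key(config_text, "openai", env={"OPENAI_API_KEY": "sk-from-env"}))
print(resolve_api_key('{"llm": {"openai": {"api_key": "sk-from-file"}}}', "openai", env={}))
```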

diff --git a/core/agents/__init__.py b/core/agents/__init__.py

new file mode 100644

index 00000000..e69de29b

diff --git a/core/agents/architect.py b/core/agents/architect.py

new file mode 100644

index 00000000..646f02cf

--- /dev/null

+++ b/core/agents/architect.py

@@ -0,0 +1,146 @@

+from typing import Optional

+

+from pydantic import BaseModel, Field

+

+from core.agents.base import BaseAgent

+from core.agents.convo import AgentConvo

+from core.agents.response import AgentResponse

+from core.llm.parser import JSONParser

+from core.telemetry import telemetry

+from core.templates.registry import PROJECT_TEMPLATES, ProjectTemplateEnum

+from core.ui.base import ProjectStage

+

+ARCHITECTURE_STEP = "architecture"

+WARN_SYSTEM_DEPS = ["docker", "kubernetes", "microservices"]

+WARN_FRAMEWORKS = ["next.js", "vue", "vue.js", "svelte", "angular"]

+WARN_FRAMEWORKS_URL = "https://github.com/Pythagora-io/gpt-pilot/wiki/Using-GPT-Pilot-with-frontend-frameworks"

+

+

+# FIXME: all the response pydantic models should be strict (see config._StrictModel), also check if we

+# can disallow adding custom Python attributes to the model

+class SystemDependency(BaseModel):

+ name: str = Field(

+ None,

+ description="Name of the system dependency, for example Node.js or Python.",

+ )

+ description: str = Field(

+ None,

+ description="One-line description of the dependency.",

+ )

+ test: str = Field(

+ None,

+ description="Command line to test whether the dependency is available on the system.",

+ )

+ required_locally: bool = Field(

+ None,

+ description="Whether this dependency must be installed locally (as opposed to connecting to cloud or other server)",

+ )

+

+

+class PackageDependency(BaseModel):

+ name: str = Field(

+ None,

+ description="Name of the package dependency, for example Express or React.",

+ )

+ description: str = Field(

+ None,

+ description="One-line description of the dependency.",

+ )

+

+

+class Architecture(BaseModel):

+ architecture: str = Field(

+ None,

+ description="General description of the app architecture.",

+ )

+ system_dependencies: list[SystemDependency] = Field(

+ None,

+ description="List of system dependencies required to build and run the app.",

+ )

+ package_dependencies: list[PackageDependency] = Field(

+ None,

+ description="List of framework/language-specific packages used by the app.",

+ )

+ template: Optional[ProjectTemplateEnum] = Field(

+ None,

+ description="Project template to use for the app, if any (optional, can be null).",

+ )

+

+

+class Architect(BaseAgent):

+ agent_type = "architect"

+ display_name = "Architect"

+

+ async def run(self) -> AgentResponse:

+ await self.ui.send_project_stage(ProjectStage.ARCHITECTURE)

+

+ llm = self.get_llm()

+ convo = AgentConvo(self).template("technologies", templates=PROJECT_TEMPLATES).require_schema(Architecture)

+

+ await self.send_message("Planning project architecture ...")

+ arch: Architecture = await llm(convo, parser=JSONParser(Architecture))

+

+ await self.check_compatibility(arch)

+ await self.check_system_dependencies(arch.system_dependencies)

+

+ spec = self.current_state.specification.clone()

+ spec.architecture = arch.architecture

+ spec.system_dependencies = [d.model_dump() for d in arch.system_dependencies]

+ spec.package_dependencies = [d.model_dump() for d in arch.package_dependencies]

+ spec.template = arch.template.value if arch.template else None

+

+ self.next_state.specification = spec

+ telemetry.set(

+ "architecture",

+ {

+ "description": spec.architecture,

+ "system_dependencies": spec.system_dependencies,

+ "package_dependencies": spec.package_dependencies,

+ },

+ )

+ telemetry.set("template", spec.template)

+ return AgentResponse.done(self)

+

+ async def check_compatibility(self, arch: Architecture) -> bool:

+ warn_system_deps = [dep.name for dep in arch.system_dependencies if dep.name.lower() in WARN_SYSTEM_DEPS]

+ warn_package_deps = [dep.name for dep in arch.package_dependencies if dep.name.lower() in WARN_FRAMEWORKS]

+

+ if warn_system_deps:

+ await self.ask_question(

+ f"Warning: GPT Pilot doesn't officially support {', '.join(warn_system_deps)}. "

+ f"You can try to use {'it' if len(warn_system_deps) == 1 else 'them'}, but you may run into problems.",

+ buttons={"continue": "Continue"},

+ buttons_only=True,

+ default="continue",

+ )

+

+ if warn_package_deps:

+ await self.ask_question(

+ "Warning: GPT Pilot works best with vanilla JavaScript. "

+ f"You can try to use {', '.join(warn_package_deps)}, but you may run into problems. "

+ f"Visit {WARN_FRAMEWORKS_URL} for more information.",

+ buttons={"continue": "Continue"},

+ buttons_only=True,

+ default="continue",

+ )

+

+ # TODO: add "cancel" option to the above buttons; if pressed, Architect should

+ # return AgentResponse.revise_spec()

+ # that SpecWriter should catch and allow the user to reword the initial spec.

+ return True

+

+ async def check_system_dependencies(self, deps: list[SystemDependency]):

+ """

+ Check whether the required system dependencies are installed.

+ """

+

+ for dep in deps:

+ status_code, _, _ = await self.process_manager.run_command(dep.test)

+ if status_code != 0:

+ if dep.required_locally:

+ remedy = "Please install it before proceeding with your app."

+ else:

+ remedy = "If you would like to use it locally, please install it before proceeding."

+ await self.send_message(f"❌ {dep.name} is not available. {remedy}")

+ else:

+ await self.send_message(f"✅ {dep.name} is available.")
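`check_compatibility()` compares dependency names case-insensitively against the warning lists. `check_system_dependencies()` treats a non-zero exit status from a dependency's `test` command as "not available" and picks the remedy text based on `required_locally`. Below is a standalone sketch of both checks. It uses `subprocess.run()` in place of the project's `ProcessManager.run_command()`, and the dependency entries are hypothetical.

```python
import subprocess
import sys

WARN_SYSTEM_DEPS = ["docker", "kubernetes", "microservices"]  # copied from architect.py


def unsupported(names: list[str], warn_list: list[str]) -> list[str]:
    """Return the names that appear, case-insensitively, in the warning list."""
    return [name for name in names if name.lower() in warn_list]


def check_dependency(dep: dict) -> str:
    """Run the dependency's test command line; exit status 0 means it is available."""
    # shell=True because `test` is a full command line string; output is captured so
    # "command not found" noise doesn't reach the terminal
    returncode = subprocess.run(dep["test"], shell=True, capture_output=True).returncode
    if returncode == 0:
        return f"✅ {dep['name']} is available."
    if dep["required_locally"]:
        remedy = "Please install it before proceeding with your app."
    else:
        remedy = "If you would like to use it locally, please install it before proceeding."
    return f"❌ {dep['name']} is not available. {remedy}"


# Hypothetical dependencies; the second test command is deliberately bogus
deps = [
    {"name": "Python", "test": f'"{sys.executable}" --version', "required_locally": True},
    {"name": "Docker", "test": "no-such-command-for-this-demo --version", "required_locally": False},
]
for dep in deps:
    print(check_dependency(dep))
print(unsupported([dep["name"] for dep in deps], WARN_SYSTEM_DEPS))  # ['Docker']
```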

diff --git a/core/agents/base.py b/core/agents/base.py

new file mode 100644

index 00000000..3959cf87

--- /dev/null

+++ b/core/agents/base.py

@@ -0,0 +1,174 @@

+from typing import Any, Callable, Optional

+

+from core.agents.response import AgentResponse

+from core.config import get_config

+from core.db.models import ProjectState

+from core.llm.base import BaseLLMClient, LLMError

+from core.log import get_logger

+from core.proc.process_manager import ProcessManager

+from core.state.state_manager import StateManager

+from core.ui.base import AgentSource, UIBase, UserInput

+

+log = get_logger(__name__)

+

+

+class BaseAgent:

+ """

+ Base class for agents.

+ """

+

+ agent_type: str

+ display_name: str

+

+ def __init__(

+ self,

+ state_manager: StateManager,

+ ui: UIBase,

+ *,

+ step: Optional[Any] = None,

+ prev_response: Optional["AgentResponse"] = None,

+ process_manager: Optional["ProcessManager"] = None,

+ ):

+ """

+ Create a new agent.

+ """

+ self.ui_source = AgentSource(self.display_name, self.agent_type)

+ self.ui = ui

+ self.stream_output = True

+ self.state_manager = state_manager

+ self.process_manager = process_manager

+ self.prev_response = prev_response

+ self.step = step

+

+ @property

+ def current_state(self) -> ProjectState:

+ """Current state of the project (read-only)."""

+ return self.state_manager.current_state

+

+ @property

+ def next_state(self) -> ProjectState:

+ """Next state of the project (write-only)."""

+ return self.state_manager.next_state

+

+ async def send_message(self, message: str):

+ """

+ Send a message to the user.

+

+ Convenience method, uses `UIBase.send_message()` to send the message,

+ setting the correct source.

+

+ :param message: Message to send.

+ """

+ await self.ui.send_message(message + "\n", source=self.ui_source)

+

+ async def ask_question(

+ self,

+ question: str,

+ *,

+ buttons: Optional[dict[str, str]] = None,

+ default: Optional[str] = None,

+ buttons_only: bool = False,

+ initial_text: Optional[str] = None,

+ allow_empty: bool = False,

+ hint: Optional[str] = None,

+ ) -> UserInput:

+ """

+ Ask a question to the user and return the response.

+

+ Convenience method, uses `UIBase.ask_question()` to

+ ask the question, setting the correct source and

+ logging the question/response.

+

+ :param question: Question to ask.

+ :param buttons: Buttons to display with the question.

+ :param default: Default button to select.

+ :param buttons_only: Only display buttons, no text input.

+ :param allow_empty: Allow empty input.

+ :param hint: Text to display in a popup as a hint to the question.

+ :param initial_text: Initial text input.

+ :return: User response.

+ """

+ response = await self.ui.ask_question(

+ question,

+ buttons=buttons,

+ default=default,

+ buttons_only=buttons_only,

+ allow_empty=allow_empty,

+ hint=hint,

+ initial_text=initial_text,

+ source=self.ui_source,

+ )

+ await self.state_manager.log_user_input(question, response)

+ return response

+

+ async def stream_handler(self, content: str):

+ """

+ Handle streamed response from the LLM.

+

+ Serves as a callback to the LLM client created by `BaseAgent.get_llm()` so it can stream the responses to the UI.

+ This can be turned on/off on a per-request basis by setting `BaseAgent.stream_output`

+ to True or False.

+

+ :param content: Response content.

+ """

+ if self.stream_output:

+ await self.ui.send_stream_chunk(content, source=self.ui_source)

+

+ if content is None:

+ await self.ui.send_message("")

+

+ async def error_handler(self, error: LLMError, message: Optional[str] = None):

+ """

+ Handle error responses from the LLM.

+

+ :param error: The error that was raised by the LLM client.

+ :param message: Optional message to show.

+ """

+

+ if error == LLMError.KEY_EXPIRED:

+ await self.ui.send_key_expired(message)

+ elif error == LLMError.RATE_LIMITED:

+ await self.stream_handler(message)

+

+ def get_llm(self, name=None) -> Callable:

+ """

+ Get a new instance of the agent-specific LLM client.

+

+ The client initializes the UI stream handler and stores the

+ request/response to the current state's log. The agent name

+ can be overridden in case the agent needs to use a different

+ model configuration.

+

+ :param name: Name of the agent for configuration (default: class name).

+ :return: LLM client for the agent.

+ """

+

+ if name is None:

+ name = self.__class__.__name__

+

+ config = get_config()

+

+ llm_config = config.llm_for_agent(name)

+ client_class = BaseLLMClient.for_provider(llm_config.provider)

+ llm_client = client_class(llm_config, stream_handler=self.stream_handler, error_handler=self.error_handler)

+

+ async def client(convo, **kwargs) -> Any:

+ """

+ Agent-specific LLM client.

+

+ For details on optional arguments to pass to the LLM client,

+ see `core.llm.openai_client.OpenAIClient()`.

+ """

+ response, request_log = await llm_client(convo, **kwargs)

+ await self.state_manager.log_llm_request(request_log, agent=self)

+ return response

+

+ return client

+

+ async def run(self) -> AgentResponse:

+ """

+ Run the agent.

+

+ :return: Response from the agent.

+ """

+ raise NotImplementedError()
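`get_llm()` wraps the provider client in an `async` closure. The closure awaits the client, which returns a `(response, request_log)` pair. It then stores the log via `StateManager.log_llm_request()` and returns only the response to the agent. Below is a self-contained asyncio sketch of that pattern. `FakeLLMClient` and `RequestLogger` are hypothetical stand-ins, and the conversation is simplified to a list of strings.

```python
import asyncio
from typing import Any, Awaitable, Callable


class FakeLLMClient:
    """Hypothetical provider client: returns a (response, request_log) pair."""

    async def __call__(self, convo: list[str], **kwargs: Any) -> tuple[str, dict]:
        response = f"echo: {convo[-1]}"
        return response, {"messages": convo, "response": response, **kwargs}


class RequestLogger:
    """Hypothetical stand-in for StateManager.log_llm_request()."""

    def __init__(self) -> None:
        self.logs: list[tuple[str, dict]] = []

    async def log_llm_request(self, request_log: dict, agent: str) -> None:
        self.logs.append((agent, request_log))


def get_llm(agent_name: str, llm_client: FakeLLMClient, logger: RequestLogger) -> Callable[..., Awaitable[str]]:
    """Build an agent-specific client that logs every request before returning the response."""

    async def client(convo: list[str], **kwargs: Any) -> str:
        response, request_log = await llm_client(convo, **kwargs)
        await logger.log_llm_request(request_log, agent=agent_name)
        return response  # the agent never sees the request log

    return client


async def main() -> None:
    logger = RequestLogger()
    llm = get_llm("Architect", FakeLLMClient(), logger)
    print(await llm(["Plan the architecture"], temperature=0))
    print(f"{len(logger.logs)} request(s) logged")


asyncio.run(main())
```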

diff --git a/core/agents/code_monkey.py b/core/agents/code_monkey.py

new file mode 100644

index 00000000..67aa77e8

--- /dev/null

+++ b/core/agents/code_monkey.py

@@ -0,0 +1,127 @@

+from pydantic import BaseModel, Field

+

+from core.agents.base import BaseAgent

+from core.agents.convo import AgentConvo

+from core.agents.response import AgentResponse, ResponseType

+from core.config import DESCRIBE_FILES_AGENT_NAME

+from core.llm.parser import JSONParser, OptionalCodeBlockParser

+from core.log import get_logger

+

+log = get_logger(__name__)

+

+

+class FileDescription(BaseModel):

+ summary: str = Field(

+ description="Detailed description summarizing what the file is about and which major classes, functions, elements or other functionality it implements."

+ )

+ references: list[str] = Field(

+ description="List of references the file imports or includes (only files local to the project), where each element specifies the project-relative path of the referenced file, including the file extension."

+ )

+

+

+class CodeMonkey(BaseAgent):

+ agent_type = "code-monkey"

+ display_name = "Code Monkey"

+

+ async def run(self) -> AgentResponse:

+ if self.prev_response and self.prev_response.type == ResponseType.DESCRIBE_FILES:

+ return await self.describe_files()

+ else:

+ return await self.implement_changes()

+

+ def _get_task_convo(self) -> AgentConvo:

+ # FIXME: Current prompts reuse task breakdown / iteration messages so we have to resort to this

+ task = self.current_state.current_task

+ current_task_index = self.current_state.tasks.index(task)

+

+ convo = AgentConvo(self).template(

+ "breakdown",

+ task=task,

+ iteration=None,

+ current_task_index=current_task_index,

+ )

+ # TODO: We currently show last iteration to the code monkey; we might need to show the task

+ # breakdown and all the iterations instead? To think about when refactoring prompts

+ if self.current_state.iterations:

+ convo.assistant(self.current_state.iterations[-1]["description"])

+ else:

+ convo.assistant(self.current_state.current_task["instructions"])

+ return convo

+

+ async def implement_changes(self) -> AgentResponse:

+ file_name = self.step["save_file"]["path"]

+

+ current_file = await self.state_manager.get_file_by_path(file_name)

+ file_content = current_file.content.content if current_file else ""

+

+ task = self.current_state.current_task

+

+ if self.prev_response and self.prev_response.type == ResponseType.CODE_REVIEW_FEEDBACK:

+ attempt = self.prev_response.data["attempt"] + 1

+ feedback = self.prev_response.data["feedback"]

+ log.debug(f"Fixing file {file_name} after review feedback: {feedback} ({attempt}. attempt)")

+ await self.send_message(f"Reworking changes I made to {file_name} ...")

+ else:

+ log.debug(f"Implementing file {file_name}")

+ await self.send_message(f"{'Updating existing' if file_content else 'Creating new'} file {file_name} ...")

+ attempt = 1

+ feedback = None

+

+ llm = self.get_llm()

+ convo = self._get_task_convo().template(

+ "implement_changes",

+ file_name=file_name,

+ file_content=file_content,

+ instructions=task["instructions"],

+ )

+ if feedback:

+ convo.assistant(f"```\n{self.prev_response.data['new_content']}\n```\n").template(

+ "review_feedback",

+ content=self.prev_response.data["approved_content"],

+ original_content=file_content,

+ rework_feedback=feedback,

+ )

+

+ response: str = await llm(convo, temperature=0, parser=OptionalCodeBlockParser())

+ # FIXME: provide a counter here so that we don't have an endless loop here

+ return AgentResponse.code_review(self, file_name, task["instructions"], file_content, response, attempt)

+

+ async def describe_files(self) -> AgentResponse:

+ llm = self.get_llm(DESCRIBE_FILES_AGENT_NAME)

+ to_describe = {

+ file.path: file.content.content for file in self.current_state.files if not file.meta.get("description")

+ }

+

+ for file in self.next_state.files:

+ content = to_describe.get(file.path)

+ if content is None:

+ continue

+

+ if content == "":

+ file.meta = {

+ **file.meta,

+ "description": "Empty file",

+ "references": [],

+ }

+ continue

+

+ log.debug(f"Describing file {file.path}")

+ await self.send_message(f"Describing file {file.path} ...")

+

+ convo = (

+ AgentConvo(self)

+ .template(

+ "describe_file",

+ path=file.path,

+ content=content,

+ )

+ .require_schema(FileDescription)

+ )

+ llm_response: FileDescription = await llm(convo, parser=JSONParser(spec=FileDescription))

+

+ file.meta = {

+ **file.meta,

+ "description": llm_response.summary,

+ "references": llm_response.references,

+ }

+ return AgentResponse.done(self)
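The `describe_files` step above skips files that already carry a description and gives empty files a fixed "Empty file" description without calling the LLM. The sketch below isolates only that selection logic, under stated assumptions: `File` is a hypothetical stand-in for the project's file model, and the sketch works on a single list, whereas the agent builds `to_describe` from `current_state.files` and then updates `next_state.files`.

```python
from dataclasses import dataclass, field


@dataclass
class File:
    # Hypothetical stand-in for the project's file model: only the fields
    # describe_files() touches (path, text content, metadata dict).
    path: str
    content: str
    meta: dict = field(default_factory=dict)


def files_to_describe(files: list[File]) -> dict[str, str]:
    # Mirrors the dict comprehension in describe_files(): select files whose
    # metadata has no (or an empty) "description".
    return {f.path: f.content for f in files if not f.meta.get("description")}


def describe_empty_files(files: list[File]) -> list[str]:
    """Describe empty files locally; return the paths that still need the LLM."""
    pending = []
    to_describe = files_to_describe(files)
    for f in files:
        content = to_describe.get(f.path)
        if content is None:
            continue  # already described, nothing to do
        if content == "":
            # Empty files get a fixed description without an LLM round-trip.
            f.meta = {**f.meta, "description": "Empty file", "references": []}
            continue
        pending.append(f.path)
    return pending


files = [
    File("README.md", "# Demo", {"description": "Project readme"}),
    File("app/__init__.py", ""),
    File("app/main.py", "print('hi')"),
]
print(describe_empty_files(files))  # ['app/main.py']
print(files[1].meta["description"])  # Empty file
```

Only `app/main.py` would be sent to the LLM here; the readme is skipped because it is already described, and the empty `__init__.py` is handled locally.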

diff --git a/core/agents/code_reviewer.py b/core/agents/code_reviewer.py

new file mode 100644

index 00000000..1d1a8457

--- /dev/null

+++ b/core/agents/code_reviewer.py

@@ -0,0 +1,328 @@

+import re

+from difflib import unified_diff

+from enum import Enum

+

+from pydantic import BaseModel, Field

+

+from core.agents.base import BaseAgent

+from core.agents.convo import AgentConvo

+from core.agents.response import AgentResponse

+from core.llm.parser import JSONParser

+from core.log import get_logger

+

+log = get_logger(__name__)

+

+

+# Constant for indicating missing new line at the end of a file in a unified diff

+NO_EOL = "\\ No newline at end of file"

+

+# Regular expression pattern for matching hunk headers

+PATCH_HEADER_PATTERN = re.compile(r"^@@ -(\d+),?(\d+)? \+(\d+),?(\d+)? @@")

+

+# Maximum number of times to re-ask the reviewer if the number of reviewed hunks doesn't match the diff

+MAX_REVIEW_RETRIES = 2

+

+# Maximum number of code implementation attempts after which we accept the changes unconditionally

+MAX_CODING_ATTEMPTS = 3

+

+

+class Decision(str, Enum):

+ APPLY = "apply"

+ IGNORE = "ignore"

+ REWORK = "rework"

+

+

+class Hunk(BaseModel):

+ number: int = Field(description="Index of the hunk in the diff. Starts from 1.")

+ reason: str = Field(description="Reason for applying or ignoring this hunk, or for asking for it to be reworked.")

+ decision: Decision = Field(description="Whether to apply this hunk, rework, or ignore it.")

+

+

+class ReviewChanges(BaseModel):

+ hunks: list[Hunk]

+ review_notes: str = Field(description="Additional review notes (optional, can be empty).")

+

+

+class CodeReviewer(BaseAgent):

+ agent_type = "code-reviewer"

+ display_name = "Code Reviewer"

+

+ async def run(self) -> AgentResponse:

+ if (

+ not self.prev_response.data["old_content"]

+ or self.prev_response.data["new_content"] == self.prev_response.data["old_content"]

+ or self.prev_response.data["attempt"] >= MAX_CODING_ATTEMPTS

+ ):

+ # we always auto-accept new files and unchanged files, or if we've tried too many times

+ return await self.accept_changes(self.prev_response.data["path"], self.prev_response.data["new_content"])

+

+ approved_content, feedback = await self.review_change(

+ self.prev_response.data["path"],

+ self.prev_response.data["instructions"],

+ self.prev_response.data["old_content"],

+ self.prev_response.data["new_content"],

+ )

+ if feedback:

+ return AgentResponse.code_review_feedback(

+ self,

+ new_content=self.prev_response.data["new_content"],

+ approved_content=approved_content,

+ feedback=feedback,

+ attempt=self.prev_response.data["attempt"],

+ )

+ else:

+ return await self.accept_changes(self.prev_response.data["path"], approved_content)

+

+ async def accept_changes(self, path: str, content: str) -> AgentResponse:

+ await self.state_manager.save_file(path, content)

+ self.next_state.complete_step()

+

+ input_required = self.state_manager.get_input_required(content)

+ if input_required:

+ return AgentResponse.input_required(

+ self,

+ [{"file": path, "line": line} for line in input_required],

+ )

+ else:

+ return AgentResponse.done(self)

+

+ def _get_task_convo(self) -> AgentConvo:

+ # FIXME: Current prompts reuse conversation from the developer so we have to resort to this

+ task = self.current_state.current_task

+ current_task_index = self.current_state.tasks.index(task)

+

+ convo = AgentConvo(self).template(

+ "breakdown",

+ task=task,

+ iteration=None,

+ current_task_index=current_task_index,

+ )

+ # TODO: We currently show the last iteration to the code monkey; we might need to show the task

+ # breakdown and all the iterations instead? To think about when refactoring prompts

+ if self.current_state.iterations:

+ convo.assistant(self.current_state.iterations[-1]["description"])

+ else:

+ convo.assistant(self.current_state.current_task["instructions"])

+ return convo

+

+ async def review_change(

+ self, file_name: str, instructions: str, old_content: str, new_content: str

+ ) -> tuple[str, str]:

+ """

+ Review changes that were applied to the file.

+

+ This asks the LLM to act as a PR reviewer and for each part (hunk) of the

+ diff, decide whether it should be applied (kept), ignored (removed from the PR), or reworked.

+

+ :param file_name: name of the file being modified

+ :param instructions: instructions for the reviewer

+ :param old_content: old file content

+ :param new_content: new file content (with proposed changes)

+ :return: tuple of the file content updated with the approved changes, and the review feedback (None if no rework is requested)

+

+ Diff hunk explanation: https://www.gnu.org/software/diffutils/manual/html_node/Hunks.html

+ """

+

+ hunks = self.get_diff_hunks(file_name, old_content, new_content)

+

+ llm = self.get_llm()

+ convo = (

+ self._get_task_convo()

+ .template(

+ "review_changes",

+ instructions=instructions,

+ file_name=file_name,

+ old_content=old_content,

+ hunks=hunks,

+ )

+ .require_schema(ReviewChanges)

+ )

+ llm_response: ReviewChanges = await llm(convo, temperature=0, parser=JSONParser(ReviewChanges))

+

+ for i in range(MAX_REVIEW_RETRIES):

+ reasons = {}

+ ids_to_apply = set()

+ ids_to_ignore = set()

+ ids_to_rework = set()

+ for hunk in llm_response.hunks:

+ reasons[hunk.number - 1] = hunk.reason

+ if hunk.decision == "apply":

+ ids_to_apply.add(hunk.number - 1)

+ elif hunk.decision == "ignore":

+ ids_to_ignore.add(hunk.number - 1)

+ elif hunk.decision == "rework":

+ ids_to_rework.add(hunk.number - 1)

+

+ n_hunks = len(hunks)

+ n_review_hunks = len(reasons)

+ if n_review_hunks == n_hunks:

+ break

+ elif n_review_hunks < n_hunks:

+ error = "Not all hunks have been reviewed. Please review all hunks and add 'apply', 'ignore' or 'rework' decision for each."

+ elif n_review_hunks > n_hunks:

+ error = f"Your review contains more hunks ({n_review_hunks}) than in the original diff ({n_hunks}). Note that one hunk may have multiple changed lines."

+

+ # Max two retries; if the reviewer still hasn't reviewed all hunks, we'll just use the entire new content

+ convo.assistant(llm_response.model_dump_json()).user(error)

+ llm_response = await llm(convo, parser=JSONParser(ReviewChanges))

+ else:

+ return new_content, None

+

+ hunks_to_apply = [h for i, h in enumerate(hunks) if i in ids_to_apply]

+ diff_log = f"--- {file_name}\n+++ {file_name}\n" + "\n".join(hunks_to_apply)

+

+ hunks_to_rework = [(i, h) for i, h in enumerate(hunks) if i in ids_to_rework]

+ review_log = (

+ "\n\n".join([f"## Change\n```\n{hunk}\n```\nReviewer feedback:\n{reasons[i]}" for (i, hunk) in hunks_to_rework])

+ + "\n\nReview notes:\n"

+ + llm_response.review_notes

+ )

+

+ if len(hunks_to_apply) == len(hunks):

+ await self.send_message("Applying entire change")

+ log.info(f"Applying entire change to {file_name}")

+ return new_content, None

+

+ elif len(hunks_to_apply) == 0:

+ if hunks_to_rework:

+ await self.send_message(

+ f"Requesting rework for {len(hunks_to_rework)} changes with reason: {llm_response.review_notes}"

+ )

+ log.info(f"Requesting rework for {len(hunks_to_rework)} changes to {file_name} (0 hunks to apply)")

+ return old_content, review_log

+ else:

+ # If everything can be safely ignored, it's probably because the files already implement the changes

+ # from previous tasks (which can happen often). Insisting on a change here is likely to cause problems.

+ await self.send_message(f"Rejecting entire change with reason: {llm_response.review_notes}")

+ log.info(f"Rejecting entire change to {file_name} with reason: {llm_response.review_notes}")

+ return old_content, None

+

+ print("Applying code change:\n" + diff_log)

+ log.info(f"Applying code change to {file_name}:\n{diff_log}")

+ new_content = self.apply_diff(file_name, old_content, hunks_to_apply, new_content)

+ if hunks_to_rework:

+ print(f"Requesting rework for {len(hunks_to_rework)} changes with reason: {llm_response.review_notes}")

+ log.info(f"Requesting further rework for {len(hunks_to_rework)} changes to {file_name}")

+ return new_content, review_log

+ else:

+ return new_content, None

+

+ @staticmethod

+ def get_diff_hunks(file_name: str, old_content: str, new_content: str) -> list[str]:

+ """

+ Get the diff between two files.

+

+ This uses Python difflib to produce a unified diff, then splits

+ it into hunks that will be separately reviewed by the reviewer.

+

+ :param file_name: name of the file being modified

+ :param old_content: old file content

+ :param new_content: new file content

+ :return: change hunks from the unified diff

+ """

+ from_name = "old_" + file_name

+ to_name = "to_" + file_name

+ from_lines = old_content.splitlines(keepends=True)

+ to_lines = new_content.splitlines(keepends=True)

+ diff_gen = unified_diff(from_lines, to_lines, fromfile=from_name, tofile=to_name)

+ diff_txt = "".join(diff_gen)

+

+ # Split on every hunk boundary; re.split's third positional argument is maxsplit, not flags

+ hunks = re.split(r"\n@@", diff_txt)

+ result = []

+ for i, h in enumerate(hunks):

+ # Skip the prologue (file names)

+ if i == 0:

+ continue

+ txt = h.splitlines()

+ txt[0] = "@@" + txt[0]

+ result.append("\n".join(txt))

+ return result

+

+ def apply_diff(self, file_name: str, old_content: str, hunks: list[str], fallback: str):

+ """

+ Apply the diff to the original file content.

+

+ This uses the internal `_apply_patch` method to apply the

+ approved diff hunks to the original file content.

+

+ If patch apply fails, the fallback is the full new file content

+ with all the changes applied (as if the reviewer approved everything).

+

+ :param file_name: name of the file being modified

+ :param old_content: old file content

+ :param hunks: change hunks from the unified diff

+ :param fallback: proposed new file content (with all the changes applied)

+ """

+ diff = (

+ "\n".join(

+ [

+ f"--- {file_name}",

+ f"+++ {file_name}",

+ ]

+ + hunks

+ )

+ + "\n"

+ )

+ try:

+ fixed_content = self._apply_patch(old_content, diff)

+ except Exception as e:

+ # This should never happen but if it does, just use the new version from

+ # the LLM and hope for the best

+ log.warning(f"Error applying diff: {e}; hoping all changes are valid")

+ return fallback

+

+ return fixed_content

+

+ # Adapted from https://gist.github.com/noporpoise/16e731849eb1231e86d78f9dfeca3abc (Public Domain)

+ @staticmethod

+ def _apply_patch(original: str, patch: str, revert: bool = False):

+ """

+ Apply a patch to a string to recover a newer version of the string.

+

+ :param original: The original string.

+ :param patch: The patch to apply.

+ :param revert: If True, treat the original string as the newer version and recover the older string.

+ :return: The updated string after applying the patch.

+ """

+ original_lines = original.splitlines(True)

+ patch_lines = patch.splitlines(True)

+

+ updated_text = ""

+ index_original = start_line = 0

+

+ # Choose which group of the regex to use based on the revert flag

+ match_index, line_sign = (1, "+") if not revert else (3, "-")

+

+ # Skip header lines of the patch

+ while index_original < len(patch_lines) and patch_lines[index_original].startswith(("---", "+++")):

+ index_original += 1

+

+ while index_original < len(patch_lines):

+ match = PATCH_HEADER_PATTERN.match(patch_lines[index_original])

+ if not match:

+ raise Exception("Bad patch -- regex mismatch [line " + str(index_original) + "]")

+

+ line_number = int(match.group(match_index)) - 1 + (match.group(match_index + 1) == "0")

+

+ if start_line > line_number or line_number > len(original_lines):

+ raise Exception("Bad patch -- bad line number [line " + str(index_original) + "]")

+

+ updated_text += "".join(original_lines[start_line:line_number])

+ start_line = line_number

+ index_original += 1

+

+ while index_original < len(patch_lines) and patch_lines[index_original][0] != "@":

+ if index_original + 1 < len(patch_lines) and patch_lines[index_original + 1][0] == "\\":

+ line_content = patch_lines[index_original][:-1]

+ index_original += 2

+ else:

+ line_content = patch_lines[index_original]

+ index_original += 1

+

+ if line_content:

+ if line_content[0] == line_sign or line_content[0] == " ":

+ updated_text += line_content[1:]

+ start_line += line_content[0] != line_sign

+

+ updated_text += "".join(original_lines[start_line:])

+ return updated_text
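`get_diff_hunks` splits difflib's unified diff on `\n@@` to obtain one string per hunk. The sketch below reproduces that splitting on a synthetic 100-line file (the inputs and helper names are illustrative, not taken from the project) and shows why flags must not be passed positionally to `re.split`: its third positional parameter is `maxsplit`, and `re.MULTILINE` equals 8, so at most eight splits happen. It also reads each hunk's old-file start line with the same pattern as `PATCH_HEADER_PATTERN`.

```python
import re
from difflib import unified_diff

# Same pattern as PATCH_HEADER_PATTERN in code_reviewer.py.
HUNK_HEADER = re.compile(r"^@@ -(\d+),?(\d+)? \+(\d+),?(\d+)? @@")


def unified_diff_text(old: str, new: str) -> str:
    lines = unified_diff(
        old.splitlines(keepends=True), new.splitlines(keepends=True), fromfile="old", tofile="new"
    )
    return "".join(lines)


def split_hunks(diff_txt: str) -> list[str]:
    # Split on every hunk boundary: no maxsplit, and no flags needed because
    # the pattern has no ^/$ anchors.
    parts = re.split(r"\n@@", diff_txt)
    # parts[0] is the "--- old" / "+++ new" prologue; restore the "@@" consumed
    # by the split and drop the trailing newline, as get_diff_hunks() does.
    return ["\n".join(("@@" + p).splitlines()) for p in parts[1:]]


old = "".join(f"line {i}\n" for i in range(100))
# Change every 10th line; the 9 unchanged lines between edits exceed 2 * 3
# lines of context, so difflib emits one hunk per edit.
new = "".join(f"LINE {i}\n" if i % 10 == 0 else f"line {i}\n" for i in range(100))

diff_txt = unified_diff_text(old, new)
hunks = split_hunks(diff_txt)
print(len(hunks))  # 10

# What the positional re.MULTILINE amounts to: maxsplit=8, so the last three
# hunks stay glued together in the final element.
truncated = re.split(r"\n@@", diff_txt, maxsplit=int(re.MULTILINE))[1:]
print(len(truncated))  # 8

start_lines = [int(HUNK_HEADER.match(h).group(1)) for h in hunks]
print(start_lines[:3])  # [1, 8, 18]
```

With only eight entries, the reviewer would be shown fewer hunks than the diff actually contains, and its per-hunk decisions would no longer line up with the real change set.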

diff --git a/core/agents/convo.py b/core/agents/convo.py

new file mode 100644

index 00000000..ad389cf6

--- /dev/null

+++ b/core/agents/convo.py

@@ -0,0 +1,75 @@

+import json

+import sys

+from copy import deepcopy

+from typing import TYPE_CHECKING, Optional

+

+from pydantic import BaseModel

+

+from core.config import get_config

+from core.llm.convo import Convo

+from core.llm.prompt import JinjaFileTemplate

+from core.log import get_logger

+

+if TYPE_CHECKING:

+ from core.agents.base import BaseAgent

+

+log = get_logger(__name__)

+

+

+class AgentConvo(Convo):

+ prompt_loader: Optional[JinjaFileTemplate] = None

+

+ def __init__(self, agent: "BaseAgent"):

+ self.agent_instance = agent

+ super().__init__()

+ try:

+ system_message = self.render("system")

+ self.system(system_message)

+ except ValueError as err:

+ log.warning(f"Agent {agent.__class__.__name__} has no system prompt: {err}")

+

+ @classmethod

+ def _init_templates(cls):

+ if cls.prompt_loader is not None:

+ return

+

+ config = get_config()

+ cls.prompt_loader = JinjaFileTemplate(config.prompt.paths)

+

+ def _get_default_template_vars(self) -> dict:

+ if sys.platform == "win32":

+ os = "Windows"

+ elif sys.platform == "darwin":

+ os = "macOS"

+ else:

+ os = "Linux"

+

+ return {

+ "state": self.agent_instance.current_state,

+ "os": os,

+ }

+

+ def render(self, name: str, **kwargs) -> str:

+ self._init_templates()

+

+ kwargs.update(self._get_default_template_vars())

+

+ # Jinja uses "/" even in Windows

+ template_name = f"{self.agent_instance.agent_type}/{name}.prompt"

+ log.debug(f"Loading template {template_name}")

+ return self.prompt_loader(template_name, **kwargs)

+

+ def template(self, template_name: str, **kwargs) -> "AgentConvo":

+ message = self.render(template_name, **kwargs)

+ self.user(message)

+ return self

+

+ def fork(self) -> "AgentConvo":

+ child = AgentConvo(self.agent_instance)

+ child.messages = deepcopy(self.messages)

+ return child

+

+ def require_schema(self, model: type[BaseModel]) -> "AgentConvo":

+ schema_txt = json.dumps(model.model_json_schema())

+ self.user(f"IMPORTANT: Your response MUST conform to this JSON schema:\n```\n{schema_txt}\n```")

+ return self
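`AgentConvo` resolves prompt templates as `<agent_type>/<name>.prompt`, injects an `os` variable derived from `sys.platform`, and forks conversations with `deepcopy`. The sketch below mirrors those three pieces with a hypothetical `MiniConvo` class (not part of the project) so the fork semantics can be checked in isolation: editing a forked child's messages leaves the parent untouched.

```python
import sys
from copy import deepcopy


def platform_label(platform: str = sys.platform) -> str:
    # Same mapping as _get_default_template_vars(): anything other than
    # Windows or macOS is reported as "Linux".
    if platform == "win32":
        return "Windows"
    if platform == "darwin":
        return "macOS"
    return "Linux"


def template_name(agent_type: str, name: str) -> str:
    # Jinja template names use "/" even on Windows, so no os.path.join here.
    return f"{agent_type}/{name}.prompt"


class MiniConvo:
    """Hypothetical stand-in for AgentConvo's message list and fork()."""

    def __init__(self) -> None:
        self.messages: list[dict] = []

    def user(self, content: str) -> "MiniConvo":
        self.messages.append({"role": "user", "content": content})
        return self  # returning self allows chaining, like AgentConvo.template()

    def fork(self) -> "MiniConvo":
        child = MiniConvo()
        # deepcopy: the child gets its own message dicts, not shared references.
        child.messages = deepcopy(self.messages)
        return child


parent = MiniConvo().user("breakdown prompt")
child = parent.fork().user("parse_task prompt")
child.messages[0]["content"] = "edited in child"

print(len(parent.messages), len(child.messages))  # 1 2
print(parent.messages[0]["content"])  # breakdown prompt
print(template_name("code-reviewer", "review_changes"))  # code-reviewer/review_changes.prompt
```

A shallow `list(self.messages)` would copy the list but share the message dicts, so the edit in the child would leak back into the parent; `deepcopy` avoids that.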

diff --git a/core/agents/developer.py b/core/agents/developer.py

new file mode 100644

index 00000000..d1642739

--- /dev/null

+++ b/core/agents/developer.py

@@ -0,0 +1,294 @@

+from enum import Enum

+from typing import Annotated, Literal, Optional, Union

+from uuid import uuid4

+

+from pydantic import BaseModel, Field

+

+from core.agents.base import BaseAgent

+from core.agents.convo import AgentConvo

+from core.agents.response import AgentResponse, ResponseType

+from core.llm.parser import JSONParser

+from core.log import get_logger

+

+log = get_logger(__name__)

+

+

+class StepType(str, Enum):

+ COMMAND = "command"

+ SAVE_FILE = "save_file"

+ HUMAN_INTERVENTION = "human_intervention"

+

+

+class CommandOptions(BaseModel):

+ command: str = Field(description="Command to run")

+ timeout: int = Field(description="Timeout in seconds")

+ success_message: str = ""

+

+

+class SaveFileOptions(BaseModel):

+ path: str

+

+

+class SaveFileStep(BaseModel):

+ type: Literal[StepType.SAVE_FILE] = StepType.SAVE_FILE

+ save_file: SaveFileOptions

+

+

+class CommandStep(BaseModel):

+ type: Literal[StepType.COMMAND] = StepType.COMMAND

+ command: CommandOptions

+

+

+class HumanInterventionStep(BaseModel):

+ type: Literal[StepType.HUMAN_INTERVENTION] = StepType.HUMAN_INTERVENTION

+ human_intervention_description: str

+

+

+Step = Annotated[

+ Union[SaveFileStep, CommandStep, HumanInterventionStep],

+ Field(discriminator="type"),

+]

+

+

+class TaskSteps(BaseModel):

+ steps: list[Step]

+

+

+class Developer(BaseAgent):

+ agent_type = "developer"

+ display_name = "Developer"

+

+ async def run(self) -> AgentResponse:

+ if self.prev_response and self.prev_response.type == ResponseType.TASK_REVIEW_FEEDBACK:

+ return await self.breakdown_current_iteration(self.prev_response.data["feedback"])

+

+ # If any of the files are missing metadata/descriptions, those need to be filled-in

+ missing_descriptions = [file.path for file in self.current_state.files if not file.meta.get("description")]

+ if missing_descriptions:

+ log.debug(f"Some files are missing descriptions: {', '.join(missing_descriptions)}, requesting analysis")

+ return AgentResponse.describe_files(self)

+

+ log.debug(f"Current state files: {len(self.current_state.files)}, relevant {self.current_state.relevant_files}")

+ # Check which files are relevant to the current task

+ if self.current_state.files and not self.current_state.relevant_files:

+ await self.get_relevant_files()

+ return AgentResponse.done(self)

+

+ if not self.current_state.unfinished_tasks:

+ log.warning("No unfinished tasks found, nothing to do (why am I called? is this a bug?)")

+ return AgentResponse.done(self)

+

+ if self.current_state.unfinished_iterations:

+ return await self.breakdown_current_iteration()

+

+ # By default, we want to ask the user if they want to run the task,

+ # except in certain cases (such as they've just edited it).

+ if not self.current_state.current_task.get("run_always", False):

+ if not await self.ask_to_execute_task():

+ return AgentResponse.done(self)

+

+ return await self.breakdown_current_task()

+

+ async def breakdown_current_iteration(self, review_feedback: Optional[str] = None) -> AgentResponse:

+ """

+ Breaks down current iteration or task review into steps.

+

+ :param review_feedback: If provided, the task review feedback is broken down instead of the current iteration

+ :return: AgentResponse.done(self) when the breakdown is done

+ """

+ if self.current_state.unfinished_steps:

+ # if this happens, it's most probably a bug as we should have gone through all the

+ # steps before getting new iteration instructions

+ log.warning(

+ f"Unfinished steps found before the next iteration is broken down: {self.current_state.unfinished_steps}"

+ )

+

+ if review_feedback is not None:

+ iteration = None

+ description = review_feedback

+ user_feedback = ""

+ source = "review"

+ n_tasks = 1

+ log.debug(f"Breaking down the task review feedback {review_feedback}")

+ await self.send_message("Breaking down the task review feedback ...")

+ else:

+ iteration = self.current_state.current_iteration

+ if iteration is None:

+ log.error("Iteration breakdown called but there's no current iteration or task review, possible bug?")

+ return AgentResponse.done(self)

+

+ description = iteration["description"]

+ user_feedback = iteration["user_feedback"]

+ source = "troubleshooting"

+ n_tasks = len(self.next_state.iterations)

+ log.debug(f"Breaking down the iteration {description}")

+ await self.send_message("Breaking down the current task iteration ...")

+

+ await self.ui.send_task_progress(

+ n_tasks, # iterations and reviews can be created only one at a time, so we are always on last one

+ n_tasks,

+ self.current_state.current_task["description"],

+ source,

+ "in-progress",

+ )

+ llm = self.get_llm()

+ # FIXME: In case of iteration, parse_task depends on the context (files, tasks, etc) set there.

+ # Ideally this prompt would be self-contained.

+ convo = (

+ AgentConvo(self)

+ .template(

+ "iteration",

+ current_task=self.current_state.current_task,

+ user_feedback=user_feedback,

+ user_feedback_qa=None,

+ next_solution_to_try=None,

+ )

+ .assistant(description)

+ .template("parse_task")

+ .require_schema(TaskSteps)

+ )

+ response: TaskSteps = await llm(convo, parser=JSONParser(TaskSteps), temperature=0)

+

+ self.set_next_steps(response, source)

+

+ if iteration:

+ self.next_state.complete_iteration()

+

+ return AgentResponse.done(self)

+

+ async def breakdown_current_task(self) -> AgentResponse:

+ task = self.current_state.current_task

+ source = self.current_state.current_epic.get("source", "app")

+ await self.ui.send_task_progress(

+ self.current_state.tasks.index(self.current_state.current_task) + 1,

+ len(self.current_state.tasks),

+ self.current_state.current_task["description"],

+ source,

+ "in-progress",

+ )

+

+ log.debug(f"Breaking down the current task: {task['description']}")

+ await self.send_message("Thinking about how to implement this task ...")

+

+ current_task_index = self.current_state.tasks.index(task)

+

+ llm = self.get_llm()

+ convo = AgentConvo(self).template(

+ "breakdown",

+ task=task,

+ iteration=None,

+ current_task_index=current_task_index,

+ )

+ response: str = await llm(convo)

+

+ # FIXME: check if this is correct, as SQLAlchemy can't detect in-place modifications

+ # to attributes; however, self.next_state is not saved yet, so this may be fine

+ self.next_state.tasks[current_task_index] = {

+ **task,

+ "instructions": response,

+ }

+

+ await self.send_message("Breaking down the task into steps ...")

+ convo.template("parse_task").require_schema(TaskSteps)

+ response: TaskSteps = await llm(convo, parser=JSONParser(TaskSteps), temperature=0)

+

+ # There might be state leftovers from previous tasks that we need to clean here

+ self.next_state.modified_files = {}

+ self.set_next_steps(response, source)

+ return AgentResponse.done(self)

+

+ async def get_relevant_files(self) -> AgentResponse:

+ log.debug("Getting relevant files for the current task")

+ await self.send_message("Figuring out which project files are relevant for the next task ...")

+

+ llm = self.get_llm()

+ convo = AgentConvo(self).template("filter_files", current_task=self.current_state.current_task)

+

+ # FIXME: this doesn't validate correct structure format, we should use pydantic for that as well

+ llm_response: list[str] = await llm(convo, parser=JSONParser(), temperature=0)

+

+ existing_files = {file.path for file in self.current_state.files}

+ self.next_state.relevant_files = [path for path in llm_response if path in existing_files]

+

+ return AgentResponse.done(self)

+

+ def set_next_steps(self, response: TaskSteps, source: str):

+ # For logging/debugging purposes, we don't want to remove the finished steps

+ # until we're done with the task.

+ finished_steps = [step for step in self.current_state.steps if step["completed"]]

+ self.next_state.steps = finished_steps + [

+ {

+ "id": uuid4().hex,

+ "completed": False,

+ "source": source,

+ **step.model_dump(),

+ }

+ for step in response.steps

+ ]

+ if len(self.next_state.unfinished_steps) > 0:

+ self.next_state.steps += [

+ # TODO: add refactor step here once we have the refactor agent

+ {

+ "id": uuid4().hex,

+ "completed": False,

+ "type": "review_task",

+ "source": source,

+ },

+ {

+ "id": uuid4().hex,

+ "completed": False,

+ "type": "create_readme",

+ "source": source,

+ },

+ ]

+ log.debug(f"Next steps: {self.next_state.unfinished_steps}")

+

+ async def ask_to_execute_task(self) -> bool:

+ """

+ Asks the user to approve, skip or edit the current task.

+

+ If the task is edited, the method returns False so that the changes are saved. The

+ Orchestrator will rerun the agent on the next iteration.

+

+ :return: True if the task should be executed as is, False if the task is skipped or edited

+ """

+ description = self.current_state.current_task["description"]

+ user_response = await self.ask_question(

+ "Do you want to execute this task:\n\n" + description,

+ buttons={"yes": "Yes", "edit": "Edit Task", "skip": "Skip Task"},

+ default="yes",

+ buttons_only=True,

+ )

+ if user_response.button == "yes":

+ # Execute the task as is

+ return True

+

+ if user_response.cancelled or user_response.button == "skip":

+ log.info(f"Skipping task: {description}")

+ self.next_state.current_task["instructions"] = "(skipped on user request)"

+ self.next_state.complete_task()

+ await self.send_message(f"Skipping task {description}")

+ # We're done here, and will pick up the next task (if any) on the next run

+ return False

+

+ user_response = await self.ask_question(

+ "Edit the task description:",

+ buttons={

+ # FIXME: Continue doesn't actually work, VSCode doesn't send the user

+ # message if it's clicked. Long term we need to fix the extension.

+ # "continue": "Continue",

+ "cancel": "Cancel",

+ },

+ default="continue",

+ initial_text=description,

+ )

+ if user_response.button == "cancel" or user_response.cancelled:

+ # User hasn't edited the task so we can execute it immediately as is

+ return True

+

+ self.next_state.current_task["description"] = user_response.text

+ self.next_state.current_task["run_always"] = True

+ self.next_state.relevant_files = []

+ log.info(f"Task description updated to: {user_response.text}")

+ # Orchestrator will rerun us with the new task description

+ return False
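`set_next_steps` keeps the already-completed steps, appends the newly parsed ones with fresh IDs, and schedules the `review_task` and `create_readme` wrap-up steps only when something is left to do. A minimal sketch of that bookkeeping, assuming plain dicts in place of the pydantic `Step` models (each dict stands in for `step.model_dump()`) and a hypothetical function name `next_steps`:

```python
from uuid import uuid4


def next_steps(current_steps: list[dict], new_steps: list[dict], source: str) -> list[dict]:
    # Completed steps are kept for logging/debugging until the task is done;
    # unfinished current steps are discarded and replaced by the new ones.
    finished = [s for s in current_steps if s["completed"]]
    steps = finished + [
        # Each new step gets a fresh ID; the step's own fields are merged last,
        # as with **step.model_dump() in the agent.
        {"id": uuid4().hex, "completed": False, "source": source, **step}
        for step in new_steps
    ]
    # Wrap-up steps are added only if there is unfinished work.
    if any(not s["completed"] for s in steps):
        steps += [
            {"id": uuid4().hex, "completed": False, "type": step_type, "source": source}
            for step_type in ("review_task", "create_readme")
        ]
    return steps


current = [
    {"id": "a", "completed": True, "type": "command", "source": "app"},
    {"id": "b", "completed": False, "type": "save_file", "source": "app"},
]
parsed = [{"type": "save_file", "save_file": {"path": "main.py"}}]

steps = next_steps(current, parsed, "troubleshooting")
print([s["type"] for s in steps])
# ['command', 'save_file', 'review_task', 'create_readme']
print([s["type"] for s in next_steps(current, [], "app")])  # ['command']
```

When the LLM returns no steps at all, nothing is unfinished, so no review or README step is scheduled.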

diff --git a/core/agents/error_handler.py b/core/agents/error_handler.py

new file mode 100644

index 00000000..d74b6fb3

--- /dev/null

+++ b/core/agents/error_handler.py

@@ -0,0 +1,108 @@

+from uuid import uuid4

+

+from core.agents.base import BaseAgent

+from core.agents.convo import AgentConvo

+from core.agents.response import AgentResponse

+from core.log import get_logger

+

+log = get_logger(__name__)

+

+

+class ErrorHandler(BaseAgent):

+ """

+ Error handler agent.

+

+ Error handler is responsible for handling errors returned by other agents. If it's possible

+ to recover from the error, it should do it (which may include updating the "next" state) and

+ return DONE. Otherwise it should return EXIT to tell Orchestrator to quit the application.

+ """

+

+ agent_type = "error-handler"

+ display_name = "Error Handler"

+

+ async def run(self) -> AgentResponse:

+ from core.agents.executor import Executor

+ from core.agents.spec_writer import SpecWriter

+

+ error = self.prev_response

+ if error is None:

+ log.warning("ErrorHandler called without a previous error", stack_info=True)

+ return AgentResponse.done(self)

+

+ log.error(

+ f"Agent {error.agent.display_name} returned error response: {error.type}",

+ extra={"data": error.data},

+ )

+

+ if isinstance(error.agent, SpecWriter):

+ # If SpecWriter wasn't able to get the project description, there's nothing for

+ # us to do.

+ return AgentResponse.exit(self)

+

+ if isinstance(error.agent, Executor):

+ return await self.handle_command_error(

+ error.data.get("message", "Unknown error"), error.data.get("details", {})

+ )

+

+ log.error(

+ f"Unhandled error response from agent {error.agent.display_name}",

+ extra={"data": error.data},

+ )

+ return AgentResponse.exit(self)

+

+ async def handle_command_error(self, message: str, details: dict) -> AgentResponse:

+ """

+ Handle an error returned by Executor agent.

+

+ Error message must be the analysis of the command execution, and the details must contain:

+ * cmd - command that was executed

+ * timeout - timeout for the command if any (or None if no timeout was used)

+ * status_code - exit code for the command (or None if the command timed out)

+ * stdout - standard output of the command

+ * stderr - standard error of the command

+

+ :return: AgentResponse

+ """

+ cmd = details.get("cmd")

+ timeout = details.get("timeout")

+ status_code = details.get("status_code")

+ stdout = details.get("stdout", "")

+ stderr = details.get("stderr", "")

+

+ if not message:

+ raise ValueError("No error message provided in command error response")

+ if not cmd:

+ raise ValueError("No command provided in command error response details")

+

+ llm = self.get_llm()

+ convo = AgentConvo(self).template(

+ "debug",

+ task_steps=self.current_state.steps,

+ current_task=self.current_state.current_task,

+ # FIXME: list.index() raises ValueError if current_step is not in steps

+ step_index=self.current_state.steps.index(self.current_state.current_step),

+ cmd=cmd,

+ timeout=timeout,

+ stdout=stdout,

+ stderr=stderr,

+ status_code=status_code,

+ # FIXME: everything above is copy-pasted from Executor

+ analysis=message,

+ )

+ llm_response: str = await llm(convo)

+

+ # TODO: duplicate from Troubleshooter, maybe extract to a ProjectState method?

+ self.next_state.iterations = self.current_state.iterations + [

+ {

+ "id": uuid4().hex,

+ "user_feedback": f"Error running command: {cmd}",

+ "description": llm_response,

+ "alternative_solutions": [],

+ "attempts": 1,

+ "completed": False,

+ }

+ ]

+ # TODO: maybe have ProjectState.finished_steps as well? would make the debug/ran_command prompts nicer too

+ self.next_state.steps = [s for s in self.current_state.steps if s.get("completed") is True]

+ # No need to call complete_step() here as we've just removed the steps so that Developer can break down the iteration

+ return AgentResponse.done(self)
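After the LLM has analyzed a failed command, `handle_command_error` records a new iteration and drops every step that isn't completed, so that the Developer breaks down the iteration next. The sketch below captures that state transition with plain lists; `record_command_failure` is a hypothetical helper name, and the LLM call is replaced by a description string passed in by the caller.

```python
from uuid import uuid4


def record_command_failure(
    iterations: list[dict], steps: list[dict], cmd: str, description: str
) -> tuple[list[dict], list[dict]]:
    """Return (new_iterations, remaining_steps) after a failed command."""
    if not cmd:
        # handle_command_error() refuses to proceed without the failed command.
        raise ValueError("No command provided in command error response details")
    new_iteration = {
        "id": uuid4().hex,
        "user_feedback": f"Error running command: {cmd}",
        "description": description,  # the LLM's debugging plan in the real agent
        "alternative_solutions": [],
        "attempts": 1,
        "completed": False,
    }
    # Build new lists instead of mutating the inputs, so "current" state stays intact.
    remaining_steps = [s for s in steps if s.get("completed") is True]
    return iterations + [new_iteration], remaining_steps


steps = [
    {"id": "s1", "type": "save_file", "completed": True},
    {"id": "s2", "type": "command", "completed": False},
    {"id": "s3", "type": "review_task", "completed": False},
]
iterations, remaining = record_command_failure([], steps, "npm test", "Install missing dependency")
print([s["id"] for s in remaining])  # ['s1']
print(iterations[0]["user_feedback"])  # Error running command: npm test
```

Both the failed command step and the pending wrap-up steps are dropped; the Developer will regenerate them when it breaks down the new iteration.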

diff --git a/core/agents/executor.py b/core/agents/executor.py

new file mode 100644

index 00000000..450588d8

--- /dev/null

+++ b/core/agents/executor.py

@@ -0,0 +1,166 @@

+from datetime import datetime

+from typing import Optional

+

+from pydantic import BaseModel, Field

+

+from core.agents.base import BaseAgent

+from core.agents.convo import AgentConvo

+from core.agents.response import AgentResponse

+from core.llm.parser import JSONParser

+from core.log import get_logger

+from core.proc.exec_log import ExecLog

+from core.proc.process_manager import ProcessManager

+from core.state.state_manager import StateManager

+from core.ui.base import AgentSource, UIBase

+

+log = get_logger(__name__)

+

+

+class CommandResult(BaseModel):

+ """

+ Analysis of the command run and decision on the next steps.

+ """

+

+ analysis: str = Field(

+ description="Analysis of the command output (stdout, stderr) and exit code, in context of the current task"

+ )

+ success: bool = Field(

+ description="True if the command should be treated as successful and the task should continue, false if the command unexpectedly failed and we should debug the issue"

+ )

+

+

+class Executor(BaseAgent):

+ agent_type = "executor"

+ display_name = "Executor"

+

+ def __init__(

+ self,

+ state_manager: StateManager,

+ ui: UIBase,

+ ):

+ """

+ Create a new Executor agent

+ """

+ self.ui_source = AgentSource(self.display_name, self.agent_type)

+ self.ui = ui

+ self.state_manager = state_manager

+ self.process_manager = ProcessManager(

+ root_dir=state_manager.get_full_project_root(),

+ output_handler=self.output_handler,

+ exit_handler=self.exit_handler,

+ )

+ self.stream_output = True

+ self.step = None

+

+ def for_step(self, step):

+ # FIXME: not needed, refactor to use self.current_state.current_step

+ # in general, passing current step is not needed

+ self.step = step

+ return self

+

+ async def output_handler(self, out, err):

+ await self.stream_handler(out)

+ await self.stream_handler(err)

+

+ async def exit_handler(self, process):

+ pass

+

+ async def run(self) -> AgentResponse:

+ if not self.step:

+ raise ValueError("No current step set (probably an Orchestrator bug)")

+

+ options = self.step["command"]

+ cmd = options["command"]

+ timeout = options.get("timeout")

+

+ if timeout:

+ q = f"Can I run command: {cmd} with {timeout}s timeout?"

+ else:

+ q = f"Can I run command: {cmd}?"

+

+ confirm = await self.ask_question(

+ q,

+ buttons={"yes": "Yes", "no": "No"},

+ default="yes",

+ buttons_only=True,

+ )

+ if confirm.button == "no":

+ log.info(f"Skipping command execution of `{cmd}` (requested by user)")

+ await self.send_message(f"Skipping command {cmd}")

+ self.complete()

+ return AgentResponse.done(self)

+

+ started_at = datetime.now()

+

+ log.info(f"Running command `{cmd}`" + (f" with timeout {timeout}s" if timeout else ""))

+ status_code, stdout, stderr = await self.process_manager.run_command(cmd, timeout=timeout)

+ duration = (datetime.now() - started_at).total_seconds()

+

+ llm_response = await self.check_command_output(cmd, timeout, stdout, stderr, status_code)

+

+ self.complete()

+

+ exec_log = ExecLog(

+ started_at=started_at,

+ duration=duration,

+ cmd=cmd,

+ cwd=".",

+ env={},

+ timeout=timeout,

+ status_code=status_code,

+ stdout=stdout,

+ stderr=stderr,

+ analysis=llm_response.analysis,

+ success=llm_response.success,

+ )

+ await self.state_manager.log_command_run(exec_log)

+

+ if llm_response.success:

+ return AgentResponse.done(self)

+

+ return AgentResponse.error(

+ self,

+ llm_response.analysis,

+ {

+ "cmd": cmd,

+ "timeout": timeout,

+ "stdout": stdout,

+ "stderr": stderr,

+ "status_code": status_code,

+ },

+ )

+

+ async def check_command_output(

+ self, cmd: str, timeout: Optional[int], stdout: str, stderr: str, status_code: int

+ ) -> CommandResult:

+ llm = self.get_llm()

+ convo = (

+ AgentConvo(self)

+ .template(

+ "ran_command",

+ task_steps=self.current_state.steps,

+ current_task=self.current_state.current_task,

+ # FIXME: can step ever happen *not* to be in current steps?

+ step_index=self.current_state.steps.index(self.step),

+ cmd=cmd,

+ timeout=timeout,

+ stdout=stdout,

+ stderr=stderr,

+ status_code=status_code,

+ )

+ .require_schema(CommandResult)

+ )

+ return await llm(convo, parser=JSONParser(spec=CommandResult), temperature=0)

+

+ def complete(self):

+ """

+ Mark the step as complete.

+

+ Note that this marks the step complete in the next state. If there's an error,

+ the state won't get committed and the error handler will have access to the

+ current state, where this step is still unfinished.

+

+ This is intentional, so that the error handler can decide what to do with the

+ information we give it.

+ """

+ self.step = None

+ self.next_state.complete_step()
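`Executor.run()` delegates to `ProcessManager.run_command(cmd, timeout=timeout)` and expects a `(status_code, stdout, stderr)` tuple back. `ProcessManager` itself is not part of this section. The sketch below is a hypothetical stand-alone equivalent built on `asyncio` subprocesses. It assumes that a command exceeding its timeout is killed and reported with its non-zero exit code. The real implementation also streams output through `output_handler` and may handle timeouts differently.

```python
import asyncio
import sys
from datetime import datetime
from typing import Optional


async def run_command(cmd: str, timeout: Optional[float] = None) -> tuple[int, str, str]:
    """Hypothetical stand-in for ProcessManager.run_command.

    Runs `cmd` through the shell and returns (status_code, stdout, stderr).
    If `timeout` seconds elapse first, the process is killed (an assumption of
    this sketch) and its non-zero exit code is returned.
    """
    proc = await asyncio.create_subprocess_shell(
        cmd,
        stdout=asyncio.subprocess.PIPE,
        stderr=asyncio.subprocess.PIPE,
    )
    # Drain both pipes concurrently so a chatty process cannot block on a full pipe.
    stdout_task = asyncio.create_task(proc.stdout.read())
    stderr_task = asyncio.create_task(proc.stderr.read())
    try:
        # timeout=None waits indefinitely, matching a step without a "timeout" option.
        await asyncio.wait_for(proc.wait(), timeout=timeout)
    except asyncio.TimeoutError:
        proc.kill()
        await proc.wait()
    stdout = (await stdout_task).decode(errors="replace")
    stderr = (await stderr_task).decode(errors="replace")
    return proc.returncode, stdout, stderr


if __name__ == "__main__":
    # Measure the command's duration the same way Executor.run() does.
    started_at = datetime.now()
    status_code, stdout, stderr = asyncio.run(
        run_command(f'"{sys.executable}" -c "print(42)"', timeout=10)
    )
    duration = (datetime.now() - started_at).total_seconds()
    print(status_code, stdout.strip(), f"{duration:.2f}s")
```

Reading the pipes in separate tasks, rather than cancelling `communicate()` on timeout, keeps any output produced before the kill instead of discarding partially read data.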
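The `complete()` docstring describes a two-state pattern. The step is marked done on `next_state`, and only a successful commit promotes that change to `current_state`, so after an error the handler still sees the step as unfinished. Below is a minimal sketch of that pattern. `ProjectState`, this `StateManager`, and `run_step` are hypothetical, heavily simplified classes, not the project's real `StateManager` API.

```python
import copy
from dataclasses import dataclass, field


@dataclass
class ProjectState:
    """Hypothetical, simplified project state: just a list of steps."""

    steps: list[dict] = field(default_factory=list)

    def complete_step(self) -> None:
        # Mark the first unfinished step as completed.
        for step in self.steps:
            if not step.get("completed"):
                step["completed"] = True
                return
        raise ValueError("No unfinished step to complete")


class StateManager:
    """Hypothetical two-state manager: agents mutate `next_state`,
    and only `commit()` promotes it to `current_state`."""

    def __init__(self, state: ProjectState):
        self.current_state = state
        self.next_state = copy.deepcopy(state)

    def commit(self) -> None:
        self.current_state = self.next_state
        self.next_state = copy.deepcopy(self.current_state)


def run_step(sm: StateManager, succeeded: bool) -> None:
    # Like Executor.complete(): mark the step done in next_state before the outcome is known.
    sm.next_state.complete_step()
    if succeeded:
        sm.commit()
    # On failure nothing is committed, so an error handler reading
    # sm.current_state still sees the step as unfinished.
```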

diff --git a/core/agents/human_input.py b/core/agents/human_input.py

new file mode 100644

index 00000000..5bd62d29

--- /dev/null

+++ b/core/agents/human_input.py

@@ -0,0 +1,46 @@

+from core.agents.base import BaseAgent

+from core.agents.response import AgentResponse, ResponseType

+

+

+class HumanInput(BaseAgent):

+ agent_type = "human-input"

+ display_name = "Human Input"

+

+ async def run(self) -> AgentResponse:

+ if self.prev_response and self.prev_response.type == ResponseType.INPUT_REQUIRED:

+ return await self.input_required(self.prev_response.data.get("files", []))

+

+ return await self.human_intervention(self.step)

+

+ async def human_intervention(self, step) -> AgentResponse:

+ description = step["human_intervention_description"]

+

+ await self.ask_question(

+ f"I need human intervention: {description}",

+ buttons={"continue": "Continue"},

+ default="continue",

+ buttons_only=True,

+ )

+ self.next_state.complete_step()

+ return AgentResponse.done(self)

+

+ async def input_required(self, files: list[dict]) -> AgentResponse:

+ for item in files:

+ file = item["file"]

+ line = item["line"]

+

+ # FIXME: this is an ugly hack, we shouldn't need to know how to get to VFS and

+ # anyway, the full path is only available for local vfs, so this is doubly wrong;

+ # instead, we should just send the relative path to the extension and it should

+ # figure out where its local files are and how to open it.

+ full_path = self.state_manager.file_system.get_full_path(file)

+

+ await self.send_message(f"Input required on {file}:{line}")

+ await self.ui.open_editor(full_path, line)

+ await self.ask_question(

+ f"Please open {file} and modify line {line} according to the instructions.",

+ buttons={"continue": "Continue"},

+ default="continue",

+ buttons_only=True,

+ )

+ return AgentResponse.done(self)
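The FIXME in `input_required()` notes that a full path can only be produced for a local VFS. For a local project directory, such a lookup might resemble the sketch below. `get_full_path` here is a hypothetical stand-alone helper, not the real `file_system.get_full_path`. Its check that rejects paths escaping the project root is an addition of this sketch.

```python
import os


def get_full_path(root: str, relative_path: str) -> str:
    """Hypothetical local-VFS helper: resolve `relative_path` against the
    project `root`, refusing anything that would land outside the root."""
    root = os.path.realpath(root)
    full = os.path.realpath(os.path.join(root, relative_path))
    # commonpath() equals root only if `full` lies inside it.
    if os.path.commonpath([root, full]) != root:
        raise ValueError(f"{relative_path!r} is outside the project root")
    return full
```

Following the FIXME's suggestion, the agent would send only `relative_path` and let the editor extension perform this resolution on its side.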

diff --git a/core/agents/mixins.py b/core/agents/mixins.py

new file mode 100644

index 00000000..5ea0aae7

--- /dev/null

+++ b/core/agents/mixins.py

@@ -0,0 +1,37 @@

+from typing import Optional

+

+from core.agents.convo import AgentConvo

+

+

+class IterationPromptMixin:

+ """

+ Provides a method to find a solution to a problem based on user feedback.

+

+ Used by ProblemSolver and Troubleshooter agents.

+ """

+

+ async def find_solution(

+ self,