Mirror of https://github.com/Significant-Gravitas/AutoGPT.git (synced 2026-01-10 07:38:04 -05:00)

Merge branch 'master' into master

.coveragerc (Normal file, 2 changes)

@@ -0,0 +1,2 @@
[run]
relative_files = true

.github/ISSUE_TEMPLATE/1.bug.yml (vendored, 14 changes)

@@ -57,6 +57,20 @@ body:
- Other (Please specify in your problem)
validations:
required: true
- type: dropdown
attributes:
label: Which version of Auto-GPT are you using?
description: |
Please select which version of Auto-GPT you were using when this issue occurred.
If you downloaded the code from the [releases page](https://github.com/Significant-Gravitas/Auto-GPT/releases/) make sure you were using the latest code.
**If you weren't please try with the [latest code](https://github.com/Significant-Gravitas/Auto-GPT/releases/)**.
If installed with git you can run `git branch` to see which version of Auto-GPT you are running.
options:
- Latest Release
- Stable (branch)
- Master (branch)
validations:
required: true
- type: dropdown
attributes:
label: GPT-3 or GPT-4?

.github/workflows/ci.yml (vendored, 25 changes)

@@ -42,6 +42,14 @@ jobs:
if: success() || failure()

test:
permissions:
# Gives the action the necessary permissions for publishing new
# comments in pull requests.
pull-requests: write
# Gives the action the necessary permissions for pushing data to the
# python-coverage-comment-action branch, and for editing existing
# comments (to avoid publishing multiple comments in the same PR)
contents: write
runs-on: ubuntu-latest
strategy:
matrix:

@@ -63,10 +71,25 @@ jobs:

- name: Run unittest tests with coverage
run: |
pytest --cov=autogpt --without-integration --without-slow-integration
pytest --cov=autogpt --without-integration --without-slow-integration --cov-report term-missing --cov-branch --cov-report xml --cov-report term

- name: Generate coverage report
run: |
coverage report
coverage xml
if: success() || failure()

- name: Coverage comment
id: coverage_comment
uses: py-cov-action/python-coverage-comment-action@v3
with:
GITHUB_TOKEN: ${{ github.token }}

- name: Store Pull Request comment to be posted
uses: actions/upload-artifact@v3
if: steps.coverage_comment.outputs.COMMENT_FILE_WRITTEN == 'true'
with:
# If you use a different name, update COMMENT_ARTIFACT_NAME accordingly
name: python-coverage-comment-action
# If you use a different name, update COMMENT_FILENAME accordingly
path: python-coverage-comment-action.txt

.github/workflows/coverage.yml (vendored, Normal file, 36 changes)

@@ -0,0 +1,36 @@
# .github/workflows/coverage.yml
name: Post coverage comment

on:
  workflow_run:
    workflows: ["Python CI"]
    types:
      - completed

jobs:
  test:
    name: Run tests & display coverage
    runs-on: ubuntu-latest
    if: github.event.workflow_run.event == 'pull_request' && github.event.workflow_run.conclusion == 'success'
    permissions:
      # Gives the action the necessary permissions for publishing new
      # comments in pull requests.
      pull-requests: write
      # Gives the action the necessary permissions for editing existing
      # comments (to avoid publishing multiple comments in the same PR)
      contents: write
      # Gives the action the necessary permissions for looking up the
      # workflow that launched this workflow, and download the related
      # artifact that contains the comment to be published
      actions: read
    steps:
      # DO NOT run actions/checkout here, for security reasons
      # For details, refer to https://securitylab.github.com/research/github-actions-preventing-pwn-requests/
      - name: Post comment
        uses: py-cov-action/python-coverage-comment-action@v3
        with:
          GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
          GITHUB_PR_RUN_ID: ${{ github.event.workflow_run.id }}
          # Update those if you changed the default values:
          # COMMENT_ARTIFACT_NAME: python-coverage-comment-action
          # COMMENT_FILENAME: python-coverage-comment-action.txt

@@ -1,6 +1,6 @@
repos:
- repo: https://github.com/pre-commit/pre-commit-hooks
rev: v0.9.2
rev: v4.4.0
hooks:
- id: check-added-large-files
args: ['--maxkb=500']

README.md (147 changes)

@@ -26,9 +26,8 @@ Demo made by <a href=https://twitter.com/BlakeWerlinger>Blake Werlinger</a>

<h2 align="center"> 💖 Help Fund Auto-GPT's Development 💖</h2>
<p align="center">
If you can spare a coffee, you can help to cover the costs of developing Auto-GPT and help push the boundaries of fully autonomous AI!
Your support is greatly appreciated
Development of this free, open-source project is made possible by all the <a href="https://github.com/Significant-Gravitas/Auto-GPT/graphs/contributors">contributors</a> and <a href="https://github.com/sponsors/Torantulino">sponsors</a>. If you'd like to sponsor this project and have your avatar or company logo appear below <a href="https://github.com/sponsors/Torantulino">click here</a>.
If you can spare a coffee, you can help to cover the costs of developing Auto-GPT and help to push the boundaries of fully autonomous AI!
Your support is greatly appreciated. Development of this free, open-source project is made possible by all the <a href="https://github.com/Significant-Gravitas/Auto-GPT/graphs/contributors">contributors</a> and <a href="https://github.com/sponsors/Torantulino">sponsors</a>. If you'd like to sponsor this project and have your avatar or company logo appear below <a href="https://github.com/sponsors/Torantulino">click here</a>.
</p>

@@ -52,7 +51,7 @@ Development of this free, open-source project is made possible by all the <a hre
## 🚀 Features

- 🌐 Internet access for searches and information gathering
- 💾 Long-Term and Short-Term memory management
- 💾 Long-term and short-term memory management
- 🧠 GPT-4 instances for text generation
- 🔗 Access to popular websites and platforms
- 🗃️ File storage and summarization with GPT-3.5

@@ -75,56 +74,60 @@ Development of this free, open-source project is made possible by all the <a hre

## ⚠️ OpenAI API Keys Configuration ⚠️

Obtain your OpenAI API key from: https://platform.openai.com/account/api-keys.
Get your OpenAI API key from: https://platform.openai.com/account/api-keys.

To use OpenAI API key for Auto-GPT, you **NEED** to have billing set up (AKA paid account).

You can set up paid account at https://platform.openai.com/account/billing/overview.

Important: It's highly recommended that you track your usage on [the Usage page](https://platform.openai.com/account/usage)
You can also set limits on how much you spend on [the Usage limits page](https://platform.openai.com/account/billing/limits).

#### **PLEASE ENSURE YOU HAVE DONE THIS STEP BEFORE PROCEEDING, OTHERWISE NOTHING WILL WORK!**
#### **PLEASE ENSURE YOU HAVE DONE THIS STEP BEFORE PROCEEDING. OTHERWISE, NOTHING WILL WORK!**

## 💾 Installation

To install Auto-GPT, follow these steps:

1. Make sure you have all the **requirements** listed above, if not, install/get them
1. Make sure you have all the **requirements** listed above. If not, install/get them.

_To execute the following commands, open a CMD, Bash, or Powershell window by navigating to a folder on your computer and typing `CMD` in the folder path at the top, then press enter._

2. Clone the repository: For this step, you need Git installed. Alternatively,
you can download the [latest stable release](https://github.com/Significant-Gravitas/Auto-GPT/releases/latest) (`Source code (zip)`, bottom of the page).
2. Clone the repository: For this step, you need Git installed.
Note: If you don't have Git, you can just download the [latest stable release](https://github.com/Significant-Gravitas/Auto-GPT/releases/latest) instead (`Source code (zip)`, at the bottom of the page).

```bash
git clone -b stable https://github.com/Significant-Gravitas/Auto-GPT.git
```

3. Navigate to the directory where the repository was downloaded
3. Navigate to the directory where you downloaded the repository.

```bash
cd Auto-GPT
```

4. Install the required dependencies
4. Install the required dependencies.

```bash
pip install -r requirements.txt
```

5. Configure Auto-GPT
1. Locate the file named `.env.template` in the main `/Auto-GPT` folder.
2. Create a copy of this file, called `.env` by removing the `template` extension. The easiest way is to do this in a command prompt/terminal window `cp .env.template .env`.
3. Open the `.env` file in a text editor. _Note: Files starting with a dot might be hidden by your Operating System._
5. Configure Auto-GPT:
1. Find the file named `.env.template` in the main /Auto-GPT folder. This file may be hidden by default in some operating systems due to the dot prefix. To reveal hidden files, follow the instructions for your specific operating system (e.g., in Windows, click on the "View" tab in File Explorer and check the "Hidden items" box; in macOS, press Cmd + Shift + .).
2. Create a copy of this file and call it `.env` by removing the `template` extension. The easiest way is to do this in a command prompt/terminal window `cp .env.template .env`.
3. Open the `.env` file in a text editor.
4. Find the line that says `OPENAI_API_KEY=`.
5. After the `"="`, enter your unique OpenAI API Key (without any quotes or spaces).
6. Enter any other API keys or Tokens for services you would like to utilize.
6. Enter any other API keys or Tokens for services you would like to use.
7. Save and close the `.env` file.

By completing these steps, you have properly configured the API Keys for your project.
After you complete these steps, you'll have properly configured the API keys for your project.
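The copy-and-edit steps above can also be scripted. The following is a minimal sketch, not part of Auto-GPT: `set_env_value` and `create_env_file` are hypothetical helpers, and the sketch assumes `.env.template` contains a line beginning with `OPENAI_API_KEY=` as described in step 4.

```python
import shutil
from pathlib import Path


def set_env_value(env_text: str, key: str, value: str) -> str:
    """Return env_text with the first `KEY=` line set to `KEY=value`.

    Hypothetical helper: if the key is not present, the assignment is appended.
    """
    lines = env_text.splitlines()
    prefix = f"{key}="
    for i, line in enumerate(lines):
        if line.startswith(prefix):
            lines[i] = f"{prefix}{value}"  # no quotes or spaces, per step 5
            break
    else:
        lines.append(f"{prefix}{value}")
    return "\n".join(lines) + "\n"


def create_env_file(repo_dir: str, api_key: str) -> Path:
    """Copy .env.template to .env (only if .env is missing) and set OPENAI_API_KEY."""
    repo = Path(repo_dir)
    env_path = repo / ".env"
    if not env_path.exists():
        shutil.copyfile(repo / ".env.template", env_path)
    text = env_path.read_text(encoding="utf-8")
    env_path.write_text(set_env_value(text, "OPENAI_API_KEY", api_key), encoding="utf-8")
    return env_path
```

Running `create_env_file(".", "<your key>")` from the `Auto-GPT` folder would leave an existing `.env` in place and only rewrite its `OPENAI_API_KEY=` line.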

- See [OpenAI API Keys Configuration](#openai-api-keys-configuration) to obtain your OpenAI API key.
- Obtain your ElevenLabs API key from: https://elevenlabs.io. You can view your xi-api-key using the "Profile" tab on the website.
Notes:
- See [OpenAI API Keys Configuration](#openai-api-keys-configuration) to get your OpenAI API key.
- Get your ElevenLabs API key from: https://elevenlabs.io. You can view your xi-api-key using the "Profile" tab on the website.
- If you want to use GPT on an Azure instance, set `USE_AZURE` to `True` and then follow these steps:
- Rename `azure.yaml.template` to `azure.yaml` and provide the relevant `azure_api_base`, `azure_api_version` and all the deployment IDs for the relevant models in the `azure_model_map` section:
- `fast_llm_model_deployment_id` - your gpt-3.5-turbo or gpt-4 deployment ID

@@ -141,21 +144,23 @@ _To execute the following commands, open a CMD, Bash, or Powershell window by na

## 🔧 Usage

1. Run `autogpt` Python module in your terminal.
1. Run the `autogpt` Python module in your terminal.
* On Linux/MacOS:
```bash
# On Linux of Mac:
./run.sh

# On Windows:
```
* On Windows:
```bash
.\run.bat
```
Running with `--help` after `.\run.bat` lists all the possible command line arguments you can pass.

2. After each action, choose from options to authorize command(s),
2. After each response from Auto-GPT, choose from the options to authorize command(s),
exit the program, or provide feedback to the AI.
1. Authorize a single command, enter `y`
2. Authorize a series of _N_ continuous commands, enter `y -N`
3. Exit the program, enter `n`
1. Authorize a single command by entering `y`
2. Authorize a series of _N_ continuous commands by entering `y -N`. For example, entering `y -10` would run 10 automatic iterations.
3. Enter any free text to give feedback to Auto-GPT.
4. Exit the program by entering `n`
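The reply options listed above make up a small input grammar. The sketch below sorts a reply into one of the four cases. It is a hypothetical illustration: the `Reply` type and the `parse_reply` function are invented here, and they are not claimed to match how Auto-GPT's own prompt loop parses input.

```python
from dataclasses import dataclass


@dataclass
class Reply:
    action: str      # "authorize", "exit", or "feedback"
    count: int = 0   # number of commands authorized (only for "authorize")
    text: str = ""   # free-text feedback (only for "feedback")


def parse_reply(raw: str) -> Reply:
    """Classify a user reply (hypothetical sketch of the options above)."""
    reply = raw.strip()
    lowered = reply.lower()
    if lowered == "y":
        return Reply("authorize", count=1)
    if lowered == "n":
        return Reply("exit")
    parts = lowered.split()
    # `y -N` authorizes N continuous commands, e.g. `y -10`.
    if len(parts) == 2 and parts[0] == "y" and parts[1].startswith("-"):
        digits = parts[1][1:]
        if digits.isdecimal() and int(digits) > 0:
            return Reply("authorize", count=int(digits))
    # Anything else is passed on as feedback to the AI.
    return Reply("feedback", text=reply)
```

Using `isdecimal()` instead of `isdigit()` rejects characters such as `²`, which `int()` cannot parse.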

### Logs

@@ -174,7 +179,7 @@ You can also build this into a docker image and run it:

```bash
docker build -t autogpt .
docker run -it --env-file=./.env -v $PWD/auto_gpt_workspace:/app/auto_gpt_workspace autogpt
docker run -it --env-file=./.env -v $PWD/auto_gpt_workspace:/home/appuser/auto_gpt_workspace autogpt
```

Or if you have `docker-compose`:

@@ -184,7 +189,7 @@ docker-compose run --build --rm auto-gpt

You can pass extra arguments, for instance, running with `--gpt3only` and `--continuous` mode:
```bash
docker run -it --env-file=./.env -v $PWD/auto_gpt_workspace:/app/auto_gpt_workspace autogpt --gpt3only --continuous
docker run -it --env-file=./.env -v $PWD/auto_gpt_workspace:/home/appuser/auto_gpt_workspace autogpt --gpt3only --continuous
```
```bash
docker-compose run --build --rm auto-gpt --gpt3only --continuous

@@ -211,13 +216,13 @@ Here are some common arguments you can use when running Auto-GPT:

## 🗣️ Speech Mode

Use this to use TTS _(Text-to-Speech)_ for Auto-GPT
Enter this command to use TTS _(Text-to-Speech)_ for Auto-GPT

```bash
python -m autogpt --speak
```

### List of IDs with names from eleven labs, you can use the name or ID:
### List of IDs with names from eleven labs. You can use the name or ID:

- Rachel : 21m00Tcm4TlvDq8ikWAM
- Domi : AZnzlk1XvdvUeBnXmlld

@@ -231,19 +236,36 @@ python -m autogpt --speak

## 🔍 Google API Keys Configuration

This section is optional, use the official google api if you are having issues with error 429 when running a google search.
Note:
This section is optional. use the official google api if you are having issues with error 429 when running a google search.
To use the `google_official_search` command, you need to set up your Google API keys in your environment variables.

Create your project:
1. Go to the [Google Cloud Console](https://console.cloud.google.com/).
2. If you don't already have an account, create one and log in.
3. Create a new project by clicking on the "Select a Project" dropdown at the top of the page and clicking "New Project". Give it a name and click "Create".
4. Go to the [APIs & Services Dashboard](https://console.cloud.google.com/apis/dashboard) and click "Enable APIs and Services". Search for "Custom Search API" and click on it, then click "Enable".
5. Go to the [Credentials](https://console.cloud.google.com/apis/credentials) page and click "Create Credentials". Choose "API Key".
6. Copy the API key and set it as an environment variable named `GOOGLE_API_KEY` on your machine. See setting up environment variables below.
7. [Enable](https://console.developers.google.com/apis/api/customsearch.googleapis.com) the Custom Search API on your project. (Might need to wait few minutes to propagate)
8. Go to the [Custom Search Engine](https://cse.google.com/cse/all) page and click "Add".
9. Set up your search engine by following the prompts. You can choose to search the entire web or specific sites.
10. Once you've created your search engine, click on "Control Panel" and then "Basics". Copy the "Search engine ID" and set it as an environment variable named `CUSTOM_SEARCH_ENGINE_ID` on your machine. See setting up environment variables below.
3. Create a new project by clicking on the "Select a Project" dropdown at the top of the page and clicking "New Project".
4. Give it a name and click "Create".

Set up a custom search API and add to your .env file:
5. Go to the [APIs & Services Dashboard](https://console.cloud.google.com/apis/dashboard).
6. Click "Enable APIs and Services".
7. Search for "Custom Search API" and click on it.
8. Click "Enable".
9. Go to the [Credentials](https://console.cloud.google.com/apis/credentials) page.
10. Click "Create Credentials".
11. Choose "API Key".
12. Copy the API key.
13. Set it as an environment variable named `GOOGLE_API_KEY` on your machine (see how to set up environment variables below).
14. [Enable](https://console.developers.google.com/apis/api/customsearch.googleapis.com) the Custom Search API on your project. (Might need to wait few minutes to propagate)

Set up a custom serach engine and add to your .env file:
15. Go to the [Custom Search Engine](https://cse.google.com/cse/all) page.
16. Click "Add".
17. Set up your search engine by following the prompts. You can choose to search the entire web or specific sites.
18. Once you've created your search engine, click on "Control Panel".
19. Click "Basics".
20. Copy the "Search engine ID".
21. Set it as an environment variable named `CUSTOM_SEARCH_ENGINE_ID` on your machine (see how to set up environment variables below).

_Remember that your free daily custom search quota allows only up to 100 searches. To increase this limit, you need to assign a billing account to the project to profit from up to 10K daily searches._
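Once `GOOGLE_API_KEY` and `CUSTOM_SEARCH_ENGINE_ID` are set, each search is a single HTTPS GET request to Google's Custom Search JSON API. The `key` parameter carries the API key and `cx` carries the search engine ID. The sketch below only builds the request URL, using the standard library. `build_search_url` is a hypothetical helper, not Auto-GPT's `google_official_search` implementation, and every request it leads to counts against the daily quota mentioned above.

```python
import os
from typing import Optional
from urllib.parse import urlencode

# Custom Search JSON API endpoint.
CSE_ENDPOINT = "https://www.googleapis.com/customsearch/v1"


def build_search_url(
    query: str,
    api_key: Optional[str] = None,
    engine_id: Optional[str] = None,
    num: int = 10,
) -> str:
    """Build a Custom Search JSON API request URL (hypothetical helper).

    Falls back to the GOOGLE_API_KEY / CUSTOM_SEARCH_ENGINE_ID environment
    variables configured in the steps above.
    """
    api_key = api_key or os.environ["GOOGLE_API_KEY"]
    engine_id = engine_id or os.environ["CUSTOM_SEARCH_ENGINE_ID"]
    if not 1 <= num <= 10:
        # The API returns at most 10 results per request.
        raise ValueError("num must be between 1 and 10")
    params = {"key": api_key, "cx": engine_id, "q": query, "num": num}
    return f"{CSE_ENDPOINT}?{urlencode(params)}"
```

To fetch results, you can pass the URL to `urllib.request.urlopen` and decode the body with `json.load`. The individual results are in the response's `items` list.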

|

||||

|

||||

@@ -265,19 +287,38 @@ export CUSTOM_SEARCH_ENGINE_ID="YOUR_CUSTOM_SEARCH_ENGINE_ID"

|

||||

|

||||

## Plugins

|

||||

|

||||

See https://github.com/Significant-Gravitas/Auto-GPT-Plugin-Template for the template of the plugins.

|

||||

Use the [Auto-GPT Plugin Template](https://github.com/Significant-Gravitas/Auto-GPT-Plugin-Template) as a starting point for creating your own plugins.

|

||||

|

||||

⚠️💀 WARNING 💀⚠️: Review the code of any plugin you use, this allows for any Python to be executed and do malicious things. Like stealing your API keys.

|

||||

⚠️💀 **WARNING** 💀⚠️: Review the code of any plugin you use thoroughly, as plugins can execute any Python code, potentially leading to malicious activities, such as stealing your API keys.

|

||||

|

||||

Drop the repo's zipfile in the plugins folder.

|

||||

### Plugin Installation Steps

|

||||

|

||||

|

||||

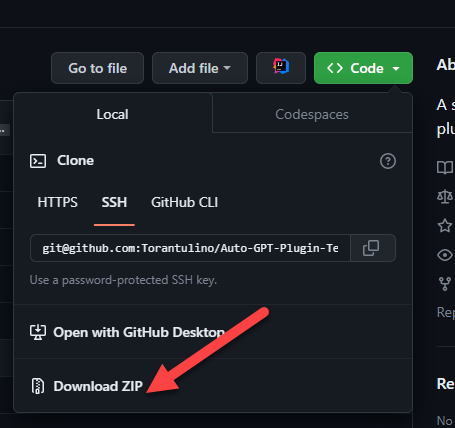

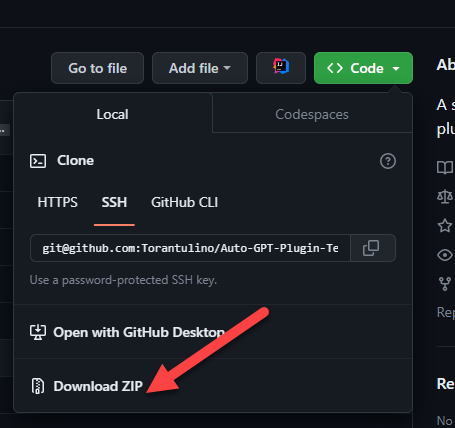

1. **Clone or download the plugin repository:**

|

||||

Clone the plugin repository, or download the repository as a zip file.

|

||||

|

||||

|

||||

|

||||

If you add the plugins class name to the `ALLOWLISTED_PLUGINS` in the `.env` you will not be prompted otherwise you'll be warned before loading the plugin:

|

||||

2. **Install the plugin's dependencies (if any):**

|

||||

Navigate to the plugin's folder in your terminal, and run the following command to install any required dependencies:

|

||||

|

||||

```

|

||||

ALLOWLISTED_PLUGINS=example-plugin1,example-plugin2,example-plugin3

|

||||

```

|

||||

```bash

|

||||

pip install -r requirements.txt

|

||||

```

|

||||

|

||||

3. **Package the plugin as a Zip file:**

|

||||

If you cloned the repository, compress the plugin folder as a Zip file.

|

||||

|

||||

4. **Copy the plugin's Zip file:**

|

||||

Place the plugin's Zip file in the `plugins` folder of the Auto-GPT repository.

|

||||

|

||||

5. **Allowlist the plugin (optional):**

|

||||

Add the plugin's class name to the `ALLOWLISTED_PLUGINS` in the `.env` file to avoid being prompted with a warning when loading the plugin:

|

||||

|

||||

```

|

||||

ALLOWLISTED_PLUGINS=example-plugin1,example-plugin2,example-plugin3

|

||||

```

|

||||

|

||||

If the plugin is not allowlisted, you will be warned before it's loaded.
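Steps 3 to 5 can be sketched in Python with the standard library. This is an illustration under stated assumptions, not Auto-GPT's plugin loader:

- `package_plugin`, `parse_allowlist` and `needs_warning` are hypothetical helpers.
- The archive keeps the plugin folder as its top-level directory, which matches the layout of a repository's "Download ZIP" archive.
- The allowlist check assumes exact, comma-separated names, as in the `ALLOWLISTED_PLUGINS` example.

```python
import os
import shutil
from pathlib import Path


def package_plugin(plugin_dir: str, plugins_folder: str) -> Path:
    """Zip plugin_dir into plugins_folder/<name>.zip (steps 3 and 4)."""
    src = Path(plugin_dir).resolve()
    dest = Path(plugins_folder).resolve()
    dest.mkdir(parents=True, exist_ok=True)
    # root_dir/base_dir keep "<name>/..." as the top-level entry in the archive.
    archive = shutil.make_archive(
        str(dest / src.name), "zip", root_dir=src.parent, base_dir=src.name
    )
    return Path(archive)


def parse_allowlist(value: str) -> list[str]:
    """Split a comma-separated ALLOWLISTED_PLUGINS value (step 5)."""
    return [name.strip() for name in value.split(",") if name.strip()]


def needs_warning(plugin_name: str) -> bool:
    """True if the user should be warned before this plugin is loaded."""
    allowlist = parse_allowlist(os.getenv("ALLOWLISTED_PLUGINS", ""))
    return plugin_name not in allowlist
```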

## Setting Your Cache Type

@@ -296,13 +337,13 @@ To switch to either, change the `MEMORY_BACKEND` env variable to the value that
### Redis Setup
> _**CAUTION**_ \
This is not intended to be publicly accessible and lacks security measures. Therefore, avoid exposing Redis to the internet without a password or at all
1. Install docker (or Docker Desktop on Windows)
2. Launch Redis container
1. Install docker (or Docker Desktop on Windows).
2. Launch Redis container.
```bash
docker run -d --name redis-stack-server -p 6379:6379 redis/redis-stack-server:latest
```
> See https://hub.docker.com/r/redis/redis-stack-server for setting a password and additional configuration.
3. Set the following settings in `.env`
3. Set the following settings in `.env`.
> Replace **PASSWORD** in angled brackets (<>)
```bash
MEMORY_BACKEND=redis

@@ -320,7 +361,7 @@ MEMORY_INDEX=<WHATEVER>

### 🌲 Pinecone API Key Setup

Pinecone enables the storage of vast amounts of vector-based memory, allowing for only relevant memories to be loaded for the agent at any given time.
Pinecone lets you store vast amounts of vector-based memory, allowing the agent to load only relevant memories at any given time.

1. Go to [pinecone](https://app.pinecone.io/) and make an account if you don't already have one.
2. Choose the `Starter` plan to avoid being charged.

@@ -467,7 +508,7 @@ By default, Auto-GPT uses DALL-e for image generation. To use Stable Diffusion,
Once you have a token, set these variables in your `.env`:

```bash
IMAGE_PROVIDER=sd
IMAGE_PROVIDER=huggingface
HUGGINGFACE_API_TOKEN="YOUR_HUGGINGFACE_API_TOKEN"
```

@@ -22,10 +22,10 @@ def analyze_code(code: str) -> list[str]:
improve the code.
"""

function_string = "def analyze_code(code: str) -> List[str]:"
function_string = "def analyze_code(code: str) -> list[str]:"
args = [code]
description_string = (
"Analyzes the given code and returns a list of suggestions" " for improvements."
"Analyzes the given code and returns a list of suggestions for improvements."
)

return call_ai_function(function_string, args, description_string)

@@ -48,8 +48,7 @@ def log_operation(operation: str, filename: str) -> None:
if not os.path.exists(LOG_FILE_PATH):
with open(LOG_FILE_PATH, "w", encoding="utf-8") as f:
f.write("File Operation Logger ")

append_to_file(LOG_FILE, log_entry, should_log=False)
append_to_file(str(LOG_FILE_PATH), log_entry, should_log=False)


def split_file(
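The unit test added in this commit fixes the chunking behaviour of `split_file`: `split_file("abcdefghij", max_length=4, overlap=1)` must yield `["abcd", "defg", "ghij"]`. The generator below is a minimal sketch that satisfies that test. Each chunk starts `max_length - overlap` characters after the previous one. It is named `split_text` (hypothetical) because it is written for illustration and is not the body of Auto-GPT's `split_file`, which this diff does not show.

```python
from collections.abc import Iterator


def split_text(content: str, max_length: int, overlap: int = 0) -> Iterator[str]:
    """Yield chunks of at most max_length chars; neighbours share `overlap` chars."""
    if max_length <= 0:
        raise ValueError("max_length must be positive")
    if not 0 <= overlap < max_length:
        raise ValueError("overlap must be in [0, max_length)")
    step = max_length - overlap
    start = 0
    while start < len(content):
        end = min(start + max_length, len(content))
        yield content[start:end]
        if end == len(content):
            break  # last chunk reached; don't yield a tail made only of overlap
        start += step
```

Requiring `overlap < max_length` guarantees the window always advances, so the loop cannot stall.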

@@ -94,8 +93,8 @@ def read_file(filename: str) -> str:
Returns:
str: The contents of the file
"""
filepath = path_in_workspace(filename)
try:
filepath = path_in_workspace(filename)
with open(filepath, "r", encoding="utf-8") as f:
content = f.read()
return content

@@ -240,7 +239,7 @@ def search_files(directory: str) -> list[str]:

@command(
"download_file",
"Search Files",
"Download File",
'"url": "<url>", "filename": "<filename>"',
CFG.allow_downloads,
"Error: You do not have user authorization to download files locally.",

@@ -10,20 +10,21 @@ CFG = Config()

@command(
"clone_repository",
"Clone Repositoryy",
"Clone Repository",
'"repository_url": "<repository_url>", "clone_path": "<clone_path>"',
CFG.github_username and CFG.github_api_key,
"Configure github_username and github_api_key.",
)
def clone_repository(repository_url: str, clone_path: str) -> str:
"""Clone a GitHub repository locally
"""Clone a GitHub repository locally.

Args:
repository_url (str): The URL of the repository to clone
clone_path (str): The path to clone the repository to
repository_url (str): The URL of the repository to clone.
clone_path (str): The path to clone the repository to.

Returns:
str: The result of the clone operation"""
str: The result of the clone operation.
"""
split_url = repository_url.split("//")
auth_repo_url = f"//{CFG.github_username}:{CFG.github_api_key}@".join(split_url)
safe_clone_path = path_in_workspace(clone_path)
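`clone_repository` puts the GitHub credentials into the clone URL by splitting on `//` and joining the pieces back together. This turns `https://github.com/owner/repo.git` into `https://<username>:<token>@github.com/owner/repo.git`. Because every `//` is a split point, any later `//` in the URL would also get a copy of the credentials. The sketch below performs the same transformation with `urllib.parse` and percent-encodes the credentials. `build_auth_url` is a hypothetical helper for illustration, not code from this commit. A URL that contains a token should never be logged.

```python
from urllib.parse import quote, urlsplit, urlunsplit


def build_auth_url(repository_url: str, username: str, token: str) -> str:
    """Return repository_url with percent-encoded credentials in its netloc."""
    parts = urlsplit(repository_url)
    if parts.scheme not in ("http", "https") or not parts.hostname:
        raise ValueError(f"expected an http(s) URL, got {repository_url!r}")
    host = parts.hostname
    if parts.port is not None:
        host = f"{host}:{parts.port}"
    # quote(..., safe="") also encodes ":" and "@", which would otherwise break the netloc.
    netloc = f"{quote(username, safe='')}:{quote(token, safe='')}@{host}"
    return urlunsplit((parts.scheme, netloc, parts.path, parts.query, parts.fragment))
```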

@@ -17,14 +17,14 @@ def improve_code(suggestions: list[str], code: str) -> str:
chat completion api call.

Parameters:
suggestions (List): A list of suggestions around what needs to be improved.
suggestions (list): A list of suggestions around what needs to be improved.
code (str): Code to be improved.
Returns:
A result string from create chat completion. Improved code in response.
"""

function_string = (
"def generate_improved_code(suggestions: List[str], code: str) -> str:"
"def generate_improved_code(suggestions: list[str], code: str) -> str:"
)
args = [json.dumps(suggestions), code]
description_string = (

@@ -5,9 +5,11 @@ A module that contains the AIConfig class object that contains the configuration
from __future__ import annotations

import os
import platform
from pathlib import Path
from typing import Optional, Type

import distro
import yaml

from autogpt.prompts.generator import PromptGenerator

@@ -130,6 +132,17 @@ class AIConfig:
continue
prompt_generator = plugin.post_prompt(prompt_generator)

if cfg.execute_local_commands:
# add OS info to prompt
os_name = platform.system()
os_info = (
platform.platform(terse=True)
if os_name != "Linux"
else distro.name(pretty=True)
)

prompt_start += f"\nThe OS you are running on is: {os_info}"

# Construct full prompt
full_prompt = f"You are {prompt_generator.name}, {prompt_generator.role}\n{prompt_start}\n\nGOALS:\n\n"
for i, goal in enumerate(self.ai_goals):
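The new `execute_local_commands` branch adds the operating system to the prompt. It uses `platform.platform(terse=True)` on every platform except Linux, where `distro.name(pretty=True)` reports the distribution name instead. A standalone sketch of that logic follows. The helper names are hypothetical, and two fallbacks are additions of this sketch only:

- use `platform` when the third-party `distro` package is missing (the commit itself adds `distro==1.8.0` to `requirements.txt`);
- use `platform` when `distro` returns an empty name.

```python
import platform


def describe_os() -> str:
    """Return a short OS description, mirroring the logic added in this commit."""
    if platform.system() == "Linux":
        try:
            import distro  # third-party package
        except ImportError:
            pass  # assumption of this sketch: fall back to the stdlib description
        else:
            # Fallback for an empty name is also an assumption of this sketch.
            return distro.name(pretty=True) or platform.platform(terse=True)
    return platform.platform(terse=True)


def add_os_info(prompt_start: str, execute_local_commands: bool) -> str:
    """Append the OS line only when local command execution is enabled."""
    if not execute_local_commands:
        return prompt_start
    return prompt_start + f"\nThe OS you are running on is: {describe_os()}"
```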

@@ -10,7 +10,7 @@ from dotenv import load_dotenv

from autogpt.config.singleton import Singleton

load_dotenv(verbose=True)
load_dotenv(verbose=True, override=True)


class Config(metaclass=Singleton):
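Changing `load_dotenv(verbose=True)` to `load_dotenv(verbose=True, override=True)` changes which value wins. By default python-dotenv leaves variables that already exist in the process environment untouched, while `override=True` lets the values in `.env` replace them. The toy loader below reproduces only that precedence rule, for simple `KEY=value` lines. It is a hypothetical illustration, not python-dotenv, which also handles `export` prefixes, escapes, variable expansion and more.

```python
import os
from collections.abc import MutableMapping
from typing import Optional


def load_env_text(
    text: str,
    override: bool = False,
    environ: Optional[MutableMapping[str, str]] = None,
) -> dict[str, str]:
    """Apply simple KEY=value lines to environ, honouring `override` like load_dotenv."""
    env = os.environ if environ is None else environ
    parsed: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, comments and malformed lines
        key, _, value = line.partition("=")
        key, value = key.strip(), value.strip()
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]  # drop one pair of surrounding quotes
        parsed[key] = value
        # Existing variables win unless override=True.
        if override or key not in env:
            env[key] = value
    return parsed
```

Without `override`, a stale `OPENAI_API_KEY` exported in the shell would silently take precedence over the key in `.env`. The commit's change makes `.env` authoritative.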

@@ -174,11 +174,8 @@ class Config(metaclass=Singleton):
Returns:
None
"""
try:
with open(config_file) as file:
config_params = yaml.load(file, Loader=yaml.FullLoader)
except FileNotFoundError:
config_params = {}
with open(config_file) as file:
config_params = yaml.load(file, Loader=yaml.FullLoader)
self.openai_api_type = config_params.get("azure_api_type") or "azure"
self.openai_api_base = config_params.get("azure_api_base") or ""
self.openai_api_version = (

@@ -112,6 +112,9 @@ def create_config(
CFG.ai_settings_file = file
CFG.skip_reprompt = True

if browser_name:
CFG.selenium_web_browser = browser_name

if allow_downloads:
logger.typewriter_log("Native Downloading:", Fore.GREEN, "ENABLED")
logger.typewriter_log(

@@ -129,6 +132,3 @@ def create_config(

if skip_news:
CFG.skip_news = True

if browser_name:
CFG.selenium_web_browser = browser_name

@@ -5,6 +5,12 @@ import yaml
from colorama import Fore
from git.repo import Repo

# Use readline if available (for clean_input)
try:
import readline
except:
pass


def clean_input(prompt: str = ""):
try:

@@ -10,8 +10,8 @@ services:
env_file:
- .env
volumes:
- "./autogpt:/app"
- ".env:/app/.env"
- "./autogpt:/home/appuser"
- ".env:/home/appuser/.env"
profiles: ["exclude-from-up"]

redis:

@@ -1,5 +1,6 @@
beautifulsoup4>=4.12.2
colorama==0.4.6
distro==1.8.0
openai==0.27.2
playsound==1.2.2
python-dotenv==1.0.0

tests/unit/__init__.py (Normal file, 0 changes)

150

tests/unit/test_file_operations.py

Normal file

150

tests/unit/test_file_operations.py

Normal file

@@ -0,0 +1,150 @@
import os
import shutil
import unittest
from pathlib import Path

from autogpt.commands.file_operations import (
    LOG_FILE_PATH,
    append_to_file,
    check_duplicate_operation,
    delete_file,
    log_operation,
    read_file,
    search_files,
    split_file,
    write_to_file,
)
from autogpt.config import Config
from autogpt.workspace import path_in_workspace


class TestFileOperations(unittest.TestCase):
    """
    This set of unit tests is designed to test the file operations that autoGPT has access to.
    """

    def setUp(self):
        self.test_file = "test_file.txt"
        self.test_file2 = "test_file2.txt"
        self.test_directory = "test_directory"
        self.file_content = "This is a test file.\n"
        self.file_logger_logs = "file_logger.txt"

        with open(path_in_workspace(self.test_file), "w") as f:
            f.write(self.file_content)

        if os.path.exists(LOG_FILE_PATH):
            os.remove(LOG_FILE_PATH)

    def tearDown(self):
        if os.path.exists(path_in_workspace(self.test_file)):
            os.remove(path_in_workspace(self.test_file))

        if os.path.exists(self.test_directory):
            shutil.rmtree(self.test_directory)

    def test_check_duplicate_operation(self):
        log_operation("write", self.test_file)
        self.assertTrue(check_duplicate_operation("write", self.test_file))

    # Test logging a file operation
    def test_log_operation(self):
        if os.path.exists(self.file_logger_logs):
            os.remove(self.file_logger_logs)

        log_operation("log_test", self.test_file)
        with open(LOG_FILE_PATH, "r") as f:
            content = f.read()
        self.assertIn("log_test: test_file.txt", content)

    # Test splitting a file into chunks
    def test_split_file(self):
        content = "abcdefghij"
        chunks = list(split_file(content, max_length=4, overlap=1))
        expected = ["abcd", "defg", "ghij"]
        self.assertEqual(chunks, expected)

    def test_read_file(self):
        content = read_file(self.test_file)
        self.assertEqual(content, self.file_content)

    def test_write_to_file(self):
        new_content = "This is new content.\n"
        write_to_file(self.test_file, new_content)
        with open(path_in_workspace(self.test_file), "r") as f:
            content = f.read()
        self.assertEqual(content, new_content)

    def test_append_to_file(self):
        with open(path_in_workspace(self.test_file), "r") as f:
            content_before = f.read()

        append_text = "This is appended text.\n"
        append_to_file(self.test_file, append_text)
        with open(path_in_workspace(self.test_file), "r") as f:
            content = f.read()

        self.assertEqual(content, content_before + append_text)

    def test_delete_file(self):
        delete_file(self.test_file)
        self.assertFalse(os.path.exists(path_in_workspace(self.test_file)))

    def test_search_files(self):
        # Case 1: Create files A and B, search for A, and ensure we don't return A and B
        file_a = "file_a.txt"
        file_b = "file_b.txt"

        with open(path_in_workspace(file_a), "w") as f:
            f.write("This is file A.")

        with open(path_in_workspace(file_b), "w") as f:
            f.write("This is file B.")

        # Create a subdirectory and place a copy of file_a in it
        if not os.path.exists(path_in_workspace(self.test_directory)):
            os.makedirs(path_in_workspace(self.test_directory))

        with open(
            path_in_workspace(os.path.join(self.test_directory, file_a)), "w"
        ) as f:
            f.write("This is file A in the subdirectory.")

        files = search_files(path_in_workspace(""))
        self.assertIn(file_a, files)
        self.assertIn(file_b, files)
        self.assertIn(os.path.join(self.test_directory, file_a), files)

        # Clean up
        os.remove(path_in_workspace(file_a))
        os.remove(path_in_workspace(file_b))
        os.remove(path_in_workspace(os.path.join(self.test_directory, file_a)))
        os.rmdir(path_in_workspace(self.test_directory))

        # Case 2: Search for a file that does not exist and make sure we don't throw
        non_existent_file = "non_existent_file.txt"
        files = search_files("")
        self.assertNotIn(non_existent_file, files)

    # Test to ensure we cannot read files out of workspace
    def test_restrict_workspace(self):
        CFG = Config()
        with open(self.test_file2, "w+") as f:
            f.write("test text")

        CFG.restrict_to_workspace = True

        # Get the absolute path of self.test_file2
        test_file2_abs_path = os.path.abspath(self.test_file2)

        with self.assertRaises(ValueError):
            read_file(test_file2_abs_path)

        CFG.restrict_to_workspace = False
        read_file(test_file2_abs_path)

        os.remove(test_file2_abs_path)


if __name__ == "__main__":
    unittest.main()
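
`test_split_file` pins down the overlap semantics: with `max_length=4` and `overlap=1`, consecutive chunks of `"abcdefghij"` share one character, so each chunk starts `max_length - overlap` characters after the previous one. A minimal generator that meets that expectation is sketched below. `split_text` is a hypothetical name, and this is not necessarily how `autogpt.commands.file_operations.split_file` is implemented.

```python
from typing import Iterator


def split_text(content: str, max_length: int, overlap: int = 0) -> Iterator[str]:
    """Hypothetical chunker: yield windows of at most max_length characters,
    each repeating the last `overlap` characters of the previous window."""
    if max_length <= 0:
        raise ValueError("max_length must be positive")
    if not 0 <= overlap < max_length:
        raise ValueError("overlap must satisfy 0 <= overlap < max_length")

    step = max_length - overlap  # how far the window start advances each time
    start = 0
    while start < len(content):
        end = start + max_length
        yield content[start:end]
        if end >= len(content):
            # This window already reached the end of the text; stop so we do
            # not emit a trailing chunk made up entirely of overlap.
            break
        start += step
```

For example, `list(split_text("abcdefghij", max_length=4, overlap=1))` gives `["abcd", "defg", "ghij"]`, the list `test_split_file` expects. The early `break` matters: without it the loop would also yield `"j"`, which is pure overlap.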

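
`test_restrict_workspace` expects `read_file` to raise `ValueError` for an absolute path outside the workspace when `restrict_to_workspace` is enabled. One common way to enforce such a rule is to resolve the requested path against the workspace root and reject anything that does not land underneath it. The sketch below is a hypothetical illustration of that idea: `resolve_in_workspace` and its `restrict` flag are not taken from `autogpt.workspace`, and `Path.is_relative_to` requires Python 3.9 or later.

```python
from pathlib import Path


def resolve_in_workspace(workspace_root, requested, restrict: bool = True) -> Path:
    """Hypothetical helper: map a requested path onto the workspace.

    Joining an absolute `requested` path discards `workspace_root` entirely,
    and ".." segments can climb out of it, so the joined path is resolved
    first and only then compared against the resolved root.
    """
    root = Path(workspace_root).resolve()
    candidate = (root / requested).resolve()
    if restrict and not candidate.is_relative_to(root):
        raise ValueError(f"Refusing to access {candidate}: outside workspace {root}")
    return candidate
```

With `restrict=True`, both an absolute path elsewhere on disk and a relative path such as `../secret.txt` raise `ValueError`; with `restrict=False` the resolved path is returned as-is, mirroring the two halves of the test.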
