Mirror of https://github.com/invoke-ai/InvokeAI.git

Synced 2026-01-29 21:27:59 -05:00

Compare commits: feat/metad ... psychedeli (64 commits)

| SHA1 |
|---|
| 75624e9158 |
| a2613948d8 |
| f8392b2f78 |
| 358116bc22 |
| 1e3590111d |
| 063b800280 |
| 3935bf92c8 |
| 066e09b517 |
| 869b4a8d49 |
| 13919ff300 |
| 634e5652ef |
| 9bdc718df5 |
| 73ca8ccdb3 |
| f37ffda966 |
| 5a9777d443 |
| 8072c05ee0 |
| 75ff4f4ca3 |
| 30df123221 |
| 06193ddbe8 |
| ce5122f87c |
| 43ebd68313 |
| ec19fcafb1 |
| 6fcc7d4c4b |
| 912087e4dc |
| 593fb95213 |
| 6d821b32d3 |
| 297f96c16b |
| 0e53b27655 |
| 35ae9f6e71 |
| a1d9e6b871 |
| f05379f965 |
| e34e6d6e80 |
| 86cb53342a |
| e3de996525 |
| 25a71a1791 |
| d16583ad1c |
| 46db1dd18f |
| 4c9344b0ee |
| cba31efd78 |
| 4d01b5c0f2 |
| e02af8f518 |
| c485cf568b |
| 51451cbf21 |
| 0363a06963 |
| cc280cbef1 |
| 7544eadd48 |
| 7d683b4db6 |
| 60b3c6a201 |
| 88c8cb61f0 |
| 43fbac26df |
| 627444e17c |
| 5601858f4f |
| b5e1ba34b3 |
| 58aa159a50 |
| d8f7c19030 |
| 24132a7950 |
| 45d172d5a8 |
| 3cb6d333f6 |
| 4570702dd0 |
| 1d107f30e5 |
| 79084e9e20 |
| fc9b4539a3 |
| 09ef57718e |
| cab8239ba8 |

````text
@@ -296,8 +296,18 @@ code for InvokeAI. For this to work, you will need to install the
on your system, please see the [Git Installation
Guide](https://github.com/git-guides/install-git)

You will also need to install the [frontend development toolchain](https://github.com/invoke-ai/InvokeAI/blob/main/docs/contributing/contribution_guides/contributingToFrontend.md).

If you have a "normal" installation, you should create a totally separate virtual environment for the git-based installation, else the two may interfere.

> **Why do I need the frontend toolchain**?
>
> The InvokeAI project uses trunk-based development. That means our `main` branch is the development branch, and releases are tags on that branch. Because development is very active, we don't keep an updated build of the UI in `main` - we only build it for production releases.
>
> That means that between releases, to have a functioning application when running directly from the repo, you will need to run the UI in dev mode or build it regularly (any time the UI code changes).

1. Create a fork of the InvokeAI repository through the GitHub UI or [this link](https://github.com/invoke-ai/InvokeAI/fork)
1. From the command line, run this command:
2. From the command line, run this command:
```bash
git clone https://github.com/<your_github_username>/InvokeAI.git
```
````

```text
@@ -305,10 +315,10 @@ Guide](https://github.com/git-guides/install-git)
This will create a directory named `InvokeAI` and populate it with the
full source code from your fork of the InvokeAI repository.

2. Activate the InvokeAI virtual environment as per step (4) of the manual
3. Activate the InvokeAI virtual environment as per step (4) of the manual
installation protocol (important!)

3. Enter the InvokeAI repository directory and run one of these
4. Enter the InvokeAI repository directory and run one of these
commands, based on your GPU:

=== "CUDA (NVidia)"
```

```text
@@ -334,11 +344,15 @@ installation protocol (important!)
Be sure to pass `-e` (for an editable install) and don't forget the
dot ("."). It is part of the command.

You can now run `invokeai` and its related commands. The code will be
5. Install the [frontend toolchain](https://github.com/invoke-ai/InvokeAI/blob/main/docs/contributing/contribution_guides/contributingToFrontend.md) and do a production build of the UI as described.

6. You can now run `invokeai` and its related commands. The code will be
read from the repository, so that you can edit the .py source files
and watch the code's behavior change.

4. If you wish to contribute to the InvokeAI project, you are
When you pull in new changes to the repo, be sure to re-build the UI.

7. If you wish to contribute to the InvokeAI project, you are
encouraged to establish a GitHub account and "fork"
https://github.com/invoke-ai/InvokeAI into your own copy of the
repository. You can then use GitHub functions to create and submit

```

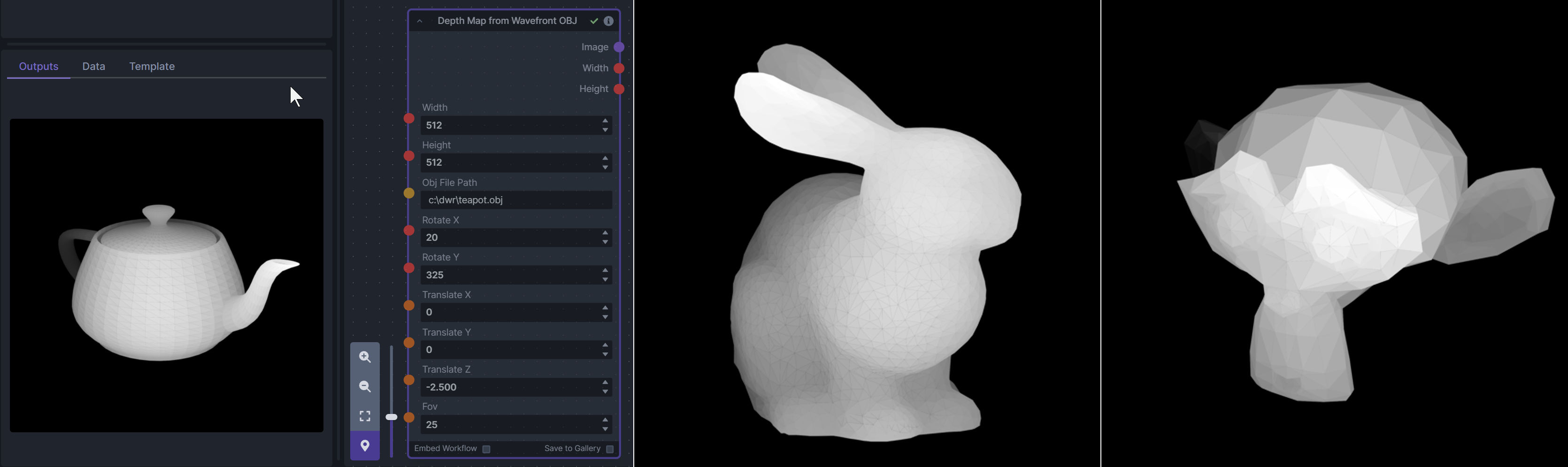

@@ -121,18 +121,6 @@ To be imported, an .obj must use triangulated meshes, so make sure to enable tha

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

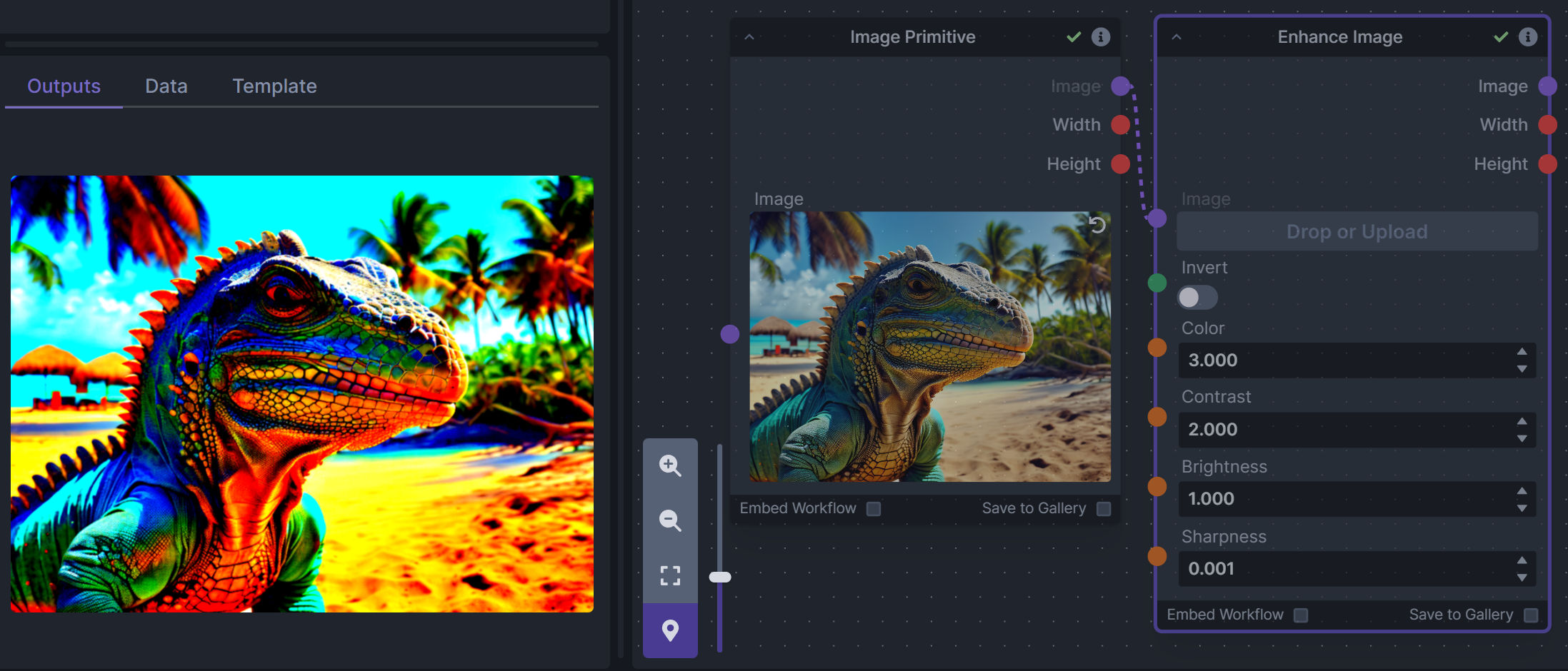

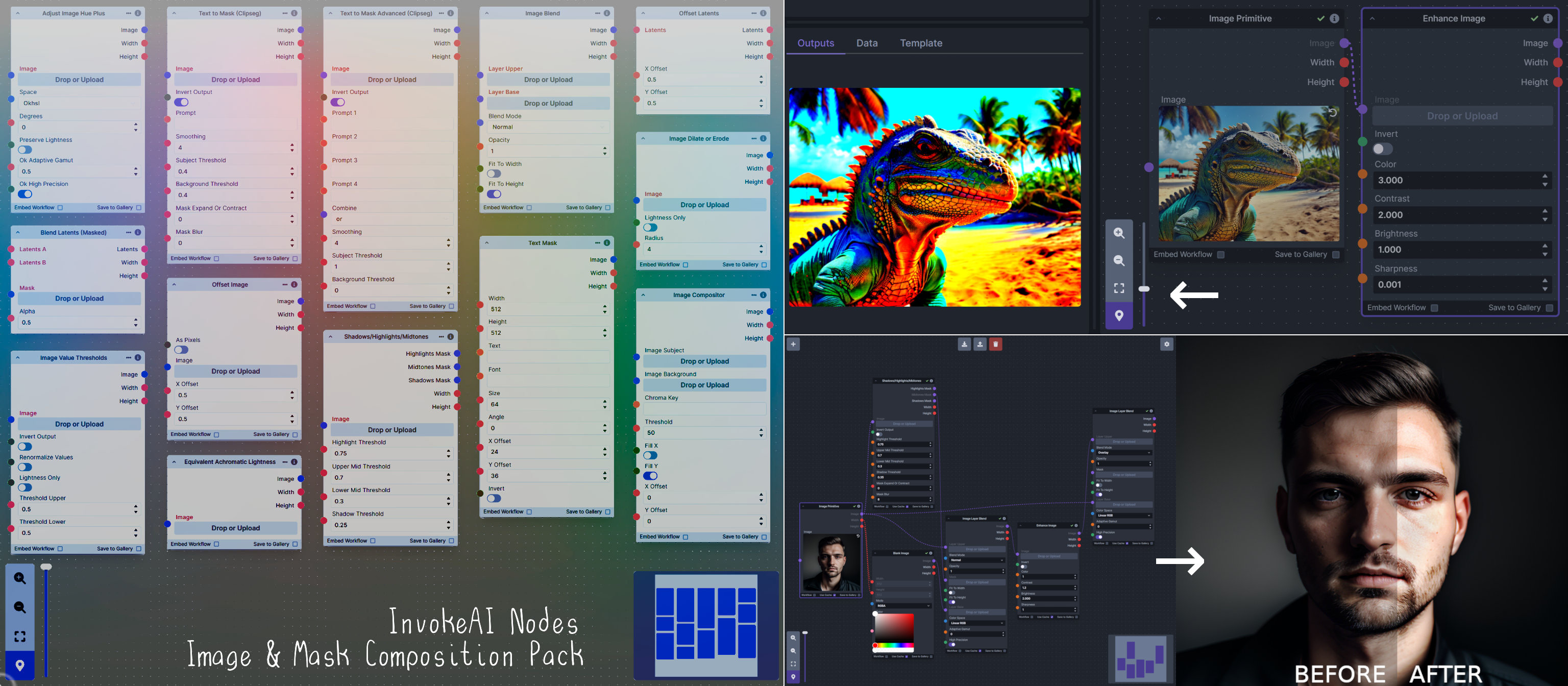

### Enhance Image (simple adjustments)

|

||||

|

||||

**Description:** Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

|

||||

Color inversion is toggled with a simple switch, while each of the four enhancer modes are activated by entering a value other than 1 in each corresponding input field. Values less than 1 will reduce the corresponding property, while values greater than 1 will enhance it.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/image-enhance-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Generative Grammar-Based Prompt Nodes

|

||||

|

||||
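The Enhance Image description above says each enhancer is active only for a value other than 1. That matches how Pillow's `ImageEnhance` classes behave: `enhance(factor)` blends a "degenerate" version of the image with the original. A factor of 1.0 returns the original, 0.0 returns the degenerate image, and values above 1 extrapolate past the original.

The sketch below is not the node's code. It reproduces the blend on a flat list of 8-bit grayscale values. It assumes the degenerate image is black for brightness and a uniform gray at the mean for contrast, which approximates Pillow's behaviour; exact rounding may differ.

```python
from typing import List


def _blend(degenerate: List[int], original: List[int], factor: float) -> List[int]:
    """out = degenerate * (1 - factor) + original * factor, clamped to 0..255."""
    out = []
    for d, o in zip(degenerate, original):
        value = d * (1.0 - factor) + o * factor
        out.append(max(0, min(255, int(round(value)))))
    return out


def brightness(pixels: List[int], factor: float) -> List[int]:
    # Degenerate image for brightness: all black.
    return _blend([0] * len(pixels), pixels, factor)


def contrast(pixels: List[int], factor: float) -> List[int]:
    # Degenerate image for contrast: uniform gray at the (rounded) mean value.
    mean = int(sum(pixels) / len(pixels) + 0.5)
    return _blend([mean] * len(pixels), pixels, factor)


def invert(pixels: List[int]) -> List[int]:
    # Inversion is an on/off switch rather than a blend factor.
    return [255 - p for p in pixels]
```

For example, `brightness([10, 100, 200], 1.0)` returns its input unchanged. That identity is why a field left at 1 behaves as "off".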

@@ -153,16 +141,26 @@ This includes 3 Nodes:

|

||||

|

||||

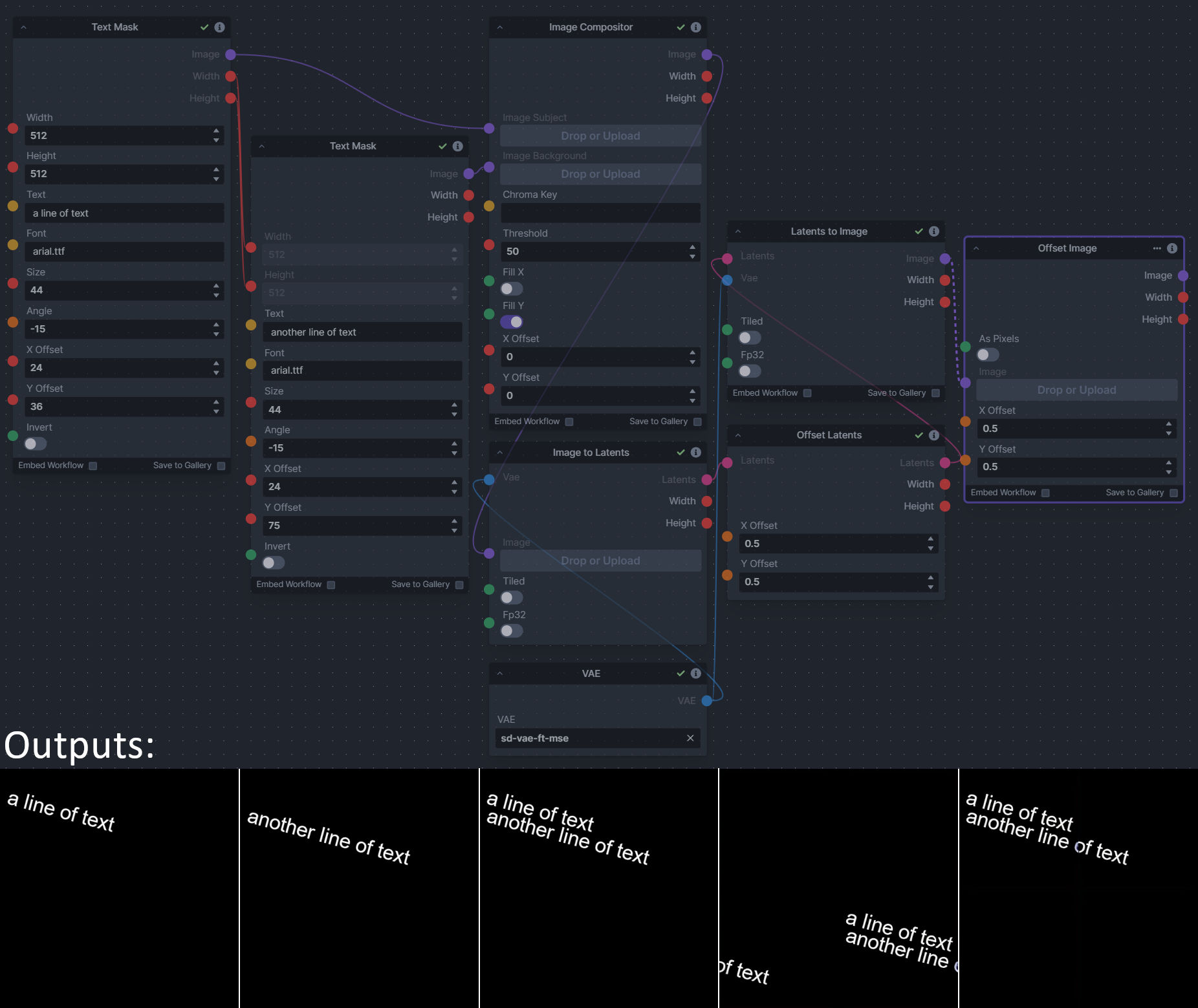

**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so these can be used in conjunction with the Seamless node to progressively generate centered on different parts of the seamless tiling.

|

||||

|

||||

This includes 4 Nodes:

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

This includes 14 Nodes:

|

||||

- *Adjust Image Hue Plus* - Rotate the hue of an image in one of several different color spaces.

|

||||

- *Blend Latents/Noise (Masked)* - Use a mask to blend part of one latents tensor [including Noise outputs] into another. Can be used to "renoise" sections during a multi-stage [masked] denoising process.

|

||||

- *Enhance Image* - Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

- *Equivalent Achromatic Lightness* - Calculates image lightness accounting for Helmholtz-Kohlrausch effect based on a method described by High, Green, and Nussbaum (2023).

|

||||

- *Text to Mask (Clipseg)* - Input a prompt and an image to generate a mask representing areas of the image matched by the prompt.

|

||||

- *Text to Mask Advanced (Clipseg)* - Output up to four prompt masks combined with logical "and", logical "or", or as separate channels of an RGBA image.

|

||||

- *Image Layer Blend* - Perform a layered blend of two images using alpha compositing. Opacity of top layer is selectable, with optional mask and several different blend modes/color spaces.

|

||||

- *Image Compositor* - Take a subject from an image with a flat backdrop and layer it on another image using a chroma key or flood select background removal.

|

||||

- *Image Dilate or Erode* - Dilate or expand a mask (or any image!). This is equivalent to an expand/contract operation.

|

||||

- *Image Value Thresholds* - Clip an image to pure black/white beyond specified thresholds.

|

||||

- *Offset Latents* - Offset a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.

|

||||

- *Offset Image* - Offset an image in the vertical and/or horizontal dimensions, wrapping it around.

|

||||

- *Shadows/Highlights/Midtones* - Extract three masks (with adjustable hard or soft thresholds) representing shadows, midtones, and highlights regions of an image.

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/composition-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

**Nodes and Output Examples:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Size Stepper Nodes

|

||||

|

||||
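The composition pack describes its Offset Image and Offset Latents nodes as wrapping around. Content pushed off one edge reappears on the opposite edge, which is what keeps an offset seamless tile seamless.

Below is a sketch of that wrap-around shift on a nested list. It is the same operation as `numpy.roll(grid, shift=(dy, dx), axis=(0, 1))`, and a latents tensor could be shifted the same way along its spatial dimensions with `torch.roll`. It is not the pack's implementation.

```python
from typing import List, TypeVar

T = TypeVar("T")


def offset_wrap(grid: List[List[T]], dx: int, dy: int) -> List[List[T]]:
    """Shift a 2D grid right by dx and down by dy, wrapping at the edges.

    Negative values shift left/up; Python's % keeps the indices in range.
    """
    height = len(grid)
    width = len(grid[0]) if height else 0
    return [
        [grid[(y - dy) % height][(x - dx) % width] for x in range(width)]
        for y in range(height)
    ]
```

Shifting by the full width or height returns the original grid. This is why repeated offsets can be used to centre generation on different parts of the tiling without creating a seam.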

```text
@@ -49,7 +49,7 @@ def check_internet() -> bool:
return False


logger = InvokeAILogger.getLogger()
logger = InvokeAILogger.get_logger()


class ApiDependencies:

```

```text
@@ -45,17 +45,13 @@ async def upload_image(
if not file.content_type.startswith("image"):
raise HTTPException(status_code=415, detail="Not an image")

metadata: Optional[str] = None
workflow: Optional[str] = None

contents = await file.read()

try:
pil_image = Image.open(io.BytesIO(contents))
if crop_visible:
bbox = pil_image.getbbox()
pil_image = pil_image.crop(bbox)
metadata = pil_image.info.get("invokeai_metadata", None)
workflow = pil_image.info.get("invokeai_workflow", None)
except Exception:
# Error opening the image
raise HTTPException(status_code=415, detail="Failed to read image")
```
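When `crop_visible` is set, the upload hunk above crops the image to `pil_image.getbbox()`, the bounding box of its non-zero region. The box uses Pillow's `(left, upper, right, lower)` convention, with exclusive right and lower edges, and is `None` for an image with nothing in it.

The sketch below reproduces that crop with the standard library on an alpha channel stored as nested lists. It simplifies by assuming visibility is decided by alpha alone. `visible_bbox` and `crop` are illustrative names, not project functions.

```python
from typing import List, Optional, Tuple

Box = Tuple[int, int, int, int]


def visible_bbox(alpha: List[List[int]]) -> Optional[Box]:
    """Bounding box (left, upper, right, lower) of non-zero alpha, or None."""
    rows = [y for y, row in enumerate(alpha) if any(row)]
    if not rows:
        return None  # fully transparent: nothing visible to keep
    width = len(alpha[0])
    cols = [x for x in range(width) if any(row[x] for row in alpha)]
    # Right and lower edges are exclusive, as in Pillow boxes.
    return (cols[0], rows[0], cols[-1] + 1, rows[-1] + 1)


def crop(grid: List[List[int]], box: Box) -> List[List[int]]:
    """Return the sub-grid covered by box."""
    left, upper, right, lower = box
    return [row[left:right] for row in grid[upper:lower]]
```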

```text
@@ -67,8 +63,6 @@ async def upload_image(
image_category=image_category,
session_id=session_id,
board_id=board_id,
metadata=metadata,
workflow=workflow,
is_intermediate=is_intermediate,
)


```

```text
@@ -146,7 +146,8 @@ async def update_model(
async def import_model(
location: str = Body(description="A model path, repo_id or URL to import"),
prediction_type: Optional[Literal["v_prediction", "epsilon", "sample"]] = Body(
description="Prediction type for SDv2 checkpoint files", default="v_prediction"
description="Prediction type for SDv2 checkpoints and rare SDv1 checkpoints",
default=None,
),
) -> ImportModelResponse:
"""Add a model using its local path, repo_id, or remote URL. Model characteristics will be probed and configured automatically"""

```

```text
@@ -8,7 +8,6 @@ app_config.parse_args()

if True: # hack to make flake8 happy with imports coming after setting up the config
import asyncio
import logging
import mimetypes
import socket
from inspect import signature
```

```text
@@ -41,7 +40,9 @@ if True: # hack to make flake8 happy with imports coming after setting up the c
import invokeai.backend.util.mps_fixes # noqa: F401 (monkeypatching on import)


logger = InvokeAILogger.getLogger(config=app_config)
app_config = InvokeAIAppConfig.get_config()
app_config.parse_args()
logger = InvokeAILogger.get_logger(config=app_config)

# fix for windows mimetypes registry entries being borked
# see https://github.com/invoke-ai/InvokeAI/discussions/3684#discussioncomment-6391352
```
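The hunk above ends at the comment about broken Windows mimetypes registry entries; the lines that apply the fix are cut off. A common fix of this kind, assumed here rather than shown by the diff, registers the needed types explicitly with the standard library's `mimetypes.add_type`. A bad registry value then cannot make the server send the UI's JavaScript or CSS with the wrong `Content-Type`:

```python
import mimetypes

# Explicit registrations override whatever mimetypes.init() picked up from the
# Windows registry (or the system's mime.types files) for these extensions.
mimetypes.add_type("application/javascript", ".js")
mimetypes.add_type("text/css", ".css")

# guess_type() now returns the registered types for these extensions.
js_type, _ = mimetypes.guess_type("index.js")
css_type, _ = mimetypes.guess_type("style.css")
```

Registering at startup, before the static-file server handles any request, makes the override apply to every response.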

```text
@@ -223,7 +224,7 @@ def invoke_api():
exc_info=e,
)
else:
jurigged.watch(logger=InvokeAILogger.getLogger(name="jurigged").info)
jurigged.watch(logger=InvokeAILogger.get_logger(name="jurigged").info)

port = find_port(app_config.port)
if port != app_config.port:
```
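The hunk above calls `find_port(app_config.port)` and branches when the returned port differs from the configured one; the helper's body is not part of this diff. A minimal stdlib sketch of such a helper follows. It assumes "in use" means "something on localhost already accepts connections on that port". That probe is cheap, but it does not guarantee that a later `bind()` will succeed.

```python
import socket


def find_port(port: int, host: str = "127.0.0.1", max_tries: int = 100) -> int:
    """Return the first port >= `port` on which nothing accepts connections.

    Hypothetical helper for illustration: the name matches the call in the
    diff, but this is not the project's implementation.
    """
    for candidate in range(port, min(port + max_tries, 65536)):
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as probe:
            # connect_ex returns 0 when a server is already listening there.
            if probe.connect_ex((host, candidate)) != 0:
                return candidate
    raise RuntimeError(f"no free port found starting at {port}")
```

An iterative loop with an upper bound avoids unbounded recursion when many consecutive ports are busy.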

```text
@@ -242,7 +243,7 @@ def invoke_api():

# replace uvicorn's loggers with InvokeAI's for consistent appearance
for logname in ["uvicorn.access", "uvicorn"]:
log = logging.getLogger(logname)
log = InvokeAILogger.get_logger(logname)
log.handlers.clear()
for ch in logger.handlers:
log.addHandler(ch)

```
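The hunk above makes uvicorn's `uvicorn` and `uvicorn.access` loggers reuse the application logger's handlers, so all console output shares one format. One side of the diff fetches those loggers with `logging.getLogger`, the other with `InvokeAILogger.get_logger`.

The same pattern is sketched below with only the standard `logging` module. The `example_app` logger, the in-memory stream and the format string are illustrative assumptions.

```python
import io
import logging
from typing import Iterable


def adopt_handlers(app_logger: logging.Logger, names: Iterable[str] = ("uvicorn.access", "uvicorn")) -> None:
    """Replace the handlers of each named logger with app_logger's handlers."""
    for name in names:
        log = logging.getLogger(name)
        log.handlers.clear()  # drop the library's own handlers
        for handler in app_logger.handlers:
            log.addHandler(handler)  # share, not copy, the app's handlers


# Illustrative application logger writing to an in-memory stream.
stream = io.StringIO()
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("[app] %(name)s: %(message)s"))
app_logger = logging.getLogger("example_app")
app_logger.addHandler(handler)

adopt_handlers(app_logger)
logging.getLogger("uvicorn").warning("server started")
```

Because the handler objects are shared rather than copied, a later change to the application handler's formatter or level also applies to uvicorn's output.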

```text
@@ -7,8 +7,6 @@ from .services.config import InvokeAIAppConfig
# parse_args() must be called before any other imports. if it is not called first, consumers of the config
# which are imported/used before parse_args() is called will get the default config values instead of the
# values from the command line or config file.
config = InvokeAIAppConfig.get_config()
config.parse_args()

if True: # hack to make flake8 happy with imports coming after setting up the config
import argparse
```
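The comment in the hunk above explains why `parse_args()` runs before the remaining imports: code that reads the shared config before parsing sees default values. The stdlib illustration below uses a hypothetical `AppConfig` class and `--port` option as stand-ins for `InvokeAIAppConfig`; they are not its real API.

```python
import argparse
from typing import List, Optional


class AppConfig:
    """Hypothetical process-wide config singleton."""

    _instance: Optional["AppConfig"] = None

    def __init__(self) -> None:
        self.port = 9090  # default, used until arguments are parsed

    @classmethod
    def get_config(cls) -> "AppConfig":
        # Every caller shares one instance, as with get_config() in the diff.
        if cls._instance is None:
            cls._instance = cls()
        return cls._instance

    def parse_args(self, argv: Optional[List[str]] = None) -> None:
        parser = argparse.ArgumentParser()
        parser.add_argument("--port", type=int, default=self.port)
        self.port = parser.parse_args(argv).port


# A consumer that copies a value before parse_args() keeps the default...
port_read_too_early = AppConfig.get_config().port
AppConfig.get_config().parse_args(["--port", "8080"])
# ...while a read after parsing sees the command-line value.
port_read_after_parsing = AppConfig.get_config().port
```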

```text
@@ -61,8 +59,9 @@ if True: # hack to make flake8 happy with imports coming after setting up the c
if torch.backends.mps.is_available():
import invokeai.backend.util.mps_fixes # noqa: F401 (monkeypatching on import)


logger = InvokeAILogger().getLogger(config=config)
config = InvokeAIAppConfig.get_config()
config.parse_args()
logger = InvokeAILogger().get_logger(config=config)


class CliCommand(BaseModel):

```

```text
@@ -71,12 +71,7 @@ class FieldDescriptions:
denoised_latents = "Denoised latents tensor"
latents = "Latents tensor"
strength = "Strength of denoising (proportional to steps)"
metadata = "Optional metadata to be saved with the image"
metadata_dict_collection = "Collection of MetadataDicts"
metadata_item_polymorphic = "A single metadata item or collection of metadata items"
metadata_item_label = "Label for this metadata item"
metadata_item_value = "The value for this metadata item (may be any type)"
workflow = "Optional workflow to be saved with the image"
core_metadata = "Optional core metadata to be written to image"
interp_mode = "Interpolation mode"
torch_antialias = "Whether or not to apply antialiasing (bilinear or bicubic only)"
fp32 = "Whether or not to use full float32 precision"
```

```text
@@ -180,12 +175,8 @@ class UIType(str, Enum):
Scheduler = "Scheduler"
WorkflowField = "WorkflowField"
IsIntermediate = "IsIntermediate"
MetadataField = "MetadataField"
BoardField = "BoardField"
Any = "Any"
MetadataItem = "MetadataItem"
MetadataItemCollection = "MetadataItemCollection"
MetadataItemPolymorphic = "MetadataItemPolymorphic"
MetadataDict = "MetadataDict"
# endregion


```

```text
@@ -631,8 +622,23 @@ class BaseInvocation(ABC, BaseModel):
is_intermediate: bool = InputField(
default=False, description="Whether or not this is an intermediate invocation.", ui_type=UIType.IsIntermediate
)
workflow: Optional[str] = InputField(
default=None,
description="The workflow to save with the image",
ui_type=UIType.WorkflowField,
)
use_cache: bool = InputField(default=True, description="Whether or not to use the cache")

@validator("workflow", pre=True)
def validate_workflow_is_json(cls, v):
if v is None:
return None
try:
json.loads(v)
except json.decoder.JSONDecodeError:
raise ValueError("Workflow must be valid JSON")
return v

UIConfig: ClassVar[Type[UIConfigBase]]


```

```text
@@ -737,19 +743,3 @@ def invocation_output(
return cls

return wrapper


class WithWorkflow(BaseModel):
workflow: Optional[str] = InputField(
default=None, description=FieldDescriptions.workflow, ui_type=UIType.WorkflowField
)

@validator("workflow", pre=True)
def validate_workflow_is_json(cls, v):
if v is None:
return None
try:
json.loads(v)
except json.decoder.JSONDecodeError:
raise ValueError("Workflow must be valid JSON")
return v

```
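The same `validate_workflow_is_json` validator appears twice in this diff: once on `BaseInvocation` and once on the `WithWorkflow` mixin. Its logic does not depend on pydantic, so it can be checked on its own. The standalone function below mirrors the validator body for illustration and is not part of the codebase.

```python
import json
from typing import Optional


def validate_workflow_is_json(v: Optional[str]) -> Optional[str]:
    """Accept None or a string that parses as JSON; reject malformed JSON."""
    if v is None:
        return None
    try:
        json.loads(v)
    except json.JSONDecodeError:  # same class as json.decoder.JSONDecodeError
        raise ValueError("Workflow must be valid JSON") from None
    return v  # the original string is returned, not the parsed object
```

Only malformed JSON strings become a `ValueError`. A non-string value such as an `int` makes `json.loads` raise `TypeError` instead, and neither this sketch nor the validator in the diff catches it.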

```text
@@ -25,7 +25,6 @@ from controlnet_aux import (
from controlnet_aux.util import HWC3, ade_palette
from PIL import Image
from pydantic import BaseModel, Field, validator
from invokeai.app.invocations.metadata import WithMetadata

from invokeai.app.invocations.primitives import ImageField, ImageOutput

```

```text
@@ -39,7 +38,6 @@ from .baseinvocation import (
InputField,
InvocationContext,
OutputField,
WithWorkflow,
invocation,
invocation_output,
)
```

```text
@@ -129,7 +127,7 @@ class ControlNetInvocation(BaseInvocation):
@invocation(
"image_processor", title="Base Image Processor", tags=["controlnet"], category="controlnet", version="1.0.0"
)
class ImageProcessorInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class ImageProcessorInvocation(BaseInvocation):
"""Base class for invocations that preprocess images for ControlNet"""

image: ImageField = InputField(description="The image to process")
```

```text
@@ -152,7 +150,6 @@ class ImageProcessorInvocation(BaseInvocation, WithMetadata, WithWorkflow):
session_id=context.graph_execution_state_id,
node_id=self.id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)


```

```text
@@ -7,21 +7,13 @@ import cv2
import numpy
from PIL import Image, ImageChops, ImageFilter, ImageOps

from invokeai.app.invocations.metadata import WithMetadata
from invokeai.app.invocations.metadata import CoreMetadata
from invokeai.app.invocations.primitives import BoardField, ColorField, ImageField, ImageOutput
from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark
from invokeai.backend.image_util.safety_checker import SafetyChecker

from ..models.image import ImageCategory, ResourceOrigin
from .baseinvocation import (
BaseInvocation,
FieldDescriptions,
Input,
InputField,
InvocationContext,
WithWorkflow,
invocation,
)
from .baseinvocation import BaseInvocation, FieldDescriptions, Input, InputField, InvocationContext, invocation


@invocation("show_image", title="Show Image", tags=["image"], category="image", version="1.0.0")
```

```text
@@ -45,7 +37,7 @@ class ShowImageInvocation(BaseInvocation):


@invocation("blank_image", title="Blank Image", tags=["image"], category="image", version="1.0.0")
class BlankImageInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class BlankImageInvocation(BaseInvocation):
"""Creates a blank image and forwards it to the pipeline"""

width: int = InputField(default=512, description="The width of the image")
```

```text
@@ -63,7 +55,6 @@ class BlankImageInvocation(BaseInvocation, WithMetadata, WithWorkflow):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -75,7 +66,7 @@ class BlankImageInvocation(BaseInvocation, WithMetadata, WithWorkflow):


@invocation("img_crop", title="Crop Image", tags=["image", "crop"], category="image", version="1.0.0")
class ImageCropInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageCropInvocation(BaseInvocation):
"""Crops an image to a specified box. The box can be outside of the image."""

image: ImageField = InputField(description="The image to crop")
```
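The `ImageCropInvocation` docstring above says the crop box can lie partly outside the image. One way to get that behaviour is to start from a canvas of the requested size filled with a background value and copy in only the source pixels that exist. The sketch below does this on nested lists; the node's own body is not shown in this diff, so this is not taken from it.

```python
from typing import List


def crop_with_padding(
    grid: List[List[int]], x: int, y: int, width: int, height: int, fill: int = 0
) -> List[List[int]]:
    """Crop a width x height box whose top-left corner is (x, y).

    Positions outside the source grid are filled with `fill`, so the box may
    start at negative coordinates or extend past the right/bottom edges.
    """
    src_h = len(grid)
    src_w = len(grid[0]) if src_h else 0
    return [
        [
            grid[row][col] if 0 <= row < src_h and 0 <= col < src_w else fill
            for col in range(x, x + width)
        ]
        for row in range(y, y + height)
    ]
```

For RGBA images, a fully transparent fill keeps the padded area invisible when the result is composited later.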

```text
@@ -97,7 +88,6 @@ class ImageCropInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -109,7 +99,7 @@ class ImageCropInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("img_paste", title="Paste Image", tags=["image", "paste"], category="image", version="1.0.1")
class ImagePasteInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImagePasteInvocation(BaseInvocation):
"""Pastes an image into another image."""

base_image: ImageField = InputField(description="The base image")
```

```text
@@ -151,7 +141,6 @@ class ImagePasteInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -163,7 +152,7 @@ class ImagePasteInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("tomask", title="Mask from Alpha", tags=["image", "mask"], category="image", version="1.0.0")
class MaskFromAlphaInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class MaskFromAlphaInvocation(BaseInvocation):
"""Extracts the alpha channel of an image as a mask."""

image: ImageField = InputField(description="The image to create the mask from")
```

```text
@@ -183,7 +172,6 @@ class MaskFromAlphaInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -195,7 +183,7 @@ class MaskFromAlphaInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("img_mul", title="Multiply Images", tags=["image", "multiply"], category="image", version="1.0.0")
class ImageMultiplyInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageMultiplyInvocation(BaseInvocation):
"""Multiplies two images together using `PIL.ImageChops.multiply()`."""

image1: ImageField = InputField(description="The first image to multiply")
```
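`ImageMultiplyInvocation` delegates to `PIL.ImageChops.multiply()`, which Pillow documents as `out = image1 * image2 / MAX` per channel. Multiplying by white (255) leaves a pixel unchanged, and multiplying by black gives black.

The sketch below applies that formula per channel to 8-bit values. It assumes truncating integer division, so individual results may differ from Pillow's by one.

```python
from typing import List


def multiply(a: List[int], b: List[int]) -> List[int]:
    """Per-channel multiply blend of two equally sized lists of 8-bit values."""
    if len(a) != len(b):
        raise ValueError("inputs must have the same number of values")
    # (x * y) / 255 keeps the result in 0..255 and can only darken.
    return [(x * y) // 255 for x, y in zip(a, b)]
```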

```text
@@ -214,7 +202,6 @@ class ImageMultiplyInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -229,7 +216,7 @@ IMAGE_CHANNELS = Literal["A", "R", "G", "B"]


@invocation("img_chan", title="Extract Image Channel", tags=["image", "channel"], category="image", version="1.0.0")
class ImageChannelInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageChannelInvocation(BaseInvocation):
"""Gets a channel from an image."""

image: ImageField = InputField(description="The image to get the channel from")
```

```text
@@ -247,7 +234,6 @@ class ImageChannelInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -262,7 +248,7 @@ IMAGE_MODES = Literal["L", "RGB", "RGBA", "CMYK", "YCbCr", "LAB", "HSV", "I", "F


@invocation("img_conv", title="Convert Image Mode", tags=["image", "convert"], category="image", version="1.0.0")
class ImageConvertInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageConvertInvocation(BaseInvocation):
"""Converts an image to a different mode."""

image: ImageField = InputField(description="The image to convert")
```

```text
@@ -280,7 +266,6 @@ class ImageConvertInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -292,7 +277,7 @@ class ImageConvertInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("img_blur", title="Blur Image", tags=["image", "blur"], category="image", version="1.0.0")
class ImageBlurInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageBlurInvocation(BaseInvocation):
"""Blurs an image"""

image: ImageField = InputField(description="The image to blur")
```

```text
@@ -315,7 +300,6 @@ class ImageBlurInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -347,13 +331,16 @@ PIL_RESAMPLING_MAP = {


@invocation("img_resize", title="Resize Image", tags=["image", "resize"], category="image", version="1.0.0")
class ImageResizeInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class ImageResizeInvocation(BaseInvocation):
"""Resizes an image to specific dimensions"""

image: ImageField = InputField(description="The image to resize")
width: int = InputField(default=512, gt=0, description="The width to resize to (px)")
height: int = InputField(default=512, gt=0, description="The height to resize to (px)")
resample_mode: PIL_RESAMPLING_MODES = InputField(default="bicubic", description="The resampling mode")
metadata: Optional[CoreMetadata] = InputField(
default=None, description=FieldDescriptions.core_metadata, ui_hidden=True
)

def invoke(self, context: InvocationContext) -> ImageOutput:
image = context.services.images.get_pil_image(self.image.image_name)
```

```text
@@ -372,7 +359,7 @@ class ImageResizeInvocation(BaseInvocation, WithMetadata, WithWorkflow):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
metadata=self.metadata.dict() if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -384,7 +371,7 @@ class ImageResizeInvocation(BaseInvocation, WithMetadata, WithWorkflow):


@invocation("img_scale", title="Scale Image", tags=["image", "scale"], category="image", version="1.0.0")
class ImageScaleInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class ImageScaleInvocation(BaseInvocation):
"""Scales an image by a factor"""

image: ImageField = InputField(description="The image to scale")
```

```text
@@ -414,7 +401,6 @@ class ImageScaleInvocation(BaseInvocation, WithMetadata, WithWorkflow):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -426,7 +412,7 @@ class ImageScaleInvocation(BaseInvocation, WithMetadata, WithWorkflow):


@invocation("img_lerp", title="Lerp Image", tags=["image", "lerp"], category="image", version="1.0.0")
class ImageLerpInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageLerpInvocation(BaseInvocation):
"""Linear interpolation of all pixels of an image"""

image: ImageField = InputField(description="The image to lerp")
```

```text
@@ -448,7 +434,6 @@ class ImageLerpInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -460,7 +445,7 @@ class ImageLerpInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("img_ilerp", title="Inverse Lerp Image", tags=["image", "ilerp"], category="image", version="1.0.0")
class ImageInverseLerpInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageInverseLerpInvocation(BaseInvocation):
"""Inverse linear interpolation of all pixels of an image"""

image: ImageField = InputField(description="The image to lerp")
```
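The Lerp Image and Inverse Lerp Image invocations in the hunks above map pixel values linearly, but their bodies and range parameters are not part of this diff. The sketch below uses hypothetical `lo`/`hi` bounds on an 8-bit scale. `lerp` maps 0..255 onto lo..hi, and `inverse_lerp` maps lo..hi back onto 0..255, clamping values that fall outside the range.

```python
from typing import List


def lerp(values: List[int], lo: int = 0, hi: int = 255) -> List[int]:
    """Map 0..255 linearly onto lo..hi."""
    return [int(v / 255 * (hi - lo) + lo) for v in values]


def inverse_lerp(values: List[int], lo: int = 0, hi: int = 255) -> List[int]:
    """Map lo..hi linearly back onto 0..255, clamping outside the range."""
    if hi == lo:
        raise ValueError("hi and lo must differ")
    out = []
    for v in values:
        t = min(max((v - lo) / (hi - lo), 0.0), 1.0)  # normalised position in [0, 1]
        out.append(int(t * 255))
    return out
```

Applied with the same bounds, `inverse_lerp` undoes `lerp` at the endpoints. Intermediate values can lose up to one step to truncation.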

```text
@@ -482,7 +467,6 @@ class ImageInverseLerpInvocation(BaseInvocation, WithWorkflow, WithMetadata):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -494,10 +478,13 @@ class ImageInverseLerpInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("img_nsfw", title="Blur NSFW Image", tags=["image", "nsfw"], category="image", version="1.0.0")
class ImageNSFWBlurInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class ImageNSFWBlurInvocation(BaseInvocation):
"""Add blur to NSFW-flagged images"""

image: ImageField = InputField(description="The image to check")
metadata: Optional[CoreMetadata] = InputField(
default=None, description=FieldDescriptions.core_metadata, ui_hidden=True
)

def invoke(self, context: InvocationContext) -> ImageOutput:
image = context.services.images.get_pil_image(self.image.image_name)
```

```text
@@ -518,7 +505,7 @@ class ImageNSFWBlurInvocation(BaseInvocation, WithMetadata, WithWorkflow):
node_id=self.id,
session_id=context.graph_execution_state_id,
is_intermediate=self.is_intermediate,
metadata=self.metadata.data if self.metadata else None,
metadata=self.metadata.dict() if self.metadata else None,
workflow=self.workflow,
)

```

```text
@@ -538,11 +525,14 @@ class ImageNSFWBlurInvocation(BaseInvocation, WithMetadata, WithWorkflow):
@invocation(
"img_watermark", title="Add Invisible Watermark", tags=["image", "watermark"], category="image", version="1.0.0"
)
class ImageWatermarkInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class ImageWatermarkInvocation(BaseInvocation):
"""Add an invisible watermark to an image"""

image: ImageField = InputField(description="The image to check")
text: str = InputField(default="InvokeAI", description="Watermark text")
metadata: Optional[CoreMetadata] = InputField(
default=None, description=FieldDescriptions.core_metadata, ui_hidden=True
)

def invoke(self, context: InvocationContext) -> ImageOutput:
image = context.services.images.get_pil_image(self.image.image_name)
```

@@ -554,7 +544,7 @@ class ImageWatermarkInvocation(BaseInvocation, WithMetadata, WithWorkflow):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            metadata=self.metadata.dict() if self.metadata else None,
            workflow=self.workflow,
        )

@@ -566,7 +556,7 @@ class ImageWatermarkInvocation(BaseInvocation, WithMetadata, WithWorkflow):


@invocation("mask_edge", title="Mask Edge", tags=["image", "mask", "inpaint"], category="image", version="1.0.0")
class MaskEdgeInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class MaskEdgeInvocation(BaseInvocation):
    """Applies an edge mask to an image"""

    image: ImageField = InputField(description="The image to apply the mask to")

@@ -600,7 +590,6 @@ class MaskEdgeInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )

@@ -614,7 +603,7 @@ class MaskEdgeInvocation(BaseInvocation, WithWorkflow, WithMetadata):
@invocation(
    "mask_combine", title="Combine Masks", tags=["image", "mask", "multiply"], category="image", version="1.0.0"
)
class MaskCombineInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class MaskCombineInvocation(BaseInvocation):
    """Combine two masks together by multiplying them using `PIL.ImageChops.multiply()`."""

    mask1: ImageField = InputField(description="The first mask to combine")

@@ -633,7 +622,6 @@ class MaskCombineInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )
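`MaskCombineInvocation` delegates to `PIL.ImageChops.multiply()`, which Pillow documents as computing `image1 * image2 / MAX` per pixel. Below is a stdlib-only sketch of that rule on two flat lists of 8-bit mask values. It uses integer division, so its rounding may differ slightly from Pillow's C implementation:

```python
def multiply_masks(mask1: list[int], mask2: list[int]) -> list[int]:
    """Combine two 8-bit masks pixel by pixel as a * b / 255.

    A white pixel (255) in one mask leaves the other mask's value unchanged,
    and a black pixel (0) in either mask forces the result to black.
    """
    if len(mask1) != len(mask2):
        raise ValueError("masks must have the same number of pixels")
    return [(a * b) // 255 for a, b in zip(mask1, mask2)]


print(multiply_masks([255, 255, 0, 128], [255, 0, 200, 255]))
```

Multiplication acts as an intersection of the two masks. A region stays fully selected only where both inputs are white.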

@@ -645,7 +633,7 @@ class MaskCombineInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("color_correct", title="Color Correct", tags=["image", "color"], category="image", version="1.0.0")
class ColorCorrectInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ColorCorrectInvocation(BaseInvocation):
    """
    Shifts the colors of a target image to match the reference image, optionally
    using a mask to only color-correct certain regions of the target image.

@@ -744,7 +732,6 @@ class ColorCorrectInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )

@@ -756,7 +743,7 @@ class ColorCorrectInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("img_hue_adjust", title="Adjust Image Hue", tags=["image", "hue"], category="image", version="1.0.0")
class ImageHueAdjustmentInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageHueAdjustmentInvocation(BaseInvocation):
    """Adjusts the Hue of an image."""

    image: ImageField = InputField(description="The image to adjust")

@@ -784,7 +771,6 @@ class ImageHueAdjustmentInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            is_intermediate=self.is_intermediate,
            session_id=context.graph_execution_state_id,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )
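`ImageHueAdjustmentInvocation` shifts the hue of an image, but its input fields are not part of this diff. The per-pixel sketch below uses the standard-library `colorsys` module and assumes the shift is given in degrees; that unit is an assumption, not taken from the node. It converts RGB to HSV, rotates H around the color wheel, and converts back:

```python
import colorsys


def rotate_hue(rgb: tuple[int, int, int], degrees: float) -> tuple[int, int, int]:
    """Rotate the hue of one 8-bit RGB pixel by `degrees` around the color wheel."""
    r, g, b = (c / 255.0 for c in rgb)
    h, s, v = colorsys.rgb_to_hsv(r, g, b)
    h = (h + degrees / 360.0) % 1.0  # colorsys represents hue as a fraction in [0, 1)
    r2, g2, b2 = colorsys.hsv_to_rgb(h, s, v)
    return (round(r2 * 255), round(g2 * 255), round(b2 * 255))


print(rotate_hue((255, 0, 0), 120))  # pure red rotated a third of the way round
```

Saturation and value pass through untouched, so gray pixels (saturation 0) are unaffected by any rotation.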

@@ -860,7 +846,7 @@ CHANNEL_FORMATS = {
    category="image",
    version="1.0.0",
)
class ImageChannelOffsetInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageChannelOffsetInvocation(BaseInvocation):
    """Add or subtract a value from a specific color channel of an image."""

    image: ImageField = InputField(description="The image to adjust")

@@ -894,7 +880,6 @@ class ImageChannelOffsetInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            is_intermediate=self.is_intermediate,
            session_id=context.graph_execution_state_id,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )
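`ImageChannelOffsetInvocation` adds or subtracts a value from one color channel. The sketch below works on a single RGB pixel with a hypothetical channel index and integer offset, and clamps the result to 0-255. Judging by the `CHANNEL_FORMATS` mapping named in the hunk header, the real node likely first converts the image into the color mode that owns the selected channel. This sketch skips that step:

```python
def offset_channel(pixel: tuple[int, ...], channel: int, offset: int) -> tuple[int, ...]:
    """Add `offset` to one channel of an 8-bit pixel, clamping to [0, 255].

    `channel` is a hypothetical index into the pixel tuple (0 = R, 1 = G, 2 = B).
    """
    values = list(pixel)
    values[channel] = min(max(values[channel] + offset, 0), 255)
    return tuple(values)


print(offset_channel((10, 200, 30), channel=1, offset=100))
```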

@@ -931,7 +916,7 @@ class ImageChannelOffsetInvocation(BaseInvocation, WithWorkflow, WithMetadata):
    category="image",
    version="1.0.0",
)
class ImageChannelMultiplyInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class ImageChannelMultiplyInvocation(BaseInvocation):
    """Scale a specific color channel of an image."""

    image: ImageField = InputField(description="The image to adjust")

@@ -971,7 +956,6 @@ class ImageChannelMultiplyInvocation(BaseInvocation, WithWorkflow, WithMetadata)
            is_intermediate=self.is_intermediate,
            session_id=context.graph_execution_state_id,
            workflow=self.workflow,
            metadata=self.metadata.data if self.metadata else None,
        )

        return ImageOutput(
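`ImageChannelMultiplyInvocation` scales one color channel rather than offsetting it. The per-pixel sketch below assumes a hypothetical channel index and float scale factor, and it skips any color-mode conversion the node may perform. Under those assumptions the operation reduces to multiply, round, and clamp:

```python
def scale_channel(pixel: tuple[int, ...], channel: int, scale: float) -> tuple[int, ...]:
    """Multiply one channel of an 8-bit pixel by `scale`, clamping to [0, 255].

    `channel` and `scale` are hypothetical parameters for illustration.
    """
    values = list(pixel)
    values[channel] = min(max(round(values[channel] * scale), 0), 255)
    return tuple(values)


print(scale_channel((100, 200, 30), channel=0, scale=1.5))
```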

@@ -991,11 +975,16 @@ class ImageChannelMultiplyInvocation(BaseInvocation, WithWorkflow, WithMetadata)
    version="1.0.1",
    use_cache=False,
)
class SaveImageInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class SaveImageInvocation(BaseInvocation):
    """Saves an image. Unlike an image primitive, this invocation stores a copy of the image."""

    image: ImageField = InputField(description=FieldDescriptions.image)
    board: Optional[BoardField] = InputField(default=None, description=FieldDescriptions.board, input=Input.Direct)
    metadata: CoreMetadata = InputField(
        default=None,
        description=FieldDescriptions.core_metadata,
        ui_hidden=True,
    )

    def invoke(self, context: InvocationContext) -> ImageOutput:
        image = context.services.images.get_pil_image(self.image.image_name)

@@ -1008,7 +997,7 @@ class SaveImageInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            metadata=self.metadata.dict() if self.metadata else None,
            workflow=self.workflow,
        )

@@ -5,7 +5,6 @@ from typing import Literal, Optional, get_args

import numpy as np
from PIL import Image, ImageOps
from invokeai.app.invocations.metadata import WithMetadata

from invokeai.app.invocations.primitives import ColorField, ImageField, ImageOutput
from invokeai.app.util.misc import SEED_MAX, get_random_seed

@@ -14,7 +13,7 @@ from invokeai.backend.image_util.lama import LaMA
from invokeai.backend.image_util.patchmatch import PatchMatch

from ..models.image import ImageCategory, ResourceOrigin
from .baseinvocation import BaseInvocation, InputField, InvocationContext, WithWorkflow, invocation
from .baseinvocation import BaseInvocation, InputField, InvocationContext, invocation
from .image import PIL_RESAMPLING_MAP, PIL_RESAMPLING_MODES

@@ -120,7 +119,7 @@ def tile_fill_missing(im: Image.Image, tile_size: int = 16, seed: Optional[int]


@invocation("infill_rgba", title="Solid Color Infill", tags=["image", "inpaint"], category="inpaint", version="1.0.0")
class InfillColorInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class InfillColorInvocation(BaseInvocation):
    """Infills transparent areas of an image with a solid color"""

    image: ImageField = InputField(description="The image to infill")

@@ -144,7 +143,6 @@ class InfillColorInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )
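`InfillColorInvocation` fills the transparent areas of an image with a solid color. One way to express that per pixel is to alpha-composite the image over an opaque background of the fill color; this sketch assumes that approach. Under a standard "over" blend, fully transparent pixels take the fill color, opaque pixels are kept, and partially transparent pixels are mixed by their alpha:

```python
def infill_pixel(rgba: tuple[int, int, int, int], fill_rgb: tuple[int, int, int]) -> tuple[int, int, int, int]:
    """Composite one 8-bit RGBA pixel over an opaque `fill_rgb` background."""
    r, g, b, a = rgba
    alpha = a / 255.0  # 0.0 = fully transparent, 1.0 = fully opaque
    blended = tuple(
        round(c * alpha + f * (1.0 - alpha)) for c, f in zip((r, g, b), fill_rgb)
    )
    return (*blended, 255)  # the result is always fully opaque


print(infill_pixel((10, 20, 30, 0), (127, 127, 127)))
```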

@@ -156,7 +154,7 @@ class InfillColorInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("infill_tile", title="Tile Infill", tags=["image", "inpaint"], category="inpaint", version="1.0.0")
class InfillTileInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class InfillTileInvocation(BaseInvocation):
    """Infills transparent areas of an image with tiles of the image"""

    image: ImageField = InputField(description="The image to infill")

@@ -181,7 +179,6 @@ class InfillTileInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )

@@ -195,7 +192,7 @@ class InfillTileInvocation(BaseInvocation, WithWorkflow, WithMetadata):
@invocation(
    "infill_patchmatch", title="PatchMatch Infill", tags=["image", "inpaint"], category="inpaint", version="1.0.0"
)
class InfillPatchMatchInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class InfillPatchMatchInvocation(BaseInvocation):
    """Infills transparent areas of an image using the PatchMatch algorithm"""

    image: ImageField = InputField(description="The image to infill")

@@ -235,7 +232,6 @@ class InfillPatchMatchInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )

@@ -247,7 +243,7 @@ class InfillPatchMatchInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("infill_lama", title="LaMa Infill", tags=["image", "inpaint"], category="inpaint", version="1.0.0")
class LaMaInfillInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class LaMaInfillInvocation(BaseInvocation):
    """Infills transparent areas of an image using the LaMa model"""

    image: ImageField = InputField(description="The image to infill")

@@ -264,8 +260,6 @@ class LaMaInfillInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )

        return ImageOutput(

@@ -276,7 +270,7 @@ class LaMaInfillInvocation(BaseInvocation, WithWorkflow, WithMetadata):


@invocation("infill_cv2", title="CV2 Infill", tags=["image", "inpaint"], category="inpaint")
class CV2InfillInvocation(BaseInvocation, WithWorkflow, WithMetadata):
class CV2InfillInvocation(BaseInvocation):
    """Infills transparent areas of an image using OpenCV Inpainting"""

    image: ImageField = InputField(description="The image to infill")

@@ -293,8 +287,6 @@ class CV2InfillInvocation(BaseInvocation, WithWorkflow, WithMetadata):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            workflow=self.workflow,
        )

        return ImageOutput(

@@ -23,7 +23,7 @@ from pydantic import validator
from torchvision.transforms.functional import resize as tv_resize

from invokeai.app.invocations.ip_adapter import IPAdapterField
from invokeai.app.invocations.metadata import WithMetadata
from invokeai.app.invocations.metadata import CoreMetadata
from invokeai.app.invocations.primitives import (
    DenoiseMaskField,
    DenoiseMaskOutput,

@@ -62,7 +62,6 @@ from .baseinvocation import (
    InvocationContext,
    OutputField,
    UIType,
    WithWorkflow,
    invocation,
    invocation_output,
)

@@ -622,7 +621,7 @@ class DenoiseLatentsInvocation(BaseInvocation):
@invocation(
    "l2i", title="Latents to Image", tags=["latents", "image", "vae", "l2i"], category="latents", version="1.0.0"
)
class LatentsToImageInvocation(BaseInvocation, WithMetadata, WithWorkflow):
class LatentsToImageInvocation(BaseInvocation):
    """Generates an image from latents."""

    latents: LatentsField = InputField(

@@ -635,6 +634,11 @@ class LatentsToImageInvocation(BaseInvocation, WithMetadata, WithWorkflow):
    )
    tiled: bool = InputField(default=False, description=FieldDescriptions.tiled)
    fp32: bool = InputField(default=DEFAULT_PRECISION == "float32", description=FieldDescriptions.fp32)
    metadata: CoreMetadata = InputField(
        default=None,
        description=FieldDescriptions.core_metadata,
        ui_hidden=True,
    )

    @torch.no_grad()
    def invoke(self, context: InvocationContext) -> ImageOutput:

@@ -703,7 +707,7 @@ class LatentsToImageInvocation(BaseInvocation, WithMetadata, WithWorkflow):
            node_id=self.id,
            session_id=context.graph_execution_state_id,
            is_intermediate=self.is_intermediate,
            metadata=self.metadata.data if self.metadata else None,
            metadata=self.metadata.dict() if self.metadata else None,
            workflow=self.workflow,
        )

@@ -1,19 +1,18 @@
from typing import Any, Optional, Union
from typing import Optional

from pydantic import BaseModel, Field
from pydantic import Field

from invokeai.app.invocations.baseinvocation import (
    BaseInvocation,
    BaseInvocationOutput,
    FieldDescriptions,
    InputField,
    InvocationContext,
    OutputField,
    UIType,
    invocation,
    invocation_output,
)
from invokeai.app.invocations.model import LoRAModelField
from invokeai.app.invocations.controlnet_image_processors import ControlField
from invokeai.app.invocations.model import LoRAModelField, MainModelField, VAEModelField
from invokeai.app.util.model_exclude_null import BaseModelExcludeNull

from ...version import __version__
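The metadata models in this file derive from `BaseModelExcludeNull`, which is imported above. As its name suggests, it is intended to leave `None`-valued fields out of serialized output, so unset optional fields such as `clip_skip` or `vae` would not appear in stored image metadata. Below is a stdlib sketch of that behavior. It uses a hypothetical dataclass stand-in rather than the pydantic model:

```python
from dataclasses import asdict, dataclass
from typing import Any, Optional


@dataclass
class ExampleMetadata:
    """Hypothetical stand-in for a metadata model with optional fields."""

    positive_prompt: str
    steps: int
    clip_skip: Optional[int] = None
    vae: Optional[str] = None


def dict_exclude_null(obj: Any) -> dict[str, Any]:
    """Serialize a dataclass to a dict, dropping top-level keys whose value is None."""
    return {key: value for key, value in asdict(obj).items() if value is not None}


print(dict_exclude_null(ExampleMetadata(positive_prompt="a cat", steps=30)))
```

Only `None` is dropped; falsy values such as `0` or `""` are kept. This sketch filters top-level keys only.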

@@ -26,78 +25,159 @@ class LoRAMetadataField(BaseModelExcludeNull):
    weight: float = Field(description="The weight of the LoRA model")


class CoreMetadata(BaseModelExcludeNull):
    """Core generation metadata for an image generated in InvokeAI."""

    app_version: str = Field(default=__version__, description="The version of InvokeAI used to generate this image")
    generation_mode: str = Field(
        description="The generation mode that output this image",
    )
    created_by: Optional[str] = Field(description="The name of the creator of the image")
    positive_prompt: str = Field(description="The positive prompt parameter")
    negative_prompt: str = Field(description="The negative prompt parameter")
    width: int = Field(description="The width parameter")
    height: int = Field(description="The height parameter")
    seed: int = Field(description="The seed used for noise generation")
    rand_device: str = Field(description="The device used for random number generation")
    cfg_scale: float = Field(description="The classifier-free guidance scale parameter")
    steps: int = Field(description="The number of steps used for inference")
    scheduler: str = Field(description="The scheduler used for inference")
    clip_skip: Optional[int] = Field(
        default=None,
        description="The number of skipped CLIP layers",
    )
    model: MainModelField = Field(description="The main model used for inference")
    controlnets: list[ControlField] = Field(description="The ControlNets used for inference")
    loras: list[LoRAMetadataField] = Field(description="The LoRAs used for inference")
    vae: Optional[VAEModelField] = Field(
        default=None,
        description="The VAE used for decoding, if the main model's default was not used",
    )

    # Latents-to-Latents
    strength: Optional[float] = Field(
        default=None,
        description="The strength used for latents-to-latents",
    )
    init_image: Optional[str] = Field(default=None, description="The name of the initial image")

    # SDXL
    positive_style_prompt: Optional[str] = Field(default=None, description="The positive style prompt parameter")
    negative_style_prompt: Optional[str] = Field(default=None, description="The negative style prompt parameter")

    # SDXL Refiner
    refiner_model: Optional[MainModelField] = Field(default=None, description="The SDXL Refiner model used")
    refiner_cfg_scale: Optional[float] = Field(
        default=None,
        description="The classifier-free guidance scale parameter used for the refiner",
    )
    refiner_steps: Optional[int] = Field(default=None, description="The number of steps used for the refiner")
    refiner_scheduler: Optional[str] = Field(default=None, description="The scheduler used for the refiner")
    refiner_positive_aesthetic_score: Optional[float] = Field(
        default=None, description="The aesthetic score used for the refiner"
    )
    refiner_negative_aesthetic_score: Optional[float] = Field(
        default=None, description="The aesthetic score used for the refiner"
    )
    refiner_start: Optional[float] = Field(default=None, description="The start value used for refiner denoising")


class ImageMetadata(BaseModelExcludeNull):
    """An image's generation metadata"""

    metadata: Optional[dict] = Field(default=None, description="The metadata associated with the image")
    workflow: Optional[dict] = Field(default=None, description="The workflow associated with the image")
    metadata: Optional[dict] = Field(
        default=None,
        description="The image's core metadata, if it was created in the Linear or Canvas UI",
    )
    graph: Optional[dict] = Field(default=None, description="The graph that created the image")


class MetadataItem(BaseModel):
    label: str = Field(description=FieldDescriptions.metadata_item_label)
    value: Any = Field(description=FieldDescriptions.metadata_item_value)
@invocation_output("metadata_accumulator_output")
class MetadataAccumulatorOutput(BaseInvocationOutput):
    """The output of the MetadataAccumulator node"""

    metadata: CoreMetadata = OutputField(description="The core metadata for the image")


@invocation_output("metadata_item_output")
class MetadataItemOutput(BaseInvocationOutput):
    """Metadata Item Output"""
@invocation(
    "metadata_accumulator", title="Metadata Accumulator", tags=["metadata"], category="metadata", version="1.0.0"
)
class MetadataAccumulatorInvocation(BaseInvocation):
    """Outputs a Core Metadata Object"""

    item: MetadataItem = OutputField(description="Metadata Item")
    generation_mode: str = InputField(
        description="The generation mode that output this image",
    )
    positive_prompt: str = InputField(description="The positive prompt parameter")
    negative_prompt: str = InputField(description="The negative prompt parameter")
    width: int = InputField(description="The width parameter")
    height: int = InputField(description="The height parameter")
    seed: int = InputField(description="The seed used for noise generation")
    rand_device: str = InputField(description="The device used for random number generation")
    cfg_scale: float = InputField(description="The classifier-free guidance scale parameter")
    steps: int = InputField(description="The number of steps used for inference")
    scheduler: str = InputField(description="The scheduler used for inference")
    clip_skip: Optional[int] = Field(
        default=None,
        description="The number of skipped CLIP layers",
    )
    model: MainModelField = InputField(description="The main model used for inference")
    controlnets: list[ControlField] = InputField(description="The ControlNets used for inference")
    loras: list[LoRAMetadataField] = InputField(description="The LoRAs used for inference")
    strength: Optional[float] = InputField(
        default=None,
        description="The strength used for latents-to-latents",
    )
    init_image: Optional[str] = InputField(
        default=None,
        description="The name of the initial image",
    )
    vae: Optional[VAEModelField] = InputField(
        default=None,
        description="The VAE used for decoding, if the main model's default was not used",
    )

    # SDXL
    positive_style_prompt: Optional[str] = InputField(
        default=None,
        description="The positive style prompt parameter",
    )
    negative_style_prompt: Optional[str] = InputField(
        default=None,
        description="The negative style prompt parameter",
    )

@invocation("metadata_item", title="Metadata Item", tags=["metadata"], category="metadata", version="1.0.0")
class MetadataItemInvocation(BaseInvocation):
    """Used to create an arbitrary metadata item. Provide "label" and make a connection to "value" to store that data as the value."""
    # SDXL Refiner
    refiner_model: Optional[MainModelField] = InputField(
        default=None,
        description="The SDXL Refiner model used",
    )
    refiner_cfg_scale: Optional[float] = InputField(
        default=None,
        description="The classifier-free guidance scale parameter used for the refiner",
    )
    refiner_steps: Optional[int] = InputField(
        default=None,
        description="The number of steps used for the refiner",
    )
    refiner_scheduler: Optional[str] = InputField(
        default=None,
        description="The scheduler used for the refiner",
    )
    refiner_positive_aesthetic_score: Optional[float] = InputField(
        default=None,
        description="The aesthetic score used for the refiner",
    )
    refiner_negative_aesthetic_score: Optional[float] = InputField(
        default=None,
        description="The aesthetic score used for the refiner",
    )
    refiner_start: Optional[float] = InputField(
        default=None,
        description="The start value used for refiner denoising",
    )

    label: str = InputField(description=FieldDescriptions.metadata_item_label)
    value: Any = InputField(description=FieldDescriptions.metadata_item_value, ui_type=UIType.Any)
    def invoke(self, context: InvocationContext) -> MetadataAccumulatorOutput:
        """Collects and outputs a CoreMetadata object"""

    def invoke(self, context: InvocationContext) -> MetadataItemOutput:
        return MetadataItemOutput(item=MetadataItem(label=self.label, value=self.value))


class MetadataDict(BaseModel):
    """Accepts a single MetadataItem or collection of MetadataItems (use a Collect node)."""

    data: dict[str, Any] = Field(description="Metadata dict")


@invocation_output("metadata_dict")
class MetadataDictOutput(BaseInvocationOutput):
    metadata_dict: MetadataDict = OutputField(description="Metadata Dict")


@invocation("metadata", title="Metadata", tags=["metadata"], category="metadata", version="1.0.0")
class MetadataInvocation(BaseInvocation):
    """Takes a MetadataItem or collection of MetadataItems and outputs a MetadataDict."""

    items: Union[list[MetadataItem], MetadataItem] = InputField(description=FieldDescriptions.metadata_item_polymorphic)

    def invoke(self, context: InvocationContext) -> MetadataDictOutput:
        if isinstance(self.items, MetadataItem):
            # single metadata item
            data = {self.items.label: self.items.value}
        else:
            # collection of metadata items
            data = {item.label: item.value for item in self.items}

        data.update({"app_version": __version__})
        return MetadataDictOutput(metadata_dict=(MetadataDict(data=data)))


@invocation("merge_metadata_dict", title="Metadata Merge", tags=["metadata"], category="metadata", version="1.0.0")
class MergeMetadataDictInvocation(BaseInvocation):
    """Merged a collection of MetadataDict into a single MetadataDict."""

    collection: list[MetadataDict] = InputField(description=FieldDescriptions.metadata_dict_collection)

    def invoke(self, context: InvocationContext) -> MetadataDictOutput:
        data = {}
        for item in self.collection:
            data.update(item.data)

        return MetadataDictOutput(metadata_dict=MetadataDict(data=data))


class WithMetadata(BaseModel):
    metadata: Optional[MetadataDict] = InputField(default=None, description=FieldDescriptions.metadata)
        return MetadataAccumulatorOutput(metadata=CoreMetadata(**self.dict()))
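On the side of this diff that defines them, `MetadataItemInvocation`, `MetadataInvocation` and `MergeMetadataDictInvocation` build metadata from label/value pairs. A single item or a collection becomes a dict with `app_version` added. Several such dicts are then merged with `dict.update`, so a later dict wins on duplicate keys. Below is a stdlib sketch of that data flow. A dataclass stands in for the pydantic `MetadataItem`, and the version string is a hypothetical placeholder:

```python
from dataclasses import dataclass
from typing import Any, Union

APP_VERSION = "0.0.0"  # hypothetical placeholder for invokeai's __version__


@dataclass
class MetadataItem:
    label: str
    value: Any


def build_metadata_dict(items: Union[MetadataItem, list[MetadataItem]]) -> dict[str, Any]:
    """Mirror MetadataInvocation.invoke: accept one item or a collection of items."""
    if isinstance(items, MetadataItem):
        data = {items.label: items.value}  # single metadata item
    else:
        data = {item.label: item.value for item in items}  # collection of items
    data.update({"app_version": APP_VERSION})
    return data


def merge_metadata_dicts(collection: list[dict[str, Any]]) -> dict[str, Any]:
    """Mirror MergeMetadataDictInvocation.invoke: later dicts overwrite earlier keys."""
    data: dict[str, Any] = {}
    for item in collection:
        data.update(item)
    return data


first = build_metadata_dict(MetadataItem("seed", 42))
second = build_metadata_dict([MetadataItem("steps", 30), MetadataItem("seed", 7)])
print(merge_metadata_dicts([first, second]))
```

Because the merge is last-writer-wins, the order of the collection decides which `seed` survives. In a graph, that order would come from however the Collect node assembles its inputs.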

@@ -12,7 +12,7 @@ from diffusers.image_processor import VaeImageProcessor
from pydantic import BaseModel, Field, validator
from tqdm import tqdm

from invokeai.app.invocations.metadata import WithMetadata
from invokeai.app.invocations.metadata import CoreMetadata
from invokeai.app.invocations.primitives import ConditioningField, ConditioningOutput, ImageField, ImageOutput
from invokeai.app.util.step_callback import stable_diffusion_step_callback