mirror of

https://github.com/invoke-ai/InvokeAI.git

synced 2026-01-15 09:18:00 -05:00

Compare commits

262 Commits

psychedeli

...

test

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

ad786130ff | ||

|

|

77a444f3bc | ||

|

|

24209b60a4 | ||

|

|

cf2b847e33 | ||

|

|

5f35ad078d | ||

|

|

43266b18c7 | ||

|

|

d521145c36 | ||

|

|

bf359bd91f | ||

|

|

25ad74922e | ||

|

|

d8c31e9ed5 | ||

|

|

fc958217eb | ||

|

|

5010412341 | ||

|

|

0e93c4e856 | ||

|

|

98e6c62214 | ||

|

|

e1d2d382cf | ||

|

|

d349e00965 | ||

|

|

bbe1097c05 | ||

|

|

a10acde5eb | ||

|

|

171532ec44 | ||

|

|

54cbadeffa | ||

|

|

a76e58017c | ||

|

|

17be3e1234 | ||

|

|

73ba6b03ab | ||

|

|

6f37fbdee4 | ||

|

|

1928d1af29 | ||

|

|

f6127a1b6b | ||

|

|

7f457ca03d | ||

|

|

2b972cda6c | ||

|

|

47b0e1d7b4 | ||

|

|

fe0a16c846 | ||

|

|

8c61cda4b8 | ||

|

|

f19c6069a9 | ||

|

|

75663ec81e | ||

|

|

40a568c060 | ||

|

|

8e7aa74a16 | ||

|

|

fcba4382b2 | ||

|

|

6f45931711 | ||

|

|

278392d52c | ||

|

|

b2f942d714 | ||

|

|

6bc2253894 | ||

|

|

97d6f207d8 | ||

|

|

dc9a9d7bec | ||

|

|

15a3e49a40 | ||

|

|

7ccfc499dc | ||

|

|

56d0d80a39 | ||

|

|

2d64ee7f9e | ||

|

|

10ada84404 | ||

|

|

7744e01e2c | ||

|

|

ce8e5f9adf | ||

|

|

fc1021b6be | ||

|

|

fadfe1dfe9 | ||

|

|

2716ae353b | ||

|

|

bf9f7271dd | ||

|

|

d3821594df | ||

|

|

631ad1596f | ||

|

|

dfe32e467d | ||

|

|

3575cf3b3b | ||

|

|

29c3f49182 | ||

|

|

d2149a8380 | ||

|

|

6532d9ffa1 | ||

|

|

89db8c83c2 | ||

|

|

fc09ab7e13 | ||

|

|

9646157ad5 | ||

|

|

b89ec2b9c3 | ||

|

|

d2fb29cf0d | ||

|

|

d1fce4b70b | ||

|

|

f50f95a81d | ||

|

|

3611029057 | ||

|

|

402cf9b0ee | ||

|

|

88bee96ca3 | ||

|

|

5048fc7c9e | ||

|

|

2a35d93a4d | ||

|

|

10fac5c085 | ||

|

|

58850ded22 | ||

|

|

f21ebdfaca | ||

|

|

c4f1e94cc4 | ||

|

|

dbbcce9f70 | ||

|

|

cc52896bd9 | ||

|

|

d12314fb8b | ||

|

|

07b88e3017 | ||

|

|

0b85f2487c | ||

|

|

5530d3fcd2 | ||

|

|

7b1b24900f | ||

|

|

f52fb45276 | ||

|

|

fb9f0339a2 | ||

|

|

ac501ee742 | ||

|

|

2182ccf8d1 | ||

|

|

fc674ff1b8 | ||

|

|

708ac6a511 | ||

|

|

d0e0b64fc8 | ||

|

|

a23580664d | ||

|

|

0edf01d927 | ||

|

|

4af5b9cbf7 | ||

|

|

1bf973d46e | ||

|

|

72252e3ff7 | ||

|

|

8d2596c288 | ||

|

|

0ffb7ecaa8 | ||

|

|

10f30fc599 | ||

|

|

136570aa1d | ||

|

|

5a30b507e0 | ||

|

|

d47fbf283c | ||

|

|

7c24312d3f | ||

|

|

905cd8c639 | ||

|

|

b13ba55c26 | ||

|

|

82747e2260 | ||

|

|

910553f49a | ||

|

|

faabd83717 | ||

|

|

5ad77ece4b | ||

|

|

6b3c413a5b | ||

|

|

2a923d1f69 | ||

|

|

c54a5ce10e | ||

|

|

14fbe41834 | ||

|

|

64ebe042b5 | ||

|

|

5b2ed4ffb4 | ||

|

|

a49b8febed | ||

|

|

e543db5a5d | ||

|

|

670f3aa165 | ||

|

|

c0534d6519 | ||

|

|

7bc6c23dfa | ||

|

|

851ce36250 | ||

|

|

d631088566 | ||

|

|

f0bf733309 | ||

|

|

65af7dd8f8 | ||

|

|

74c666aaa2 | ||

|

|

45f9aca7e5 | ||

|

|

9fb624f390 | ||

|

|

962e51320b | ||

|

|

44932923eb | ||

|

|

ffcf6dfde6 | ||

|

|

be52eb153c | ||

|

|

bd97c6b708 | ||

|

|

9940cbfa87 | ||

|

|

77aeb9a421 | ||

|

|

2bad8b9f29 | ||

|

|

8e943b2ce1 | ||

|

|

5d3ab4f333 | ||

|

|

1047d08835 | ||

|

|

516cc258f9 | ||

|

|

7c2aa1dc20 | ||

|

|

035f1e12e1 | ||

|

|

4c93202ee4 | ||

|

|

227046bdb0 | ||

|

|

83b123f1f6 | ||

|

|

320ef15ee9 | ||

|

|

6905c61912 | ||

|

|

494bde785e | ||

|

|

732ab38ca6 | ||

|

|

ba38aa56a5 | ||

|

|

0a48c5a712 | ||

|

|

133ab91c8d | ||

|

|

7a672bd2b2 | ||

|

|

7dee6f51a3 | ||

|

|

3c029eee29 | ||

|

|

1a8f9d1ecb | ||

|

|

80d329c900 | ||

|

|

89db749d89 | ||

|

|

18164fc72a | ||

|

|

75de20af6a | ||

|

|

cb1509bf52 | ||

|

|

10cd814cf7 | ||

|

|

8ef38ecc7c | ||

|

|

69937d68d2 | ||

|

|

40f9e49b5e | ||

|

|

98fa234529 | ||

|

|

fe889235cc | ||

|

|

462c1d4c9b | ||

|

|

0ed36158c8 | ||

|

|

f3c138a208 | ||

|

|

61242bf86a | ||

|

|

d118d02df4 | ||

|

|

58b56e9b1e | ||

|

|

1f751f8c21 | ||

|

|

ca95a3bd0d | ||

|

|

55b40a9425 | ||

|

|

90083cc88d | ||

|

|

ead754432a | ||

|

|

fa9ea93477 | ||

|

|

fe0cf2c160 | ||

|

|

a681fa4b03 | ||

|

|

1cc686734b | ||

|

|

82e8b92ba0 | ||

|

|

e86658f864 | ||

|

|

ad136c2680 | ||

|

|

35374ec531 | ||

|

|

ed82bf6bb8 | ||

|

|

078c9b6964 | ||

|

|

1a9d2f1701 | ||

|

|

3e93159bce | ||

|

|

b57ebe52e4 | ||

|

|

ba4616ff89 | ||

|

|

dcfbd49e1b | ||

|

|

913fc83cbf | ||

|

|

6b8ce34eb3 | ||

|

|

9508e0c9db | ||

|

|

9c720da021 | ||

|

|

e1b576c72d | ||

|

|

971ccfb081 | ||

|

|

43a3c3c7ea | ||

|

|

4df1cdb34d | ||

|

|

3f860c3523 | ||

|

|

d8d0c9af09 | ||

|

|

9403672ac0 | ||

|

|

94591840a7 | ||

|

|

26b91a538a | ||

|

|

7ca456d674 | ||

|

|

78828b6b9c | ||

|

|

166ff9d301 | ||

|

|

4f97bd4418 | ||

|

|

e0e001758a | ||

|

|

c1887135b3 | ||

|

|

096d195d6e | ||

|

|

7870b90717 | ||

|

|

9854b244fd | ||

|

|

7d800e1ce3 | ||

|

|

1c8b1fbc53 | ||

|

|

594a3aef93 | ||

|

|

78377469db | ||

|

|

fbe6452c45 | ||

|

|

3f4ea073d1 | ||

|

|

8b7f8eaea2 | ||

|

|

88e16ce051 | ||

|

|

421440cae0 | ||

|

|

421021cede | ||

|

|

020d4302d1 | ||

|

|

8c59d2e5af | ||

|

|

17d451eaa7 | ||

|

|

23a06fd06d | ||

|

|

010c8e8038 | ||

|

|

dfc635223c | ||

|

|

37121a3a24 | ||

|

|

51b5de799a | ||

|

|

eadbe6abf7 | ||

|

|

16f48a816f | ||

|

|

95838e5559 | ||

|

|

3e8d62b1d1 | ||

|

|

2acc93eb8e | ||

|

|

fbb61f2334 | ||

|

|

be85c7972b | ||

|

|

3a586fc9c4 | ||

|

|

2479a59e5e | ||

|

|

7d0ac2c36d | ||

|

|

519b892f0c | ||

|

|

763dcacfd3 | ||

|

|

3599d546e6 | ||

|

|

22a84930f6 | ||

|

|

d64e17e043 | ||

|

|

ba54277011 | ||

|

|

5915a4a51c | ||

|

|

4580ba0d87 | ||

|

|

b9fd2e9e76 | ||

|

|

75b65597af | ||

|

|

2a3c0ab5d2 | ||

|

|

7d61373b82 | ||

|

|

7d65555a5a | ||

|

|

123f2b2dbc | ||

|

|

1e4e42556e | ||

|

|

1f6699ac43 | ||

|

|

ace8665411 | ||

|

|

7fa5bae8fd | ||

|

|

f9faca7c91 | ||

|

|

594fd3ba6d | ||

|

|

44d68f5ed5 |

2

.github/workflows/pypi-release.yml

vendored

2

.github/workflows/pypi-release.yml

vendored

@@ -28,7 +28,7 @@ jobs:

|

||||

run: twine check dist/*

|

||||

|

||||

- name: check PyPI versions

|

||||

if: github.ref == 'refs/heads/main' || github.ref == 'refs/heads/v2.3'

|

||||

if: github.ref == 'refs/heads/main' || startsWith(github.ref, 'refs/heads/release/')

|

||||

run: |

|

||||

pip install --upgrade requests

|

||||

python -c "\

|

||||

|

||||

@@ -47,34 +47,9 @@ pip install ".[dev,test]"

|

||||

These are optional groups of packages which are defined within the `pyproject.toml`

|

||||

and will be required for testing the changes you make to the code.

|

||||

|

||||

### Running Tests

|

||||

|

||||

We use [pytest](https://docs.pytest.org/en/7.2.x/) for our test suite. Tests can

|

||||

be found under the `./tests` folder and can be run with a single `pytest`

|

||||

command. Optionally, to review test coverage you can append `--cov`.

|

||||

|

||||

```zsh

|

||||

pytest --cov

|

||||

```

|

||||

|

||||

Test outcomes and coverage will be reported in the terminal. In addition a more

|

||||

detailed report is created in both XML and HTML format in the `./coverage`

|

||||

folder. The HTML one in particular can help identify missing statements

|

||||

requiring tests to ensure coverage. This can be run by opening

|

||||

`./coverage/html/index.html`.

|

||||

|

||||

For example.

|

||||

|

||||

```zsh

|

||||

pytest --cov; open ./coverage/html/index.html

|

||||

```

|

||||

|

||||

??? info "HTML coverage report output"

|

||||

|

||||

|

||||

|

||||

|

||||

### Tests

|

||||

|

||||

See the [tests documentation](./TESTS.md) for information about running and writing tests.

|

||||

### Reloading Changes

|

||||

|

||||

Experimenting with changes to the Python source code is a drag if you have to re-start the server —

|

||||

|

||||

89

docs/contributing/TESTS.md

Normal file

89

docs/contributing/TESTS.md

Normal file

@@ -0,0 +1,89 @@

|

||||

# InvokeAI Backend Tests

|

||||

|

||||

We use `pytest` to run the backend python tests. (See [pyproject.toml](/pyproject.toml) for the default `pytest` options.)

|

||||

|

||||

## Fast vs. Slow

|

||||

All tests are categorized as either 'fast' (no test annotation) or 'slow' (annotated with the `@pytest.mark.slow` decorator).

|

||||

|

||||

'Fast' tests are run to validate every PR, and are fast enough that they can be run routinely during development.

|

||||

|

||||

'Slow' tests are currently only run manually on an ad-hoc basis. In the future, they may be automated to run nightly. Most developers are only expected to run the 'slow' tests that directly relate to the feature(s) that they are working on.

|

||||

|

||||

As a rule of thumb, tests should be marked as 'slow' if there is a chance that they take >1s (e.g. on a CPU-only machine with slow internet connection). Common examples of slow tests are tests that depend on downloading a model, or running model inference.

|

||||

|

||||

## Running Tests

|

||||

|

||||

Below are some common test commands:

|

||||

```bash

|

||||

# Run the fast tests. (This implicitly uses the configured default option: `-m "not slow"`.)

|

||||

pytest tests/

|

||||

|

||||

# Equivalent command to run the fast tests.

|

||||

pytest tests/ -m "not slow"

|

||||

|

||||

# Run the slow tests.

|

||||

pytest tests/ -m "slow"

|

||||

|

||||

# Run the slow tests from a specific file.

|

||||

pytest tests/path/to/slow_test.py -m "slow"

|

||||

|

||||

# Run all tests (fast and slow).

|

||||

pytest tests -m ""

|

||||

```

|

||||

|

||||

## Test Organization

|

||||

|

||||

All backend tests are in the [`tests/`](/tests/) directory. This directory mirrors the organization of the `invokeai/` directory. For example, tests for `invokeai/model_management/model_manager.py` would be found in `tests/model_management/test_model_manager.py`.

|

||||

|

||||

TODO: The above statement is aspirational. A re-organization of legacy tests is required to make it true.

|

||||

|

||||

## Tests that depend on models

|

||||

|

||||

There are a few things to keep in mind when adding tests that depend on models.

|

||||

|

||||

1. If a required model is not already present, it should automatically be downloaded as part of the test setup.

|

||||

2. If a model is already downloaded, it should not be re-downloaded unnecessarily.

|

||||

3. Take reasonable care to keep the total number of models required for the tests low. Whenever possible, re-use models that are already required for other tests. If you are adding a new model, consider including a comment to explain why it is required/unique.

|

||||

|

||||

There are several utilities to help with model setup for tests. Here is a sample test that depends on a model:

|

||||

```python

|

||||

import pytest

|

||||

import torch

|

||||

|

||||

from invokeai.backend.model_management.models.base import BaseModelType, ModelType

|

||||

from invokeai.backend.util.test_utils import install_and_load_model

|

||||

|

||||

@pytest.mark.slow

|

||||

def test_model(model_installer, torch_device):

|

||||

model_info = install_and_load_model(

|

||||

model_installer=model_installer,

|

||||

model_path_id_or_url="HF/dummy_model_id",

|

||||

model_name="dummy_model",

|

||||

base_model=BaseModelType.StableDiffusion1,

|

||||

model_type=ModelType.Dummy,

|

||||

)

|

||||

|

||||

dummy_input = build_dummy_input(torch_device)

|

||||

|

||||

with torch.no_grad(), model_info as model:

|

||||

model.to(torch_device, dtype=torch.float32)

|

||||

output = model(dummy_input)

|

||||

|

||||

# Validate output...

|

||||

|

||||

```

|

||||

|

||||

## Test Coverage

|

||||

|

||||

To review test coverage, append `--cov` to your pytest command:

|

||||

```bash

|

||||

pytest tests/ --cov

|

||||

```

|

||||

|

||||

Test outcomes and coverage will be reported in the terminal. In addition, a more detailed report is created in both XML and HTML format in the `./coverage` folder. The HTML output is particularly helpful in identifying untested statements where coverage should be improved. The HTML report can be viewed by opening `./coverage/html/index.html`.

|

||||

|

||||

??? info "HTML coverage report output"

|

||||

|

||||

|

||||

|

||||

|

||||

@@ -12,7 +12,7 @@ To get started, take a look at our [new contributors checklist](newContributorCh

|

||||

Once you're setup, for more information, you can review the documentation specific to your area of interest:

|

||||

|

||||

* #### [InvokeAI Architecure](../ARCHITECTURE.md)

|

||||

* #### [Frontend Documentation](development_guides/contributingToFrontend.md)

|

||||

* #### [Frontend Documentation](./contributingToFrontend.md)

|

||||

* #### [Node Documentation](../INVOCATIONS.md)

|

||||

* #### [Local Development](../LOCAL_DEVELOPMENT.md)

|

||||

|

||||

|

||||

@@ -256,6 +256,10 @@ manager, please follow these steps:

|

||||

*highly recommended** if your virtual environment is located outside of

|

||||

your runtime directory.

|

||||

|

||||

!!! tip

|

||||

|

||||

On linux, it is recommended to run invokeai with the following env var: `MALLOC_MMAP_THRESHOLD_=1048576`. For example: `MALLOC_MMAP_THRESHOLD_=1048576 invokeai --web`. This helps to prevent memory fragmentation that can lead to memory accumulation over time. This env var is set automatically when running via `invoke.sh`.

|

||||

|

||||

10. Render away!

|

||||

|

||||

Browse the [features](../features/index.md) section to learn about all the

|

||||

|

||||

@@ -8,14 +8,42 @@ To download a node, simply download the `.py` node file from the link and add it

|

||||

|

||||

To use a community workflow, download the the `.json` node graph file and load it into Invoke AI via the **Load Workflow** button in the Workflow Editor.

|

||||

|

||||

--------------------------------

|

||||

- Community Nodes

|

||||

+ [Depth Map from Wavefront OBJ](#depth-map-from-wavefront-obj)

|

||||

+ [Film Grain](#film-grain)

|

||||

+ [Generative Grammar-Based Prompt Nodes](#generative-grammar-based-prompt-nodes)

|

||||

+ [GPT2RandomPromptMaker](#gpt2randompromptmaker)

|

||||

+ [Grid to Gif](#grid-to-gif)

|

||||

+ [Halftone](#halftone)

|

||||

+ [Ideal Size](#ideal-size)

|

||||

+ [Image and Mask Composition Pack](#image-and-mask-composition-pack)

|

||||

+ [Image to Character Art Image Nodes](#image-to-character-art-image-nodes)

|

||||

+ [Image Picker](#image-picker)

|

||||

+ [Load Video Frame](#load-video-frame)

|

||||

+ [Make 3D](#make-3d)

|

||||

+ [Oobabooga](#oobabooga)

|

||||

+ [Prompt Tools](#prompt-tools)

|

||||

+ [Retroize](#retroize)

|

||||

+ [Size Stepper Nodes](#size-stepper-nodes)

|

||||

+ [Text font to Image](#text-font-to-image)

|

||||

+ [Thresholding](#thresholding)

|

||||

+ [XY Image to Grid and Images to Grids nodes](#xy-image-to-grid-and-images-to-grids-nodes)

|

||||

- [Example Node Template](#example-node-template)

|

||||

- [Disclaimer](#disclaimer)

|

||||

- [Help](#help)

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Ideal Size

|

||||

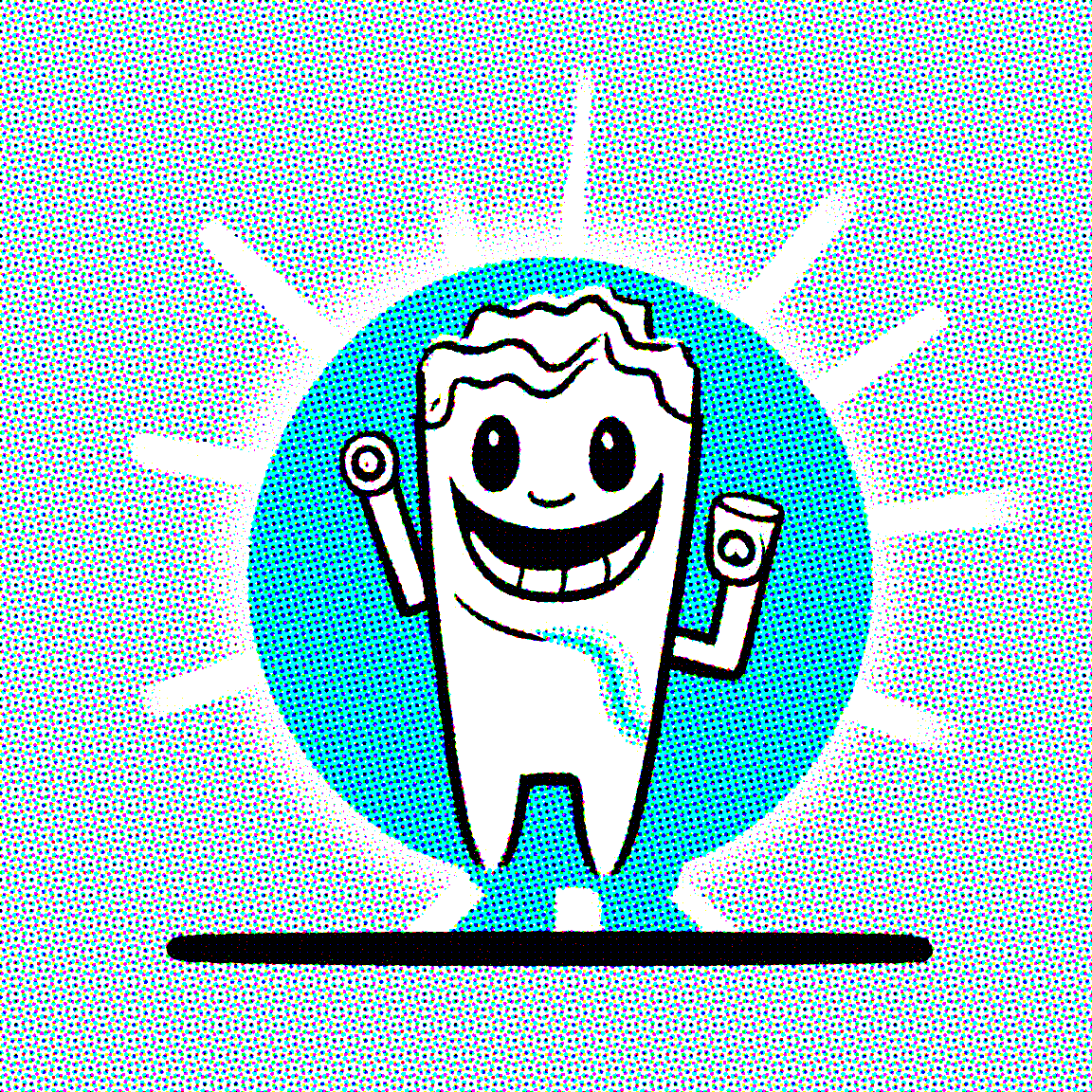

### Depth Map from Wavefront OBJ

|

||||

|

||||

**Description:** This node calculates an ideal image size for a first pass of a multi-pass upscaling. The aim is to avoid duplication that results from choosing a size larger than the model is capable of.

|

||||

**Description:** Render depth maps from Wavefront .obj files (triangulated) using this simple 3D renderer utilizing numpy and matplotlib to compute and color the scene. There are simple parameters to change the FOV, camera position, and model orientation.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/ideal-size-node

|

||||

To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/depth-from-obj-node

|

||||

|

||||

**Example Usage:**

|

||||

</br><img src="https://raw.githubusercontent.com/dwringer/depth-from-obj-node/main/depth_from_obj_usage.jpg" width="500" />

|

||||

|

||||

--------------------------------

|

||||

### Film Grain

|

||||

@@ -25,68 +53,19 @@ To use a community workflow, download the the `.json` node graph file and load i

|

||||

**Node Link:** https://github.com/JPPhoto/film-grain-node

|

||||

|

||||

--------------------------------

|

||||

### Image Picker

|

||||

### Generative Grammar-Based Prompt Nodes

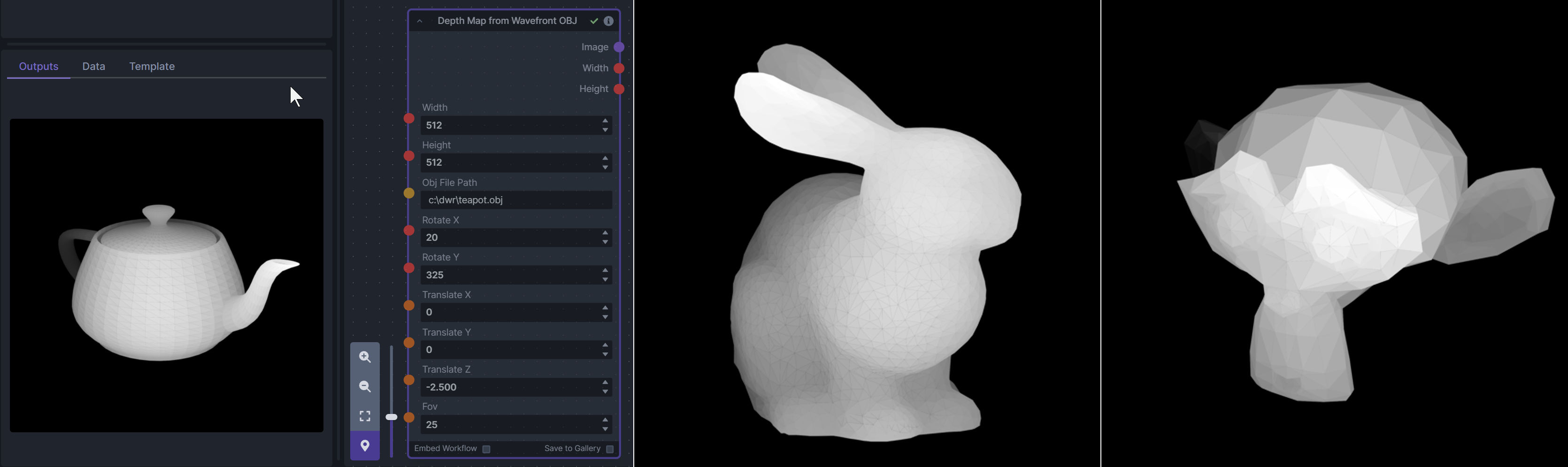

|

||||

|

||||

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.

|

||||

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no nonterminal terms remain in the string.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/image-picker-node

|

||||

This includes 3 Nodes:

|

||||

- *Lookup Table from File* - loads a YAML file "prompt" section (or of a whole folder of YAML's) into a JSON-ified dictionary (Lookups output)

|

||||

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

|

||||

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.

|

||||

|

||||

--------------------------------

|

||||

### Thresholding

|

||||

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

|

||||

|

||||

**Description:** This node generates masks for highlights, midtones, and shadows given an input image. You can optionally specify a blur for the lookup table used in making those masks from the source image.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/thresholding-node

|

||||

|

||||

**Examples**

|

||||

|

||||

Input:

|

||||

|

||||

{: style="height:512px;width:512px"}

|

||||

|

||||

Highlights/Midtones/Shadows:

|

||||

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/727021c1-36ff-4ec8-90c8-105e00de986d" style="width: 30%" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0b721bfc-f051-404e-b905-2f16b824ddfe" style="width: 30%" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/04c1297f-1c88-42b6-a7df-dd090b976286" style="width: 30%" />

|

||||

|

||||

Highlights/Midtones/Shadows (with LUT blur enabled):

|

||||

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/19aa718a-70c1-4668-8169-d68f4bd13771" style="width: 30%" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0a440e43-697f-4d17-82ee-f287467df0a5" style="width: 30%" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0701fd0f-2ca7-4fe2-8613-2b52547bafce" style="width: 30%" />

|

||||

|

||||

--------------------------------

|

||||

### Halftone

|

||||

|

||||

**Description**: Halftone converts the source image to grayscale and then performs halftoning. CMYK Halftone converts the image to CMYK and applies a per-channel halftoning to make the source image look like a magazine or newspaper. For both nodes, you can specify angles and halftone dot spacing.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/halftone-node

|

||||

|

||||

**Example**

|

||||

|

||||

Input:

|

||||

|

||||

{: style="height:512px;width:512px"}

|

||||

|

||||

Halftone Output:

|

||||

|

||||

{: style="height:512px;width:512px"}

|

||||

|

||||

CMYK Halftone Output:

|

||||

|

||||

{: style="height:512px;width:512px"}

|

||||

|

||||

--------------------------------

|

||||

### Retroize

|

||||

|

||||

**Description:** Retroize is a collection of nodes for InvokeAI to "Retroize" images. Any image can be given a fresh coat of retro paint with these nodes, either from your gallery or from within the graph itself. It includes nodes to pixelize, quantize, palettize, and ditherize images; as well as to retrieve palettes from existing images.

|

||||

|

||||

**Node Link:** https://github.com/Ar7ific1al/invokeai-retroizeinode/

|

||||

|

||||

**Retroize Output Examples**

|

||||

|

||||

|

||||

**Example Usage:**

|

||||

</br><img src="https://raw.githubusercontent.com/dwringer/generative-grammar-prompt-nodes/main/lookuptables_usage.jpg" width="500" />

|

||||

|

||||

--------------------------------

|

||||

### GPT2RandomPromptMaker

|

||||

@@ -99,76 +78,49 @@ CMYK Halftone Output:

|

||||

|

||||

Generated Prompt: An enchanted weapon will be usable by any character regardless of their alignment.

|

||||

|

||||

|

||||

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/8496ba09-bcdd-4ff7-8076-ff213b6a1e4c" width="200" />

|

||||

|

||||

--------------------------------

|

||||

### Load Video Frame

|

||||

### Grid to Gif

|

||||

|

||||

**Description:** This is a video frame image provider + indexer/video creation nodes for hooking up to iterators and ranges and ControlNets and such for invokeAI node experimentation. Think animation + ControlNet outputs.

|

||||

**Description:** One node that turns a grid image into an image collection, one node that turns an image collection into a gif.

|

||||

|

||||

**Node Link:** https://github.com/helix4u/load_video_frame

|

||||

**Node Link:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/GridToGif.py

|

||||

|

||||

**Example Node Graph:** https://github.com/helix4u/load_video_frame/blob/main/Example_Workflow.json

|

||||

**Example Node Graph:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/Grid%20to%20Gif%20Example%20Workflow.json

|

||||

|

||||

**Output Example:**

|

||||

**Output Examples**

|

||||

|

||||

|

||||

[Full mp4 of Example Output test.mp4](https://github.com/helix4u/load_video_frame/blob/main/test.mp4)

|

||||

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/input.png" width="300" />

|

||||

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/output.gif" width="300" />

|

||||

|

||||

--------------------------------

|

||||

### Halftone

|

||||

|

||||

### Oobabooga

|

||||

**Description**: Halftone converts the source image to grayscale and then performs halftoning. CMYK Halftone converts the image to CMYK and applies a per-channel halftoning to make the source image look like a magazine or newspaper. For both nodes, you can specify angles and halftone dot spacing.

|

||||

|

||||

**Description:** asks a local LLM running in Oobabooga's Text-Generation-Webui to write a prompt based on the user input.

|

||||

**Node Link:** https://github.com/JPPhoto/halftone-node

|

||||

|

||||

**Link:** https://github.com/sammyf/oobabooga-node

|

||||

**Example**

|

||||

|

||||

Input:

|

||||

|

||||

**Example:**

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/fd5efb9f-4355-4409-a1c2-c1ca99e0cab4" width="300" />

|

||||

|

||||

"describe a new mystical creature in its natural environment"

|

||||

Halftone Output:

|

||||

|

||||

*can return*

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/7e606f29-e68f-4d46-b3d5-97f799a4ec2f" width="300" />

|

||||

|

||||

"The mystical creature I am describing to you is called the "Glimmerwing". It is a majestic, iridescent being that inhabits the depths of the most enchanted forests and glimmering lakes. Its body is covered in shimmering scales that reflect every color of the rainbow, and it has delicate, translucent wings that sparkle like diamonds in the sunlight. The Glimmerwing's home is a crystal-clear lake, surrounded by towering trees with leaves that shimmer like jewels. In this serene environment, the Glimmerwing spends its days swimming gracefully through the water, chasing schools of glittering fish and playing with the gentle ripples of the lake's surface.

|

||||

As the sun sets, the Glimmerwing perches on a branch of one of the trees, spreading its wings to catch the last rays of light. The creature's scales glow softly, casting a rainbow of colors across the forest floor. The Glimmerwing sings a haunting melody, its voice echoing through the stillness of the night air. Its song is said to have the power to heal the sick and bring peace to troubled souls. Those who are lucky enough to hear the Glimmerwing's song are forever changed by its beauty and grace."

|

||||

CMYK Halftone Output:

|

||||

|

||||

|

||||

|

||||

**Requirement**

|

||||

|

||||

a Text-Generation-Webui instance (might work remotely too, but I never tried it) and obviously InvokeAI 3.x

|

||||

|

||||

**Note**

|

||||

|

||||

This node works best with SDXL models, especially as the style can be described independantly of the LLM's output.

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/c59c578f-db8e-4d66-8c66-2851752d75ea" width="300" />

|

||||

|

||||

--------------------------------

|

||||

### Depth Map from Wavefront OBJ

|

||||

### Ideal Size

|

||||

|

||||

**Description:** Render depth maps from Wavefront .obj files (triangulated) using this simple 3D renderer utilizing numpy and matplotlib to compute and color the scene. There are simple parameters to change the FOV, camera position, and model orientation.

|

||||

**Description:** This node calculates an ideal image size for a first pass of a multi-pass upscaling. The aim is to avoid duplication that results from choosing a size larger than the model is capable of.

|

||||

|

||||

To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/depth-from-obj-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Generative Grammar-Based Prompt Nodes

|

||||

|

||||

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no more nonterminal terms remain in the string.

|

||||

|

||||

This includes 3 Nodes:

|

||||

- *Lookup Table from File* - loads a YAML file "prompt" section (or of a whole folder of YAML's) into a JSON-ified dictionary (Lookups output)

|

||||

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

|

||||

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/ideal-size-node

|

||||

|

||||

--------------------------------

|

||||

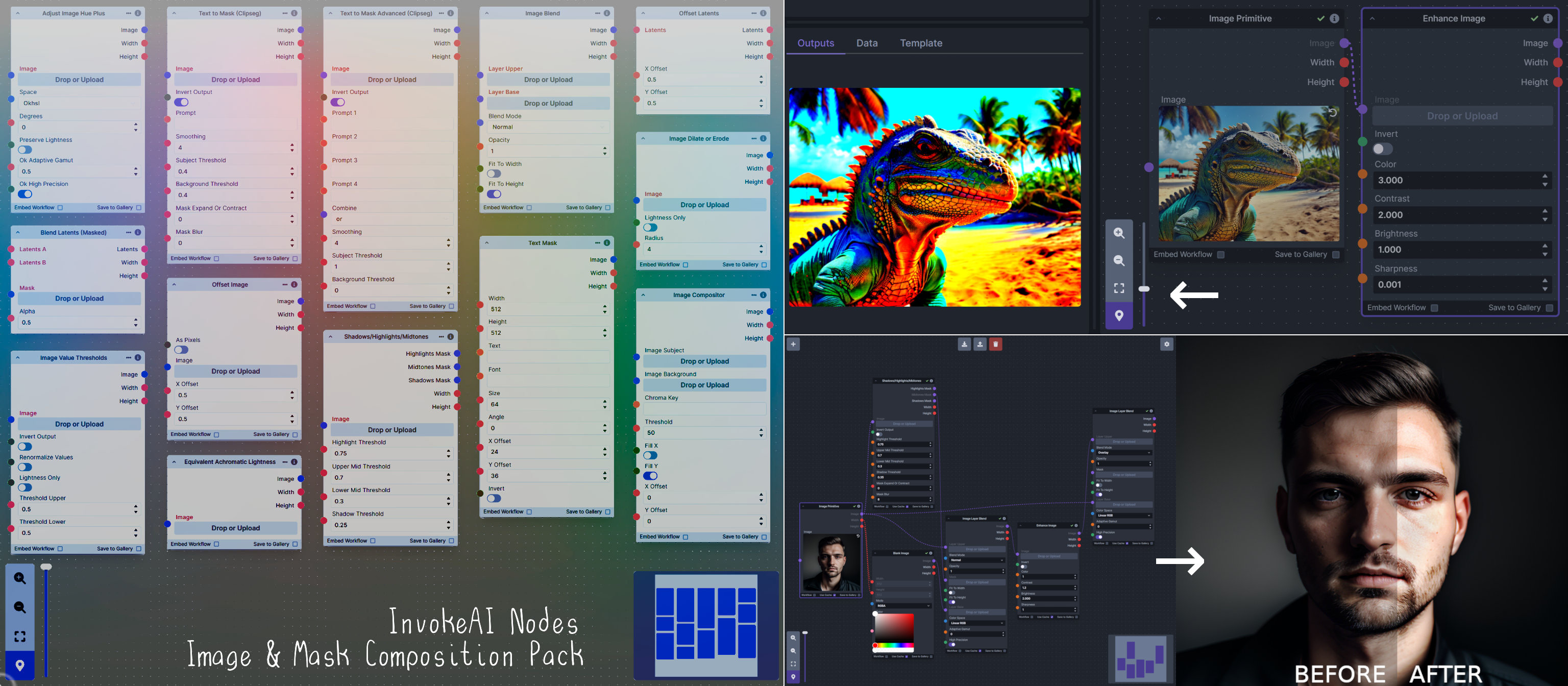

### Image and Mask Composition Pack

|

||||

@@ -194,45 +146,88 @@ This includes 15 Nodes:

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/composition-nodes

|

||||

|

||||

**Nodes and Output Examples:**

|

||||

|

||||

|

||||

</br><img src="https://raw.githubusercontent.com/dwringer/composition-nodes/main/composition_pack_overview.jpg" width="500" />

|

||||

|

||||

--------------------------------

|

||||

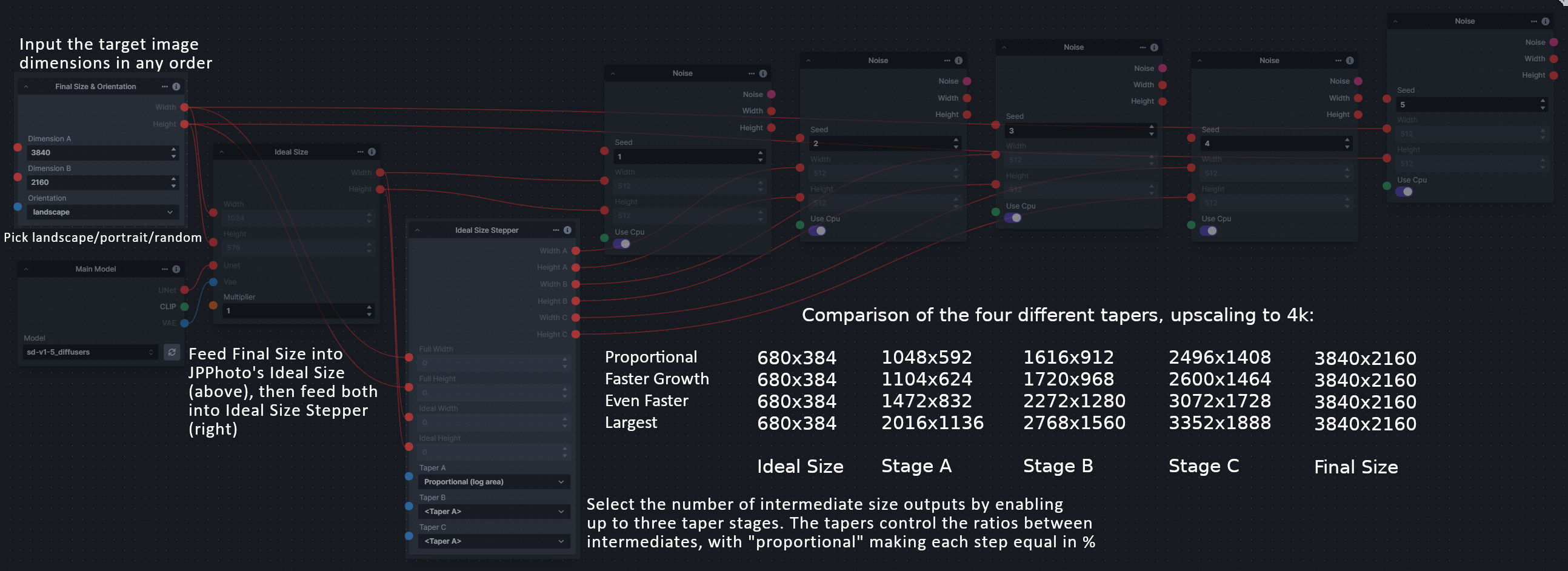

### Size Stepper Nodes

|

||||

### Image to Character Art Image Nodes

|

||||

|

||||

**Description:** This is a set of nodes for calculating the necessary size increments for doing upscaling workflows. Use the *Final Size & Orientation* node to enter your full size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* and get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note this does not output the initial size or full size dimensions: the 1, 2, or 3 outputs of this node are only the intermediate sizes.

|

||||

**Description:** Group of nodes to convert an input image into ascii/unicode art Image

|

||||

|

||||

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/size-stepper-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Text font to Image

|

||||

|

||||

**Description:** text font to text image node for InvokeAI, download a font to use (or if in font cache uses it from there), the text is always resized to the image size, but can control that with padding, optional 2nd line

|

||||

|

||||

**Node Link:** https://github.com/mickr777/textfontimage

|

||||

**Node Link:** https://github.com/mickr777/imagetoasciiimage

|

||||

|

||||

**Output Examples**

|

||||

|

||||

|

||||

|

||||

Results after using the depth controlnet

|

||||

|

||||

|

||||

|

||||

|

||||

<img src="https://user-images.githubusercontent.com/115216705/271817646-8e061fcc-9a2c-4fa9-bcc7-c0f7b01e9056.png" width="300" /><img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/3c4990eb-2f42-46b9-90f9-0088b939dc6a" width="300" /></br>

|

||||

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/fee7f800-a4a8-41e2-a66b-c66e4343307e" width="300" />

|

||||

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/1d9c1003-a45f-45c2-aac7-46470bb89330" width="300" />

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Image Picker

|

||||

|

||||

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/image-picker-node

|

||||

|

||||

--------------------------------

|

||||

### Load Video Frame

|

||||

|

||||

**Description:** This is a video frame image provider + indexer/video creation nodes for hooking up to iterators and ranges and ControlNets and such for invokeAI node experimentation. Think animation + ControlNet outputs.

|

||||

|

||||

**Node Link:** https://github.com/helix4u/load_video_frame

|

||||

|

||||

**Example Node Graph:** https://github.com/helix4u/load_video_frame/blob/main/Example_Workflow.json

|

||||

|

||||

**Output Example:**

|

||||

|

||||

<img src="https://github.com/helix4u/load_video_frame/blob/main/testmp4_embed_converted.gif" width="500" />

|

||||

[Full mp4 of Example Output test.mp4](https://github.com/helix4u/load_video_frame/blob/main/test.mp4)

|

||||

|

||||

--------------------------------

|

||||

### Make 3D

|

||||

|

||||

**Description:** Create compelling 3D stereo images from 2D originals.

|

||||

|

||||

**Node Link:** [https://gitlab.com/srcrr/shift3d/-/raw/main/make3d.py](https://gitlab.com/srcrr/shift3d)

|

||||

|

||||

**Example Node Graph:** https://gitlab.com/srcrr/shift3d/-/raw/main/example-workflow.json?ref_type=heads&inline=false

|

||||

|

||||

**Output Examples**

|

||||

|

||||

<img src="https://gitlab.com/srcrr/shift3d/-/raw/main/example-1.png" width="300" />

|

||||

<img src="https://gitlab.com/srcrr/shift3d/-/raw/main/example-2.png" width="300" />

|

||||

|

||||

--------------------------------

|

||||

### Oobabooga

|

||||

|

||||

**Description:** asks a local LLM running in Oobabooga's Text-Generation-Webui to write a prompt based on the user input.

|

||||

|

||||

**Link:** https://github.com/sammyf/oobabooga-node

|

||||

|

||||

**Example:**

|

||||

|

||||

"describe a new mystical creature in its natural environment"

|

||||

|

||||

*can return*

|

||||

|

||||

"The mystical creature I am describing to you is called the "Glimmerwing". It is a majestic, iridescent being that inhabits the depths of the most enchanted forests and glimmering lakes. Its body is covered in shimmering scales that reflect every color of the rainbow, and it has delicate, translucent wings that sparkle like diamonds in the sunlight. The Glimmerwing's home is a crystal-clear lake, surrounded by towering trees with leaves that shimmer like jewels. In this serene environment, the Glimmerwing spends its days swimming gracefully through the water, chasing schools of glittering fish and playing with the gentle ripples of the lake's surface.

|

||||

As the sun sets, the Glimmerwing perches on a branch of one of the trees, spreading its wings to catch the last rays of light. The creature's scales glow softly, casting a rainbow of colors across the forest floor. The Glimmerwing sings a haunting melody, its voice echoing through the stillness of the night air. Its song is said to have the power to heal the sick and bring peace to troubled souls. Those who are lucky enough to hear the Glimmerwing's song are forever changed by its beauty and grace."

|

||||

|

||||

<img src="https://github.com/sammyf/oobabooga-node/assets/42468608/cecdd820-93dd-4c35-abbf-607e001fb2ed" width="300" />

|

||||

|

||||

**Requirement**

|

||||

|

||||

a Text-Generation-Webui instance (might work remotely too, but I never tried it) and obviously InvokeAI 3.x

|

||||

|

||||

**Note**

|

||||

|

||||

This node works best with SDXL models, especially as the style can be described independently of the LLM's output.

|

||||

|

||||

--------------------------------

|

||||

### Prompt Tools

|

||||

|

||||

**Description:** A set of InvokeAI nodes that add general prompt manipulation tools. These where written to accompany the PromptsFromFile node and other prompt generation nodes.

|

||||

**Description:** A set of InvokeAI nodes that add general prompt manipulation tools. These were written to accompany the PromptsFromFile node and other prompt generation nodes.

|

||||

|

||||

1. PromptJoin - Joins to prompts into one.

|

||||

2. PromptReplace - performs a search and replace on a prompt. With the option of using regex.

|

||||

@@ -249,51 +244,83 @@ See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/mai

|

||||

**Node Link:** https://github.com/skunkworxdark/Prompt-tools-nodes

|

||||

|

||||

--------------------------------

|

||||

### Retroize

|

||||

|

||||

**Description:** Retroize is a collection of nodes for InvokeAI to "Retroize" images. Any image can be given a fresh coat of retro paint with these nodes, either from your gallery or from within the graph itself. It includes nodes to pixelize, quantize, palettize, and ditherize images; as well as to retrieve palettes from existing images.

|

||||

|

||||

**Node Link:** https://github.com/Ar7ific1al/invokeai-retroizeinode/

|

||||

|

||||

**Retroize Output Examples**

|

||||

|

||||

<img src="https://github.com/Ar7ific1al/InvokeAI_nodes_retroize/assets/2306586/de8b4fa6-324c-4c2d-b36c-297600c73974" width="500" />

|

||||

|

||||

--------------------------------

|

||||

### Size Stepper Nodes

|

||||

|

||||

**Description:** This is a set of nodes for calculating the necessary size increments for doing upscaling workflows. Use the *Final Size & Orientation* node to enter your full size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* and get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note this does not output the initial size or full size dimensions: the 1, 2, or 3 outputs of this node are only the intermediate sizes.

|

||||

|

||||

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/size-stepper-nodes

|

||||

|

||||

**Example Usage:**

|

||||

</br><img src="https://raw.githubusercontent.com/dwringer/size-stepper-nodes/main/size_nodes_usage.jpg" width="500" />

|

||||

|

||||

--------------------------------

|

||||

### Text font to Image

|

||||

|

||||

**Description:** text font to text image node for InvokeAI, download a font to use (or if in font cache uses it from there), the text is always resized to the image size, but can control that with padding, optional 2nd line

|

||||

|

||||

**Node Link:** https://github.com/mickr777/textfontimage

|

||||

|

||||

**Output Examples**

|

||||

|

||||

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/c21b0af3-d9c6-4c16-9152-846a23effd36" width="300" />

|

||||

|

||||

Results after using the depth controlnet

|

||||

|

||||

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/915f1a53-968e-43eb-aa61-07cd8f1a733a" width="300" />

|

||||

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/821ef89e-8a60-44f5-b94e-471a9d8690cc" width="300" />

|

||||

<img src="https://github.com/mickr777/InvokeAI/assets/115216705/2befcb6d-49f4-4bfd-b5fc-1fee19274f89" width="300" />

|

||||

|

||||

--------------------------------

|

||||

### Thresholding

|

||||

|

||||

**Description:** This node generates masks for highlights, midtones, and shadows given an input image. You can optionally specify a blur for the lookup table used in making those masks from the source image.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/thresholding-node

|

||||

|

||||

**Examples**

|

||||

|

||||

Input:

|

||||

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/c88ada13-fb3d-484c-a4fe-947b44712632" width="300" />

|

||||

|

||||

Highlights/Midtones/Shadows:

|

||||

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/727021c1-36ff-4ec8-90c8-105e00de986d" width="300" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0b721bfc-f051-404e-b905-2f16b824ddfe" width="300" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/04c1297f-1c88-42b6-a7df-dd090b976286" width="300" />

|

||||

|

||||

Highlights/Midtones/Shadows (with LUT blur enabled):

|

||||

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/19aa718a-70c1-4668-8169-d68f4bd13771" width="300" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0a440e43-697f-4d17-82ee-f287467df0a5" width="300" />

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/34005131/0701fd0f-2ca7-4fe2-8613-2b52547bafce" width="300" />

|

||||

|

||||

--------------------------------

|

||||

### XY Image to Grid and Images to Grids nodes

|

||||

|

||||

**Description:** Image to grid nodes and supporting tools.

|

||||

|

||||

1. "Images To Grids" node - Takes a collection of images and creates a grid(s) of images. If there are more images than the size of a single grid then mutilple grids will be created until it runs out of images.

|

||||

2. "XYImage To Grid" node - Converts a collection of XYImages into a labeled Grid of images. The XYImages collection has to be built using the supporoting nodes. See example node setups for more details.

|

||||

|

||||

1. "Images To Grids" node - Takes a collection of images and creates a grid(s) of images. If there are more images than the size of a single grid then multiple grids will be created until it runs out of images.

|

||||

2. "XYImage To Grid" node - Converts a collection of XYImages into a labeled Grid of images. The XYImages collection has to be built using the supporting nodes. See example node setups for more details.

|

||||

|

||||

See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/README.md

|

||||

|

||||

**Node Link:** https://github.com/skunkworxdark/XYGrid_nodes

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Image to Character Art Image Node's

|

||||

|

||||

**Description:** Group of nodes to convert an input image into ascii/unicode art Image

|

||||

|

||||

**Node Link:** https://github.com/mickr777/imagetoasciiimage

|

||||

|

||||

**Output Examples**

|

||||

|

||||

<img src="https://github.com/invoke-ai/InvokeAI/assets/115216705/8e061fcc-9a2c-4fa9-bcc7-c0f7b01e9056" width="300" />

|

||||

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/3c4990eb-2f42-46b9-90f9-0088b939dc6a" width="300" /></br>

|

||||

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/fee7f800-a4a8-41e2-a66b-c66e4343307e" width="300" />

|

||||

<img src="https://github.com/mickr777/imagetoasciiimage/assets/115216705/1d9c1003-a45f-45c2-aac7-46470bb89330" width="300" />

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Grid to Gif

|

||||

|

||||

**Description:** One node that turns a grid image into an image colletion, one node that turns an image collection into a gif

|

||||

|

||||

**Node Link:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/GridToGif.py

|

||||

|

||||

**Example Node Graph:** https://github.com/mildmisery/invokeai-GridToGifNode/blob/main/Grid%20to%20Gif%20Example%20Workflow.json

|

||||

|

||||

**Output Examples**

|

||||

|

||||

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/input.png" width="300" />

|

||||

<img src="https://raw.githubusercontent.com/mildmisery/invokeai-GridToGifNode/main/output.gif" width="300" />

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Example Node Template

|

||||

|

||||

**Description:** This node allows you to do super cool things with InvokeAI.

|

||||

@@ -304,7 +331,7 @@ See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/READ

|

||||

|

||||

**Output Examples**

|

||||

|

||||

{: style="height:115px;width:240px"}

|

||||

</br><img src="https://invoke-ai.github.io/InvokeAI/assets/invoke_ai_banner.png" width="500" />

|

||||

|

||||

|

||||

## Disclaimer

|

||||

|

||||

@@ -46,6 +46,9 @@ if [ "$(uname -s)" == "Darwin" ]; then

|

||||

export PYTORCH_ENABLE_MPS_FALLBACK=1

|

||||

fi

|

||||

|

||||

# Avoid glibc memory fragmentation. See invokeai/backend/model_management/README.md for details.

|

||||

export MALLOC_MMAP_THRESHOLD_=1048576

|

||||

|

||||

# Primary function for the case statement to determine user input

|

||||

do_choice() {

|

||||

case $1 in

|

||||

|

||||

@@ -322,3 +322,20 @@ async def unstar_images_in_list(

|

||||

return ImagesUpdatedFromListResult(updated_image_names=updated_image_names)

|

||||

except Exception:

|

||||

raise HTTPException(status_code=500, detail="Failed to unstar images")

|

||||

|

||||

|

||||

class ImagesDownloaded(BaseModel):

|

||||

response: Optional[str] = Field(

|

||||

description="If defined, the message to display to the user when images begin downloading"

|

||||

)

|

||||

|

||||

|

||||

@images_router.post("/download", operation_id="download_images_from_list", response_model=ImagesDownloaded)

|

||||

async def download_images_from_list(

|

||||

image_names: list[str] = Body(description="The list of names of images to download", embed=True),

|

||||

board_id: Optional[str] = Body(

|

||||

default=None, description="The board from which image should be downloaded from", embed=True

|

||||

),

|

||||

) -> ImagesDownloaded:

|

||||

# return ImagesDownloaded(response="Your images are downloading")

|

||||

raise HTTPException(status_code=501, detail="Endpoint is not yet implemented")

|

||||

|

||||

@@ -30,8 +30,8 @@ class SocketIO:

|

||||

|

||||

async def _handle_sub_queue(self, sid, data, *args, **kwargs):

|

||||

if "queue_id" in data:

|

||||

self.__sio.enter_room(sid, data["queue_id"])

|

||||

await self.__sio.enter_room(sid, data["queue_id"])

|

||||

|

||||

async def _handle_unsub_queue(self, sid, data, *args, **kwargs):

|

||||

if "queue_id" in data:

|

||||

self.__sio.enter_room(sid, data["queue_id"])

|

||||

await self.__sio.enter_room(sid, data["queue_id"])

|

||||

|

||||

@@ -68,6 +68,7 @@ class FieldDescriptions:

|

||||

height = "Height of output (px)"

|

||||

control = "ControlNet(s) to apply"

|

||||

ip_adapter = "IP-Adapter to apply"

|

||||

t2i_adapter = "T2I-Adapter(s) to apply"

|

||||

denoised_latents = "Denoised latents tensor"

|

||||

latents = "Latents tensor"

|

||||

strength = "Strength of denoising (proportional to steps)"

|

||||

|

||||

@@ -46,6 +46,8 @@ class FaceResultData(TypedDict):

|

||||

y_center: float

|

||||

mesh_width: int

|

||||

mesh_height: int

|

||||

chunk_x_offset: int

|

||||

chunk_y_offset: int

|

||||

|

||||

|

||||

class FaceResultDataWithId(FaceResultData):

|

||||

@@ -78,6 +80,48 @@ FONT_SIZE = 32

|

||||

FONT_STROKE_WIDTH = 4

|

||||

|

||||

|

||||

def coalesce_faces(face1: FaceResultData, face2: FaceResultData) -> FaceResultData:

|

||||

face1_x_offset = face1["chunk_x_offset"] - min(face1["chunk_x_offset"], face2["chunk_x_offset"])

|

||||

face2_x_offset = face2["chunk_x_offset"] - min(face1["chunk_x_offset"], face2["chunk_x_offset"])

|

||||

face1_y_offset = face1["chunk_y_offset"] - min(face1["chunk_y_offset"], face2["chunk_y_offset"])

|

||||

face2_y_offset = face2["chunk_y_offset"] - min(face1["chunk_y_offset"], face2["chunk_y_offset"])

|

||||

|

||||

new_im_width = (

|

||||

max(face1["image"].width, face2["image"].width)

|

||||

+ max(face1["chunk_x_offset"], face2["chunk_x_offset"])

|

||||

- min(face1["chunk_x_offset"], face2["chunk_x_offset"])

|

||||

)

|

||||

new_im_height = (

|

||||

max(face1["image"].height, face2["image"].height)

|

||||

+ max(face1["chunk_y_offset"], face2["chunk_y_offset"])

|

||||

- min(face1["chunk_y_offset"], face2["chunk_y_offset"])

|

||||

)

|

||||

pil_image = Image.new(mode=face1["image"].mode, size=(new_im_width, new_im_height))

|

||||

pil_image.paste(face1["image"], (face1_x_offset, face1_y_offset))

|

||||

pil_image.paste(face2["image"], (face2_x_offset, face2_y_offset))

|

||||

|

||||

# Mask images are always from the origin

|

||||

new_mask_im_width = max(face1["mask"].width, face2["mask"].width)

|

||||

new_mask_im_height = max(face1["mask"].height, face2["mask"].height)

|

||||

mask_pil = create_white_image(new_mask_im_width, new_mask_im_height)

|

||||

black_image = create_black_image(face1["mask"].width, face1["mask"].height)

|

||||

mask_pil.paste(black_image, (0, 0), ImageOps.invert(face1["mask"]))

|

||||

black_image = create_black_image(face2["mask"].width, face2["mask"].height)

|

||||

mask_pil.paste(black_image, (0, 0), ImageOps.invert(face2["mask"]))

|

||||

|

||||

new_face = FaceResultData(

|

||||

image=pil_image,

|

||||

mask=mask_pil,

|

||||

x_center=max(face1["x_center"], face2["x_center"]),

|

||||

y_center=max(face1["y_center"], face2["y_center"]),

|

||||

mesh_width=max(face1["mesh_width"], face2["mesh_width"]),

|

||||

mesh_height=max(face1["mesh_height"], face2["mesh_height"]),

|

||||

chunk_x_offset=max(face1["chunk_x_offset"], face2["chunk_x_offset"]),

|

||||

chunk_y_offset=max(face2["chunk_y_offset"], face2["chunk_y_offset"]),

|

||||

)

|

||||

return new_face

|

||||

|

||||

|

||||

def prepare_faces_list(

|

||||

face_result_list: list[FaceResultData],

|

||||

) -> list[FaceResultDataWithId]:

|

||||

@@ -91,7 +135,7 @@ def prepare_faces_list(

|

||||

should_add = True

|

||||

candidate_x_center = candidate["x_center"]

|

||||

candidate_y_center = candidate["y_center"]

|

||||

for face in deduped_faces:

|

||||

for idx, face in enumerate(deduped_faces):

|

||||

face_center_x = face["x_center"]

|

||||

face_center_y = face["y_center"]

|

||||

face_radius_w = face["mesh_width"] / 2

|

||||

@@ -105,6 +149,7 @@ def prepare_faces_list(

|

||||

)

|

||||

|

||||

if p < 1: # Inside of the already-added face's radius

|

||||

deduped_faces[idx] = coalesce_faces(face, candidate)

|

||||

should_add = False

|

||||

break

|

||||

|

||||

@@ -138,7 +183,6 @@ def generate_face_box_mask(

|

||||

chunk_x_offset: int = 0,

|

||||

chunk_y_offset: int = 0,

|

||||

draw_mesh: bool = True,

|

||||

check_bounds: bool = True,

|

||||

) -> list[FaceResultData]:

|

||||

result = []

|

||||

mask_pil = None

|

||||

@@ -211,33 +255,20 @@ def generate_face_box_mask(

|

||||

mask_pil = create_white_image(w + chunk_x_offset, h + chunk_y_offset)

|

||||

mask_pil.paste(init_mask_pil, (chunk_x_offset, chunk_y_offset))

|

||||

|

||||

left_side = x_center - mesh_width

|

||||

right_side = x_center + mesh_width

|

||||

top_side = y_center - mesh_height

|

||||

bottom_side = y_center + mesh_height

|

||||

im_width, im_height = pil_image.size

|

||||

over_w = im_width * 0.1

|

||||

over_h = im_height * 0.1

|

||||

if not check_bounds or (

|

||||

(left_side >= -over_w)

|

||||

and (right_side < im_width + over_w)

|

||||

and (top_side >= -over_h)

|

||||

and (bottom_side < im_height + over_h)

|

||||

):

|

||||

x_center = float(x_center)

|

||||

y_center = float(y_center)

|

||||

face = FaceResultData(

|

||||

image=pil_image,

|

||||

mask=mask_pil or create_white_image(*pil_image.size),

|

||||

x_center=x_center + chunk_x_offset,

|

||||

y_center=y_center + chunk_y_offset,

|

||||

mesh_width=mesh_width,

|

||||

mesh_height=mesh_height,

|

||||

)

|

||||

x_center = float(x_center)

|

||||

y_center = float(y_center)

|

||||

face = FaceResultData(

|

||||

image=pil_image,

|

||||

mask=mask_pil or create_white_image(*pil_image.size),

|

||||

x_center=x_center + chunk_x_offset,

|

||||

y_center=y_center + chunk_y_offset,

|

||||

mesh_width=mesh_width,

|

||||

mesh_height=mesh_height,

|

||||

chunk_x_offset=chunk_x_offset,

|

||||

chunk_y_offset=chunk_y_offset,

|

||||

)

|

||||

|

||||

result.append(face)

|

||||

else:

|

||||

context.services.logger.info("FaceTools --> Face out of bounds, ignoring.")

|

||||

result.append(face)

|

||||

|

||||

return result

|

||||

|

||||

@@ -346,7 +377,6 @@ def get_faces_list(

|

||||

chunk_x_offset=0,

|

||||

chunk_y_offset=0,

|

||||

draw_mesh=draw_mesh,

|

||||

check_bounds=False,

|

||||

)

|

||||

if should_chunk or len(result) == 0:

|

||||

context.services.logger.info("FaceTools --> Chunking image (chunk toggled on, or no face found in full image).")

|

||||

@@ -360,24 +390,26 @@ def get_faces_list(

|

||||

if width > height:

|

||||

# Landscape - slice the image horizontally

|

||||

fx = 0.0

|

||||

steps = int(width * 2 / height)

|

||||

steps = int(width * 2 / height) + 1

|

||||

increment = (width - height) / (steps - 1)

|

||||

while fx <= (width - height):

|

||||

x = int(fx)

|

||||

image_chunks.append(image.crop((x, 0, x + height - 1, height - 1)))

|

||||

image_chunks.append(image.crop((x, 0, x + height, height)))

|

||||

x_offsets.append(x)

|

||||

y_offsets.append(0)

|

||||

fx += (width - height) / steps

|

||||

fx += increment

|

||||

context.services.logger.info(f"FaceTools --> Chunk starting at x = {x}")

|

||||

elif height > width:

|

||||

# Portrait - slice the image vertically

|

||||

fy = 0.0

|

||||

steps = int(height * 2 / width)

|

||||

steps = int(height * 2 / width) + 1

|

||||

increment = (height - width) / (steps - 1)

|

||||

while fy <= (height - width):

|

||||

y = int(fy)

|

||||

image_chunks.append(image.crop((0, y, width - 1, y + width - 1)))

|

||||

image_chunks.append(image.crop((0, y, width, y + width)))

|

||||

x_offsets.append(0)

|

||||

y_offsets.append(y)

|

||||

fy += (height - width) / steps

|

||||

fy += increment

|

||||

context.services.logger.info(f"FaceTools --> Chunk starting at y = {y}")

|

||||

|

||||

for idx in range(len(image_chunks)):

|

||||

@@ -404,7 +436,7 @@ def get_faces_list(

|

||||

return all_faces

|

||||

|

||||

|

||||

@invocation("face_off", title="FaceOff", tags=["image", "faceoff", "face", "mask"], category="image", version="1.0.1")

|

||||

@invocation("face_off", title="FaceOff", tags=["image", "faceoff", "face", "mask"], category="image", version="1.0.2")

|

||||

class FaceOffInvocation(BaseInvocation):

|

||||

"""Bound, extract, and mask a face from an image using MediaPipe detection"""

|

||||

|

||||

@@ -498,7 +530,7 @@ class FaceOffInvocation(BaseInvocation):

|

||||

return output

|

||||

|

||||

|

||||

@invocation("face_mask_detection", title="FaceMask", tags=["image", "face", "mask"], category="image", version="1.0.1")

|

||||

@invocation("face_mask_detection", title="FaceMask", tags=["image", "face", "mask"], category="image", version="1.0.2")

|

||||

class FaceMaskInvocation(BaseInvocation):

|

||||

"""Face mask creation using mediapipe face detection"""

|

||||

|

||||

@@ -616,7 +648,7 @@ class FaceMaskInvocation(BaseInvocation):

|

||||

|

||||

|

||||

@invocation(

|

||||

"face_identifier", title="FaceIdentifier", tags=["image", "face", "identifier"], category="image", version="1.0.1"

|

||||

"face_identifier", title="FaceIdentifier", tags=["image", "face", "identifier"], category="image", version="1.0.2"

|

||||

)

|

||||

class FaceIdentifierInvocation(BaseInvocation):

|

||||

"""Outputs an image with detected face IDs printed on each face. For use with other FaceTools."""

|

||||

|

||||

@@ -10,7 +10,7 @@ import torch

|

||||

import torchvision.transforms as T

|

||||

from diffusers import AutoencoderKL, AutoencoderTiny

|

||||

from diffusers.image_processor import VaeImageProcessor

|

||||

from diffusers.models import UNet2DConditionModel

|

||||

from diffusers.models.adapter import FullAdapterXL, T2IAdapter

|

||||

from diffusers.models.attention_processor import (

|

||||

AttnProcessor2_0,

|

||||

LoRAAttnProcessor2_0,

|

||||

@@ -33,6 +33,7 @@ from invokeai.app.invocations.primitives import (

|

||||

LatentsOutput,

|

||||

build_latents_output,

|

||||

)

|

||||

from invokeai.app.invocations.t2i_adapter import T2IAdapterField

|

||||

from invokeai.app.util.controlnet_utils import prepare_control_image

|

||||

from invokeai.app.util.step_callback import stable_diffusion_step_callback

|

||||

from invokeai.backend.ip_adapter.ip_adapter import IPAdapter, IPAdapterPlus

|

||||

@@ -47,6 +48,7 @@ from ...backend.stable_diffusion.diffusers_pipeline import (

|

||||

ControlNetData,

|

||||

IPAdapterData,

|

||||

StableDiffusionGeneratorPipeline,

|

||||

T2IAdapterData,

|

||||

image_resized_to_grid_as_tensor,

|

||||

)

|

||||

from ...backend.stable_diffusion.diffusion.shared_invokeai_diffusion import PostprocessingSettings

|

||||

@@ -196,7 +198,7 @@ def get_scheduler(

|

||||

title="Denoise Latents",

|

||||

tags=["latents", "denoise", "txt2img", "t2i", "t2l", "img2img", "i2i", "l2l"],

|

||||

category="latents",

|

||||

version="1.1.0",

|

||||

version="1.3.0",

|

||||

)

|

||||

class DenoiseLatentsInvocation(BaseInvocation):

|

||||

"""Denoises noisy latents to decodable images"""

|

||||

@@ -223,12 +225,15 @@ class DenoiseLatentsInvocation(BaseInvocation):

|

||||

input=Input.Connection,

|

||||

ui_order=5,

|

||||

)

|

||||

ip_adapter: Optional[IPAdapterField] = InputField(

|

||||

ip_adapter: Optional[Union[IPAdapterField, list[IPAdapterField]]] = InputField(

|

||||

description=FieldDescriptions.ip_adapter, title="IP-Adapter", default=None, input=Input.Connection, ui_order=6

|

||||

)

|

||||

t2i_adapter: Union[T2IAdapterField, list[T2IAdapterField]] = InputField(

|

||||

description=FieldDescriptions.t2i_adapter, title="T2I-Adapter", default=None, input=Input.Connection, ui_order=7

|

||||

)

|

||||

latents: Optional[LatentsField] = InputField(description=FieldDescriptions.latents, input=Input.Connection)

|

||||

denoise_mask: Optional[DenoiseMaskField] = InputField(

|

||||

default=None, description=FieldDescriptions.mask, input=Input.Connection, ui_order=7

|

||||

default=None, description=FieldDescriptions.mask, input=Input.Connection, ui_order=8

|

||||

)

|

||||

|

||||

@validator("cfg_scale")

|

||||

@@ -404,52 +409,150 @@ class DenoiseLatentsInvocation(BaseInvocation):

|

||||

def prep_ip_adapter_data(

|

||||

self,

|

||||

context: InvocationContext,

|

||||

ip_adapter: Optional[IPAdapterField],

|

||||

ip_adapter: Optional[Union[IPAdapterField, list[IPAdapterField]]],

|

||||

conditioning_data: ConditioningData,

|

||||

unet: UNet2DConditionModel,

|

||||

exit_stack: ExitStack,

|

||||

) -> Optional[IPAdapterData]:

|

||||

) -> Optional[list[IPAdapterData]]:

|

||||

"""If IP-Adapter is enabled, then this function loads the requisite models, and adds the image prompt embeddings

|

||||

to the `conditioning_data` (in-place).

|

||||

"""

|

||||

if ip_adapter is None:

|

||||

return None

|

||||

|

||||

image_encoder_model_info = context.services.model_manager.get_model(

|

||||

model_name=ip_adapter.image_encoder_model.model_name,

|

||||

model_type=ModelType.CLIPVision,

|

||||

base_model=ip_adapter.image_encoder_model.base_model,

|

||||

context=context,

|

||||

)

|

||||

# ip_adapter could be a list or a single IPAdapterField. Normalize to a list here.

|

||||

if not isinstance(ip_adapter, list):

|

||||

ip_adapter = [ip_adapter]

|

||||

|

||||

ip_adapter_model: Union[IPAdapter, IPAdapterPlus] = exit_stack.enter_context(

|

||||

context.services.model_manager.get_model(

|

||||

model_name=ip_adapter.ip_adapter_model.model_name,

|

||||

model_type=ModelType.IPAdapter,

|

||||

base_model=ip_adapter.ip_adapter_model.base_model,

|

||||

if len(ip_adapter) == 0:

|

||||

return None

|

||||

|

||||

ip_adapter_data_list = []

|

||||

conditioning_data.ip_adapter_conditioning = []

|

||||

for single_ip_adapter in ip_adapter:

|

||||

ip_adapter_model: Union[IPAdapter, IPAdapterPlus] = exit_stack.enter_context(

|

||||

context.services.model_manager.get_model(

|

||||

model_name=single_ip_adapter.ip_adapter_model.model_name,

|

||||

model_type=ModelType.IPAdapter,

|

||||

base_model=single_ip_adapter.ip_adapter_model.base_model,

|

||||

context=context,

|

||||

)

|

||||

)

|

||||

|

||||

image_encoder_model_info = context.services.model_manager.get_model(

|

||||

model_name=single_ip_adapter.image_encoder_model.model_name,

|

||||

model_type=ModelType.CLIPVision,

|

||||

base_model=single_ip_adapter.image_encoder_model.base_model,

|

||||

context=context,

|

||||

)

|