Compare commits

971 Commits

release-1. ... 2.0.0-rc8

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

f5dfd5b0dc | ||

|

|

47a97f7e97 | ||

|

|

3c146ebf9e | ||

|

|

efbcbb0d91 | ||

|

|

578d8b0cb4 | ||

|

|

2b1aaf4ee7 | ||

|

|

4a7f5c7469 | ||

|

|

98fe044dee | ||

|

|

97684d78d3 | ||

|

|

57791834ab | ||

|

|

7a701506a4 | ||

|

|

3d7bc074cf | ||

|

|

70bb7f4a61 | ||

|

|

9c9cb71544 | ||

|

|

a7515624b2 | ||

|

|

9f34ddfcea | ||

|

|

c6a7be63b8 | ||

|

|

75165957c9 | ||

|

|

d60df54f69 | ||

|

|

82481a6f9c | ||

|

|

90d64388ab | ||

|

|

3444c8e6b8 | ||

|

|

d84321e080 | ||

|

|

6542556ebd | ||

|

|

70bbb670ec | ||

|

|

5f42d08945 | ||

|

|

911c99f125 | ||

|

|

2154dd2349 | ||

|

|

f3050fefce | ||

|

|

183b98384f | ||

|

|

6d475ee290 | ||

|

|

2f29b78a00 | ||

|

|

bcb6e2e506 | ||

|

|

194b875cf3 | ||

|

|

b2cd98259d | ||

|

|

4d5b208601 | ||

|

|

488890e6bb | ||

|

|

3feda31d82 | ||

|

|

c4b4a0e56e | ||

|

|

95c7742c9c | ||

|

|

44e3995425 | ||

|

|

7e6443c882 | ||

|

|

5dd9e30c2f | ||

|

|

f368f682e1 | ||

|

|

d16f0c8a8f | ||

|

|

18e667f98e | ||

|

|

a09c64a1fe | ||

|

|

4c482fe24a | ||

|

|

609983ffa8 | ||

|

|

0f9bff66bc | ||

|

|

7f31a79431 | ||

|

|

c5a0fc8f68 | ||

|

|

87cb35f5da | ||

|

|

5d911b43c0 | ||

|

|

483097f31c | ||

|

|

7a3eae4572 | ||

|

|

db349aa3ce | ||

|

|

b5c114c5b7 | ||

|

|

f34279b3e7 | ||

|

|

815addc452 | ||

|

|

d2db92236a | ||

|

|

ef20df8933 | ||

|

|

f041510659 | ||

|

|

feb405f19a | ||

|

|

2c8806341f | ||

|

|

b8e4c13746 | ||

|

|

40828df663 | ||

|

|

0a217b5f15 | ||

|

|

88a9f33422 | ||

|

|

ffcb31faef | ||

|

|

ea67040ef1 | ||

|

|

e79069a957 | ||

|

|

1ab09e7a06 | ||

|

|

7c6dbcb14a | ||

|

|

8e97bc24a4 | ||

|

|

5a88be3744 | ||

|

|

8ba5e385ec | ||

|

|

a0f4af087c | ||

|

|

958d7650dd | ||

|

|

e246e7c8b9 | ||

|

|

72834ad16c | ||

|

|

36ac66fff2 | ||

|

|

a53e1125e6 | ||

|

|

a3a8404f91 | ||

|

|

3902c467b9 | ||

|

|

40430ad29c | ||

|

|

fb6beaa347 | ||

|

|

1a0cf1320b | ||

|

|

fe28c5fbdc | ||

|

|

0c354eccaa | ||

|

|

33162355be | ||

|

|

a626533cd4 | ||

|

|

2d1c3d7b0b | ||

|

|

22b290daad | ||

|

|

2cbf1e6f4b | ||

|

|

3d075a6b5b | ||

|

|

c7c9abdba3 | ||

|

|

846fd32209 | ||

|

|

6197f81ba0 | ||

|

|

b09491ec45 | ||

|

|

8c9f2ae705 | ||

|

|

d3a4311c3d | ||

|

|

6b838c6105 | ||

|

|

779422d01b | ||

|

|

b947290801 | ||

|

|

f8bd1e9d78 | ||

|

|

38a9f72e11 | ||

|

|

ce3b1162ea | ||

|

|

06802150d9 | ||

|

|

e737ba09be | ||

|

|

6b56d45d85 | ||

|

|

5f4bca0147 | ||

|

|

98271a0267 | ||

|

|

743342816b | ||

|

|

fe00a8c05c | ||

|

|

36c9a7d39c | ||

|

|

acc5199f85 | ||

|

|

6e4dc229e2 | ||

|

|

d641d8ab6d | ||

|

|

8a7ca4a766 | ||

|

|

4254e4dd60 | ||

|

|

ba80f656b3 | ||

|

|

fb0341fdbf | ||

|

|

8366eee9c2 | ||

|

|

97ec1b156c | ||

|

|

6e54f504e7 | ||

|

|

f93963cd6b | ||

|

|

e49e83e944 | ||

|

|

dff4850a82 | ||

|

|

800f9615c2 | ||

|

|

29336387be | ||

|

|

984575b579 | ||

|

|

af8383c770 | ||

|

|

3491a1688b | ||

|

|

ac1999929f | ||

|

|

862a34a211 | ||

|

|

c78ae752bb | ||

|

|

cad237b4c8 | ||

|

|

c2e100e6bf | ||

|

|

bc9f892cab | ||

|

|

79f23ad031 | ||

|

|

52b952526e | ||

|

|

61790bb76a | ||

|

|

b1a3fd945d | ||

|

|

e19aab4a9b | ||

|

|

ce3fe6cce1 | ||

|

|

be99d5a4bd | ||

|

|

14616f4178 | ||

|

|

b512d198f0 | ||

|

|

61b19d406c | ||

|

|

d80fff70f2 | ||

|

|

d87bd29a68 | ||

|

|

d63897fc39 | ||

|

|

fdf6a542bf | ||

|

|

8926bfb237 | ||

|

|

3f53973a2a | ||

|

|

4247e75426 | ||

|

|

485fe67c92 | ||

|

|

b40bfb5116 | ||

|

|

f0fd138ffc | ||

|

|

f79874c586 | ||

|

|

61a3234f43 | ||

|

|

1f4306423a | ||

|

|

e759ed4bd6 | ||

|

|

f368ebea00 | ||

|

|

460dc897ad | ||

|

|

72702b9f16 | ||

|

|

db537f154e | ||

|

|

76ab7b1bfe | ||

|

|

d2b57029c8 | ||

|

|

1853870811 | ||

|

|

3f25ad59c3 | ||

|

|

d16d0d3726 | ||

|

|

66896dcbbe | ||

|

|

98950e67e9 | ||

|

|

af8d73a8e8 | ||

|

|

089327241e | ||

|

|

5e23ec25f9 | ||

|

|

9050069858 | ||

|

|

47408bb568 | ||

|

|

c78c39e676 | ||

|

|

636c356aaf | ||

|

|

3d2175c9f8 | ||

|

|

e2bd492764 | ||

|

|

65cfb0f312 | ||

|

|

66dac1884b | ||

|

|

ac51ec4939 | ||

|

|

b1d1063a25 | ||

|

|

0678b24ebb | ||

|

|

53b4c3cc60 | ||

|

|

d117d23469 | ||

|

|

16a06ba66e | ||

|

|

6858c14d94 | ||

|

|

bf21a0bf02 | ||

|

|

a3463abf13 | ||

|

|

880142708d | ||

|

|

e69aa94800 | ||

|

|

660641e720 | ||

|

|

cd8be1d0e9 | ||

|

|

413064cf45 | ||

|

|

40b3d07900 | ||

|

|

803a51d5ad | ||

|

|

5f22a72188 | ||

|

|

48aca04a72 | ||

|

|

665fd8aebf | ||

|

|

21da4592d1 | ||

|

|

f1d4862b13 | ||

|

|

88e3b6d310 | ||

|

|

0ab5f2159d | ||

|

|

9b4d328be0 | ||

|

|

bdbc76fcd4 | ||

|

|

110c4f70df | ||

|

|

28f06c7200 | ||

|

|

c0aa92ea13 | ||

|

|

8c751d342d | ||

|

|

883b2b6e62 | ||

|

|

9903ce60f0 | ||

|

|

50ac367a38 | ||

|

|

7cf7ba42fb | ||

|

|

a80119f826 | ||

|

|

069f91f930 | ||

|

|

6142cf25cc | ||

|

|

72dd5b18ee | ||

|

|

93001f48f7 | ||

|

|

19174949b6 | ||

|

|

a1739a73b4 | ||

|

|

60f0090786 | ||

|

|

6987c77e2a | ||

|

|

e91aad6527 | ||

|

|

0305c63a07 | ||

|

|

fff01f2068 | ||

|

|

25777cf922 | ||

|

|

2e5169c74b | ||

|

|

05c1810f11 | ||

|

|

2cf294e6de | ||

|

|

b93f04ee38 | ||

|

|

0632a3a2ea | ||

|

|

8731b498c0 | ||

|

|

f408ef2e6c | ||

|

|

f360e85d61 | ||

|

|

283a0d72c7 | ||

|

|

cd69d258aa | ||

|

|

1b5013ab72 | ||

|

|

e8bb39370c | ||

|

|

43c9288534 | ||

|

|

408e3774e0 | ||

|

|

1b0d6a9bdb | ||

|

|

810112577f | ||

|

|

fc61ddab3c | ||

|

|

d5209965bc | ||

|

|

18a9a7c159 | ||

|

|

3bc40506fd | ||

|

|

555f21cd25 | ||

|

|

d176fb07cd | ||

|

|

30de9fcfae | ||

|

|

e02bfd00a8 | ||

|

|

a28636dd4a | ||

|

|

b3ea8fe24e | ||

|

|

e33ed45cfc | ||

|

|

a1813fd23c | ||

|

|

7a6587d3dd | ||

|

|

cc0cf147c8 | ||

|

|

4cf4853ae4 | ||

|

|

90d8f0af73 | ||

|

|

c0e1fb5f71 | ||

|

|

e8e6be0ebe | ||

|

|

7830fd8ca1 | ||

|

|

4efee2a1ec | ||

|

|

e902b50bfc | ||

|

|

c08eedf264 | ||

|

|

1ee3023cdd | ||

|

|

3e8a861fc0 | ||

|

|

cae0579ba9 | ||

|

|

f06f69a81a | ||

|

|

b970ec4ce9 | ||

|

|

a22ae23e9e | ||

|

|

bb75174f4a | ||

|

|

27b238999f | ||

|

|

893bdca0a8 | ||

|

|

de47f68b61 | ||

|

|

6af9f2716e | ||

|

|

60b83ff07e | ||

|

|

38c9001e8e | ||

|

|

7335f908af | ||

|

|

96b90be5c3 | ||

|

|

06ad4387a2 | ||

|

|

a637c2418a | ||

|

|

5f8f2e63eb | ||

|

|

c6e4352c3f | ||

|

|

8c72da3643 | ||

|

|

23af057e5c | ||

|

|

bde9d6d33b | ||

|

|

c14bdcb8fd | ||

|

|

f816526d0d | ||

|

|

50d607ffea | ||

|

|

57577401bd | ||

|

|

58c63fe339 | ||

|

|

7b0cbb34d6 | ||

|

|

37c44ced1d | ||

|

|

e59307d284 | ||

|

|

2a6999d500 | ||

|

|

5ab7c68cc7 | ||

|

|

e92122f2c2 | ||

|

|

ead0e92bac | ||

|

|

682d74754c | ||

|

|

082df27ecd | ||

|

|

dc024845cf | ||

|

|

94ca13c494 | ||

|

|

1f29cb1dc1 | ||

|

|

f404c692ad | ||

|

|

6bf19cd897 | ||

|

|

2743e17588 | ||

|

|

f0b500fba8 | ||

|

|

aaec6baeca | ||

|

|

61611d7d0d | ||

|

|

73154a25d4 | ||

|

|

f4a275d1b5 | ||

|

|

c3712b013f | ||

|

|

3692f223e1 | ||

|

|

fccf809e3a | ||

|

|

23e62efdc5 | ||

|

|

6ea0a7699e | ||

|

|

1e8e5245eb | ||

|

|

4f926fc470 | ||

|

|

a0a9b12daf | ||

|

|

f3292a6953 | ||

|

|

062f3e8f31 | ||

|

|

20ffd4082c | ||

|

|

578638c258 | ||

|

|

cdc78cc6a1 | ||

|

|

c98ade9b25 | ||

|

|

fe0f5bcc11 | ||

|

|

df98178018 | ||

|

|

0b0cde2351 | ||

|

|

5b4c37e043 | ||

|

|

3c4c4d71c9 | ||

|

|

ea2b0828d8 | ||

|

|

045aa7a9a3 | ||

|

|

d478a241a8 | ||

|

|

0a4397094e | ||

|

|

0b786f61cc | ||

|

|

b68cb521ba | ||

|

|

e1f0ee819d | ||

|

|

f2c3fba28d | ||

|

|

676c772f11 | ||

|

|

016fd65f6a | ||

|

|

09bf6dd7c1 | ||

|

|

6e927acd58 | ||

|

|

383b870499 | ||

|

|

98f189cc69 | ||

|

|

dbc9134630 | ||

|

|

746162b578 | ||

|

|

0071f43b2c | ||

|

|

6d09f8c6b2 | ||

|

|

66e9fd4771 | ||

|

|

ef6609abcb | ||

|

|

2f93418095 | ||

|

|

9bcb0dff96 | ||

|

|

f84372efd8 | ||

|

|

334045b27d | ||

|

|

071f65a892 | ||

|

|

e30827e19b | ||

|

|

af98524179 | ||

|

|

e994073b5b | ||

|

|

ad292b095d | ||

|

|

d8685ad66b | ||

|

|

239f41f3e0 | ||

|

|

e0951f28cf | ||

|

|

100f2e8f57 | ||

|

|

7ade11c4f3 | ||

|

|

2faa116238 | ||

|

|

c94b8cd959 | ||

|

|

0c1a2b68bf | ||

|

|

c06dc5b85b | ||

|

|

34fa6e38e7 | ||

|

|

7b9958e59d | ||

|

|

f8775f2f2d | ||

|

|

b74354795d | ||

|

|

9461c8127d | ||

|

|

b5ed668eff | ||

|

|

c6c19f1b3c | ||

|

|

20ba51ce7d | ||

|

|

e45f46d673 | ||

|

|

b3e026aa4e | ||

|

|

89540f293b | ||

|

|

ed8ee8c690 | ||

|

|

31daf1f0d7 | ||

|

|

5b692f4720 | ||

|

|

b89aadb3c9 | ||

|

|

b9183b00a0 | ||

|

|

7b28b5c9a1 | ||

|

|

994c6b7512 | ||

|

|

42072fc15c | ||

|

|

103b30f915 | ||

|

|

1799bf5e42 | ||

|

|

17e755e062 | ||

|

|

ae963fcfdc | ||

|

|

3c732500e7 | ||

|

|

cd494c2f6c | ||

|

|

443fcd030f | ||

|

|

fefcdffb55 | ||

|

|

fa7fe382b7 | ||

|

|

d8d30ab4cb | ||

|

|

61f46cac31 | ||

|

|

df4c80f177 | ||

|

|

df95a7ddf2 | ||

|

|

fb7a9f37e4 | ||

|

|

1e3200801f | ||

|

|

b4debcc4ad | ||

|

|

622db491b2 | ||

|

|

0db8d6943c | ||

|

|

37e2418ee0 | ||

|

|

d81bc46218 | ||

|

|

40b61870f6 | ||

|

|

6cab2e0ca0 | ||

|

|

ba4892e03f | ||

|

|

2b9f8e7218 | ||

|

|

6cb6c4a911 | ||

|

|

693bed5514 | ||

|

|

fe12c6c099 | ||

|

|

67fbaa7c31 | ||

|

|

ddc68b01f7 | ||

|

|

f9feaac8c7 | ||

|

|

d1de1e357a | ||

|

|

cbac95b02a | ||

|

|

00d2d0e90e | ||

|

|

d1a2c4cd8c | ||

|

|

403d02d94f | ||

|

|

9a8fecb2cb | ||

|

|

45af30f3a4 | ||

|

|

58baf9533b | ||

|

|

f59b399f52 | ||

|

|

10f4c0c6b3 | ||

|

|

f9b272a7b9 | ||

|

|

96d7639d2a | ||

|

|

e6011631a1 | ||

|

|

54b9cb49c1 | ||

|

|

60b731e7ab | ||

|

|

ec2dc24ad7 | ||

|

|

357e1ad35f | ||

|

|

340189fa0d | ||

|

|

8d2afefe6a | ||

|

|

9faf7025c6 | ||

|

|

511924c9ab | ||

|

|

4d997145b4 | ||

|

|

9df743e2bf | ||

|

|

ccb2b7c2fb | ||

|

|

30e69f8b32 | ||

|

|

df4d1162b5 | ||

|

|

81bb44319a | ||

|

|

bb05a43787 | ||

|

|

66ff890b85 | ||

|

|

dd3fff1d3e | ||

|

|

d8d2043467 | ||

|

|

94a7b3cc07 | ||

|

|

b02ea331df | ||

|

|

9208bfd151 | ||

|

|

80579a30e5 | ||

|

|

5818528aa6 | ||

|

|

6ec7eab85a | ||

|

|

e6179af46a | ||

|

|

d15c75ecae | ||

|

|

2e438542e9 | ||

|

|

54c5665635 | ||

|

|

8a8c093795 | ||

|

|

7fa45b0540 | ||

|

|

89da371f48 | ||

|

|

10c51b4f35 | ||

|

|

ecb84ecc10 | ||

|

|

0d1aad53ef | ||

|

|

d0a71dc361 | ||

|

|

f31aa32e4d | ||

|

|

e1a6d0c138 | ||

|

|

0aa3dfbc35 | ||

|

|

5ad080f056 | ||

|

|

d4941ca833 | ||

|

|

00b002f731 | ||

|

|

82a223c5f6 | ||

|

|

654ec17000 | ||

|

|

e1f6ea2be7 | ||

|

|

5941ee620c | ||

|

|

a18d0b9ef1 | ||

|

|

eeecc33aaa | ||

|

|

dfad1dccf4 | ||

|

|

d016017b6d | ||

|

|

9b28c65e4b | ||

|

|

0a6c98e47d | ||

|

|

dedf8a3692 | ||

|

|

993158fc6a | ||

|

|

5e15f1e017 | ||

|

|

b9592ff2dc | ||

|

|

0bc6779361 | ||

|

|

2a292d5b82 | ||

|

|

4a5a228fd8 | ||

|

|

6665f4494f | ||

|

|

dbf2c63c90 | ||

|

|

bf1beaa607 | ||

|

|

7dee9efb24 | ||

|

|

9d6d728b51 | ||

|

|

1c649e4663 | ||

|

|

ea60d036d1 | ||

|

|

4d197f699e | ||

|

|

a3e07fb84a | ||

|

|

9fa1f31bf2 | ||

|

|

77db46f99e | ||

|

|

190ba78960 | ||

|

|

012c0dfdeb | ||

|

|

25d9ccc509 | ||

|

|

9cdf3aca7d | ||

|

|

49a96b90d8 | ||

|

|

aba94b85e8 | ||

|

|

aac5102cf3 | ||

|

|

c705ff5e72 | ||

|

|

b20f2bcd7e | ||

|

|

95f4ae4c1e | ||

|

|

a73017939f | ||

|

|

45673e8723 | ||

|

|

3f8a289e9b | ||

|

|

0ab5a36464 | ||

|

|

443a4ad87c | ||

|

|

585b47fdd1 | ||

|

|

5e433728b5 | ||

|

|

7708f4fb98 | ||

|

|

b86a1deb00 | ||

|

|

4951e66103 | ||

|

|

79b445b0ca | ||

|

|

a323070a4d | ||

|

|

f7662c1808 | ||

|

|

93c242c9fb | ||

|

|

c7c6cd7735 | ||

|

|

77ca83e103 | ||

|

|

0ea145d188 | ||

|

|

162285ae86 | ||

|

|

37c921dfe2 | ||

|

|

4f72cb44ad | ||

|

|

878ef2e9e0 | ||

|

|

4923118610 | ||

|

|

defafc0e8e | ||

|

|

16f6a6731d | ||

|

|

19fb66f3d5 | ||

|

|

0881d429f2 | ||

|

|

9a29d442b4 | ||

|

|

d301836fbd | ||

|

|

da95729d90 | ||

|

|

70aa674e9e | ||

|

|

737a97c898 | ||

|

|

8748370f44 | ||

|

|

839e30e4b8 | ||

|

|

e21938c12d | ||

|

|

eeff8e9033 | ||

|

|

336e16ef85 | ||

|

|

eceb7d2b54 | ||

|

|

9775a3502c | ||

|

|

f240e878e5 | ||

|

|

529fc57f2b | ||

|

|

0ca9d1f228 | ||

|

|

b656d333de | ||

|

|

7136603604 | ||

|

|

5cbea51f31 | ||

|

|

2cf8de9234 | ||

|

|

f9239af7dc | ||

|

|

97c0c4bfe8 | ||

|

|

c6be8f320d | ||

|

|

bfb2781279 | ||

|

|

5c43988862 | ||

|

|

62863ac586 | ||

|

|

99122708ca | ||

|

|

817c4a26de | ||

|

|

ecc6b75a3e | ||

|

|

bf707d9e75 | ||

|

|

db52991b9d | ||

|

|

a34d8813b6 | ||

|

|

103b3e7965 | ||

|

|

f74e52079b | ||

|

|

e3be28ecca | ||

|

|

dbfc35ece2 | ||

|

|

4185afea5c | ||

|

|

723d074442 | ||

|

|

6d2084e030 | ||

|

|

4a0354c604 | ||

|

|

424f4fe244 | ||

|

|

348b4b8be5 | ||

|

|

75f633cda8 | ||

|

|

2b3acc7b87 | ||

|

|

044e1ec2a8 | ||

|

|

10db192cc4 | ||

|

|

79ac0f3420 | ||

|

|

c41599746d | ||

|

|

c85ae00b33 | ||

|

|

7f0cc7072b | ||

|

|

1b5aae3ef3 | ||

|

|

6abf739315 | ||

|

|

db825b8138 | ||

|

|

33874bae8d | ||

|

|

afee7f9cea | ||

|

|

653144694f | ||

|

|

c33a84cdfd | ||

|

|

bd1715ff5c | ||

|

|

f8a540881c | ||

|

|

c71d8750f7 | ||

|

|

244239e5f6 | ||

|

|

711d49ed30 | ||

|

|

7996a30e3a | ||

|

|

d0832bfcaa | ||

|

|

a69ca31f34 | ||

|

|

049ea02fc7 | ||

|

|

ab39bc0bac | ||

|

|

5c6b612a72 | ||

|

|

56f155c590 | ||

|

|

bd4fc64156 | ||

|

|

41687746be | ||

|

|

171f8db742 | ||

|

|

d7e67b62f0 | ||

|

|

d1d044aa87 | ||

|

|

8b0d1e59fe | ||

|

|

edada042b3 | ||

|

|

29ab3c2028 | ||

|

|

7670ecc63f | ||

|

|

dd2aedacaf | ||

|

|

dc500946ad | ||

|

|

a48c03e0f4 | ||

|

|

f6284777e6 | ||

|

|

7647490617 | ||

|

|

dbc8fc7900 | ||

|

|

eef788981c | ||

|

|

5b22acca6d | ||

|

|

8c8b34a889 | ||

|

|

7ff94383ce | ||

|

|

0891910cac | ||

|

|

720e5cd651 | ||

|

|

1ad2a8e567 | ||

|

|

52d8bb2836 | ||

|

|

caf4ea3d89 | ||

|

|

95c088b303 | ||

|

|

a20113d5a3 | ||

|

|

0f93dadd6a | ||

|

|

f4004f660e | ||

|

|

4406fd138d | ||

|

|

fd7a72e147 | ||

|

|

3a2be621f3 | ||

|

|

5116c8178c | ||

|

|

91e826e5f4 | ||

|

|

751283a2de | ||

|

|

6266d9e8d6 | ||

|

|

c22c3dec56 | ||

|

|

138956e516 | ||

|

|

60be735e80 | ||

|

|

d0d95d3a2a | ||

|

|

b90a215000 | ||

|

|

6270e313b8 | ||

|

|

a01b7bdc40 | ||

|

|

1eee8111b9 | ||

|

|

64eca42610 | ||

|

|

21a1f681dc | ||

|

|

2882c2d0a6 | ||

|

|

fb857f05ba | ||

|

|

4ffdf73412 | ||

|

|

9130ad7e08 | ||

|

|

d66010410c | ||

|

|

6566c2298c | ||

|

|

063b4a1995 | ||

|

|

18cdb556bd | ||

|

|

8d16a69b80 | ||

|

|

a406b588b4 | ||

|

|

5454a0edc2 | ||

|

|

fe5cc79249 | ||

|

|

361cc42829 | ||

|

|

91cce6b4c3 | ||

|

|

9d88abe2ea | ||

|

|

a61e49bc97 | ||

|

|

d0df894c9f | ||

|

|

f46916d521 | ||

|

|

12755c6ef6 | ||

|

|

cc4f33bf3a | ||

|

|

d8c0d020eb | ||

|

|

1a4bed2e55 | ||

|

|

02bee4fdb1 | ||

|

|

e918cb1a8a | ||

|

|

d922b53c26 | ||

|

|

0163310a47 | ||

|

|

423d25716d | ||

|

|

1d999ba974 | ||

|

|

27d4bb5624 | ||

|

|

c78b496da6 | ||

|

|

dd2af3f93c | ||

|

|

2d65b03f05 | ||

|

|

2288412ef2 | ||

|

|

6bff985496 | ||

|

|

918ade12ed | ||

|

|

70ef83ac30 | ||

|

|

b6cf8b9052 | ||

|

|

68f62c8352 | ||

|

|

33936430d0 | ||

|

|

81b3de9c65 | ||

|

|

ad6cf6f2f7 | ||

|

|

ecef72ca39 | ||

|

|

92d1ed744a | ||

|

|

da4bf95fbc | ||

|

|

d43c5c01e3 | ||

|

|

51278c7a10 | ||

|

|

6ef7c1ad4e | ||

|

|

33cc16473f | ||

|

|

1701c2ea94 | ||

|

|

2e299a1daf | ||

|

|

0b582a40d0 | ||

|

|

1306457b27 | ||

|

|

f4a19af04f | ||

|

|

58545ba057 | ||

|

|

4fe265735a | ||

|

|

2b7f32502c | ||

|

|

3ee82d8a3b | ||

|

|

629ca09fda | ||

|

|

833de06299 | ||

|

|

68eabab2af | ||

|

|

a4f69e62d7 | ||

|

|

7db51d0171 | ||

|

|

1b3c7acce3 | ||

|

|

e6b2c15fc5 | ||

|

|

d319b8a762 | ||

|

|

db580ccefd | ||

|

|

9e99fcbc16 | ||

|

|

346c9b66ec | ||

|

|

a52870684a | ||

|

|

2455bb38a4 | ||

|

|

01e05a98de | ||

|

|

2cac4697aa | ||

|

|

c5e95adb49 | ||

|

|

91565970c2 | ||

|

|

09bd9fa47e | ||

|

|

dc30adfbb4 | ||

|

|

fa98601bfb | ||

|

|

66fe110148 | ||

|

|

bf50ab9dd6 | ||

|

|

70119602a0 | ||

|

|

28fe84177e | ||

|

|

35d3f0ed90 | ||

|

|

0433b3d625 | ||

|

|

4b560b50c2 | ||

|

|

9ad79207c2 | ||

|

|

0be2351c97 | ||

|

|

ed513397b2 | ||

|

|

c52ba1b022 | ||

|

|

d022d0dd11 | ||

|

|

a14fd69a5a | ||

|

|

0d2e6f90c8 | ||

|

|

58e3562652 | ||

|

|

b622819051 | ||

|

|

a547c33327 | ||

|

|

31b77dbaf8 | ||

|

|

4280788c18 | ||

|

|

146e75a1de | ||

|

|

8a2b849620 | ||

|

|

462a1961e4 | ||

|

|

84c10346fb | ||

|

|

2aa8393272 | ||

|

|

c83d01b369 | ||

|

|

5354122094 | ||

|

|

64444025a9 | ||

|

|

d566ee092a | ||

|

|

b983d61e93 | ||

|

|

153c93bdd4 | ||

|

|

3be1cee17c | ||

|

|

bdb0651eb2 | ||

|

|

1480ef84dc | ||

|

|

1714816fe2 | ||

|

|

b5565d2c82 | ||

|

|

4fad71cd8c | ||

|

|

d126db2413 | ||

|

|

7811d20f21 | ||

|

|

d524e5797d | ||

|

|

8ca4d6542d | ||

|

|

a51e18ea98 | ||

|

|

8bf321f6ae | ||

|

|

5d13207aa6 | ||

|

|

dae2b26765 | ||

|

|

713b2a03dc | ||

|

|

186d0f9d10 | ||

|

|

55b448818e | ||

|

|

b4babf7680 | ||

|

|

85f32752fe | ||

|

|

b757384aba | ||

|

|

a5d21d7c94 | ||

|

|

8f3520e2d5 | ||

|

|

19e4298cf9 | ||

|

|

42ffcd7204 | ||

|

|

d48299e56c | ||

|

|

2e22d9ecf1 | ||

|

|

18597ad1d9 | ||

|

|

0173d3a8fc | ||

|

|

e7658b941e | ||

|

|

a7a62d39d4 | ||

|

|

24ce56b3db | ||

|

|

3220f73f0a | ||

|

|

27a1044e65 | ||

|

|

39c56f20be | ||

|

|

f6b2ec61b2 | ||

|

|

e57d6fd1a6 | ||

|

|

1b40a31a89 | ||

|

|

4fce1063c4 | ||

|

|

f9862a3d88 | ||

|

|

81ad239197 | ||

|

|

ed38c97ed8 | ||

|

|

4f8e7356b3 | ||

|

|

c363f033e8 | ||

|

|

22c25b3615 | ||

|

|

7fe7cdc8c9 | ||

|

|

e26fee78b5 | ||

|

|

63178c6a8c | ||

|

|

6fb2f1ed6e | ||

|

|

38701a6d7b | ||

|

|

31fa92a83f | ||

|

|

0abfc3cac6 | ||

|

|

d483fcb53a | ||

|

|

c7db038c96 | ||

|

|

132d23e55d | ||

|

|

90cbc6362c | ||

|

|

f33ae1bdf4 | ||

|

|

754525be82 | ||

|

|

d9eab7f383 | ||

|

|

f695988915 | ||

|

|

5d19294810 | ||

|

|

77803cf233 | ||

|

|

4acfb76be6 | ||

|

|

fd13526454 | ||

|

|

7718af041c | ||

|

|

30dbf0e589 | ||

|

|

070795a3b4 | ||

|

|

e351d6ffe5 | ||

|

|

46464ac677 | ||

|

|

03d8eb19e0 | ||

|

|

fef632e0e1 | ||

|

|

05061a70b3 | ||

|

|

617a029ae7 | ||

|

|

7ae79b350e | ||

|

|

9a8cd9684e | ||

|

|

18899be4ae | ||

|

|

3ea505bc2d | ||

|

|

e2ae6d288d | ||

|

|

41b26e0520 | ||

|

|

b6053108c1 | ||

|

|

22365a3f12 | ||

|

|

594c0eeb8c | ||

|

|

529040708b | ||

|

|

f0e2fa781f | ||

|

|

87b7446228 | ||

|

|

8a517fdc17 | ||

|

|

373a2d9c32 | ||

|

|

1f8bc9482a | ||

|

|

b85773f332 | ||

|

|

ddc0e9b4d8 | ||

|

|

44a48d0981 | ||

|

|

8bbe7936bd | ||

|

|

9e7865704a | ||

|

|

ac02a775e4 | ||

|

|

7c485a1a4a | ||

|

|

36bc989a27 | ||

|

|

ea2ee33be8 | ||

|

|

5d67986997 | ||

|

|

7dfca3dcb5 | ||

|

|

e0de42bd03 | ||

|

|

614974a8e8 | ||

|

|

6e49c070bb | ||

|

|

08a9702b73 | ||

|

|

042a9043d1 | ||

|

|

a7ac93a899 | ||

|

|

3b2569ebdd | ||

|

|

8b9a520c5c | ||

|

|

ba03289c14 | ||

|

|

d1551b1bd4 | ||

|

|

fab9e1a423 | ||

|

|

59be6c815d | ||

|

|

ff6c11406b | ||

|

|

6f90c7daf6 | ||

|

|

38ed6393fa | ||

|

|

a5a3300fc6 | ||

|

|

0ab03a5fde | ||

|

|

800132970e | ||

|

|

555f13e469 | ||

|

|

9b5101cd8d | ||

|

|

7040995ceb | ||

|

|

5129f256a3 | ||

|

|

b0b4ccf521 | ||

|

|

ed72ff3268 | ||

|

|

89805a5239 | ||

|

|

e00397f9ca | ||

|

|

12f59e1daa | ||

|

|

cf750f62db | ||

|

|

0f28663805 | ||

|

|

f3fad22cb6 | ||

|

|

7bf0bc5208 | ||

|

|

4e5aa7e714 | ||

|

|

46a223f229 | ||

|

|

eb9f0be91a | ||

|

|

4f02b72c9c | ||

|

|

dd670200bb | ||

|

|

8f89a2456a | ||

|

|

407d70a987 | ||

|

|

f1ffb5b51b | ||

|

|

4f1664ec4f | ||

|

|

fcdd95b652 | ||

|

|

470a62dbbe | ||

|

|

2c08cf7175 | ||

|

|

539c15966d | ||

|

|

5f844807cb | ||

|

|

cb86b9ae6e | ||

|

|

3a30a8f2d2 | ||

|

|

60ed004328 | ||

|

|

dbb9132f4d | ||

|

|

5711b6d611 | ||

|

|

f1bed52530 | ||

|

|

23fb4a72bb | ||

|

|

c38b6964b4 | ||

|

|

e202441f0c | ||

|

|

d051d86df6 | ||

|

|

b49475a54f | ||

|

|

797de3257c | ||

|

|

31b22e057d | ||

|

|

078859207d | ||

|

|

a10baf5808 | ||

|

|

0eba55ddbc | ||

|

|

19fa222810 | ||

|

|

b3e3b0e861 | ||

|

|

dde2994d10 | ||

|

|

888ca39ce2 | ||

|

|

f4c95bfec0 | ||

|

|

91d3e4605e | ||

|

|

652c67c90e | ||

|

|

2114c386ad | ||

|

|

6d2b4cbda1 | ||

|

|

562831fc4b | ||

|

|

d04518e65e | ||

|

|

d598b6c79d | ||

|

|

4ec21a5423 | ||

|

|

b64c902354 | ||

|

|

2ada3288e7 | ||

|

|

91966e9ffa | ||

|

|

2ad73246f9 | ||

|

|

d3a802db69 | ||

|

|

b95908daec | ||

|

|

79add5f0b6 | ||

|

|

650ae3eb13 | ||

|

|

0e3059728c | ||

|

|

b7735b3788 | ||

|

|

39b55ae016 | ||

|

|

e82c5eba18 | ||

|

|

1c8ecacddf | ||

|

|

26dc05e0e0 | ||

|

|

49247b4aa4 | ||

|

|

eb58276a2c | ||

|

|

72a9d75330 | ||

|

|

1a7743f3c2 | ||

|

|

0b4459b707 | ||

|

|

c521ac08ee | ||

|

|

29727f3e12 | ||

|

|

51b9a1d8d3 | ||

|

|

ab131cb55e | ||

|

|

269fcf92d9 | ||

|

|

8b682ac83b | ||

|

|

36e4130f1c | ||

|

|

b978536385 | ||

|

|

0a7fe6f2d9 | ||

|

|

b12955c963 | ||

|

|

9133087850 | ||

|

|

25fa0ad1f2 | ||

|

|

df9f088eb4 | ||

|

|

b1600d4ca3 | ||

|

|

0efc3bf780 | ||

|

|

dd16fe16bb | ||

|

|

4d72644db4 | ||

|

|

7ea168227c | ||

|

|

ef8ddffe46 |

32

.dev_scripts/diff_images.py

Normal file

@@ -0,0 +1,32 @@

import argparse

import numpy as np
from PIL import Image


def read_image_int16(image_path):
    image = Image.open(image_path)
    return np.array(image).astype(np.int16)


def calc_images_mean_L1(image1_path, image2_path):
    image1 = read_image_int16(image1_path)
    image2 = read_image_int16(image2_path)
    assert image1.shape == image2.shape

    mean_L1 = np.abs(image1 - image2).mean()
    return mean_L1


def parse_args():
    parser = argparse.ArgumentParser()
    parser.add_argument('image1_path')
    parser.add_argument('image2_path')
    args = parser.parse_args()
    return args


if __name__ == '__main__':
    args = parse_args()
    mean_L1 = calc_images_mean_L1(args.image1_path, args.image2_path)
    print(mean_L1)
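A note on the `int16` cast in `read_image_int16`: pixel data loaded from an 8-bit PNG is typically unsigned 8-bit, and subtracting two such arrays wraps around modulo 256 instead of going negative. The sketch below is a pure-Python stand-in for that arithmetic, not the script's own code; the function names `mean_l1` and `mean_l1_uint8_wraparound` and the sample pixel values are made up for illustration.

```python
# Hypothetical helpers: a pure-Python stand-in for the NumPy arithmetic in
# diff_images.py, so the example runs without NumPy or Pillow installed.


def mean_l1(pixels_a, pixels_b):
    """Mean absolute difference between two equal-length pixel sequences."""
    assert len(pixels_a) == len(pixels_b)
    return sum(abs(a - b) for a, b in zip(pixels_a, pixels_b)) / len(pixels_a)


def mean_l1_uint8_wraparound(pixels_a, pixels_b):
    """Same metric, but every difference wraps modulo 256, which is what
    unsigned 8-bit subtraction does and what the int16 cast avoids."""
    assert len(pixels_a) == len(pixels_b)
    return sum((a - b) % 256 for a, b in zip(pixels_a, pixels_b)) / len(pixels_a)


# Made-up pixel values that differ by at most 2 per position.
reference = [10, 200, 30, 40]
candidate = [12, 198, 30, 41]

print(mean_l1(reference, candidate))                   # signed arithmetic
print(mean_l1_uint8_wraparound(reference, candidate))  # wrapped arithmetic
```

With signed arithmetic, nearly identical images give a small mean L1. With wrapped arithmetic, a difference of -1 becomes 255, so the metric would report a large error for a visually identical pair.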


1

.dev_scripts/sample_command.txt

Normal file

@@ -0,0 +1 @@

"a photograph of an astronaut riding a horse" -s50 -S42

19

.dev_scripts/test_regression_txt2img_dream_v1_4.sh

Normal file

@@ -0,0 +1,19 @@

# generate an image
PROMPT_FILE=".dev_scripts/sample_command.txt"
OUT_DIR="outputs/img-samples/test_regression_txt2img_v1_4"
SAMPLES_DIR=${OUT_DIR}
python scripts/dream.py \
    --from_file ${PROMPT_FILE} \
    --outdir ${OUT_DIR} \
    --sampler plms

# original output by CompVis/stable-diffusion
IMAGE1=".dev_scripts/images/v1_4_astronaut_rides_horse_plms_step50_seed42.png"
# new output
IMAGE2=`ls -A ${SAMPLES_DIR}/*.png | sort | tail -n 1`

echo ""
echo "comparing the following two images"
echo "IMAGE1: ${IMAGE1}"
echo "IMAGE2: ${IMAGE2}"
python .dev_scripts/diff_images.py ${IMAGE1} ${IMAGE2}

23

.dev_scripts/test_regression_txt2img_v1_4.sh

Normal file

@@ -0,0 +1,23 @@

# generate an image
PROMPT="a photograph of an astronaut riding a horse"
OUT_DIR="outputs/txt2img-samples/test_regression_txt2img_v1_4"
SAMPLES_DIR="outputs/txt2img-samples/test_regression_txt2img_v1_4/samples"
python scripts/orig_scripts/txt2img.py \
    --prompt "${PROMPT}" \
    --outdir ${OUT_DIR} \
    --plms \
    --ddim_steps 50 \
    --n_samples 1 \
    --n_iter 1 \
    --seed 42

# original output by CompVis/stable-diffusion
IMAGE1=".dev_scripts/images/v1_4_astronaut_rides_horse_plms_step50_seed42.png"
# new output
IMAGE2=`ls -A ${SAMPLES_DIR}/*.png | sort | tail -n 1`

echo ""
echo "comparing the following two images"
echo "IMAGE1: ${IMAGE1}"
echo "IMAGE2: ${IMAGE2}"
python .dev_scripts/diff_images.py ${IMAGE1} ${IMAGE2}

4

.gitattributes

vendored

Normal file

@@ -0,0 +1,4 @@

# Auto normalizes line endings on commit so devs don't need to change local settings.
# Only affects text files and ignores other file types.
# For more info see: https://www.aleksandrhovhannisyan.com/blog/crlf-vs-lf-normalizing-line-endings-in-git/
* text=auto

36

.github/ISSUE_TEMPLATE/bug_report.md

vendored

Normal file

@@ -0,0 +1,36 @@

---
name: Bug report
about: Create a report to help us improve
title: ''
labels: ''
assignees: ''

---

**Describe your environment**
- GPU: [cuda/amd/mps/cpu]
- VRAM: [if known]
- CPU arch: [x86/arm]
- OS: [Linux/Windows/macOS]
- Python: [Anaconda/miniconda/miniforge/pyenv/other (explain)]
- Branch: [if `git status` says anything other than "On branch main" paste it here]
- Commit: [run `git show` and paste the line that starts with "Merge" here]

**Describe the bug**
A clear and concise description of what the bug is.

**To Reproduce**
Steps to reproduce the behavior:
1. Go to '...'
2. Click on '....'
3. Scroll down to '....'
4. See error

**Expected behavior**
A clear and concise description of what you expected to happen.

**Screenshots**
If applicable, add screenshots to help explain your problem.

**Additional context**
Add any other context about the problem here.

20

.github/ISSUE_TEMPLATE/feature_request.md

vendored

Normal file

@@ -0,0 +1,20 @@

---
name: Feature request
about: Suggest an idea for this project
title: ''
labels: ''
assignees: ''

---

**Is your feature request related to a problem? Please describe.**
A clear and concise description of what the problem is. Ex. I'm always frustrated when [...]

**Describe the solution you'd like**
A clear and concise description of what you want to happen.

**Describe alternatives you've considered**
A clear and concise description of any alternative solutions or features you've considered.

**Additional context**
Add any other context or screenshots about the feature request here.

70

.github/workflows/create-caches.yml

vendored

Normal file

@@ -0,0 +1,70 @@

name: Create Caches
on:
  workflow_dispatch
jobs:
  build:
    strategy:
      matrix:
        os: [ ubuntu-latest, macos-12 ]
    name: Create Caches on ${{ matrix.os }} conda
    runs-on: ${{ matrix.os }}
    steps:
      - name: Set platform variables
        id: vars
        run: |
          if [ "$RUNNER_OS" = "macOS" ]; then
            echo "::set-output name=ENV_FILE::environment-mac.yml"
            echo "::set-output name=PYTHON_BIN::/usr/local/miniconda/envs/ldm/bin/python"
          elif [ "$RUNNER_OS" = "Linux" ]; then
            echo "::set-output name=ENV_FILE::environment.yml"
            echo "::set-output name=PYTHON_BIN::/usr/share/miniconda/envs/ldm/bin/python"
          fi
      - name: Checkout sources
        uses: actions/checkout@v3
      - name: Use Cached Stable Diffusion v1.4 Model
        id: cache-sd-v1-4
        uses: actions/cache@v3
        env:
          cache-name: cache-sd-v1-4
        with:
          path: models/ldm/stable-diffusion-v1/model.ckpt
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}
      - name: Download Stable Diffusion v1.4 Model
        if: ${{ steps.cache-sd-v1-4.outputs.cache-hit != 'true' }}
        run: |
          if [ ! -e models/ldm/stable-diffusion-v1 ]; then
            mkdir -p models/ldm/stable-diffusion-v1
          fi
          if [ ! -e models/ldm/stable-diffusion-v1/model.ckpt ]; then
            curl -o models/ldm/stable-diffusion-v1/model.ckpt ${{ secrets.SD_V1_4_URL }}
          fi
      - name: Use Cached Dependencies
        id: cache-conda-env-ldm
        uses: actions/cache@v3
        env:
          cache-name: cache-conda-env-ldm
        with:
          path: ~/.conda/envs/ldm
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}-${{ runner.os }}-${{ hashFiles(steps.vars.outputs.ENV_FILE) }}
      - name: Install Dependencies
        if: ${{ steps.cache-conda-env-ldm.outputs.cache-hit != 'true' }}
        run: |
          conda env create -f ${{ steps.vars.outputs.ENV_FILE }}
      - name: Use Cached Huggingface and Torch models
        id: cache-huggingface-torch
        uses: actions/cache@v3
        env:
          cache-name: cache-huggingface-torch
        with:
          path: ~/.cache
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}-${{ hashFiles('scripts/preload_models.py') }}
      - name: Download Huggingface and Torch models
        if: ${{ steps.cache-huggingface-torch.outputs.cache-hit != 'true' }}
        run: |
          ${{ steps.vars.outputs.PYTHON_BIN }} scripts/preload_models.py

28

.github/workflows/mkdocs-flow.yml

vendored

Normal file

@@ -0,0 +1,28 @@

name: Deploy
on:
  push:
    branches:
      - main
  pull_request:
    branches:
      - main
jobs:
  build:
    name: Deploy docs to GitHub Pages
    runs-on: ubuntu-latest
    steps:
      - name: Checkout
        uses: actions/checkout@v3
        with:
          fetch-depth: 0
      - name: Build
        uses: Tiryoh/actions-mkdocs@v0
        with:
          mkdocs_version: 'latest' # option
          requirements: '/requirements-mkdocs.txt' # option
          configfile: '/mkdocs.yml' # option
      - name: Deploy
        uses: peaceiris/actions-gh-pages@v3
        with:
          github_token: ${{ secrets.GITHUB_TOKEN }}
          publish_dir: ./site

97

.github/workflows/test-invoke-conda.yml

vendored

Normal file

@@ -0,0 +1,97 @@

name: Test Invoke with Conda
on:
  push:
    branches:
      - 'main'
      - 'development'
jobs:
  os_matrix:
    strategy:
      matrix:
        os: [ ubuntu-latest, macos-12 ]
    name: Test invoke.py on ${{ matrix.os }} with conda
    runs-on: ${{ matrix.os }}
    steps:
      - run: |
          echo The PR was merged
      - name: Set platform variables
        id: vars
        run: |
          # Note, can't "activate" via github action; specifying the env's python has the same effect
          if [ "$RUNNER_OS" = "macOS" ]; then
            echo "::set-output name=ENV_FILE::environment-mac.yml"
            echo "::set-output name=PYTHON_BIN::/usr/local/miniconda/envs/ldm/bin/python"
          elif [ "$RUNNER_OS" = "Linux" ]; then
            echo "::set-output name=ENV_FILE::environment.yml"
            echo "::set-output name=PYTHON_BIN::/usr/share/miniconda/envs/ldm/bin/python"
          fi
      - name: Checkout sources
        uses: actions/checkout@v3
      - name: Use Cached Stable Diffusion v1.4 Model
        id: cache-sd-v1-4
        uses: actions/cache@v3
        env:
          cache-name: cache-sd-v1-4
        with:
          path: models/ldm/stable-diffusion-v1/model.ckpt
          key: ${{ env.cache-name }}
          restore-keys: |
            ${{ env.cache-name }}
      - name: Download Stable Diffusion v1.4 Model
        if: ${{ steps.cache-sd-v1-4.outputs.cache-hit != 'true' }}
        run: |
          if [ ! -e models/ldm/stable-diffusion-v1 ]; then
            mkdir -p models/ldm/stable-diffusion-v1
          fi
          if [ ! -e models/ldm/stable-diffusion-v1/model.ckpt ]; then
            curl -o models/ldm/stable-diffusion-v1/model.ckpt ${{ secrets.SD_V1_4_URL }}
          fi
      - name: Use Cached Dependencies
        id: cache-conda-env-ldm
        uses: actions/cache@v3
        env:
          cache-name: cache-conda-env-ldm
        with:

path: ~/.conda/envs/ldm

|

||||

key: ${{ env.cache-name }}

|

||||

restore-keys: |

|

||||

${{ env.cache-name }}-${{ runner.os }}-${{ hashFiles(steps.vars.outputs.ENV_FILE) }}

|

||||

- name: Install Dependencies

|

||||

if: ${{ steps.cache-conda-env-ldm.outputs.cache-hit != 'true' }}

|

||||

run: |

|

||||

conda env create -f ${{ steps.vars.outputs.ENV_FILE }}

|

||||

- name: Use Cached Huggingface and Torch models

|

||||

id: cache-hugginface-torch

|

||||

uses: actions/cache@v3

|

||||

env:

|

||||

cache-name: cache-hugginface-torch

|

||||

with:

|

||||

path: ~/.cache

|

||||

key: ${{ env.cache-name }}

|

||||

restore-keys: |

|

||||

${{ env.cache-name }}-${{ hashFiles('scripts/preload_models.py') }}

|

||||

- name: Download Huggingface and Torch models

|

||||

if: ${{ steps.cache-hugginface-torch.outputs.cache-hit != 'true' }}

|

||||

run: |

|

||||

${{ steps.vars.outputs.PYTHON_BIN }} scripts/preload_models.py

|

||||

# - name: Run tmate

|

||||

# uses: mxschmitt/action-tmate@v3

|

||||

# timeout-minutes: 30

|

||||

- name: Run the tests

|

||||

run: |

|

||||

# Note, can't "activate" via github action; specifying the env's python has the same effect

|

||||

if [ $(uname) = "Darwin" ]; then

|

||||

export PYTORCH_ENABLE_MPS_FALLBACK=1

|

||||

fi

|

||||

# Utterly hacky, but I don't know how else to do this

|

||||

if [[ ${{ github.ref }} == 'refs/heads/main' ]]; then

|

||||

time ${{ steps.vars.outputs.PYTHON_BIN }} scripts/invoke.py --from_file tests/preflight_prompts.txt

|

||||

elif [[ ${{ github.ref }} == 'refs/heads/development' ]]; then

|

||||

time ${{ steps.vars.outputs.PYTHON_BIN }} scripts/invoke.py --from_file tests/dev_prompts.txt

|

||||

fi

|

||||

mkdir -p outputs/img-samples

|

||||

- name: Archive results

|

||||

uses: actions/upload-artifact@v3

|

||||

with:

|

||||

name: results

|

||||

path: outputs/img-samples

|

||||

28

.gitignore

vendored

@@ -1,6 +1,13 @@

|

||||

# ignore default image save location and model symbolic link

|

||||

outputs/

|

||||

models/ldm/stable-diffusion-v1/model.ckpt

|

||||

ldm/dream/restoration/codeformer/weights

|

||||

|

||||

# ignore the Anaconda/Miniconda installer used while building Docker image

|

||||

anaconda.sh

|

||||

|

||||

# ignore a directory which serves as a place for initial images

|

||||

inputs/

|

||||

|

||||

# Byte-compiled / optimized / DLL files

|

||||

__pycache__/

|

||||

@@ -170,6 +177,25 @@ cython_debug/

|

||||

#.idea/

|

||||

|

||||

src

|

||||

logs/

|

||||

**/__pycache__/

|

||||

outputs

|

||||

|

||||

# Logs and associated folders

|

||||

# created from generated embeddings.

|

||||

logs

|

||||

testtube

|

||||

checkpoints

|

||||

# If it's a Mac

|

||||

.DS_Store

|

||||

|

||||

# Let the frontend manage its own gitignore

|

||||

!frontend/*

|

||||

|

||||

# Scratch folder

|

||||

.scratch/

|

||||

.vscode/

|

||||

gfpgan/

|

||||

models/ldm/stable-diffusion-v1/model.sha256

|

||||

|

||||

# GFPGAN model files

|

||||

gfpgan/

|

||||

|

||||

13

.gitmodules

vendored

@@ -1,13 +0,0 @@

|

||||

[submodule "taming-transformers"]

|

||||

path = src/taming-transformers

|

||||

url = https://github.com/CompVis/taming-transformers.git

|

||||

ignore = dirty

|

||||

[submodule "clip"]

|

||||

path = src/clip

|

||||

url = https://github.com/openai/CLIP.git

|

||||

ignore = dirty

|

||||

[submodule "k-diffusion"]

|

||||

path = src/k-diffusion

|

||||

url = https://github.com/lstein/k-diffusion.git

|

||||

ignore = dirty

|

||||

|

||||

13

.prettierrc.yaml

Normal file

@@ -0,0 +1,13 @@

|

||||

endOfLine: lf

|

||||

tabWidth: 2

|

||||

useTabs: false

|

||||

singleQuote: true

|

||||

quoteProps: as-needed

|

||||

embeddedLanguageFormatting: auto

|

||||

overrides:

|

||||

- files: '*.md'

|

||||

options:

|

||||

proseWrap: always

|

||||

printWidth: 80

|

||||

parser: markdown

|

||||

cursorOffset: -1

|

||||

@@ -1,210 +0,0 @@

|

||||

# Original README from CompVis/stable-diffusion

|

||||

*Stable Diffusion was made possible thanks to a collaboration with [Stability AI](https://stability.ai/) and [Runway](https://runwayml.com/) and builds upon our previous work:*

|

||||

|

||||

[**High-Resolution Image Synthesis with Latent Diffusion Models**](https://ommer-lab.com/research/latent-diffusion-models/)<br/>

|

||||

[Robin Rombach](https://github.com/rromb)\*,

|

||||

[Andreas Blattmann](https://github.com/ablattmann)\*,

|

||||

[Dominik Lorenz](https://github.com/qp-qp)\,

|

||||

[Patrick Esser](https://github.com/pesser),

|

||||

[Björn Ommer](https://hci.iwr.uni-heidelberg.de/Staff/bommer)<br/>

|

||||

|

||||

**CVPR '22 Oral**

|

||||

|

||||

which is available on [GitHub](https://github.com/CompVis/latent-diffusion). PDF at [arXiv](https://arxiv.org/abs/2112.10752). Please also visit our [Project page](https://ommer-lab.com/research/latent-diffusion-models/).

|

||||

|

||||

|

||||

[Stable Diffusion](#stable-diffusion-v1) is a latent text-to-image diffusion

|

||||

model.

|

||||

Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.

|

||||

Similar to Google's [Imagen](https://arxiv.org/abs/2205.11487),

|

||||

this model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts.

|

||||

With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 10GB VRAM.

|

||||

See [this section](#stable-diffusion-v1) below and the [model card](https://huggingface.co/CompVis/stable-diffusion).

|

||||

|

||||

|

||||

## Requirements

|

||||

|

||||

A suitable [conda](https://conda.io/) environment named `ldm` can be created

|

||||

and activated with:

|

||||

|

||||

```

|

||||

conda env create -f environment.yaml

|

||||

conda activate ldm

|

||||

```

|

||||

|

||||

You can also update an existing [latent diffusion](https://github.com/CompVis/latent-diffusion) environment by running

|

||||

|

||||

```

|

||||

conda install pytorch torchvision -c pytorch

|

||||

pip install transformers==4.19.2

|

||||

pip install -e .

|

||||

```

|

||||

|

||||

## Stable Diffusion v1

|

||||

|

||||

Stable Diffusion v1 refers to a specific configuration of the model

|

||||

architecture that uses a downsampling-factor 8 autoencoder with an 860M UNet

|

||||

and CLIP ViT-L/14 text encoder for the diffusion model. The model was pretrained on 256x256 images and

|

||||

then finetuned on 512x512 images.
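As a rough illustration of what a downsampling factor of 8 means for tensor sizes, the sketch below computes the latent shape for a given image size. The helper name `latent_shape` is hypothetical, the default of 4 latent channels is an assumption based on the `--C` and `--f` options of the sampling script rather than on this paragraph, and rejecting sizes that are not multiples of the factor is a choice made for this sketch only.

```python
def latent_shape(height, width, channels=4, factor=8):
    """Return (C, H // f, W // f), the latent shape for one image.

    channels=4 is an assumed default; factor=8 is the downsampling
    factor named in the text above.
    """
    if height % factor or width % factor:
        # Sketch-only restriction: keep the arithmetic exact.
        raise ValueError("height and width must be multiples of the factor")
    return (channels, height // factor, width // factor)


# A 512x512 image maps to a 64x64 latent grid per channel.
print(latent_shape(512, 512))
```

Under these assumptions, the diffusion model works on 64 times fewer spatial positions than the pixel image has (a factor of 8 along each axis).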

|

||||

|

||||

*Note: Stable Diffusion v1 is a general text-to-image diffusion model and therefore mirrors biases and (mis-)conceptions that are present

|

||||

in its training data.

|

||||

Details on the training procedure and data, as well as the intended use of the model can be found in the corresponding [model card](https://huggingface.co/CompVis/stable-diffusion).

|

||||

Research into the safe deployment of general text-to-image models is an ongoing effort. To prevent misuse and harm, we currently provide access to the checkpoints only for [academic research purposes upon request](https://stability.ai/academia-access-form).

|

||||

**This is an experiment in safe and community-driven publication of a capable and general text-to-image model. We are working on a public release with a more permissive license that also incorporates ethical considerations.***

|

||||

|

||||

[Request access to Stable Diffusion v1 checkpoints for academic research](https://stability.ai/academia-access-form)

|

||||

|

||||

### Weights

|

||||

|

||||

We currently provide three checkpoints, `sd-v1-1.ckpt`, `sd-v1-2.ckpt` and `sd-v1-3.ckpt`,

|

||||

which were trained as follows,

|

||||

|

||||

- `sd-v1-1.ckpt`: 237k steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en).

|

||||

194k steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

|

||||

- `sd-v1-2.ckpt`: Resumed from `sd-v1-1.ckpt`.

|

||||

515k steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en,

|

||||

filtered to images with an original size `>= 512x512`, estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`. The watermark estimate is from the LAION-5B metadata, the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor)).

|

||||

- `sd-v1-3.ckpt`: Resumed from `sd-v1-2.ckpt`. 195k steps at resolution `512x512` on "laion-improved-aesthetics" and 10\% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

|

||||

|

||||

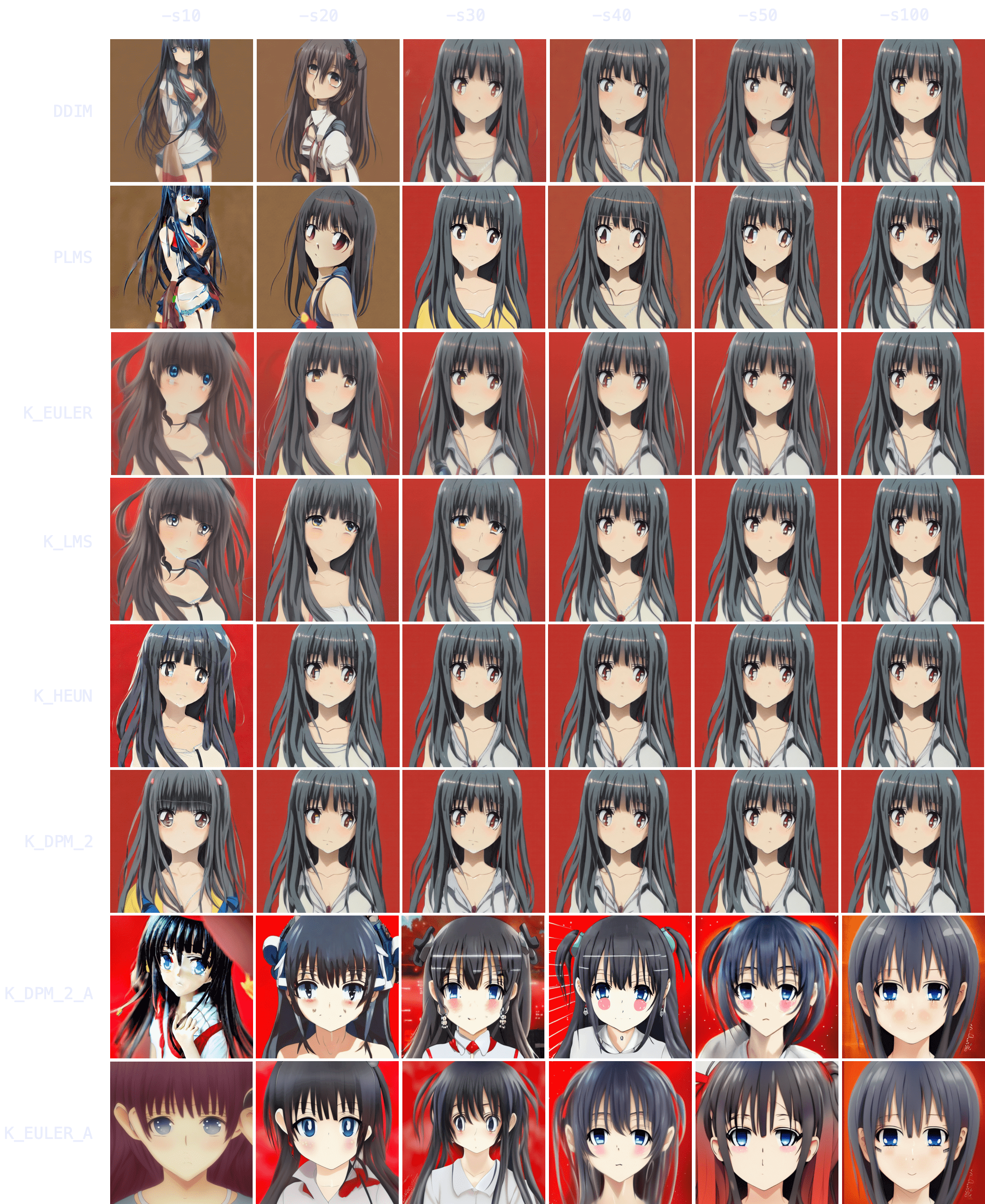

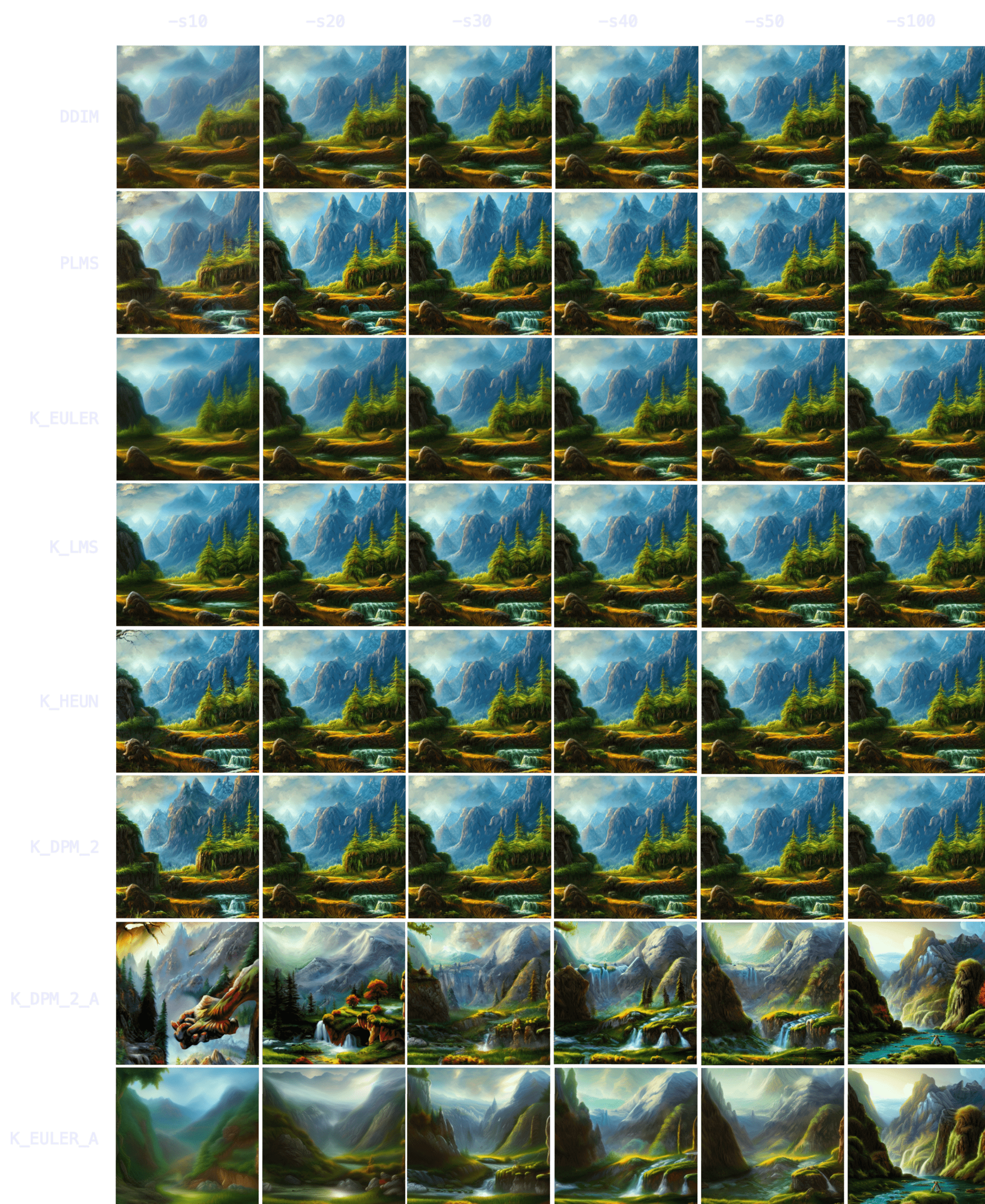

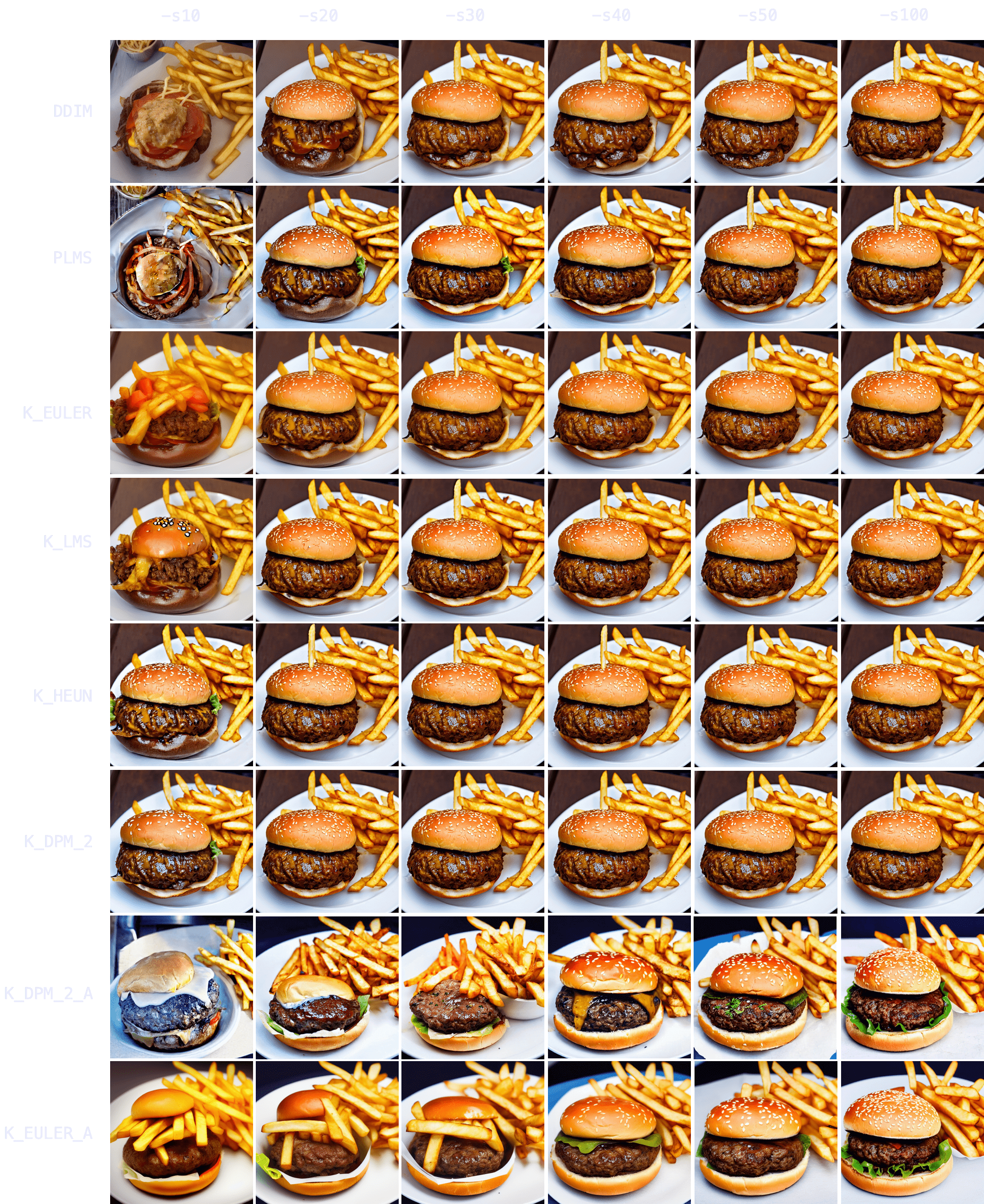

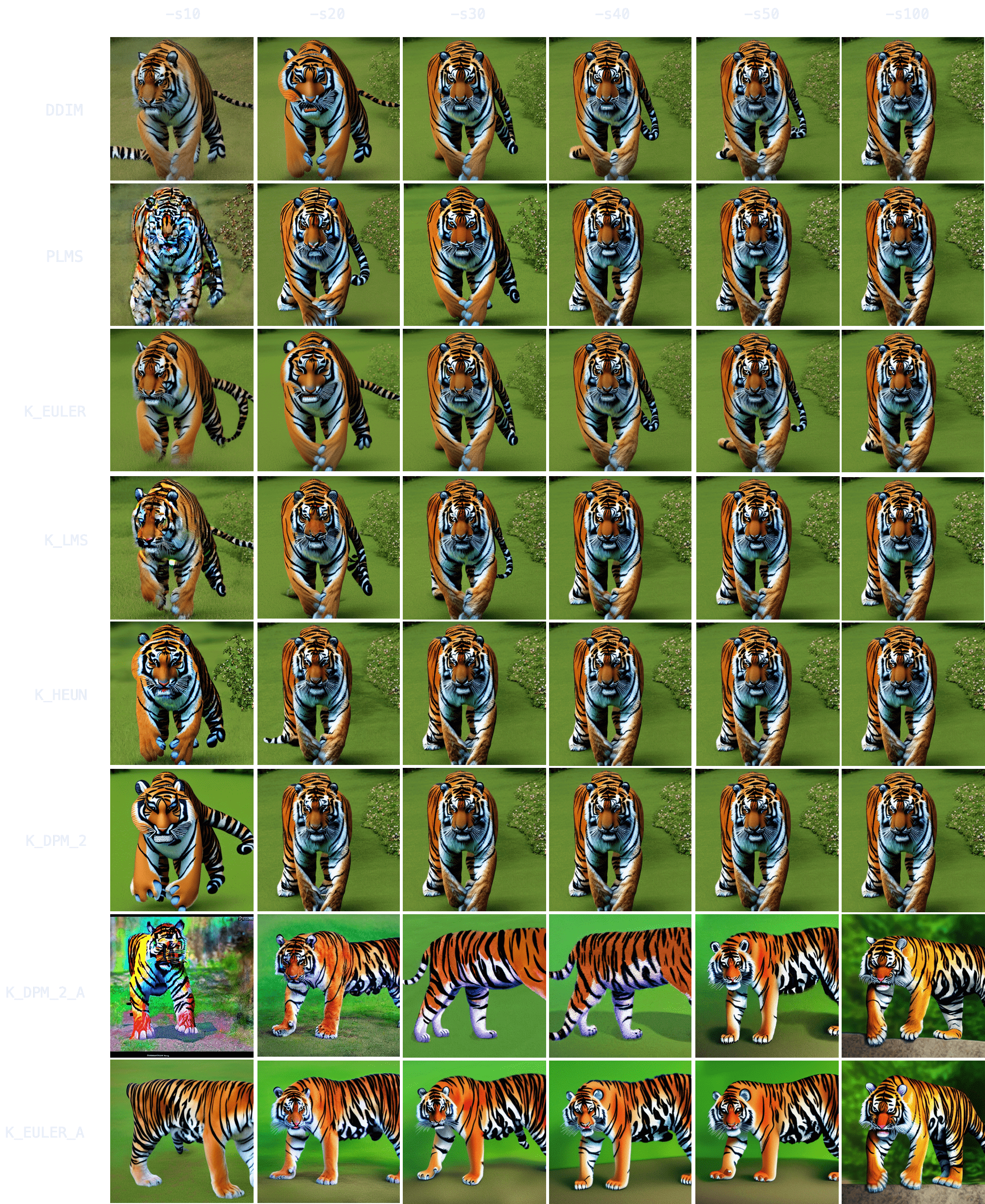

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

|

||||

5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling

|

||||

steps show the relative improvements of the checkpoints:

|

||||

|

||||

|

||||

|

||||

|

||||

### Text-to-Image with Stable Diffusion

|

||||

|

||||

|

||||

|

||||

Stable Diffusion is a latent diffusion model conditioned on the (non-pooled) text embeddings of a CLIP ViT-L/14 text encoder.

|

||||

|

||||

|

||||

#### Sampling Script

|

||||

|

||||

After [obtaining the weights](#weights), link them

|

||||

```

|

||||

mkdir -p models/ldm/stable-diffusion-v1/

|

||||

ln -s <path/to/model.ckpt> models/ldm/stable-diffusion-v1/model.ckpt

|

||||

```

|

||||

and sample with

|

||||

```

|

||||

python scripts/txt2img.py --prompt "a photograph of an astronaut riding a horse" --plms

|

||||

```

|

||||

|

||||

By default, this uses a guidance scale of `--scale 7.5`, [Katherine Crowson's implementation](https://github.com/CompVis/latent-diffusion/pull/51) of the [PLMS](https://arxiv.org/abs/2202.09778) sampler,

|

||||

and renders images of size 512x512 (which it was trained on) in 50 steps. All supported arguments are listed below (type `python scripts/txt2img.py --help`).

|

||||

|

||||

```commandline

|

||||

usage: txt2img.py [-h] [--prompt [PROMPT]] [--outdir [OUTDIR]] [--skip_grid] [--skip_save] [--ddim_steps DDIM_STEPS] [--plms] [--laion400m] [--fixed_code] [--ddim_eta DDIM_ETA] [--n_iter N_ITER] [--H H] [--W W] [--C C] [--f F] [--n_samples N_SAMPLES] [--n_rows N_ROWS]

|

||||

[--scale SCALE] [--from-file FROM_FILE] [--config CONFIG] [--ckpt CKPT] [--seed SEED] [--precision {full,autocast}]

|

||||

|

||||

optional arguments:

|

||||

-h, --help show this help message and exit

|

||||

--prompt [PROMPT] the prompt to render

|

||||

--outdir [OUTDIR] dir to write results to

|

||||

--skip_grid do not save a grid, only individual samples. Helpful when evaluating lots of samples

|

||||

--skip_save do not save individual samples. For speed measurements.

|

||||

--ddim_steps DDIM_STEPS

|

||||

number of ddim sampling steps

|

||||

--plms use plms sampling

|

||||

--laion400m uses the LAION400M model

|

||||

--fixed_code if enabled, uses the same starting code across samples

|

||||

--ddim_eta DDIM_ETA ddim eta (eta=0.0 corresponds to deterministic sampling

|

||||

--n_iter N_ITER sample this often

|

||||

--H H image height, in pixel space

|

||||

--W W image width, in pixel space

|

||||

--C C latent channels

|

||||

--f F downsampling factor

|

||||

--n_samples N_SAMPLES

|

||||

how many samples to produce for each given prompt. A.k.a. batch size

|

||||

(note that the seeds for each image in the batch will be unavailable)

|

||||

--n_rows N_ROWS rows in the grid (default: n_samples)

|

||||

--scale SCALE unconditional guidance scale: eps = eps(x, empty) + scale * (eps(x, cond) - eps(x, empty))

|

||||

--from-file FROM_FILE

|

||||

if specified, load prompts from this file

|

||||

--config CONFIG path to config which constructs model

|

||||

--ckpt CKPT path to checkpoint of model

|

||||

--seed SEED the seed (for reproducible sampling)

|

||||

--precision {full,autocast}

|

||||

evaluate at this precision

|

||||

|

||||

```
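The `--scale` help text above gives the guidance formula `eps = eps(x, empty) + scale * (eps(x, cond) - eps(x, empty))`. A minimal sketch of that arithmetic on plain Python lists follows; the function name `guided_eps` and the toy numbers are hypothetical, and the real script applies the same combination to model output tensors rather than lists.

```python
def guided_eps(eps_uncond, eps_cond, scale):
    """Combine unconditional and conditional noise predictions.

    Implements eps = eps_uncond + scale * (eps_cond - eps_uncond),
    element by element.
    """
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


# Toy predictions standing in for the model outputs.
eps_empty = [0.0, 1.0, -2.0]   # prediction for the empty prompt
eps_text = [1.0, 1.0, 0.0]     # prediction for the text prompt

print(guided_eps(eps_empty, eps_text, 1.0))   # scale 1 returns the conditional prediction
print(guided_eps(eps_empty, eps_text, 7.5))   # larger scales push further away from the unconditional one
```

With `scale = 0` the result is the unconditional prediction, and with `scale = 1` it is the conditional one; values above 1 extrapolate past the conditional prediction.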

|

||||

Note: The inference config for all v1 versions is designed to be used with EMA-only checkpoints.

|

||||

For this reason `use_ema=False` is set in the configuration, otherwise the code will try to switch from

|

||||

non-EMA to EMA weights. If you want to examine the effect of EMA vs no EMA, we provide "full" checkpoints

|

||||

which contain both types of weights. For these, `use_ema=False` will load and use the non-EMA weights.

|

||||

|

||||

|

||||

#### Diffusers Integration

|

||||

|

||||

Another way to download and sample Stable Diffusion is by using the [diffusers library](https://github.com/huggingface/diffusers/tree/main#new--stable-diffusion-is-now-fully-compatible-with-diffusers)

|

||||

```py

|

||||

# make sure you're logged in with `huggingface-cli login`

|

||||

from torch import autocast

|

||||

from diffusers import StableDiffusionPipeline, LMSDiscreteScheduler

|

||||

|

||||

pipe = StableDiffusionPipeline.from_pretrained(

|

||||

"CompVis/stable-diffusion-v1-3-diffusers",

|

||||

use_auth_token=True

|

||||

)

|

||||

|

||||

prompt = "a photo of an astronaut riding a horse on mars"

|

||||

with autocast("cuda"):

|

||||

image = pipe(prompt)["sample"][0]

|

||||

|

||||

image.save("astronaut_rides_horse.png")

|

||||

```

|

||||

|

||||

|

||||

|

||||

### Image Modification with Stable Diffusion

|

||||

|

||||

By using a diffusion-denoising mechanism as first proposed by [SDEdit](https://arxiv.org/abs/2108.01073), the model can be used for different

|

||||

tasks such as text-guided image-to-image translation and upscaling. Similar to the txt2img sampling script,

|

||||

we provide a script to perform image modification with Stable Diffusion.

|

||||

|

||||

The following describes an example where a rough sketch made in [Pinta](https://www.pinta-project.com/) is converted into a detailed artwork.

|

||||

```

|

||||

python scripts/img2img.py --prompt "A fantasy landscape, trending on artstation" --init-img <path-to-img.jpg> --strength 0.8

|

||||

```

|

||||

Here, strength is a value between 0.0 and 1.0 that controls the amount of noise added to the input image.

|

||||

Values that approach 1.0 allow for lots of variations but will also produce images that are not semantically consistent with the input. See the following example.

|

||||

|

||||

**Input**

|

||||

|

||||

|

||||

|

||||

**Outputs**

|

||||

|

||||

|

||||

|

||||

|

||||

This procedure can, for example, also be used to upscale samples from the base model.
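One way to picture the `--strength` parameter described above is as the fraction of the sampling schedule that gets applied to the input image. The sketch below assumes the script converts strength into a step count by truncating `strength * steps`; that mapping, the helper name `encode_steps`, and the default of 50 steps are assumptions made for illustration, not something stated in this text.

```python
def encode_steps(strength, ddim_steps=50):
    """Return how many of the sampling steps are used to noise the input.

    Assumed mapping: int(strength * ddim_steps). A strength of 0.0 leaves
    the input untouched; 1.0 uses the full schedule.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    return int(strength * ddim_steps)


for s in (0.0, 0.5, 1.0):
    print(s, encode_steps(s))
```

Under this assumption, higher strength values leave less of the original image structure for the denoising pass to preserve, which matches the loss of semantic consistency noted above.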

|

||||

|

||||

|

||||

## Comments

|

||||

|

||||

- Our codebase for the diffusion models builds heavily on [OpenAI's ADM codebase](https://github.com/openai/guided-diffusion)

|

||||

and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch).

|

||||

Thanks for open-sourcing!

|

||||

|

||||

- The implementation of the transformer encoder is from [x-transformers](https://github.com/lucidrains/x-transformers) by [lucidrains](https://github.com/lucidrains?tab=repositories).

|

||||

|

||||

|

||||

## BibTeX

|

||||

|

||||

```

|

||||

@misc{rombach2021highresolution,

|

||||

title={High-Resolution Image Synthesis with Latent Diffusion Models},

|

||||

author={Robin Rombach and Andreas Blattmann and Dominik Lorenz and Patrick Esser and Björn Ommer},

|

||||

year={2021},

|

||||

eprint={2112.10752},

|

||||

archivePrefix={arXiv},

|

||||

primaryClass={cs.CV}

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

|

||||

549

README.md

@@ -1,461 +1,200 @@

|

||||

# Stable Diffusion Dream Script

|

||||

<div align="center">

|

||||

|

||||

This is a fork of CompVis/stable-diffusion, the wonderful open source

|

||||

text-to-image generator.

|

||||

# InvokeAI: A Stable Diffusion Toolkit

|

||||

|

||||

The original has been modified in several ways:

|

||||

_Note: This fork is rapidly evolving. Please use the

|

||||

[Issues](https://github.com/invoke-ai/InvokeAI/issues) tab to

|

||||

report bugs and make feature requests. Be sure to use the provided

|

||||

templates. They will help diagnose issues faster._

|

||||

|

||||

## Interactive command-line interface similar to the Discord bot

|

||||

_This repository was formerly known as lstein/stable-diffusion_

|

||||

|

||||

The *dream.py* script, located in scripts/dream.py,

|

||||

provides an interactive interface to image generation similar to

|

||||

the "dream mothership" bot that Stability AI provided on its Discord

|

||||

server. Unlike the txt2img.py and img2img.py scripts provided in the

|

||||

original CompVis/stable-diffusion source code repository, the

|

||||

time-consuming initialization of the AI model

|

||||

only happens once. After that, image generation

|

||||

from the command-line interface is very fast.

|

||||

# **Table of Contents**

|

||||

|

||||

The script uses the readline library to allow for in-line editing,

|

||||

command history (up and down arrows), autocompletion, and more. To help

|

||||

keep track of which prompts generated which images, the script writes a

|

||||

log file of image names and prompts to the selected output directory.

|

||||

In addition, as of version 1.02, it also writes the prompt into the PNG

|

||||

file's metadata where it can be retrieved using scripts/images2prompt.py

|

||||

|

||||

|

||||