mirror of

https://github.com/invoke-ai/InvokeAI.git

synced 2026-01-15 08:28:14 -05:00

Compare commits

316 Commits

test/node-

...

releases/v

| Author | SHA1 | Date | |
|---|---|---|---|
| | cfe8708996 | | |
| | 4a0a1c30db | | |
| | 3432fd72f8 | | |
| | 05a43c41f9 | | |
| | bb48617101 | | |
| | aa2f68f608 | | |
| | fbccce7573 | | |
| | a35087ee6e | | |
| | 03e463dc89 | | |
| | d467e138a4 | | |
| | ba4aaea45b | | |
| | 53eb23b8b6 | | |
| | 8b969053e7 | | |
| | 98a076260b | | |
| | b3f4f28d76 | | |
| | acee4bd282 | | |
| | 50d254fdb7 | | |
| | 0cfc1c5f86 | | |
| | 1419977e89 | | |
| | a953944894 | | |
| | a4cdaa245e | | |
| | 105a4234b0 | | |
| | 34c563060f | | |
| | d45c47db81 | | |
| | c771a4027f | | |
| | 3fd27b1aa9 | | |
| | d59e534cad | | |
| | 0c97a1e7e7 | | |
| | c8b109f52e | | |
| | a2613948d8 | | |
| | f8392b2f78 | | |
| | 358116bc22 | | |
| | 1e3590111d | | |
| | 063b800280 | | |
| | 3935bf92c8 | | |
| | 066e09b517 | | |
| | 869b4a8d49 | | |
| | 13919ff300 | | |
| | 634e5652ef | | |
| | 9bdc718df5 | | |
| | 73ca8ccdb3 | | |
| | f37ffda966 | | |
| | 5a9777d443 | | |
| | 8072c05ee0 | | |
| | 75ff4f4ca3 | | |
| | 30df123221 | | |
| | 06193ddbe8 | | |
| | ce5122f87c | | |
| | 43ebd68313 | | |
| | ec19fcafb1 | | |
| | 6fcc7d4c4b | | |
| | 912087e4dc | | |
| | 593fb95213 | | |
| | 6d821b32d3 | | |
| | 297f96c16b | | |
| | 0e53b27655 | | |
| | 35ae9f6e71 | | |
| | a1d9e6b871 | | |
| | f05379f965 | | |
| | e34e6d6e80 | | |
| | 86cb53342a | | |
| | e3de996525 | | |
| | 25a71a1791 | | |
| | d16583ad1c | | |
| | 46db1dd18f | | |
| | 4c9344b0ee | | |
| | cba31efd78 | | |
| | 4d01b5c0f2 | | |
| | e02af8f518 | | |
| | c485cf568b | | |
| | 51451cbf21 | | |
| | 0363a06963 | | |
| | cc280cbef1 | | |
| | 7544eadd48 | | |
| | 7d683b4db6 | | |
| | 60b3c6a201 | | |
| | 88c8cb61f0 | | |
| | 43fbac26df | | |
| | 627444e17c | | |
| | 5601858f4f | | |
| | b152fbf72f | | |
| | f95111772a | | |
| | 14ce7cf09c | | |
| | 28a1a6939f | | |
| | 6d2b4013f8 | | |
| | ca7a7b57bb | | |
| | c5d0e65a24 | | |
| | 6cc7b55ec5 | | |
| | 883e9973ec | | |
| | 9e7d829906 | | |
| | 456a0a59e0 | | |
| | 4f2bf7e7e8 | | |
| | 77e93888cf | | |
| | fa54974bff | | |
| | 7ac99d6bc3 | | |
| | aa82f9360c | | |
| | 5aefa49d7d | | |
| | b6e9cd4fe2 | | |
| | 6d1057c560 | | |
| | b4790002c7 | | |
| | e02700a782 | | |
| | 83ce8ef1ec | | |
| | 19e487b5ee | | |
| | aa4b56baf2 | | |
| | d3a2be69f1 | | |
| | 02c087ee37 | | |
| | cab8d9bb20 | | |
| | 28e6a7139b | | |
| | 1625854eaf | | |
| | 3940371851 | | |
| | 34213acd39 | | |
| | d3d42d4278 | | |
| | 9638c321f5 | | |
| | f87b042162 | | |
| | 183e2c3ee0 | | |
| | 098d506b95 | | |
| | 7aa33c352b | | |
| | bf62553150 | | |
| | 2b08d9e53b | | |
| | 8954953eca | | |
| | eb2fcbe28a | | |
| | e78b36a9f7 | | |
| | 144ede031e | | |
| | 8ca37bba33 | | |
| | a608340c89 | | |
| | 7fecebf7db | | |
| | b915d74127 | | |
| | 6ec347bd41 | | |
| | e54843acc9 | | |
| | 0960518088 | | |
| | 21de74fac4 | | |
| | 8ce9b6c51e | | |
| | b64ade586d | | |
| | 3c44a74ba5 | | |
| | 24d0901d8e | | |
| | b1b5f70ea6 | | |
| | 6392098961 | | |
| | 2c39aec22d | | |
| | d066bc6d19 | | |
| | e487bcd0f7 | | |
| | e0f8274f49 | | |
| | 69e3513e90 | | |
| | 7e706f02cb | | |
| | 41dad2013a | | |
| | 3f554d6824 | | |
| | 202c5a48c6 | | |
| | 2d71f6f4b8 | | |
| | 0420874f56 | | |
| | f222b871e9 | | |
| | 8b8d589033 | | |
| | f4c895257a | | |
| | 10af5a26f2 | | |
| | 1088adeb0a | | |
| | ad49380cd1 | | |
| | b2fe24c401 | | |
| | b128db1d58 | | |
| | f7f0630d97 | | |
| | 5075e9c899 | | |
| | 3c1549cf5c | | |
| | 9faa53ceb1 | | |
| | 32672cfeda | | |
| | b5266f89ad | | |
| | 7a3b467ce0 | | |
| | bdfdf854fc | | |
| | 1c38cce16d | | |
| | 4cdca45228 | | |
| | bfed08673a | | |
| | c1aa2b82eb | | |
| | 0a09f84b07 | | |
| | b7938d9ca9 | | |
| | 977e348a35 | | |
| | 864f2270c3 | | |
| | 8b44d83859 | | |
| | 0b6315de71 | | |
| | 578e682562 | | |
| | 92b49e45bb | | |
| | b05b8ef677 | | |
| | 382e2139bd | | |
| | d7ebe3f048 | | |
| | 5c2bdf626b | | |
| | 390a1c9fbb | | |
| | c46d9b8768 | | |
| | ef8d9843dd | | |
| | dc2e1a42bc | | |
| | 2a3909da94 | | |
| | e0dddbd38e | | |
| | 231b7a5000 | | |
| | b7773c9962 | | |
| | 11c501fc80 | | |
| | 7be5743011 | | |
| | c48e648cbb | | |
| | 29b4ddcc7f | | |
| | 7ee13879e3 | | |
| | ced297ed21 | | |
| | 3e813ead1f | | |
| | 820ec08e9a | | |
| | 4dd289b337 | | |
| | b60b1e359e | | |
| | 208286e97a | | |
| | f7b64304ae | | |
| | 834751e877 | | |
| | e7a10d310f | | |
| | 2ce07a4730 | | |
| | 45d5ab20ec | | |
| | 343df03a92 | | |
| | b57acb7353 | | |
| | 7bf7c16a5d | | |
| | 56340c24c8 | | |
| | afe9756667 | | |
| | fcea65770f | | |
| | 16664da5b6 | | |
| | c104807201 | | |
| | 990ce9a1da | | |
| | 18095ecc44 | | |
| | fe19f11abf | | |
| | c2f074dc2f | | |
| | e02a557454 | | |
| | fca60862e2 | | |
| | 94c186bb4c | | |
| | a22c8cb3a1 | | |
| | 781e8521d5 | | |
| | d114d0ba95 | | |
| | cc8b7a74da | | |
| | 388554448a | | |
| | cadc0839a6 | | |
| | d5160648d0 | | |
| | 6d0ea42a94 | | |
| | 2c1100509f | | |
| | c34b359c36 | | |
| | 77d135967f | | |
| | ebf26687cb | | |
| | 1c8991a3df | | |
| | 3d52656176 | | |
| | a2777decd4 | | |
| | d219167849 | | |
| | 090db1ab3a | | |
| | 468253aa14 | | |
| | 3ee9a21647 | | |
| | 0d823901ef | | |
| | 7ee55489bb | | |
| | 163ece9aee | | |
| | 7b2e6deaf1 | | |
| | 63f94579c5 | | |
| | 3dfff278aa | | |
| | aa7d945b23 | | |
| | 88db094cf2 | | |
| | 50a0691514 | | |
| | a255624984 | | |
| | 2630fe3608 | | |
| | dee6f86d5e | | |
| | 6ca6cf713c | | |
| | 3f7d5b4e0f | | |
| | 91596d9527 | | |
| | d669f0855d | | |
| | b2d5b53b5f | | |
| | ddc148b70b | | |
| | c2d43f007b | | |
| | 7703bf2ca1 | | |
| | b5e1ba34b3 | | |
| | 23fdf0156f | | |
| | cdbf40c9b2 | | |
| | 46c9dcb113 | | |
| | 6df79045fa | | |
| | d776e0a0a9 | | |
| | 94ec3da7b5 | | |
| | f44496a579 | | |
| | 99fe95ab03 | | |
| | 95ecb1a0c1 | | |
| | bd15874cf6 | | |
| | 30ab81b6bb | | |
| | 78195491bc | | |
| | 58aa159a50 | | |
| | d8f7c19030 | | |
| | c63390f6e1 | | |
| | cbd451c610 | | |
| | b0f91f2e75 | | |
| | 3ac68cde66 | | |
| | a69b1cd598 | | |
| | 65a76a086b | | |
| | 07381e5a26 | | |
| | 6bb378a101 | | |
| | 7df67d077a | | |
| | b761807219 | | |
| | fb1b03960e | | |
| | 74bfb5e1f9 | | |
| | bc1bce18b0 | | |
| | 942ecbbde4 | | |
| | 79db0e9e93 | | |
| | 0c17f8604f | | |
| | 054edc4077 | | |
| | 5a9993772d | | |
| | f2cd9e9ae2 | | |
| | 9f86cfa471 | | |
| | 8c1390166f | | |
| | 1ad98ce999 | | |
| | 5f4a62810e | | |
| | 35b7ae90ae | | |
| | 9ed4d487d2 | | |
| | 69d37217b8 | | |
| | 7afdefb0e5 | | |
| | 24132a7950 | | |
| | dff466244d | | |
| | 45d172d5a8 | | |
| | f5d95ffed5 | | |
| | 6f9c1c6d4e | | |
| | 811c82a677 | | |
| | 4f0e43ec1b | | |
| | 26a7b7b66d | | |
| | 8611ffe32d | | |
| | 3cb6d333f6 | | |
| | 4570702dd0 | | |
| | 1d107f30e5 | | |
| | 79084e9e20 | | |
| | fc9b4539a3 | | |
| | 09ef57718e | | |
| | cab8239ba8 | | |

@@ -159,7 +159,7 @@ groups in `invokeia.yaml`:

|

||||

| `host` | `localhost` | Name or IP address of the network interface that the web server will listen on |

|

||||

| `port` | `9090` | Network port number that the web server will listen on |

|

||||

| `allow_origins` | `[]` | A list of host names or IP addresses that are allowed to connect to the InvokeAI API in the format `['host1','host2',...]` |

|

||||

| `allow_credentials | `true` | Require credentials for a foreign host to access the InvokeAI API (don't change this) |

|

||||

| `allow_credentials` | `true` | Require credentials for a foreign host to access the InvokeAI API (don't change this) |

|

||||

| `allow_methods` | `*` | List of HTTP methods ("GET", "POST") that the web server is allowed to use when accessing the API |

|

||||

| `allow_headers` | `*` | List of HTTP headers that the web server will accept when accessing the API |

|

||||

|

||||

|

||||

336

docs/features/UTILITIES.md

Normal file


@@ -0,0 +1,336 @@

---
title: Command-line Utilities
---

# :material-file-document: Utilities

# Command-line Utilities

InvokeAI comes with several scripts that are accessible via the
command line. To access these commands, start the "developer's
console" from the launcher (`invoke.bat` menu item [8]). Users who are
familiar with Python can alternatively activate InvokeAI's virtual
environment (typically, but not necessarily `invokeai/.venv`).

In the developer's console, type the script's name to run it. To get a
synopsis of what a utility does and the command-line arguments it
accepts, pass it the `-h` argument, e.g.

```bash
invokeai-merge -h
```

## **invokeai-web**

This script launches the web server and is effectively identical to
selecting option [1] in the launcher. An advantage of launching the
server from the command line is that you can override any
configuration option in `invokeai.yaml` using like-named command-line
arguments. For example, to temporarily change the size of the RAM
cache to 7 GB, you can launch as follows:

```bash
invokeai-web --ram 7
```
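
The precedence described above (a command-line value wins over the value stored in the configuration file) can be illustrated with a small, generic sketch. This is not InvokeAI's actual implementation: the `effective_settings` helper and the starting `ram` value of 6.0 are assumptions made for the example.

```python
import argparse


def effective_settings(file_settings, argv):
    """Overlay command-line values on settings loaded from a config file.

    Hypothetical helper: one like-named flag is created per setting, and a
    flag only overrides the file value when it is actually given.
    """
    parser = argparse.ArgumentParser()
    for name, value in file_settings.items():
        # default=None lets us tell "not passed" apart from a real value.
        parser.add_argument(f"--{name}", type=type(value), default=None)
    cli_values = vars(parser.parse_args(argv))

    merged = dict(file_settings)
    merged.update({k: v for k, v in cli_values.items() if v is not None})
    return merged


# Values as they might appear in a config file (illustrative only).
from_file = {"ram": 6.0, "port": 9090}
print(effective_settings(from_file, ["--ram", "7"]))
```

The override lasts only for that invocation; the file on disk is left untouched, which matches the "temporarily change" wording above.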

## **invokeai-merge**

This is the model merge script, the same as launcher option [4]. Call
it with the `--gui` command-line argument to start the interactive
console-based GUI. Alternatively, you can run it non-interactively
using command-line arguments as illustrated in the example below which
merges models named `stable-diffusion-1.5` and `inkdiffusion` into a new model named
`my_new_model`:

```bash
invokeai-merge --force --base-model sd-1 --models stable-diffusion-1.5 inkdiffusion --merged_model_name my_new_model
```

## **invokeai-ti**

This is the textual inversion training script that is run by launcher
option [3]. Call it with `--gui` to run the interactive console-based
front end. It can also be run non-interactively. It has about a
zillion arguments, but a typical training session can be launched
with:

```bash
invokeai-ti --model stable-diffusion-1.5 \
            --placeholder_token 'jello' \
            --learnable_property object \
            --num_train_epochs 50 \
            --train_data_dir /path/to/training/images \
            --output_dir /path/to/trained/model
```

(Note that \\ is the Linux/Mac long-line continuation character. Use ^
in Windows).
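
If you launch the training script from Python, passing the arguments as a list sidesteps the platform-specific continuation character entirely. The sketch below only assembles the command from the flags shown above; `build_ti_command` is a hypothetical helper name, and the final `subprocess.run` call is left commented out because it assumes `invokeai-ti` is on your `PATH`.

```python
import shlex


def build_ti_command(model, token, train_dir, output_dir, epochs=50):
    """Return the invokeai-ti argument list used in the example above."""
    return [
        "invokeai-ti",
        "--model", model,
        "--placeholder_token", token,
        "--learnable_property", "object",
        "--num_train_epochs", str(epochs),
        "--train_data_dir", train_dir,
        "--output_dir", output_dir,
    ]


cmd = build_ti_command(
    "stable-diffusion-1.5",
    "jello",
    "/path/to/training/images",
    "/path/to/trained/model",
)
# Show the command as a single shell-quoted line for inspection.
print(shlex.join(cmd))

# To actually start training (assumes the virtual environment is active):
# import subprocess
# subprocess.run(cmd, check=True)
```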

## **invokeai-install**

This is the console-based model install script that is run by launcher
option [5]. If called without arguments, it will launch the
interactive console-based interface. It can also be used
non-interactively to list, add and remove models as shown by these
examples:

* This will download and install three models from CivitAI, HuggingFace,
  and local disk:

  ```bash
  invokeai-install --add https://civitai.com/api/download/models/161302 ^
                   gsdf/Counterfeit-V3.0 ^
                   D:\Models\merge_model_two.safetensors
  ```
  (Note that ^ is the Windows long-line continuation character. Use \\ on
  Linux/Mac).

* This will list installed models of type `main`:

  ```bash
  invokeai-model-install --list-models main
  ```

* This will delete the models named `voxel-ish` and `realisticVision`:

  ```bash
  invokeai-model-install --delete voxel-ish realisticVision
  ```

## **invokeai-configure**

This is the console-based configure script that ran when InvokeAI was
first installed. You can run it again at any time to change the
configuration or repair a broken install.

Called without any arguments, `invokeai-configure` enters interactive
mode with two screens. The first screen is a form that provides access
to most of InvokeAI's configuration options. The second screen lets
you download, add, and delete models interactively. When you exit the
second screen, the script will add any missing "support models"
needed for core functionality, and any selected "sd weights" which are
the model checkpoint/diffusers files.

This behavior can be changed via a series of command-line
arguments. Here are some of the useful ones:

* `invokeai-configure --skip-sd-weights --skip-support-models`
  This will run just the configuration part of the utility, skipping
  downloading of support models and stable diffusion weights.

* `invokeai-configure --yes`
  This will run the configure script non-interactively. It will set the
  configuration options to their default values, install/repair support
  models, and download the "recommended" set of SD models.

* `invokeai-configure --yes --default_only`
  This will run the configure script non-interactively. In contrast to
  the previous command, it will only download the default SD model,
  Stable Diffusion v1.5.

* `invokeai-configure --yes --default_only --skip-sd-weights`
  This is similar to the previous command, but will not download any
  SD models at all. It is usually used to repair a broken install.

By default, `invokeai-configure` runs on the currently active InvokeAI
root folder. To run it against a different root, pass it the
`--root </path/to/root>` argument.

Lastly, you can use `invokeai-configure` to create a working root
directory entirely from scratch. Assuming you wish to make a root directory
named `InvokeAI-New`, run this command:

```bash
invokeai-configure --root InvokeAI-New --yes --default_only
```
This will create a minimally functional root directory. You can now
launch the web server against it with `invokeai-web --root InvokeAI-New`.

## **invokeai-update**

This is the interactive console-based script that is run by launcher
menu item [9] to update to a new version of InvokeAI. It takes no
command-line arguments.

## **invokeai-metadata**

This is a script which takes a list of InvokeAI-generated images and
outputs their metadata in the same JSON format that you get from the
`</>` button in the Web GUI. For example:

```bash
$ invokeai-metadata ffe2a115-b492-493c-afff-7679aa034b50.png
ffe2a115-b492-493c-afff-7679aa034b50.png:
{
    "app_version": "3.1.0",
    "cfg_scale": 8.0,
    "clip_skip": 0,
    "controlnets": [],
    "generation_mode": "sdxl_txt2img",
    "height": 1024,
    "loras": [],
    "model": {
        "base_model": "sdxl",
        "model_name": "stable-diffusion-xl-base-1.0",
        "model_type": "main"
    },
    "negative_prompt": "",
    "negative_style_prompt": "",
    "positive_prompt": "military grade sushi dinner for shock troopers",
    "positive_style_prompt": "",
    "rand_device": "cpu",
    "refiner_cfg_scale": 7.5,
    "refiner_model": {
        "base_model": "sdxl-refiner",
        "model_name": "sd_xl_refiner_1.0",
        "model_type": "main"
    },
    "refiner_negative_aesthetic_score": 2.5,
    "refiner_positive_aesthetic_score": 6.0,
    "refiner_scheduler": "euler",
    "refiner_start": 0.8,
    "refiner_steps": 20,
    "scheduler": "euler",
    "seed": 387129902,
    "steps": 25,
    "width": 1024
}
```

You may list multiple files on the command line.
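
Because the output is plain JSON, it is easy to post-process. The following sketch pulls a few fields out of a metadata object shaped like the one above; it assumes you have already isolated the JSON text (without the leading `filename:` line), and `summarize` is a hypothetical helper, not part of InvokeAI.

```python
import json

# A trimmed copy of the example output above, JSON part only.
SAMPLE = """
{
    "app_version": "3.1.0",
    "height": 1024,
    "model": {
        "base_model": "sdxl",
        "model_name": "stable-diffusion-xl-base-1.0",
        "model_type": "main"
    },
    "scheduler": "euler",
    "seed": 387129902,
    "steps": 25,
    "width": 1024
}
"""


def summarize(metadata_json):
    """Reduce one metadata object to the fields needed to reproduce an image."""
    meta = json.loads(metadata_json)
    return {
        "model": meta["model"]["model_name"],
        "size": f'{meta["width"]}x{meta["height"]}',
        "seed": meta["seed"],
        "steps": meta["steps"],
        "scheduler": meta["scheduler"],
    }


print(summarize(SAMPLE))
```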

## **invokeai-import-images**

InvokeAI uses a database to store information about images it
generated, and just copying the image files from one InvokeAI root
directory to another does not automatically import those images into
the destination's gallery. This script allows you to bulk import
images generated by one instance of InvokeAI into a gallery maintained
by another. It also works on images generated by older versions of
InvokeAI, going way back to version 1.

This script has an interactive mode only. The following example shows
it in action:

```bash
$ invokeai-import-images
===============================================================================
This script will import images generated by earlier versions of
InvokeAI into the currently installed root directory:
/home/XXXX/invokeai-main
If this is not what you want to do, type ctrl-C now to cancel.
===============================================================================
= Configuration & Settings
Found invokeai.yaml file at /home/XXXX/invokeai-main/invokeai.yaml:
Database : /home/XXXX/invokeai-main/databases/invokeai.db
Outputs : /home/XXXX/invokeai-main/outputs/images

Use these paths for import (yes) or choose different ones (no) [Yn]:
Inputs: Specify absolute path containing InvokeAI .png images to import: /home/XXXX/invokeai-2.3/outputs/images/
Include files from subfolders recursively [yN]?

Options for board selection for imported images:
1) Select an existing board name. (found 4)
2) Specify a board name to create/add to.
3) Create/add to board named 'IMPORT'.
4) Create/add to board named 'IMPORT' with the current datetime string appended (.e.g IMPORT_20230919T203519Z).
5) Create/add to board named 'IMPORT' with a the original file app_version appended (.e.g IMPORT_2.2.5).
Specify desired board option: 3

===============================================================================
= Import Settings Confirmation

Database File Path : /home/XXXX/invokeai-main/databases/invokeai.db
Outputs/Images Directory : /home/XXXX/invokeai-main/outputs/images
Import Image Source Directory : /home/XXXX/invokeai-2.3/outputs/images/
Recurse Source SubDirectories : No
Count of .png file(s) found : 5785
Board name option specified : IMPORT
Database backup will be taken at : /home/XXXX/invokeai-main/databases/backup

Notes about the import process:
- Source image files will not be modified, only copied to the outputs directory.
- If the same file name already exists in the destination, the file will be skipped.
- If the same file name already has a record in the database, the file will be skipped.
- Invoke AI metadata tags will be updated/written into the imported copy only.
- On the imported copy, only Invoke AI known tags (latest and legacy) will be retained (dream, sd-metadata, invokeai, invokeai_metadata)
- A property 'imported_app_version' will be added to metadata that can be viewed in the UI's metadata viewer.
- The new 3.x InvokeAI outputs folder structure is flat so recursively found source imges will all be placed into the single outputs/images folder.

Do you wish to continue with the import [Yn] ?

Making DB Backup at /home/lstein/invokeai-main/databases/backup/backup-20230919T203519Z-invokeai.db...Done!

===============================================================================
Importing /home/XXXX/invokeai-2.3/outputs/images/17d09907-297d-4db3-a18a-60b337feac66.png
... (5785 more lines) ...
===============================================================================
= Import Complete - Elpased Time: 0.28 second(s)

Source File(s) : 5785
Total Imported : 5783
Skipped b/c file already exists on disk : 1
Skipped b/c file already exists in db : 0
Errors during import : 1
```
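
The skip rules listed in the import notes, and the timestamped board name from option 4, can be sketched as follows. This is an illustration of the stated rules only, not the script's code: `plan_import` and `timestamped_board` are hypothetical names, and checking the disk before the database is an assumption taken from the order of the summary counters.

```python
from datetime import datetime, timezone


def plan_import(source_names, on_disk, in_db):
    """Split source file names into import / skip buckets per the notes above."""
    plan = {"import": [], "skip_on_disk": [], "skip_in_db": []}
    for name in source_names:
        if name in on_disk:
            # Same file name already exists in the destination outputs folder.
            plan["skip_on_disk"].append(name)
        elif name in in_db:
            # Same file name already has a database record.
            plan["skip_in_db"].append(name)
        else:
            plan["import"].append(name)
    return plan


def timestamped_board(when):
    """Build a board name in the IMPORT_<UTC datetime> form shown in option 4."""
    return "IMPORT_" + when.astimezone(timezone.utc).strftime("%Y%m%dT%H%M%SZ")


plan = plan_import(["a.png", "b.png", "c.png"], on_disk={"b.png"}, in_db={"c.png"})
print(plan)
print(timestamped_board(datetime(2023, 9, 19, 20, 35, 19, tzinfo=timezone.utc)))
```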

## **invokeai-db-maintenance**

This script helps maintain the integrity of your InvokeAI database by
finding and fixing three problems that can arise over time:

1. An image was manually deleted from the outputs directory, leaving a
   dangling image record in the InvokeAI database. This will cause a
   black image to appear in the gallery. This is an "orphaned database
   image record." The script can fix this by running a "clean"
   operation on the database, removing the orphaned entries.

2. An image is present in the outputs directory but there is no
   corresponding entry in the database. This can happen when the image
   is added manually to the outputs directory, or if a crash occurred
   after the image was generated but before the database was
   completely updated. The symptom is that the image is present in the
   outputs folder but doesn't appear in the InvokeAI gallery. This is
   called an "orphaned image file." The script can fix this problem by
   running an "archive" operation in which orphaned files are moved
   into a directory named `outputs/images-archive`. If you wish, you
   can then run `invokeai-import-images` to reimport these images back
   into the database.

3. The thumbnail for an image is missing, again causing a black
   gallery thumbnail. This is fixed by running the "thumbnails"
   operation, which simply regenerates and re-registers the missing
   thumbnail.

You can find and fix all three of these problems in a single go by
executing this command:

```bash
invokeai-db-maintenance --operation all
```

Or you can run just the clean and thumbnail operations like this:

```bash
invokeai-db-maintenance --operation clean, thumbnail
```

If called without any arguments, the script will ask you which
operations you wish to perform.
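
The three problem classes boil down to set differences between what the database records and what is on disk. The sketch below shows that logic on plain file names; it is not the maintenance script's code, `find_problems` is a hypothetical helper, and it assumes thumbnails can be matched to images by name.

```python
def find_problems(db_images, files_on_disk, thumbnails):
    """Classify the three inconsistencies described above.

    All three arguments are iterables of image file names.
    """
    db, disk, thumbs = set(db_images), set(files_on_disk), set(thumbnails)
    return {
        # 1. Record exists but the file is gone ("clean" removes the record).
        "orphaned_records": sorted(db - disk),
        # 2. File exists but has no record ("archive" moves the file away).
        "orphaned_files": sorted(disk - db),
        # 3. Image is intact but its thumbnail is missing ("thumbnails").
        "missing_thumbnails": sorted((db & disk) - thumbs),
    }


report = find_problems(
    db_images=["a.png", "b.png", "c.png"],
    files_on_disk=["b.png", "c.png", "d.png"],
    thumbnails=["b.png"],
)
print(report)
```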

## **invokeai-migrate3**

This script will migrate settings and models (but not images!) from an
InvokeAI v2.3 root folder to an InvokeAI 3.X folder. Call it with the
source and destination root folders like this:

```bash
invokeai-migrate3 --from ~/invokeai-2.3 --to invokeai-3.1.1
```

Both directories must previously have been properly created and
initialized by `invokeai-configure`. If you wish to migrate the images
contained in the older root as well, you can use the
`invokeai-import-images` script described earlier.

---

Copyright (c) 2023, Lincoln Stein and the InvokeAI Development Team

@@ -51,6 +51,9 @@ Prevent InvokeAI from displaying unwanted racy images.

|

||||

### * [Controlling Logging](LOGGING.md)

|

||||

Control how InvokeAI logs status messages.

|

||||

|

||||

### * [Command-line Utilities](UTILITIES.md)

|

||||

A list of the command-line utilities available with InvokeAI.

|

||||

|

||||

<!-- OUT OF DATE

|

||||

### * [Miscellaneous](OTHER.md)

|

||||

Run InvokeAI on Google Colab, generate images with repeating patterns,

|

||||

|

||||

@@ -147,6 +147,7 @@ Mac and Linux machines, and runs on GPU cards with as little as 4 GB of RAM.

|

||||

|

||||

### InvokeAI Configuration

|

||||

- [Guide to InvokeAI Runtime Settings](features/CONFIGURATION.md)

|

||||

- [Database Maintenance and other Command Line Utilities](features/UTILITIES.md)

|

||||

|

||||

## :octicons-log-16: Important Changes Since Version 2.3

|

||||

|

||||

|

||||

@@ -296,8 +296,18 @@ code for InvokeAI. For this to work, you will need to install the

|

||||

on your system, please see the [Git Installation

|

||||

Guide](https://github.com/git-guides/install-git)

|

||||

|

||||

You will also need to install the [frontend development toolchain](https://github.com/invoke-ai/InvokeAI/blob/main/docs/contributing/contribution_guides/contributingToFrontend.md).

|

||||

|

||||

If you have a "normal" installation, you should create a totally separate virtual environment for the git-based installation, else the two may interfere.

|

||||

|

||||

> **Why do I need the frontend toolchain**?

|

||||

>

|

||||

> The InvokeAI project uses trunk-based development. That means our `main` branch is the development branch, and releases are tags on that branch. Because development is very active, we don't keep an updated build of the UI in `main` - we only build it for production releases.

|

||||

>

|

||||

> That means that between releases, to have a functioning application when running directly from the repo, you will need to run the UI in dev mode or build it regularly (any time the UI code changes).

|

||||

|

||||

1. Create a fork of the InvokeAI repository through the GitHub UI or [this link](https://github.com/invoke-ai/InvokeAI/fork)

|

||||

1. From the command line, run this command:

|

||||

2. From the command line, run this command:

|

||||

```bash

|

||||

git clone https://github.com/<your_github_username>/InvokeAI.git

|

||||

```

|

||||

@@ -305,10 +315,10 @@ Guide](https://github.com/git-guides/install-git)

|

||||

This will create a directory named `InvokeAI` and populate it with the

|

||||

full source code from your fork of the InvokeAI repository.

|

||||

|

||||

2. Activate the InvokeAI virtual environment as per step (4) of the manual

|

||||

3. Activate the InvokeAI virtual environment as per step (4) of the manual

|

||||

installation protocol (important!)

|

||||

|

||||

3. Enter the InvokeAI repository directory and run one of these

|

||||

4. Enter the InvokeAI repository directory and run one of these

|

||||

commands, based on your GPU:

|

||||

|

||||

=== "CUDA (NVidia)"

|

||||

@@ -334,11 +344,15 @@ installation protocol (important!)

|

||||

Be sure to pass `-e` (for an editable install) and don't forget the

|

||||

dot ("."). It is part of the command.

|

||||

|

||||

You can now run `invokeai` and its related commands. The code will be

|

||||

5. Install the [frontend toolchain](https://github.com/invoke-ai/InvokeAI/blob/main/docs/contributing/contribution_guides/contributingToFrontend.md) and do a production build of the UI as described.

|

||||

|

||||

6. You can now run `invokeai` and its related commands. The code will be

|

||||

read from the repository, so that you can edit the .py source files

|

||||

and watch the code's behavior change.

|

||||

|

||||

4. If you wish to contribute to the InvokeAI project, you are

|

||||

When you pull in new changes to the repo, be sure to re-build the UI.

|

||||

|

||||

7. If you wish to contribute to the InvokeAI project, you are

|

||||

encouraged to establish a GitHub account and "fork"

|

||||

https://github.com/invoke-ai/InvokeAI into your own copy of the

|

||||

repository. You can then use GitHub functions to create and submit

|

||||

|

||||

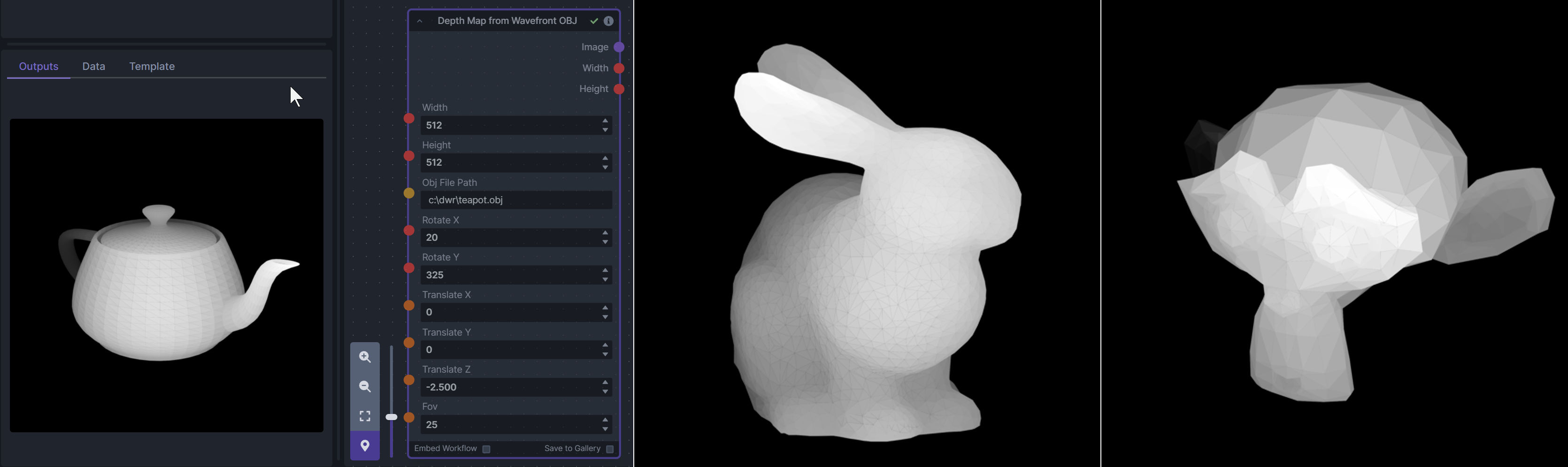

@@ -121,18 +121,6 @@ To be imported, an .obj must use triangulated meshes, so make sure to enable tha

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

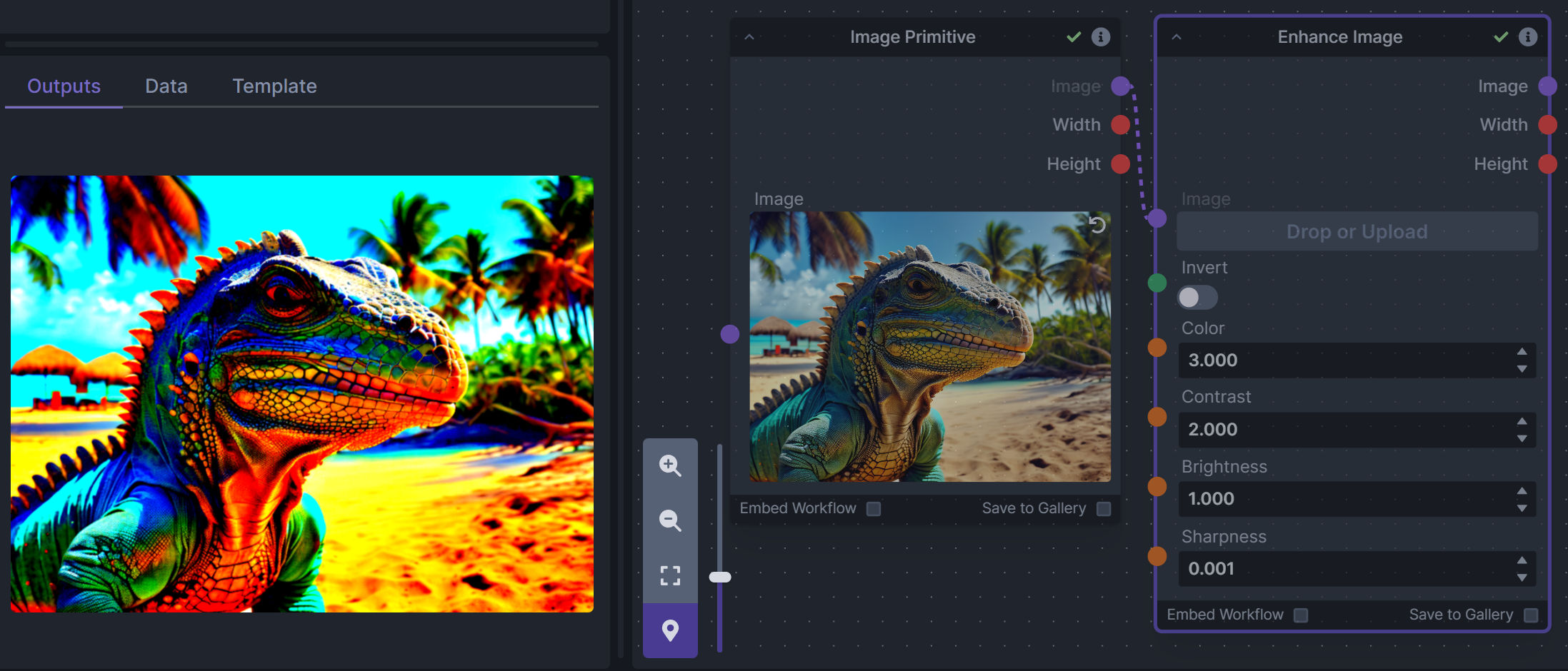

### Enhance Image (simple adjustments)

|

||||

|

||||

**Description:** Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

|

||||

Color inversion is toggled with a simple switch, while each of the four enhancer modes are activated by entering a value other than 1 in each corresponding input field. Values less than 1 will reduce the corresponding property, while values greater than 1 will enhance it.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/image-enhance-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Generative Grammar-Based Prompt Nodes

|

||||

|

||||

@@ -153,16 +141,26 @@ This includes 3 Nodes:

|

||||

|

||||

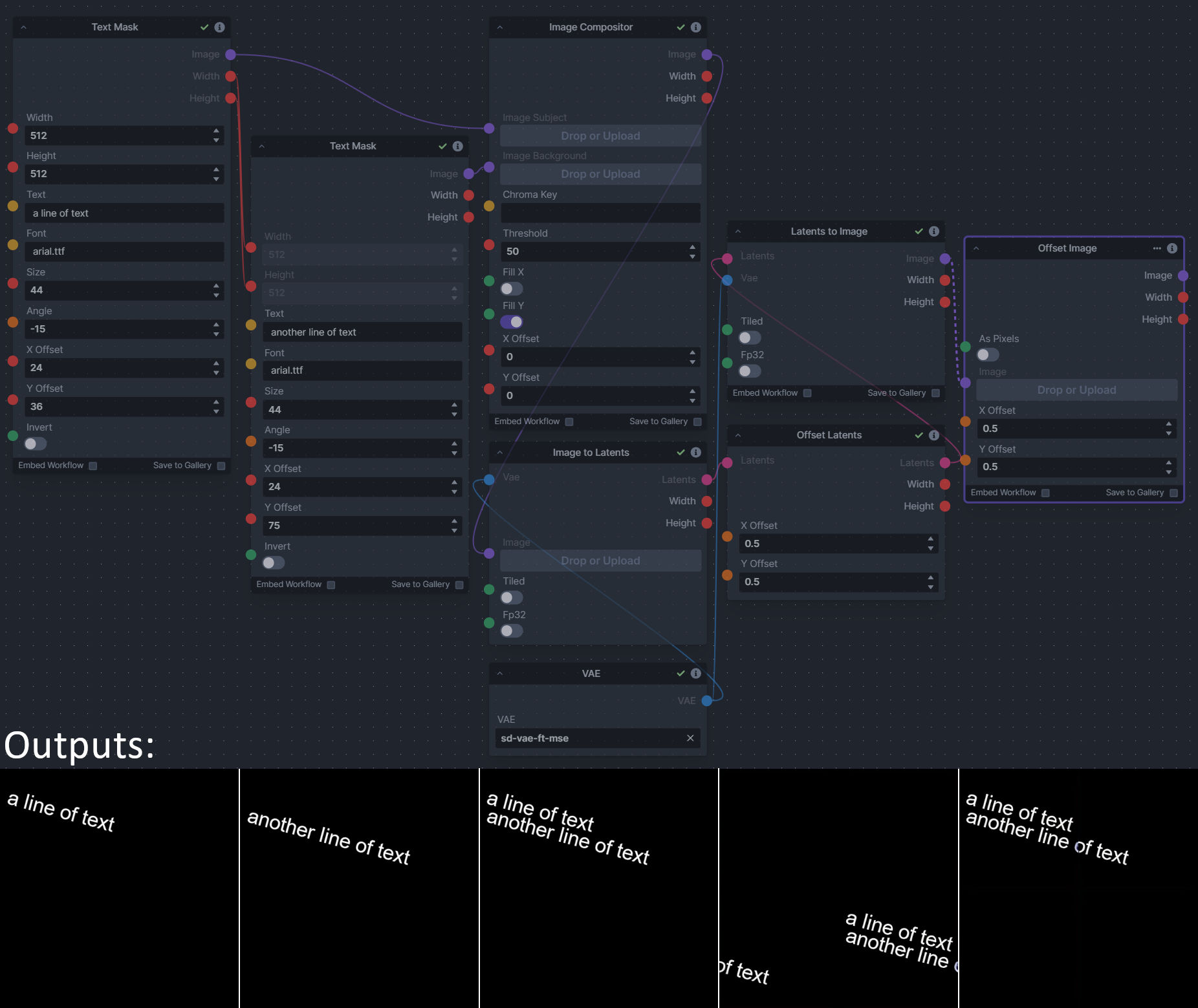

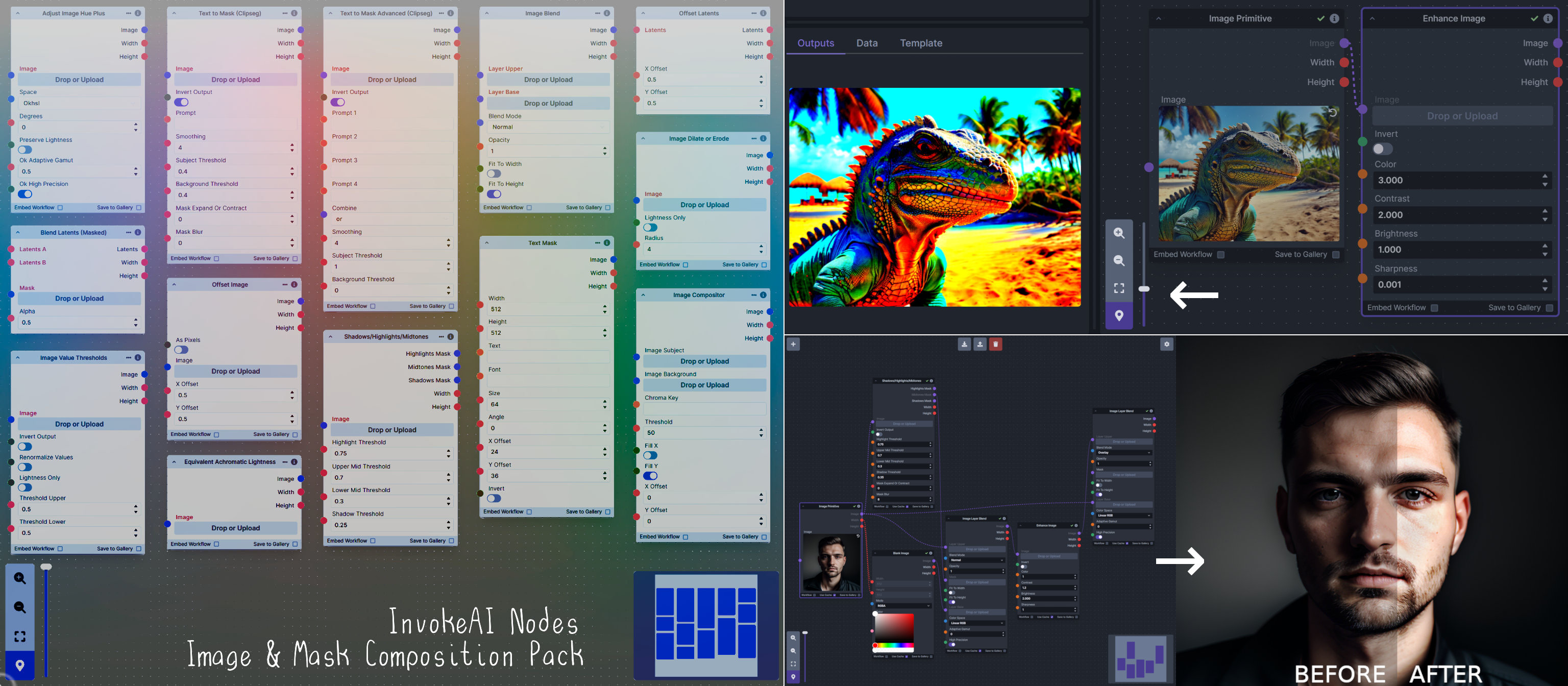

**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so these can be used in conjunction with the Seamless node to progressively generate centered on different parts of the seamless tiling.

|

||||

|

||||

This includes 4 Nodes:

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

This includes 14 Nodes:

|

||||

- *Adjust Image Hue Plus* - Rotate the hue of an image in one of several different color spaces.
- *Blend Latents/Noise (Masked)* - Use a mask to blend part of one latents tensor [including Noise outputs] into another. Can be used to "renoise" sections during a multi-stage [masked] denoising process.
- *Enhance Image* - Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.
- *Equivalent Achromatic Lightness* - Calculates image lightness accounting for the Helmholtz-Kohlrausch effect, based on a method described by High, Green, and Nussbaum (2023).
- *Text to Mask (Clipseg)* - Input a prompt and an image to generate a mask representing areas of the image matched by the prompt.
- *Text to Mask Advanced (Clipseg)* - Output up to four prompt masks combined with logical "and", logical "or", or as separate channels of an RGBA image.
- *Image Layer Blend* - Perform a layered blend of two images using alpha compositing. Opacity of top layer is selectable, with optional mask and several different blend modes/color spaces.
- *Image Compositor* - Take a subject from an image with a flat backdrop and layer it on another image using a chroma key or flood select background removal.
- *Image Dilate or Erode* - Dilate (expand) or erode (contract) a mask (or any image!). This is equivalent to an expand/contract operation.
- *Image Value Thresholds* - Clip an image to pure black/white beyond specified thresholds.
- *Offset Latents* - Offset a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.
- *Offset Image* - Offset an image in the vertical and/or horizontal dimensions, wrapping it around.
- *Shadows/Highlights/Midtones* - Extract three masks (with adjustable hard or soft thresholds) representing shadows, midtones, and highlights regions of an image.
- *Text Mask (simple 2D)* - Create and position a white on black (or black on white) line of text using any font locally available to Invoke.
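
The wrap-around behaviour described for the two offset nodes can be illustrated with a small sketch. This is not the node pack's code: `offset_wrap` is a hypothetical helper that works on a plain list of rows, whereas a real node would more likely roll an image array or latents tensor. It only shows what "offset, wrapping around" means.

```python
def offset_wrap(grid, dx=0, dy=0):
    """Shift a 2D grid (list of rows) right by dx and down by dy, wrapping around.

    Hypothetical helper for illustration only.
    """
    height = len(grid)
    width = len(grid[0]) if height else 0
    if height == 0 or width == 0:
        return [list(row) for row in grid]
    # Each output cell reads from the source cell that lands on it after the shift;
    # the modulo makes values leaving one edge re-enter at the opposite edge.
    return [
        [grid[(y - dy) % height][(x - dx) % width] for x in range(width)]
        for y in range(height)
    ]


image = [
    [1, 2, 3],
    [4, 5, 6],
]
shifted_right = offset_wrap(image, dx=1)
shifted_down = offset_wrap(image, dy=1)
```

Because the shift wraps, offsetting by the full width or height returns the original grid, which is why repeated offsets pair naturally with seamless tiling.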

**Node Link:** https://github.com/dwringer/composition-nodes

**Example Usage:**

**Nodes and Output Examples:**

--------------------------------

### Size Stepper Nodes

@@ -196,6 +194,40 @@ Results after using the depth controlnet

--------------------------------

### Prompt Tools

**Description:** A set of InvokeAI nodes that add general prompt manipulation tools. These were written to accompany the PromptsFromFile node and other prompt generation nodes.

1. PromptJoin - Joins two prompts into one.
2. PromptReplace - Performs a search and replace on a prompt, with the option of using regex.
3. PromptSplitNeg - Splits a prompt into positive and negative using the old V2 method of [] for negative.
4. PromptToFile - Saves a prompt or collection of prompts to a file, one per line. There is an append/overwrite option.
5. PTFieldsCollect - Converts image generation fields into a JSON-format string that can be passed to Prompt to file.
6. PTFieldsExpand - Takes a JSON string and converts it to individual generation parameters. This can be fed from the Prompts to file node.
7. PromptJoinThree - Joins three prompts together.
8. PromptStrength - Takes a string and a float and outputs another string in the format of (string)strength, like the weighted format of compel.
9. PromptStrengthCombine - Takes a collection of prompt strength strings and outputs a string in the .and() or .blend() format that can be fed into a proper prompt node.
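
The string formats mentioned in items 3, 8, and 9 can be sketched in plain Python. This is only an illustration of the formats, not the nodes' implementation: the helper names `split_neg`, `prompt_strength`, and `strength_combine` are hypothetical, and the exact separators and quoting the real nodes emit are assumptions.

```python
import re

_NEGATIVE = re.compile(r"\[([^\[\]]*)\]")            # text inside [...] is negative
_WEIGHTED = re.compile(r"\((.*)\)([-+]?\d*\.?\d+)")  # "(text)weight"


def split_neg(prompt):
    """Split a prompt into (positive, negative) using [] for negative parts."""
    negatives = [m.strip() for m in _NEGATIVE.findall(prompt) if m.strip()]
    positive = re.sub(r"\s+", " ", _NEGATIVE.sub(" ", prompt)).strip()
    # Joining negatives with ", " is an assumption made for this sketch.
    return positive, ", ".join(negatives)


def prompt_strength(text, strength):
    """Format text and a float as "(text)strength"."""
    return f"({text}){strength}"


def strength_combine(weighted, mode="and"):
    """Combine "(text)weight" strings into an .and() or .blend() expression."""
    if mode not in ("and", "blend"):
        raise ValueError("mode must be 'and' or 'blend'")
    texts, weights = [], []
    for item in weighted:
        match = _WEIGHTED.fullmatch(item.strip())
        if match is None:
            raise ValueError(f"not a weighted prompt: {item!r}")
        texts.append(match.group(1))
        weights.append(match.group(2))
    quoted = ", ".join(f'"{t}"' for t in texts)
    return f"({quoted}).{mode}({', '.join(weights)})"


positive, negative = split_neg("a castle [blurry] at dusk [low quality]")
weighted = [prompt_strength("sunset", 1.2), prompt_strength("ocean", 0.8)]
combined = strength_combine(weighted, mode="blend")
```

Keeping the weighted strings as an intermediate format means the combine step only needs to parse text, so the same strings could in principle be stored to a file and recombined later.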

See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/main/README.md

**Node Link:** https://github.com/skunkworxdark/Prompt-tools-nodes

--------------------------------

### XY Image to Grid and Images to Grids nodes

**Description:** Image to grid nodes and supporting tools.

1. "Images To Grids" node - Takes a collection of images and creates one or more grids of images. If there are more images than fit in a single grid, multiple grids are created until it runs out of images.
2. "XYImage To Grid" node - Converts a collection of XYImages into a labeled grid of images. The XYImages collection has to be built using the supporting nodes. See example node setups for more details.
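
The "multiple grids until it runs out of images" behaviour amounts to chunking the collection by grid capacity. A minimal sketch, assuming a fixed number of columns and rows per grid; `images_to_grids` is a hypothetical helper and the strings stand in for real images.

```python
def images_to_grids(images, columns, rows):
    """Split a flat list of images into grids of at most columns x rows cells.

    Hypothetical helper: each grid is returned as a list of rows.
    """
    per_grid = columns * rows
    if columns <= 0 or rows <= 0:
        raise ValueError("columns and rows must be positive")
    grids = []
    for start in range(0, len(images), per_grid):
        chunk = images[start:start + per_grid]
        # Lay the chunk out row by row; the last row of the last grid may be short.
        grid = [chunk[i:i + columns] for i in range(0, len(chunk), columns)]
        grids.append(grid)
    return grids


grids = images_to_grids(list("ABCDEFG"), columns=2, rows=2)
```

With seven images and a 2x2 grid this yields two grids, the second one only partly filled.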

See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/README.md

**Node Link:** https://github.com/skunkworxdark/XYGrid_nodes

--------------------------------

### Example Node Template

**Description:** This node allows you to do super cool things with InvokeAI.

@@ -332,6 +332,7 @@ class InvokeAiInstance:

|

||||

Configure the InvokeAI runtime directory

|

||||

"""

|

||||

|

||||

auto_install = False

|

||||

# set sys.argv to a consistent state

|

||||

new_argv = [sys.argv[0]]

|

||||

for i in range(1, len(sys.argv)):

|

||||

@@ -340,13 +341,17 @@ class InvokeAiInstance:

|

||||

new_argv.append(el)

|

||||

new_argv.append(sys.argv[i + 1])

|

||||

elif el in ["-y", "--yes", "--yes-to-all"]:

|

||||

new_argv.append(el)

|

||||

auto_install = True

|

||||

sys.argv = new_argv

|

||||

|

||||

import messages

|

||||

import requests # to catch download exceptions

|

||||

from messages import introduction

|

||||

|

||||

introduction()

|

||||

auto_install = auto_install or messages.user_wants_auto_configuration()

|

||||

if auto_install:

|

||||

sys.argv.append("--yes")

|

||||

else:

|

||||

messages.introduction()

|

||||

|

||||

from invokeai.frontend.install.invokeai_configure import invokeai_configure

|

||||

|

||||

|

||||

@@ -7,7 +7,7 @@ import os

|

||||

import platform

|

||||

from pathlib import Path

|

||||

|

||||

from prompt_toolkit import prompt

|

||||

from prompt_toolkit import HTML, prompt

|

||||

from prompt_toolkit.completion import PathCompleter

|

||||

from prompt_toolkit.validation import Validator

|

||||

from rich import box, print

|

||||

@@ -65,17 +65,50 @@ def confirm_install(dest: Path) -> bool:

|

||||

if dest.exists():

|

||||

print(f":exclamation: Directory {dest} already exists :exclamation:")

|

||||

dest_confirmed = Confirm.ask(

|

||||

":stop_sign: Are you sure you want to (re)install in this location?",

|

||||

":stop_sign: (re)install in this location?",

|

||||

default=False,

|

||||

)

|

||||

else:

|

||||

print(f"InvokeAI will be installed in {dest}")

|

||||

dest_confirmed = not Confirm.ask("Would you like to pick a different location?", default=False)

|

||||

dest_confirmed = Confirm.ask("Use this location?", default=True)

|

||||

console.line()

|

||||

|

||||

return dest_confirmed

|

||||

|

||||

|

||||

def user_wants_auto_configuration() -> bool:

|

||||

"""Prompt the user to choose between manual and auto configuration."""

|

||||

console.rule("InvokeAI Configuration Section")

|

||||

console.print(

|

||||

Panel(

|

||||

Group(

|

||||

"\n".join(

|

||||

[

|

||||

"Libraries are installed and InvokeAI will now set up its root directory and configuration. Choose between:",

|

||||

"",

|

||||

" * AUTOMATIC configuration: install reasonable defaults and a minimal set of starter models.",

|

||||

" * MANUAL configuration: manually inspect and adjust configuration options and pick from a larger set of starter models.",

|

||||

"",

|

||||

"Later you can fine tune your configuration by selecting option [6] 'Change InvokeAI startup options' from the invoke.bat/invoke.sh launcher script.",

|

||||

]

|

||||

),

|

||||

),

|

||||

box=box.MINIMAL,

|

||||

padding=(1, 1),

|

||||

)

|

||||

)

|

||||

choice = (

|

||||

prompt(

|

||||

HTML("Choose <b>&lt;a&gt;</b>utomatic or <b>&lt;m&gt;</b>anual configuration [a/m] (a): "),

|

||||

validator=Validator.from_callable(

|

||||

lambda n: n == "" or n.startswith(("a", "A", "m", "M")), error_message="Please select 'a' or 'm'"

|

||||

),

|

||||

)

|

||||

or "a"

|

||||

)

|

||||

return choice.lower().startswith("a")

|

||||

|

||||

|

||||

def dest_path(dest=None) -> Path:

|

||||

"""

|

||||

Prompt the user for the destination path and create the path

|

||||

|

||||
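
The decision logic in `user_wants_auto_configuration()` can be exercised without a terminal. The sketch below mirrors the validator and the `or "a"` default from the hunk above, but takes the typed text as an argument instead of calling `prompt_toolkit`; the function names `is_valid_choice` and `wants_auto` are hypothetical.

```python
def is_valid_choice(text):
    """Accept an empty answer or anything starting with a/A/m/M."""
    return text == "" or text.startswith(("a", "A", "m", "M"))


def wants_auto(text):
    """Return True for automatic configuration; an empty answer defaults to 'a'."""
    choice = text or "a"
    return choice.lower().startswith("a")


answers = ["", "a", "Automatic", "m", "Manual"]
decisions = {answer: wants_auto(answer) for answer in answers if is_valid_choice(answer)}
```

Separating validation from the decision keeps the default ("a" on an empty answer) in one place, which matches the `(a)` hint shown in the prompt text.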

@@ -17,9 +17,10 @@ echo 6. Change InvokeAI startup options

|

||||

echo 7. Re-run the configure script to fix a broken install or to complete a major upgrade

|

||||

echo 8. Open the developer console

|

||||

echo 9. Update InvokeAI

|

||||

echo 10. Command-line help

|

||||

echo 10. Run the InvokeAI image database maintenance script

|

||||

echo 11. Command-line help

|

||||

echo Q - Quit

|

||||

set /P choice="Please enter 1-10, Q: [1] "

|

||||

set /P choice="Please enter 1-11, Q: [1] "

|

||||

if not defined choice set choice=1

|

||||

IF /I "%choice%" == "1" (

|

||||

echo Starting the InvokeAI browser-based UI..

|

||||

@@ -58,8 +59,11 @@ IF /I "%choice%" == "1" (

|

||||

echo Running invokeai-update...

|

||||

python -m invokeai.frontend.install.invokeai_update

|

||||

) ELSE IF /I "%choice%" == "10" (

|

||||

echo Running the db maintenance script...

|

||||

python .venv\Scripts\invokeai-db-maintenance.exe

|

||||

) ELSE IF /I "%choice%" == "11" (

|

||||

echo Displaying command line help...

|

||||

python .venv\Scripts\invokeai.exe --help %*

|

||||

python .venv\Scripts\invokeai-web.exe --help %*

|

||||

pause

|

||||

exit /b

|

||||

) ELSE IF /I "%choice%" == "q" (

|

||||

|

||||

@@ -97,13 +97,13 @@ do_choice() {

|

||||

;;

|

||||

10)

|

||||

clear

|

||||

printf "Command-line help\n"

|

||||

invokeai --help

|

||||

printf "Running the db maintenance script\n"

|

||||

invokeai-db-maintenance --root ${INVOKEAI_ROOT}

|

||||

;;

|

||||

"HELP 1")

|

||||

11)

|

||||

clear

|

||||

printf "Command-line help\n"

|

||||

invokeai --help

|

||||

invokeai-web --help

|

||||

;;

|

||||

*)

|

||||

clear

|

||||

@@ -125,7 +125,10 @@ do_dialog() {

|

||||

6 "Change InvokeAI startup options"

|

||||

7 "Re-run the configure script to fix a broken install or to complete a major upgrade"

|

||||

8 "Open the developer console"

|

||||

9 "Update InvokeAI")

|

||||

9 "Update InvokeAI"

|

||||

10 "Run the InvokeAI image database maintenance script"

|

||||

11 "Command-line help"

|

||||

)

|

||||

|

||||

choice=$(dialog --clear \

|

||||

--backtitle "\Zb\Zu\Z3InvokeAI" \

|

||||

@@ -157,9 +160,10 @@ do_line_input() {

|

||||

printf "7: Re-run the configure script to fix a broken install\n"

|

||||

printf "8: Open the developer console\n"

|

||||

printf "9: Update InvokeAI\n"

|

||||

printf "10: Command-line help\n"

|

||||

printf "10: Run the InvokeAI image database maintenance script\n"

|

||||

printf "11: Command-line help\n"

|

||||

printf "Q: Quit\n\n"

|

||||

read -p "Please enter 1-10, Q: [1] " yn

|

||||

read -p "Please enter 1-11, Q: [1] " yn

|

||||

choice=${yn:='1'}

|

||||

do_choice $choice

|

||||

clear

|

||||

|

||||

@@ -1,5 +1,6 @@

|

||||

# Copyright (c) 2022 Kyle Schouviller (https://github.com/kyle0654)

|

||||

|

||||

import sqlite3

|

||||

from logging import Logger

|

||||

|

||||

from invokeai.app.services.board_image_record_storage import SqliteBoardImageRecordStorage

|

||||

@@ -9,7 +10,10 @@ from invokeai.app.services.boards import BoardService, BoardServiceDependencies

|

||||

from invokeai.app.services.config import InvokeAIAppConfig

|

||||

from invokeai.app.services.image_record_storage import SqliteImageRecordStorage

|

||||

from invokeai.app.services.images import ImageService, ImageServiceDependencies

|

||||

from invokeai.app.services.invocation_cache.invocation_cache_memory import MemoryInvocationCache

|

||||

from invokeai.app.services.resource_name import SimpleNameService

|

||||

from invokeai.app.services.session_processor.session_processor_default import DefaultSessionProcessor

|

||||

from invokeai.app.services.session_queue.session_queue_sqlite import SqliteSessionQueue

|

||||

from invokeai.app.services.urls import LocalUrlService

|

||||

from invokeai.backend.util.logging import InvokeAILogger

|

||||

from invokeai.version.invokeai_version import __version__

|

||||

@@ -25,6 +29,7 @@ from ..services.latent_storage import DiskLatentsStorage, ForwardCacheLatentsSto

|

||||

from ..services.model_manager_service import ModelManagerService

|

||||

from ..services.processor import DefaultInvocationProcessor

|

||||

from ..services.sqlite import SqliteItemStorage

|

||||

from ..services.thread import lock

|

||||

from .events import FastAPIEventService

|

||||

|

||||

|

||||

@@ -44,7 +49,7 @@ def check_internet() -> bool:

|

||||

return False

|

||||

|

||||

|

||||

logger = InvokeAILogger.getLogger()

|

||||

logger = InvokeAILogger.get_logger()

|

||||

|

||||

|

||||

class ApiDependencies:

|

||||

@@ -63,22 +68,32 @@ class ApiDependencies:

|

||||

output_folder = config.output_path

|

||||

|

||||

# TODO: build a file/path manager?

|

||||

db_path = config.db_path

|

||||

db_path.parent.mkdir(parents=True, exist_ok=True)

|

||||

db_location = str(db_path)

|

||||

if config.use_memory_db:

|

||||

db_location = ":memory:"

|

||||

else:

|

||||

db_path = config.db_path

|

||||

db_path.parent.mkdir(parents=True, exist_ok=True)

|

||||

db_location = str(db_path)

|

||||

|

||||

logger.info(f"Using database at {db_location}")

|

||||

db_conn = sqlite3.connect(db_location, check_same_thread=False) # TODO: figure out a better threading solution

|

||||

|

||||

if config.log_sql:

|

||||

db_conn.set_trace_callback(print)

|

||||

db_conn.execute("PRAGMA foreign_keys = ON;")

|

||||

|

||||

graph_execution_manager = SqliteItemStorage[GraphExecutionState](

|

||||

filename=db_location, table_name="graph_executions"

|

||||

conn=db_conn, table_name="graph_executions", lock=lock

|

||||

)

|

||||

|

||||

urls = LocalUrlService()

|

||||

image_record_storage = SqliteImageRecordStorage(db_location)

|

||||

image_record_storage = SqliteImageRecordStorage(conn=db_conn, lock=lock)

|

||||

image_file_storage = DiskImageFileStorage(f"{output_folder}/images")

|

||||

names = SimpleNameService()

|

||||

latents = ForwardCacheLatentsStorage(DiskLatentsStorage(f"{output_folder}/latents"))

|

||||

|

||||

board_record_storage = SqliteBoardRecordStorage(db_location)

|

||||

board_image_record_storage = SqliteBoardImageRecordStorage(db_location)

|

||||

board_record_storage = SqliteBoardRecordStorage(conn=db_conn, lock=lock)

|

||||

board_image_record_storage = SqliteBoardImageRecordStorage(conn=db_conn, lock=lock)

|

||||

|

||||

boards = BoardService(

|

||||

services=BoardServiceDependencies(

|

||||

@@ -120,18 +135,29 @@ class ApiDependencies:

|

||||

boards=boards,

|

||||

board_images=board_images,

|

||||

queue=MemoryInvocationQueue(),

|

||||

graph_library=SqliteItemStorage[LibraryGraph](filename=db_location, table_name="graphs"),

|

||||

graph_library=SqliteItemStorage[LibraryGraph](conn=db_conn, lock=lock, table_name="graphs"),

|

||||

graph_execution_manager=graph_execution_manager,

|

||||

processor=DefaultInvocationProcessor(),

|

||||

configuration=config,

|

||||

performance_statistics=InvocationStatsService(graph_execution_manager),

|

||||

logger=logger,

|

||||

session_queue=SqliteSessionQueue(conn=db_conn, lock=lock),

|

||||

session_processor=DefaultSessionProcessor(),

|

||||

invocation_cache=MemoryInvocationCache(max_cache_size=config.node_cache_size),

|

||||

)

|

||||

|

||||

create_system_graphs(services.graph_library)

|

||||

|

||||

ApiDependencies.invoker = Invoker(services)

|

||||

|

||||

try:

|

||||

lock.acquire()

|

||||

db_conn.execute("VACUUM;")

|

||||

db_conn.commit()

|

||||

logger.info("Cleaned database")

|

||||

finally:

|

||||

lock.release()

|

||||

|

||||

@staticmethod

|

||||

def shutdown():

|

||||

if ApiDependencies.invoker:

|

||||

|

||||
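
The hunk above replaces per-service database filenames with one shared `db_conn` plus a `lock`. One reason this matters: with SQLite, every connection opened on `":memory:"` gets its own private database, so services can only see each other's tables if they share a connection. The sketch below illustrates the pattern with the standard library only. `ItemStorageSketch` is a hypothetical stand-in for the real storage classes, and treating `lock` as a `threading.Lock` is an assumption.

```python
import sqlite3
import threading

lock = threading.Lock()  # assumption: the shared lock behaves like threading.Lock


class ItemStorageSketch:
    """Hypothetical key/value store that shares one connection with other services."""

    def __init__(self, conn, lock, table_name):
        self._conn = conn
        self._lock = lock
        self._table = table_name  # trusted constant in this sketch, not user input
        with self._lock:
            self._conn.execute(
                f"CREATE TABLE IF NOT EXISTS {self._table} (id TEXT PRIMARY KEY, item TEXT NOT NULL)"
            )
            self._conn.commit()

    def set(self, item_id, item):
        with self._lock:
            self._conn.execute(
                f"INSERT OR REPLACE INTO {self._table} (id, item) VALUES (?, ?)",
                (item_id, item),
            )
            self._conn.commit()

    def get(self, item_id):
        with self._lock:
            row = self._conn.execute(
                f"SELECT item FROM {self._table} WHERE id = ?", (item_id,)
            ).fetchone()
        return row[0] if row else None


# One connection for every service; check_same_thread=False allows use from worker
# threads, so all access is serialized through the lock instead.
db_conn = sqlite3.connect(":memory:", check_same_thread=False)
db_conn.execute("PRAGMA foreign_keys = ON;")

graphs = ItemStorageSketch(db_conn, lock, "graphs")
executions = ItemStorageSketch(db_conn, lock, "graph_executions")
graphs.set("g1", "{}")

# Maintenance statements take the same lock so they never interleave with writes.
with lock:
    db_conn.execute("VACUUM;")
    db_conn.commit()
```

Using `with lock:` is equivalent to the `try`/`acquire`/`finally`/`release` form in the diff, as long as the lock object supports the context manager protocol.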

@@ -7,6 +7,7 @@ from fastapi.routing import APIRouter

|

||||

from pydantic import BaseModel, Field

|

||||

|

||||

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

||||

from invokeai.app.services.invocation_cache.invocation_cache_common import InvocationCacheStatus

|

||||

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

||||

from invokeai.backend.image_util.patchmatch import PatchMatch

|

||||

from invokeai.backend.image_util.safety_checker import SafetyChecker

|

||||

@@ -103,3 +104,43 @@ async def set_log_level(

|

||||

"""Sets the log verbosity level"""

|

||||

ApiDependencies.invoker.services.logger.setLevel(level)

|

||||

return LogLevel(ApiDependencies.invoker.services.logger.level)

|

||||

|

||||

|

||||

@app_router.delete(

|

||||

"/invocation_cache",

|

||||

operation_id="clear_invocation_cache",

|

||||

responses={200: {"description": "The operation was successful"}},

|

||||

)

|

||||

async def clear_invocation_cache() -> None:

|

||||

"""Clears the invocation cache"""

|

||||

ApiDependencies.invoker.services.invocation_cache.clear()

|

||||

|

||||

|

||||

@app_router.put(

|

||||

"/invocation_cache/enable",

|

||||

operation_id="enable_invocation_cache",

|

||||

responses={200: {"description": "The operation was successful"}},

|

||||

)

|

||||

async def enable_invocation_cache() -> None:

|

||||

"""Enables the invocation cache"""

|

||||

ApiDependencies.invoker.services.invocation_cache.enable()

|

||||

|

||||

|

||||

@app_router.put(

|

||||

"/invocation_cache/disable",

|

||||

operation_id="disable_invocation_cache",

|

||||

responses={200: {"description": "The operation was successful"}},

|

||||

)

|

||||

async def disable_invocation_cache() -> None:

|

||||

"""Disables the invocation cache"""

|

||||

ApiDependencies.invoker.services.invocation_cache.disable()

|

||||

|

||||

|

||||

@app_router.get(

|

||||

"/invocation_cache/status",

|

||||

operation_id="get_invocation_cache_status",

|

||||

responses={200: {"model": InvocationCacheStatus}},

|

||||

)

|

||||

async def get_invocation_cache_status() -> InvocationCacheStatus:

|

||||

"""Gets the status of the invocation cache"""

|

||||

return ApiDependencies.invoker.services.invocation_cache.get_status()

|

||||

|

||||
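
The four routes above call `clear()`, `enable()`, `disable()`, and `get_status()` on the invocation cache service. A minimal sketch of an in-memory cache exposing those operations follows. It is not the `MemoryInvocationCache` implementation: the class name, the least-recently-used eviction, and the fields returned by `get_status()` are all assumptions made for illustration.

```python
from collections import OrderedDict


class MemoryCacheSketch:
    """Hypothetical bounded cache with enable/disable/clear/status operations."""

    def __init__(self, max_cache_size=0):
        self._max = max_cache_size
        self._items = OrderedDict()
        self._enabled = max_cache_size > 0  # a size of 0 means caching is off
        self._hits = 0
        self._misses = 0

    def get(self, key):
        if not self._enabled:
            return None
        if key in self._items:
            self._hits += 1
            self._items.move_to_end(key)  # mark as most recently used
            return self._items[key]
        self._misses += 1
        return None

    def save(self, key, value):
        if not self._enabled:
            return
        self._items[key] = value
        self._items.move_to_end(key)
        while len(self._items) > self._max:
            self._items.popitem(last=False)  # evict the least recently used entry

    def clear(self):
        self._items.clear()

    def enable(self):
        self._enabled = self._max > 0

    def disable(self):
        self._enabled = False

    def get_status(self):
        return {
            "enabled": self._enabled,
            "size": len(self._items),
            "max_size": self._max,
            "hits": self._hits,
            "misses": self._misses,
        }


cache = MemoryCacheSketch(max_cache_size=2)
cache.save("a", 1)
cache.save("b", 2)
first = cache.get("a")   # hit; "a" becomes most recently used
cache.save("c", 3)       # exceeds the limit, so "b" is evicted
missing = cache.get("b")
status = cache.get_status()
```

Disabling in this sketch leaves stored entries in place and only bypasses lookups and writes; whether the real service behaves the same way is not stated in the diff.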

@@ -146,7 +146,8 @@ async def update_model(

|

||||

async def import_model(

|

||||

location: str = Body(description="A model path, repo_id or URL to import"),

|

||||

prediction_type: Optional[Literal["v_prediction", "epsilon", "sample"]] = Body(

|

||||

description="Prediction type for SDv2 checkpoint files", default="v_prediction"

|

||||

description="Prediction type for SDv2 checkpoints and rare SDv1 checkpoints",

|

||||

default=None,

|

||||

),

|

||||

) -> ImportModelResponse:

|

||||

"""Add a model using its local path, repo_id, or remote URL. Model characteristics will be probed and configured automatically"""

|

||||

|

||||

247

invokeai/app/api/routers/session_queue.py

Normal file

@@ -0,0 +1,247 @@

|

||||

from typing import Optional

|

||||

|

||||

from fastapi import Body, Path, Query

|

||||

from fastapi.routing import APIRouter

|

||||

from pydantic import BaseModel

|

||||

|

||||

from invokeai.app.services.session_processor.session_processor_common import SessionProcessorStatus

|

||||

from invokeai.app.services.session_queue.session_queue_common import (

|

||||

QUEUE_ITEM_STATUS,

|

||||

Batch,

|

||||

BatchStatus,

|

||||

CancelByBatchIDsResult,

|

||||

ClearResult,

|

||||

EnqueueBatchResult,

|

||||

EnqueueGraphResult,

|

||||

PruneResult,

|

||||

SessionQueueItem,

|

||||

SessionQueueItemDTO,

|

||||

SessionQueueStatus,

|

||||

)

|

||||

from invokeai.app.services.shared.models import CursorPaginatedResults

|

||||

|

||||

from ...services.graph import Graph

|

||||

from ..dependencies import ApiDependencies

|

||||

|

||||

session_queue_router = APIRouter(prefix="/v1/queue", tags=["queue"])

|

||||

|

||||

|

||||

class SessionQueueAndProcessorStatus(BaseModel):

|

||||

"""The overall status of session queue and processor"""

|

||||

|

||||

queue: SessionQueueStatus

|

||||

processor: SessionProcessorStatus

|

||||

|

||||

|

||||

@session_queue_router.post(

|

||||

"/{queue_id}/enqueue_graph",

|

||||

operation_id="enqueue_graph",

|

||||

responses={

|

||||

201: {"model": EnqueueGraphResult},

|

||||

},

|

||||

)

|

||||

async def enqueue_graph(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

graph: Graph = Body(description="The graph to enqueue"),

|

||||

prepend: bool = Body(default=False, description="Whether or not to prepend this batch in the queue"),

|

||||

) -> EnqueueGraphResult:

|

||||

"""Enqueues a graph for single execution."""

|

||||

|

||||

return ApiDependencies.invoker.services.session_queue.enqueue_graph(queue_id=queue_id, graph=graph, prepend=prepend)

|

||||

|

||||

|

||||

@session_queue_router.post(

|

||||

"/{queue_id}/enqueue_batch",

|

||||

operation_id="enqueue_batch",

|

||||

responses={

|

||||

201: {"model": EnqueueBatchResult},

|

||||

},

|

||||

)

|

||||

async def enqueue_batch(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

batch: Batch = Body(description="Batch to process"),

|

||||

prepend: bool = Body(default=False, description="Whether or not to prepend this batch in the queue"),

|

||||

) -> EnqueueBatchResult:

|

||||

"""Processes a batch and enqueues the output graphs for execution."""

|

||||

|

||||

return ApiDependencies.invoker.services.session_queue.enqueue_batch(queue_id=queue_id, batch=batch, prepend=prepend)

|

||||

|

||||

|

||||

@session_queue_router.get(

|

||||

"/{queue_id}/list",

|

||||

operation_id="list_queue_items",

|

||||

responses={

|

||||

200: {"model": CursorPaginatedResults[SessionQueueItemDTO]},

|

||||

},

|

||||

)

|

||||

async def list_queue_items(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

limit: int = Query(default=50, description="The number of items to fetch"),

|

||||

status: Optional[QUEUE_ITEM_STATUS] = Query(default=None, description="The status of items to fetch"),

|

||||

cursor: Optional[int] = Query(default=None, description="The pagination cursor"),

|

||||

priority: int = Query(default=0, description="The pagination cursor priority"),

|

||||

) -> CursorPaginatedResults[SessionQueueItemDTO]:

|

||||

"""Gets all queue items (without graphs)"""

|

||||

|

||||

return ApiDependencies.invoker.services.session_queue.list_queue_items(

|

||||

queue_id=queue_id, limit=limit, status=status, cursor=cursor, priority=priority

|

||||

)

|

||||

|

||||

|

||||

@session_queue_router.put(

|

||||

"/{queue_id}/processor/resume",

|

||||

operation_id="resume",

|

||||

responses={200: {"model": SessionProcessorStatus}},

|

||||

)

|

||||

async def resume(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

) -> SessionProcessorStatus:

|

||||

"""Resumes session processor"""

|

||||

return ApiDependencies.invoker.services.session_processor.resume()

|

||||

|

||||

|

||||

@session_queue_router.put(

|

||||

"/{queue_id}/processor/pause",

|

||||

operation_id="pause",

|

||||

responses={200: {"model": SessionProcessorStatus}},

|

||||

)

|

||||

async def pause(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

) -> SessionProcessorStatus:

|

||||

"""Pauses session processor"""

|

||||

return ApiDependencies.invoker.services.session_processor.pause()

|

||||

|

||||

|

||||

@session_queue_router.put(

|

||||

"/{queue_id}/cancel_by_batch_ids",

|

||||

operation_id="cancel_by_batch_ids",

|

||||

responses={200: {"model": CancelByBatchIDsResult}},

|

||||

)

|

||||

async def cancel_by_batch_ids(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

batch_ids: list[str] = Body(description="The list of batch_ids to cancel all queue items for", embed=True),

|

||||

) -> CancelByBatchIDsResult:

|

||||

"""Immediately cancels all queue items from the given batch ids"""

|

||||

return ApiDependencies.invoker.services.session_queue.cancel_by_batch_ids(queue_id=queue_id, batch_ids=batch_ids)

|

||||

|

||||

|

||||

@session_queue_router.put(

|

||||

"/{queue_id}/clear",

|

||||

operation_id="clear",

|

||||

responses={

|

||||

200: {"model": ClearResult},

|

||||

},

|

||||

)

|

||||

async def clear(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

) -> ClearResult:

|

||||

"""Clears the queue entirely, immediately canceling the currently-executing session"""

|

||||

queue_item = ApiDependencies.invoker.services.session_queue.get_current(queue_id)

|

||||

if queue_item is not None:

|

||||

ApiDependencies.invoker.services.session_queue.cancel_queue_item(queue_item.item_id)

|

||||

clear_result = ApiDependencies.invoker.services.session_queue.clear(queue_id)

|

||||

return clear_result

|

||||

|

||||

|

||||

@session_queue_router.put(

|

||||

"/{queue_id}/prune",

|

||||

operation_id="prune",

|

||||

responses={

|

||||

200: {"model": PruneResult},

|

||||

},

|

||||

)

|

||||

async def prune(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

) -> PruneResult:

|

||||

"""Prunes all completed or errored queue items"""

|

||||

return ApiDependencies.invoker.services.session_queue.prune(queue_id)

|

||||

|

||||

|

||||

@session_queue_router.get(

|

||||

"/{queue_id}/current",

|

||||

operation_id="get_current_queue_item",

|

||||

responses={

|

||||

200: {"model": Optional[SessionQueueItem]},

|

||||

},

|

||||

)

|

||||

async def get_current_queue_item(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

) -> Optional[SessionQueueItem]:

|

||||

"""Gets the currently executing queue item"""

|

||||

return ApiDependencies.invoker.services.session_queue.get_current(queue_id)

|

||||

|

||||

|

||||

@session_queue_router.get(

|

||||

"/{queue_id}/next",

|

||||

operation_id="get_next_queue_item",

|

||||

responses={

|

||||

200: {"model": Optional[SessionQueueItem]},

|

||||

},

|

||||

)

|

||||

async def get_next_queue_item(

|

||||

queue_id: str = Path(description="The queue id to perform this operation on"),

|

||||

) -> Optional[SessionQueueItem]:

|

||||

"""Gets the next queue item, without executing it"""

|

||||

return ApiDependencies.invoker.services.session_queue.get_next(queue_id)

|

||||

|

||||