- Added support for pyreadline3 so that Windows users can benefit.

- Added the !search command to search the history for a matching string:

~~~

!search puppies

[20] puppies at the food bowl -Ak_lms

[54] house overrun by hungry puppies -C20 -s100

~~~

- Added the !clear command to clear the in-memory and on-disk

command history.
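
A minimal sketch of how a `!search`-style lookup over the command history might work. The function name `search_history` and the plain list standing in for the readline history are assumptions for illustration, not the script's actual implementation.

```python
def search_history(history, needle):
    """Return (index, command) pairs for history entries containing needle.

    `history` is a plain list standing in for the readline history
    (an assumption); indices are 1-based, like the numbered output above.
    """
    return [
        (index, line)
        for index, line in enumerate(history, start=1)
        if needle in line
    ]


if __name__ == "__main__":
    commands = [
        "a cat on a mat -s50",
        "puppies at the food bowl -Ak_lms",
        "house overrun by hungry puppies -C20 -s100",
    ]
    for index, command in search_history(commands, "puppies"):
        print(f"[{index}] {command}")
```

A plain substring match is used here for simplicity; the real command may match differently.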

- embiggen needs to use ddim sampler due to low step count

- --hires_fix option needs to be written to log and command string

- fix call signature of _init_image_mask()

- When generating multiple images, the first seed was being used for second

and subsequent files. This should only happen when variations are being

generated. Now fixed.

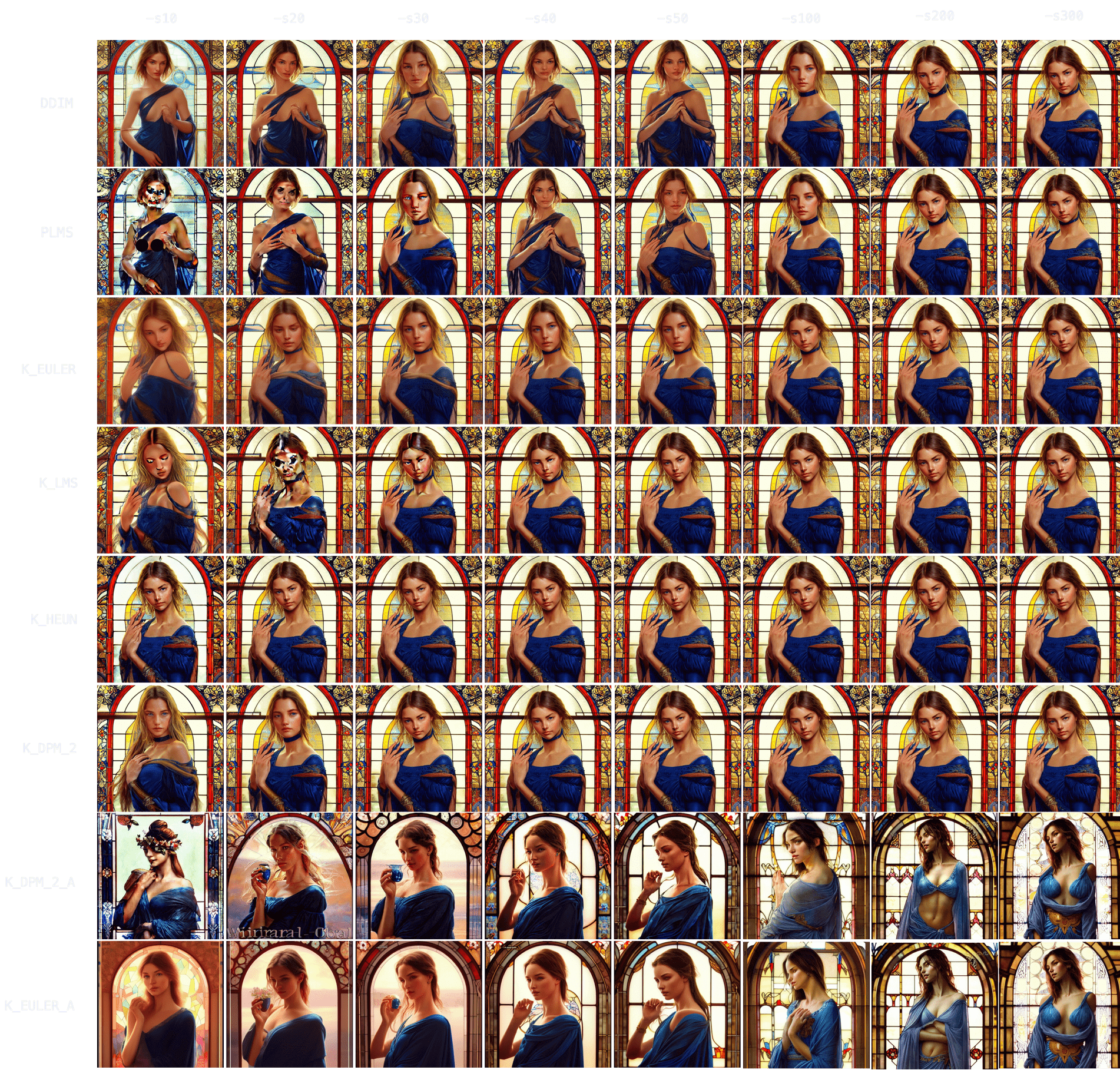

- img2img confirmed working with all samplers

- inpainting working on ddim & plms. Changes to k-diffusion

module seem to be needed for inpainting support.

- switched k-diffuser noise schedule to original karras schedule,

which reduces the step number needed for good results

- If readline.set_auto_history() is not implemented, as in pyreadline3, the script falls

back gracefully to automatic history saving. The only issue with this is that

!history commands will be recorded in the history.

- !fetch on missing file no longer crashes script

- !history is now one of the autocomplete commands

- .dream_history now stored in output directory rather than ~user directory.

An important limitation of the last feature is that the history is

loaded and saved to the .dream_history file in the --outdir directory

specified at script launch time. It is not swapped around when the

--outdir is changed during the session.

Add message about interpolation size

Fix crash if sampler not set to DDIM, change parameter name to hires_fix

Hi res mode fix duplicates with img2img scaling

- When --save_orig *not* provided during image generation with

upscaling/face fixing, an extra image file was being created. This

PR fixes the problem.

- Also generalizes the tab autocomplete for image paths such that

autocomplete searches the output directory for all path-modifying

options except for --outdir.

- normalized how filenames are written out when postprocessing invoked

- various fixes of bugs encountered during testing

- updated documentation

- updated help text

- Enhance tab completion functionality

- Each of the switches that read a filepath (e.g. --init_img) will trigger file path completion. The

-S switch will display a list of recently-used seeds.

- Added new !fetch command to retrieve the metadata from a previously-generated image and populate the

readline linebuffer with the appropriate editable command to regenerate.

- Added new !history command to display previous commands and reload them for modification.

- The !fetch and !fix commands both autocomplete *and* search automatically through the current

outdir for files.

- The completer maintains a list of recently used seeds and will try to autocomplete them.

- args.py will now attempt to return a metadata-containing Args

object using the following methods:

1. By looking for the 'sd-metadata' tag in the PNG info

2. By looking for the 'Dream' tag

3. As a last resort, fetch the seed from the filename and assume

defaults for all other options.
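
The three-step fallback above can be sketched as follows. This is an illustration only: the function name, the `(source, value)` return shape, and the use of a plain dict in place of real PNG text chunks are all hypothetical, and it assumes the 'sd-metadata' tag holds JSON while the 'Dream' tag holds a command string.

```python
import json
import os
import re


def metadata_from_png_info(info, filename):
    """Return (source, value) using the three-step fallback.

    `info` is a dict of PNG text chunks, a stand-in for whatever an
    image library would return (hypothetical interface).
    """
    # 1. Prefer the structured 'sd-metadata' tag (assumed to be JSON).
    if "sd-metadata" in info:
        return "sd-metadata", json.loads(info["sd-metadata"])
    # 2. Fall back to the 'Dream' tag (assumed to be a command string).
    if "Dream" in info:
        return "Dream", info["Dream"]
    # 3. Last resort: read the seed from a name like 000056.292144555.png.
    match = re.match(r"^\d+\.(\d+)", os.path.basename(filename))
    if match:
        return "filename", {"seed": int(match.group(1))}
    return "none", {}
```

With an empty `info` dict and the path `./outputs/img-samples/000056.292144555.png`, the sketch falls through to step 3 and recovers only the seed, leaving all other options to their defaults.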

Pin `openh264` to 2.3.0 until OpenCV supports 2.3.1 or newer. Added just to `environment-mac.yml` since I know this happens on M1 / Apple Silicon Macs (running macOS 13) and that's all I can test on.

Either Huggingface's 'transformers' lib introduced a regression in v4.22, or we changed how we're using 'transformers' in such a way that we break when using v4.22.

Pin to 'transformers==4.21.*'

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

With this "point" (the period character) in place, the link cannot be clicked in Warp Terminal to open the browser directly, and Chrome shows a blank page. It should open properly once it is removed.

Build the base generator in same place and way as other generators to reduce the chance of missed arguments in the future.

Fixes a crash when displaying in-progress images, though note that the feature still doesn't work for other reasons.

- For unknown reasons, conda removes the base directory from the path

on Macintoshes when pyproject.toml is present (even if the file is

empty). This commit renames pyproject.toml to pyproject.toml.hide

until the issue is understood better.

1. Add ldm/dream/restoration/__init__.py file that was inadvertently not

committed earlier.

2. Add '.' to sys.path to address weird mac problem reported in #723

- Adapted from PR #489, author Dominic Letz [https://github.com/dominicletz]

- Too many upstream changes to merge, so frankensteined it in.

- Added support for !fix syntax

- Added documentation

- The seed printed needs to be the one generated prior to the

initial noising operation. To do this, I added a new "first_seed"

argument to the image callback in dream.py.

- Closes #641

- modify strength of embiggen to reduce tiling ghosts

- normalize naming of postprocessed files (could improve more to avoid

name collisions)

- move restoration modules under ldm.dream

- supports gfpgan, esrgan, codeformer and embiggen

- To use:

dream> !fix ./outputs/img-samples/000056.292144555.png -ft gfpgan -U2 -G0.8

dream> !fix ./outputs/img-samples/000056.292144555.png -ft codeformer -G 0.8

dream> !fix ./outputs/img-samples/000056.29214455.png -U4

dream> !fix ./outputs/img-samples/000056.292144555.png -embiggen 1.5

The first example invokes gfpgan to fix faces and esrgan to upscale.

The second example invokes codeformer to fix faces, no upscaling

The third example uses esrgan to upscale 4X

The fourth example runs embiggen to enlarge 1.5X

- This is very preliminary work. There are some anomalies to note:

1. The syntax is non-obvious. I would prefer something like:

!fix esrgan,gfpgan

!fix esrgan

!fix embiggen,codeformer

However, this will require refactoring the gfpgan and embiggen

code.

2. Images generated using gfpgan, esrgan or codeformer all are named

"xxxxxx.xxxxxx.postprocessed.png" and the original is saved.

However, the prefix is a new one that is not related to the

original.

3. Images generated using embiggen are named "xxxxx.xxxxxxx.png",

and once again the prefix is new. I'm not sure whether the

prefix should be aligned with the original file's prefix or not.

Probably not, but opinions welcome.

Please don't make me keep having to clean this up

1) Centering of the front matter is completely wrecked

2) We are not a billboard for Discord. We have a perfectly good badge - if the *link* needs fixing, fix it.

3) Stop putting HTML in Markdown

4) We need to state who and what we are once, clearly, not 3 times...

- <b>InvokeAI: A Stable Diffusion Toolkit</b>

- # Stable Diffusion Dream Script

- # **InvokeAI - A Stable Diffusion Toolkit**

5) Headings in Markdown SHOULD NOT HAVE additional formatting

Allowed values are 'auto', 'float32', 'autocast', 'float16'. If not specified, or if set to 'auto', a working precision is selected automatically based on the torch device.

Context: #526

Deprecated --full_precision / -F

Tested on both cuda and cpu by calling scripts/dream.py without arguments and checked the auto configuration worked. With --precision=auto/float32/autocast/float16 it performs as expected, either working or failing with a reasonable error. Also checked Img2Img.
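
A rough sketch of how a `--precision` value could be resolved. The function name `choose_precision` is hypothetical, and the rule used for 'auto' (autocast on cuda, float32 on everything else) is an assumption made for illustration; the commit does not spell out the actual per-device choice.

```python
ALLOWED_PRECISIONS = ("auto", "float32", "autocast", "float16")


def choose_precision(requested, device_type):
    """Resolve a requested precision to a concrete setting.

    `requested` may be None (option not given). The 'auto' mapping
    below is an assumed rule, not the project's confirmed behaviour.
    """
    requested = requested or "auto"
    if requested not in ALLOWED_PRECISIONS:
        raise ValueError(f"unknown precision: {requested!r}")
    if requested != "auto":
        # An explicit choice is passed through unchanged.
        return requested
    return "autocast" if device_type == "cuda" else "float32"
```

Rejecting unknown values up front is what lets an unsupported combination fail with a reasonable error rather than deep inside model code.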

1. let users install Rust right at the beginning in order to avoid some troubleshooting later on

2. add "conda deactivate" for troubleshooting once ldm was activated

Fix conflict

Update INSTALL_MAC.md

fix double bs python path in cli.md and fix tables again

add more keys in cli.md

fix annotations in install_mac.md

remove torchaudio from pytorch-nightly installation

fix self reference

* Support color correction for img2img and inpainting, avoiding the shift to magenta seen when running images through img2img repeatedly.

* Fix docs for color correction

* add --init_color to prompt reconstruction

* For best results, the --init_color option should point to the *very first* image used in the sequence of img2img operations. Otherwise color correction will skew towards cyan.

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

- use git-revision-date-localized with enabled creation date

- update requirements-mkdocs.txt and pin versions

- add requirements

- add dev addr

- fix template

- use better icons for repo and edit button

- remove odd extension

disabled toc in order to view those large tables

added linebreaks in long cells to stop multiline arguments/shortcut

added backticks around arguments to stop interpreting `<...>` as html

added missing identifiers to codeblocks

changed html tags to markdown to insert the png

Fixes:

File "stable-diffusion/ldm/modules/diffusionmodules/model.py", line 37, in nonlinearity

return x*torch.sigmoid(x)

RuntimeError: CUDA out of memory. Tried to allocate 1.56 GiB [..]

Now up to 1536x1280 is possible on 8GB VRAM.

Also remove unused SiLU class.

* Added linux to the workflows

- rename workflow files

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* fixes: run on merge to 'main', 'dev';

- reduce dev merge test cases to 1 (1 takes 11 minutes 😯)

- fix model cache name

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* add test prompts to workflows

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Co-authored-by: James Reynolds <magnsuviri@me.com>

Apply ~6% speedup by moving * self.scale to earlier on a smaller tensor.

When we have enough VRAM don't make a useless zeros tensor.

Switch between cuda/mps/cpu based on q.device.type to allow cleaner per architecture future optimizations.

For cuda and cpu keep VRAM usage and faster slicing consistent.

For cpu use smaller slices. Tested ~20% faster on i7, 9.8 to 7.7 s/it.

Fix = typo to self.mem_total >= 8 in einsum_op_mps_v2 as per #582 discussion.

- fixes no closing quote in pretty-printed dream_prompt string

- removes unnecessary -f switch when txt2img used

In addition, this commit does an experimental commenting-out of the

random.seed() call in the variation-generating part of ldm.dream.generator.base.

This fixes the problem of two calls that use the same seed and -v0.1

generating different images (#641). However, it does not fix the issue

of two images generated using the same seed and -VXXXXXX being

different.

- switch badge service to badgen, as I couldn't figure out shields.io

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Co-authored-by: Ben Alkov <ben.alkov@gmail.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

* due to changes in the metadata written to PNG files, web server cannot

display images

* issue is identified and will be fixed in next 24h

* Python 3.9 required for flask/react web server; environment must be

updated.

* Implements rudimentary api

* Fixes blocking in API

* Adds UI to monorepo > src/frontend/

* Updates frontend/README

* Reverts conda env name to `ldm`

* Fixes environment yamls

* CORS config for testing

* Fixes LogViewer position

* API WID

* Adds actions to image viewer

* Increases vite chunkSizeWarningLimit to 1500

* Implements init image

* Implements state persistence in localStorage

* Improve progress data handling

* Final build

* Fixes mimetypes error on windows

* Adds error logging

* Fixes bugged img2img strength component

* Adds sourcemaps to dev build

* Fixes missing key

* Changes connection status indicator to text

* Adds ability to serve other hosts than localhost

* Adding Flask API server

* Removes source maps from config

* Fixes prop transfer

* Add missing packages and add CORS support

* Adding API doc

* Remove defaults from openapi doc

* Adds basic error handling for server config query

* Mostly working socket.io implementation.

* Fixes bug preventing mask upload

* Fixes bug with sampler name not written to metadata

* UI Overhaul, numerous fixes

Co-authored-by: Kyle Schouviller <kyle0654@hotmail.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

* Feature complete for #266 with exception of several small deviations:

1. initial image and model weight hashes use full sha256 hash rather than first 8 digits

2. Initialization parameters for post-processing steps not provided

3. Uses top-level "images" tags for both a single image and a grid of images. This change was suggested in a comment.

* Added scripts/sd_metadata.py to retrieve and print metadata from PNG files

* New ldm.dream.args.Args class is a namespace-like object which holds all defaults and can be modified during execution to hold current settings.

* Modified dream.py and server.py to accommodate Args class.

This change makes it so any API clients can show the same error as what

happens in the terminal where you run the API. Useful for various WebUIs

to display more helpful error messages to users.

Co-authored-by: CapableWeb <capableweb@domain.com>

* Refactor generate.py and dream.py

* config file path (models.yaml) is parsed inside Generate() to simplify

API

* Better handling of keyboard interrupts in file loading mode vs

interactive

* Removed oodles of unused variables.

* move nonfunctional inpainting out of the scripts directory

* fix ugly ddim tqdm formatting

* fix embiggen breakage, formatting fixes

* fix web server handling of rel and abs outdir paths

* Can now specify either a relative or absolute path for outdir

* Outdir path does not need to be inside the stable-diffusion directory

* Closes security hole that allowed user to read any file within

stable-diffusion (eek!)

* Closes #536

* revert inadvertent change of conda env name (#528)
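
One common way to confine a web server to files under its output directory is sketched below. This is not the actual fix from this commit; the helper name `is_inside` is hypothetical, and the approach (resolve both paths, then compare their common prefix) is an assumption about how such a check could be written.

```python
import os


def is_inside(base_dir, requested_path):
    """Return True if requested_path resolves to a location under base_dir.

    Works for relative and absolute base directories; symlinks and
    '..' segments are resolved before the comparison.
    """
    base = os.path.realpath(base_dir)
    # os.path.join discards `base` when requested_path is absolute,
    # so absolute requests are checked on their own merits.
    target = os.path.realpath(os.path.join(base, requested_path))
    try:
        return os.path.commonpath([base, target]) == base
    except ValueError:
        # Raised on Windows when the paths are on different drives.
        return False
```

A server would call this before opening any requested file and refuse the request when it returns False.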

* Refactor pip requirements across the board

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* fix name, version in setup.py

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* Update notebooks for new requirements file changes

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* slightly more consistent in how the different scenarios are described

* moved the stuff about `/usr/bin/python` to be adjacent to the stuff about `/usr/bin/python3`

* added an example of the 'option 1' goal state

* described a way to directly answer the question: how many snakes are living in your computer?

Code cleanup and attention.py einsum_ops update for M1 16-32GB performance.

Expected: On par with fastest ever from 8 to 128GB for 512x512. Allows large images.

When running on just cpu (intel), a call to torch.layer_norm would error with RuntimeError: expected scalar type BFloat16 but found Float

Fix buggy device handling in model.py.

Tested with scripts/dream.py --full_precision on just cpu on intel laptop. Works but slow at ~10s/it.

* Add Embiggen automation

* Make embiggen_tiles masking more intelligent and count from one (at least for the user), rewrite sections of Embiggen README, fix various typos throughout README

* drop duplicate log message

commit 1c649e4663

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 13:29:16 2022 -0400

fix torchvision dependency version #511

commit 4d197f699e

Merge: a3e07fb 190ba78

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 07:29:19 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit a3e07fb84a

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 07:28:58 2022 -0400

fix grid crash

commit 9fa1f31bf2

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 07:07:05 2022 -0400

fix opencv and realesrgan dependencies in mac install

commit 190ba78960

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 12 01:50:58 2022 -0400

Update requirements-mac.txt

Fixed dangling dash on last line.

commit 25d9ccc509

Author: Any-Winter-4079 <50542132+Any-Winter-4079@users.noreply.github.com>

Date: Mon Sep 12 03:17:29 2022 +0200

Update model.py

commit 9cdf3aca7d

Author: Any-Winter-4079 <50542132+Any-Winter-4079@users.noreply.github.com>

Date: Mon Sep 12 02:52:36 2022 +0200

Update attention.py

Performance improvements to generate larger images in M1 #431

Update attention.py

Added dtype=r1.dtype to softmax

commit 49a96b90d8

Author: Mihai <299015+mh-dm@users.noreply.github.com>

Date: Sat Sep 10 16:58:07 2022 +0300

~7% speedup (1.57 to 1.69it/s) from switch to += in ldm.modules.attention. (#482)

Tested on 8GB eGPU nvidia setup so YMMV.

512x512 output, max VRAM stays same.

commit aba94b85e8

Author: Niek van der Maas <mail@niekvandermaas.nl>

Date: Fri Sep 9 15:01:37 2022 +0200

Fix macOS `pyenv` instructions, add code block highlight (#441)

Fix: `anaconda3-latest` does not work, specify the correct virtualenv, add missing init.

commit aac5102cf3

Author: Henry van Megen <h.vanmegen@gmail.com>

Date: Thu Sep 8 05:16:35 2022 +0200

Disabled debug output (#436)

Co-authored-by: Henry van Megen <hvanmegen@gmail.com>

commit 0ab5a36464

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 17:19:46 2022 -0400

fix missing lines in outputs

commit 5e433728b5

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 16:20:14 2022 -0400

upped max_steps in v1-finetune.yaml and fixed TI docs to address #493

commit 7708f4fb98

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 16:03:37 2022 -0400

slight efficiency gain by using += in attention.py

commit b86a1deb00

Author: blessedcoolant <54517381+blessedcoolant@users.noreply.github.com>

Date: Mon Sep 12 07:47:12 2022 +1200

Remove print statement styling (#504)

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 4951e66103

Author: chromaticist <mhostick@gmail.com>

Date: Sun Sep 11 12:44:26 2022 -0700

Adding support for .bin files from huggingface concepts (#498)

* Adding support for .bin files from huggingface concepts

* Updating documentation to include huggingface .bin info

commit 79b445b0ca

Merge: a323070 f7662c1

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 15:39:38 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit a323070a4d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 15:28:57 2022 -0400

update requirements for new location of gfpgan

commit f7662c1808

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 15:00:24 2022 -0400

update requirements for changed location of gfpgan

commit 93c242c9fb

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:47:58 2022 -0400

make gfpgan_model_exists flag available to web interface

commit c7c6cd7735

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:43:07 2022 -0400

Update UPSCALE.md

New instructions needed to accommodate fact that the ESRGAN and GFPGAN packages are now installed by environment.yaml.

commit 77ca83e103

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:31:56 2022 -0400

Update CLI.md

Final documentation tweak.

commit 0ea145d188

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:29:26 2022 -0400

Update CLI.md

More doc fixes.

commit 162285ae86

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:28:45 2022 -0400

Update CLI.md

Minor documentation fix

commit 37c921dfe2

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 14:26:41 2022 -0400

documentation enhancements

commit 4f72cb44ad

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 13:05:38 2022 -0400

moved the notebook files into their own directory

commit 878ef2e9e0

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 12:58:06 2022 -0400

documentation tweaks

commit 4923118610

Merge: 16f6a67 defafc0

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 12:51:25 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit defafc0e8e

Author: Dominic Letz <dominic@diode.io>

Date: Sun Sep 11 18:51:01 2022 +0200

Enable upscaling on m1 (#474)

commit 16f6a6731d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 12:47:26 2022 -0400

install GFPGAN inside SD repository in order to fix 'dark cast' issue #169

commit 0881d429f2

Author: blessedcoolant <54517381+blessedcoolant@users.noreply.github.com>

Date: Mon Sep 12 03:52:43 2022 +1200

Docs Update (#466)

Authored-by: @blessedcoolant

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 9a29d442b4

Author: Gérald LONLAS <gerald@lonlas.com>

Date: Sun Sep 11 23:23:18 2022 +0800

Revert "Add 3x Upscale option on the Web UI (#442)" (#488)

This reverts commit f8a540881c.

commit d301836fbd

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:52:19 2022 -0400

can select prior output for init_img using -1, -2, etc

commit 70aa674e9e

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:34:06 2022 -0400

merge PR #495 - keep using float16 in ldm.modules.attention

commit 8748370f44

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:22:32 2022 -0400

negative -S indexing recovers correct previous seed; closes issue #476
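
A small sketch of the negative-index idea, where `-S -1` refers to the most recently used seed. The function name `resolve_seed` and the list of previous seeds are hypothetical stand-ins, not the code from this commit.

```python
def resolve_seed(requested, previous_seeds):
    """Map a negative seed value onto a previously used seed.

    `previous_seeds` is ordered oldest to newest, so -1 is the most
    recent one. Non-negative values are returned unchanged.
    """
    if requested >= 0:
        return requested
    try:
        return previous_seeds[requested]
    except IndexError:
        raise ValueError(f"no seed at history position {requested}") from None
```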

commit 839e30e4b8

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 11 10:02:44 2022 -0400

improve CUDA VRAM monitoring

extra check that device==cuda before getting VRAM stats

commit bfb2781279

Author: tildebyte <337875+tildebyte@users.noreply.github.com>

Date: Sat Sep 10 10:15:56 2022 -0400

fix(readme): add note about updating env via conda (#475)

commit 5c43988862

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 10:02:43 2022 -0400

reduce VRAM memory usage by half during model loading

* This moves the call to half() before model.to(device) to avoid GPU

copy of full model. Improves speed and reduces memory usage dramatically

* This fix contributed by @mh-dm (Mihai)

commit 99122708ca

Merge: 817c4a2 ecc6b75

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 09:54:34 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit 817c4a26de

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 09:53:27 2022 -0400

remove -F option from normalized prompt; closes #483

commit ecc6b75a3e

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 10 09:53:27 2022 -0400

remove -F option from normalized prompt

commit 723d074442

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Fri Sep 9 18:49:51 2022 -0400

Allow ctrl c when using --from_file (#472)

* added ansi escapes to highlight key parts of CLI session

* adjust exception handling so that ^C will abort when reading prompts from a file

commit 75f633cda8

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Fri Sep 9 12:03:45 2022 -0400

re-add new logo

commit 10db192cc4

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Fri Sep 9 09:26:10 2022 -0400

changes to dogettx optimizations to run on m1

* Author @any-winter-4079

* Author @dogettx

Thanks to many individuals who contributed time and hardware to

benchmarking and debugging these changes.

commit c85ae00b33

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 23:57:45 2022 -0400

fix bug which caused seed to get "stuck" on previous image even when UI specified -1

commit 1b5aae3ef3

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:36:47 2022 -0400

add icon to dream web server

commit 6abf739315

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:25:09 2022 -0400

add favicon to web server

commit db825b8138

Merge: 33874ba afee7f9

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:17:37 2022 -0400

Merge branch 'deNULL-development' into development

commit 33874bae8d

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:16:29 2022 -0400

Squashed commit of the following:

commit afee7f9cea

Merge: 6531446 171f8db

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:14:32 2022 -0400

Merge branch 'development' of github.com:deNULL/stable-diffusion into deNULL-development

commit 171f8db742

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 03:15:20 2022 +0300

saving full prompt to metadata when using web ui

commit d7e67b62f0

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 01:51:47 2022 +0300

better logic for clicking to make variations

commit afee7f9cea

Merge: 6531446 171f8db

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 22:14:32 2022 -0400

Merge branch 'development' of github.com:deNULL/stable-diffusion into deNULL-development

commit 653144694f

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 20:41:37 2022 -0400

work around unexplained crash when timesteps=1000 (#440)

* work around unexplained crash when timesteps=1000

* this fix seems to work

commit c33a84cdfd

Author: blessedcoolant <54517381+blessedcoolant@users.noreply.github.com>

Date: Fri Sep 9 12:39:51 2022 +1200

Add New Logo (#454)

* Add instructions on how to install alongside pyenv (#393)

Like probably many others, I have a lot of different virtualenvs, one for each project. Most of them are handled by `pyenv`.

After installing according to these instructions I had issues with `pyenv` and `miniconda` fighting over the $PATH of my system.

But then I stumbled upon this nice solution on SO: https://stackoverflow.com/a/73139031 , upon which I have based my suggested changes.

It runs perfectly on my M1 setup, with the anaconda setup as a virtual environment handled by pyenv.

Feel free to incorporate these instructions as you see fit.

Thanks a million for all your hard work.

* Disabled debug output (#436)

Co-authored-by: Henry van Megen <hvanmegen@gmail.com>

* Add New Logo

Co-authored-by: Håvard Gulldahl <havard@lurtgjort.no>

Co-authored-by: Henry van Megen <h.vanmegen@gmail.com>

Co-authored-by: Henry van Megen <hvanmegen@gmail.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit f8a540881c

Author: Gérald LONLAS <gerald@lonlas.com>

Date: Fri Sep 9 01:45:54 2022 +0800

Add 3x Upscale option on the Web UI (#442)

commit 244239e5f6

Author: James Reynolds <magnusviri@users.noreply.github.com>

Date: Thu Sep 8 05:36:33 2022 -0600

macOS CI workflow, dream.py exits with an error, but the workflow com… (#396)

* macOS CI workflow, dream.py exits with an error, but the workflow completes.

* Files for testing

Co-authored-by: James Reynolds <magnsuviri@me.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 711d49ed30

Author: James Reynolds <magnusviri@users.noreply.github.com>

Date: Thu Sep 8 05:35:08 2022 -0600

Cache model workflow (#394)

* Add workflow that caches the model, step 1 for CI

* Change name of workflow job

Co-authored-by: James Reynolds <magnsuviri@me.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 7996a30e3a

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Thu Sep 8 07:34:03 2022 -0400

add auto-creation of mask for inpainting (#438)

* now use a single init image for both image and mask

* turn on debugging for now to write out mask and image

* add back -M option as a fallback

commit a69ca31f34

Author: elliotsayes <elliotsayes@gmail.com>

Date: Thu Sep 8 15:30:06 2022 +1200

.gitignore WebUI temp files (#430)

* Add instructions on how to install alongside pyenv (#393)

Like probably many others, I have a lot of different virtualenvs, one for each project. Most of them are handled by `pyenv`.

After installing according to these instructions I had issues with `pyenv` and `miniconda` fighting over the $PATH of my system.

But then I stumbled upon this nice solution on SO: https://stackoverflow.com/a/73139031 , upon which I have based my suggested changes.

It runs perfectly on my M1 setup, with the anaconda setup as a virtual environment handled by pyenv.

Feel free to incorporate these instructions as you see fit.

Thanks a million for all your hard work.

* .gitignore WebUI temp files

Co-authored-by: Håvard Gulldahl <havard@lurtgjort.no>

commit 5c6b612a72

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 22:50:55 2022 -0400

fix bug that caused same seed to be redisplayed repeatedly

commit 56f155c590

Author: Johan Roxendal <johan@roxendal.com>

Date: Thu Sep 8 04:50:06 2022 +0200

added support for parsing run log and displaying images in the frontend init state (#410)

Co-authored-by: Johan Roxendal <johan.roxendal@litteraturbanken.se>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

commit 41687746be

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 20:24:35 2022 -0400

added missing initialization of latent_noise to None

commit 171f8db742

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 03:15:20 2022 +0300

saving full prompt to metadata when using web ui

commit d7e67b62f0

Author: Denis Olshin <me@denull.ru>

Date: Thu Sep 8 01:51:47 2022 +0300

better logic for clicking to make variations

commit d1d044aa87

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 17:56:59 2022 -0400

actual image seed now written into web log rather than -1 (#428)

commit edada042b3

Author: Arturo Mendivil <60411196+artmen1516@users.noreply.github.com>

Date: Wed Sep 7 10:42:26 2022 -0700

Improve notebook and add requirements file (#422)

commit 29ab3c2028

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 13:28:11 2022 -0400

disable neonpixel optimizations on M1 hardware (#414)

* disable neonpixel optimizations on M1 hardware

* fix typo that was causing random noise images on m1

commit 7670ecc63f

Author: cody <cnmizell@gmail.com>

Date: Wed Sep 7 12:24:41 2022 -0500

add more keyboard support on the web server (#391)

add ability to submit prompts with the "enter" key

add ability to cancel generations with the "escape" key

commit dd2aedacaf

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Wed Sep 7 13:23:53 2022 -0400

report VRAM usage stats during initial model loading (#419)

commit f6284777e6

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Tue Sep 6 17:12:39 2022 -0400

Squashed commit of the following:

commit 7d1344282d942a33dcecda4d5144fc154ec82915

Merge: caf4ea3 ebeb556

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 10:07:27 2022 -0400

Merge branch 'development' of github.com:WebDev9000/stable-diffusion into WebDev9000-development

commit ebeb556af9

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 18:05:15 2022 -0700

Fixed unintentionally removed lines

commit ff2c4b9a1b

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 17:50:13 2022 -0700

Add ability to recreate variations via image click

commit c012929cda

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 14:35:33 2022 -0700

Add files via upload

commit 02a6018992

Author: Web Dev 9000 <rirath@gmail.com>

Date: Sun Sep 4 14:35:07 2022 -0700

Add files via upload

commit eef788981c

Author: Olivier Louvignes <olivier@mg-crea.com>

Date: Tue Sep 6 12:41:08 2022 +0200

feat(txt2img): allow from_file to work with len(lines) < batch_size (#349)

commit 720e5cd651

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 20:40:10 2022 -0400

Refactoring simplet2i (#387)

* start refactoring - not yet functional

* first phase of refactor done - not sure weighted prompts working

* Second phase of refactoring. Everything mostly working.

* The refactoring has moved all the hard-core inference work into

ldm.dream.generator.*, where there are submodules for txt2img and

img2img. inpaint will go in there as well.

* Some additional refactoring will be done soon, but relatively

minor work.

* fix -save_orig flag to actually work

* add @neonsecret attention.py memory optimization

* remove unneeded imports

* move token logging into conditioning.py

* add placeholder version of inpaint; porting in progress

* fix crash in img2img

* inpainting working; not tested on variations

* fix crashes in img2img

* ported attention.py memory optimization #117 from basujindal branch

* added @torch_no_grad() decorators to img2img, txt2img, inpaint closures

* Final commit prior to PR against development

* fixup crash when generating intermediate images in web UI

* rename ldm.simplet2i to ldm.generate

* add backward-compatibility simplet2i shell with deprecation warning

* add back in mps exception, addresses @vargol comment in #354

* replaced Conditioning class with exported functions

* fix wrong type of with_variations attribute during initialization

* changed "image_iterator()" to "get_make_image()"

* raise NotImplementedError for calling get_make_image() in parent class

* Update ldm/generate.py

better error message

Co-authored-by: Kevin Gibbons <bakkot@gmail.com>

* minor stylistic fixes and assertion checks from code review

* moved get_noise() method into img2img class

* break get_noise() into two methods, one for txt2img and the other for img2img

* inpainting works on non-square images now

* make get_noise() an abstract method in base class

* much improved inpainting

Co-authored-by: Kevin Gibbons <bakkot@gmail.com>

commit 1ad2a8e567

Author: thealanle <35761977+thealanle@users.noreply.github.com>

Date: Mon Sep 5 17:35:04 2022 -0700

Fix --outdir function for web (#373)

* Fix --outdir function for web

* Removed unnecessary hardcoded path

commit 52d8bb2836

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 10:31:59 2022 -0400

Squashed commit of the following:

commit 0cd48e932f1326e000c46f4140f98697eb9bdc79

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Mon Sep 5 10:27:43 2022 -0400

resolve conflicts with development

commit d7bc8c12e0

Author: Scott McMillin <scott@scottmcmillin.com>

Date: Sun Sep 4 18:52:09 2022 -0500

Add title attribute back to img tag

commit 5397c89184

Author: Scott McMillin <scott@scottmcmillin.com>

Date: Sun Sep 4 13:49:46 2022 -0500

Remove temp code

commit 1da080b509

Author: Scott McMillin <scott@scottmcmillin.com>

Date: Sun Sep 4 13:33:56 2022 -0500

Cleaned up HTML; small style changes; image click opens image; add seed to figcaption beneath image

commit caf4ea3d89

Author: Adam Rice <adam@askadam.io>

Date: Mon Sep 5 10:05:39 2022 -0400

Add a 'Remove Image' button to clear the file upload field (#382)

* added "remove image" button

* styled a new "remove image" button

* Update index.js

commit 95c088b303

Author: Kevin Gibbons <bakkot@gmail.com>

Date: Sun Sep 4 19:04:14 2022 -0700

Revert "Add CORS headers to dream server to ease integration with third-party web interfaces" (#371)

This reverts commit 91e826e5f4.

commit a20113d5a3

Author: Kevin Gibbons <bakkot@gmail.com>

Date: Sun Sep 4 18:59:12 2022 -0700

put no_grad decorator on make_image closures (#375)

commit 0f93dadd6a

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 21:39:15 2022 -0400

fix several dangling references to --gfpgan option, which no longer exists

commit f4004f660e

Author: tildebyte <337875+tildebyte@users.noreply.github.com>

Date: Sun Sep 4 19:43:04 2022 -0400

TOIL(requirements): Split requirements to per-platform (#355)

* toil(reqs): split requirements to per-platform

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* toil(reqs): fix for Win and Lin...

...allow pip to resolve latest torch, numpy

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

* toil(install): update reqs in Win install notebook

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

commit 4406fd138d

Merge: 5116c81 fd7a72e

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 08:23:53 2022 -0400

Merge branch 'SebastianAigner-main' into development

Add support for full CORS headers for dream server.

commit fd7a72e147

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 08:23:11 2022 -0400

remove debugging message

commit 3a2be621f3

Merge: 91e826e 5116c81

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sun Sep 4 08:15:51 2022 -0400

Merge branch 'development' into main

commit 5116c8178c

Author: Justin Wong <1584142+wongjustin99@users.noreply.github.com>

Date: Sun Sep 4 07:17:58 2022 -0400

fix save_original flag saving to the same filename (#360)

* Update README.md with new Anaconda install steps (#347)

pip3 version did not work for me and this is the recommended way to install Anaconda now it seems

* fix save_original flag saving to the same filename

Before this, the `--save_orig` flag was not working. The upscaled/GFPGAN would overwrite the original output image.

Co-authored-by: greentext2 <112735219+greentext2@users.noreply.github.com>

commit 91e826e5f4

Author: Sebastian Aigner <SebastianAigner@users.noreply.github.com>

Date: Sun Sep 4 10:22:54 2022 +0200

Add CORS headers to dream server to ease integration with third-party web interfaces

commit 6266d9e8d6

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 15:45:20 2022 -0400

remove stray debugging message

commit 138956e516

Author: greentext2 <112735219+greentext2@users.noreply.github.com>

Date: Sat Sep 3 13:38:57 2022 -0500

Update README.md with new Anaconda install steps (#347)

pip3 version did not work for me and this is the recommended way to install Anaconda now it seems

commit 60be735e80

Author: Cora Johnson-Roberson <cora.johnson.roberson@gmail.com>

Date: Sat Sep 3 14:28:34 2022 -0400

Switch to regular pytorch channel and restore Python 3.10 for Macs. (#301)

* Switch to regular pytorch channel and restore Python 3.10 for Macs.

Although pytorch-nightly should in theory be faster, it is currently

causing increased memory usage and slower iterations:

https://github.com/lstein/stable-diffusion/pull/283#issuecomment-1234784885

This changes the environment-mac.yaml file back to the regular pytorch

channel and moves the `transformers` dep into pip for now (since it

cannot be satisfied until tokenizers>=0.11 is built for Python 3.10).

* Specify versions for Pip packages as well.

commit d0d95d3a2a

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 14:10:31 2022 -0400

make initimg appear in web log

commit b90a215000

Merge: 1eee811 6270e31

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 13:47:15 2022 -0400

Merge branch 'prixt-seamless' into development

commit 6270e313b8

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 13:46:29 2022 -0400

add credit to prixt for seamless circular tiling

commit a01b7bdc40

Merge: 1eee811 9d88abe

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 13:43:04 2022 -0400

add web interface for seamless option

commit 1eee8111b9

Merge: 64eca42 fb857f0

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 12:33:39 2022 -0400

Merge branch 'development' of github.com:lstein/stable-diffusion into development

commit 64eca42610

Merge: 9130ad7 21a1f68

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 12:33:05 2022 -0400

Merge branch 'main' into development

* brings in small documentation fixes that were

added directly to main during release tweaking.

commit fb857f05ba

Author: Lincoln Stein <lincoln.stein@gmail.com>

Date: Sat Sep 3 12:07:07 2022 -0400

fix typo in docs

commit 9d88abe2ea

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 22:42:16 2022 +0900

fixed typo

commit a61e49bc97

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 22:39:35 2022 +0900

* Removed unnecessary code

* Added description about --seamless

commit 02bee4fdb1

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 16:08:03 2022 +0900

added --seamless tag logging to normalize_prompt

commit d922b53c26

Author: prixt <paraxite@naver.com>

Date: Sat Sep 3 15:13:31 2022 +0900

added seamless tiling mode and commands

* This moves the call to half() before model.to(device) to avoid GPU

copy of full model. Improves speed and reduces memory usage dramatically

* This fix contributed by @mh-dm (Mihai)

This merge adds the following major features:

* Support for image variations.

* Security fix for webGUI (binds to localhost by default; use

--host=0.0.0.0 to allow access from external interfaces).

* Scalable configs/models.yaml configuration file for adding more

models as they become available.

* More tuning and exception handling for M1 hardware running MPS.

* Various documentation fixes.

* Update README.md

Those []() link pairs get me every time.

* New issue template

* Added issue templates

* feat(install+run): add notebook for Windows for from-zero install...

...and run

Tested with JupyterLab and VSCode

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Signed-off-by: Ben Alkov <ben.alkov@gmail.com>

Co-authored-by: Lincoln Stein <lincoln.stein@gmail.com>

Co-authored-by: James Reynolds <magnusviri@users.noreply.github.com>

Co-authored-by: James Reynolds <magnsuviri@me.com>

* check that fixed side provided when requesting variant parameter sweep

(-v)

* move _get_noise() into outer scope to improve readability -

refactoring of big method call needed

By supplying --model (defaulting to stable-diffusion-1.4) a user can specify which model to load.

Width/Height/Config Location/Weights Location are referenced from configs/models.yaml

models.yaml can serve as a base for expanding our support for other versions of Latent/Stable Diffusion.

Contained are parameters for default width/height, as well as where to find the config and weights for this model.

Adding a new model is as simple as adding to this file.
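
As a rough illustration of how such a lookup could work, the sketch below models the file as a plain Python dictionary. The key names (`config`, `weights`, `width`, `height`), the config path, and the helper `lookup_model` are assumptions made for this example; they are not taken from the actual configs/models.yaml.

```python
# Hypothetical stand-in for the parsed contents of configs/models.yaml.
# Key names and paths are illustrative assumptions, not the real file.
MODELS = {
    "stable-diffusion-1.4": {
        "config": "configs/stable-diffusion/v1-inference.yaml",
        "weights": "models/ldm/stable-diffusion-v1/model.ckpt",
        "width": 512,
        "height": 512,
    },
}


def lookup_model(name="stable-diffusion-1.4", models=MODELS):
    """Return the settings for `name`, or raise a readable error."""
    try:
        return models[name]
    except KeyError:
        known = ", ".join(sorted(models))
        raise ValueError(f"unknown model {name!r}; known models: {known}") from None


settings = lookup_model()
print(settings["weights"], settings["width"], settings["height"])
```

Under this layout, supporting another model would only mean adding one more entry to the dictionary (or, in the real setup, to the YAML file).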

I'm using stable-diffusion on a 2022 Macbook M2 Air with 24 GB unified memory.

I see this taking about 2.0s/it.

I've moved many deps from pip to conda-forge, to take advantage of the

precompiled binaries. Some notes for Mac users, since I've seen a lot of

confusion about this:

One doesn't need the `apple` channel to run this on a Mac-- that's only

used by `tensorflow-deps`, required for running tensorflow-metal. For

that, I have an example environment.yml here:

https://developer.apple.com/forums/thread/711792?answerId=723276022#723276022

However, the `CONDA_ENV=osx-arm64` environment variable *is* needed to

ensure that you do not run any Intel-specific packages such as `mkl`,

which will fail with [cryptic errors](https://github.com/CompVis/stable-diffusion/issues/25#issuecomment-1226702274)

on the ARM architecture and cause the environment to break.

I've also added a comment in the env file about 3.10 not working yet.

When it becomes possible to update, those commands run on an osx-arm64

machine should work to determine the new version set.

Here's what a successful run of dream.py should look like:

```

$ python scripts/dream.py --full_precision

* Initializing, be patient...

Loading model from models/ldm/stable-diffusion-v1/model.ckpt

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 859.52 M params.

making attention of type 'vanilla' with 512 in_channels

Working with z of shape (1, 4, 32, 32) = 4096 dimensions.

making attention of type 'vanilla' with 512 in_channels

Using slower but more accurate full-precision math (--full_precision)

>> Setting Sampler to k_lms

model loaded in 6.12s

* Initialization done! Awaiting your command (-h for help, 'q' to quit)

dream> "an astronaut riding a horse"

Generating: 0%| | 0/1 [00:00<?, ?it/s]/Users/corajr/Documents/lstein/ldm/modules/embedding_manager.py:152: UserWarning: The operator 'aten::nonzero' is not currently supported on the MPS backend and will fall back to run on the CPU. This may have performance implications. (Triggered internally at /Users/runner/work/_temp/anaconda/conda-bld/pytorch_1662016319283/work/aten/src/ATen/mps/MPSFallback.mm:11.)

placeholder_idx = torch.where(

100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 50/50 [01:37<00:00, 1.95s/it]

Generating: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1/1 [01:38<00:00, 98.55s/it]

Usage stats:

1 image(s) generated in 98.60s

Max VRAM used for this generation: 0.00G

Outputs:

outputs/img-samples/000001.1525943180.png: "an astronaut riding a horse" -s50 -W512 -H512 -C7.5 -Ak_lms -F -S1525943180

```

- move all device init logic to T2I.__init__

- handle m1 specific edge case with autocast device type

- check torch.cuda.is_available before using cuda

* This functionality is triggered by the --fit option in the CLI (default

False), and by the "fit" checkbox in the WebGUI (default True)

* In addition, this commit contains a number of whitespace changes to

make the code more readable, as well as an attempt to unify the visual

appearance of info and warning messages.

* fix AttributeError crash when running on non-CUDA systems; closes issue #234 and issue #250

* although this prevents the dream.py script from crashing immediately on MPS systems, MPS support is still very much a work in progress.

* Allow configuration of which SD model to use

Closes https://github.com/lstein/stable-diffusion/issues/49. The syntax isn't quite the same (opting for --weights over --model), although --weights is more in line with the existing naming convention.

This method also locks us into models in the models/ldm/stable-diffusion-v1/ directory. Personally, I'm not averse to this, although a secondary solution may be necessary if we wish to supply weights from an external directory.

* Fix typo

* Allow either filename OR filepath input for arg

This approach allows both

--weights SD13

--weights C:/StableDiffusion/models/ldm/stable-diffusion-v1/SD13.ckpt
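
A minimal sketch of how both forms might be resolved is shown below. The function name `resolve_weights` and the rule it applies (treat the argument as a path if it has a directory part or a `.ckpt` suffix, otherwise look it up in the default directory) are assumptions for illustration, not the code from this change.

```python
from pathlib import PurePosixPath

# Default location mentioned in the commit message above.
DEFAULT_DIR = "models/ldm/stable-diffusion-v1"


def resolve_weights(arg, default_dir=DEFAULT_DIR):
    """Map a --weights argument to a checkpoint path (hypothetical helper)."""
    # Normalize backslashes so Windows-style input is handled the same way.
    path = PurePosixPath(arg.replace("\\", "/"))
    if path.suffix == ".ckpt" or len(path.parts) > 1:
        # Looks like a file path already: use it unchanged.
        return str(path)
    # Bare name: assume it lives in the default directory with a .ckpt suffix.
    return str(PurePosixPath(default_dir) / f"{arg}.ckpt")


print(resolve_weights("SD13"))
print(resolve_weights("C:/StableDiffusion/models/ldm/stable-diffusion-v1/SD13.ckpt"))
```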

Fixed merging embeddings based on the changes made in textual inversion. Tested and working. Inverted their logic to prioritize Stable Diffusion implementation over alternatives, but left the option for alternatives to still be used.

* Optimizations to the training model

Based on the changes made in textual_inversion, I carried over the relevant changes that improve model training. These changes reduce the amount of memory used, significantly improve the speed at which training runs, and improve the quality of the results.

It also fixes the problem where the model trainer wouldn't automatically stop when it hit the set number of steps.

* Update main.py

Cleaned up whitespace

Removed the changes to the index.html and .gitattributes for this PR. Will add them in separate PRs.

Applied recommended change for resolving the case issue.

Comparing the results of os.getcwd() and os.path.realpath() can fail on Windows because the drive letter casing may differ (C:\ vs. c:\). This change addresses that by normalizing the strings before comparing.
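
The kind of normalization described here can be sketched with the standard library. The example uses `ntpath` directly so that it behaves the same on any platform; the helper name `same_windows_path` is made up for this illustration and is not the code from the change.

```python
import ntpath


def same_windows_path(a, b):
    """Compare two Windows paths, ignoring case and slash direction."""
    # normpath collapses redundant separators; normcase lowercases the
    # string and converts forward slashes to backslashes.
    norm_a = ntpath.normcase(ntpath.normpath(a))
    norm_b = ntpath.normcase(ntpath.normpath(b))
    return norm_a == norm_b


print(same_windows_path(r"C:\StableDiffusion\outputs", r"c:\stablediffusion\outputs"))
```

On Windows itself, `os.path.normcase` applies the same rule, so a comparison written with `os.path` would behave the same way there.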

This adds correct treatment of upscaling/face-fixing within the WebUI.

Also adds a basic status message so that the user knows what's happening

during the post-processing steps.

This adds an optional -t argument that prints color-coded tokenization. SD has a maximum of 77 tokens and silently discards tokens over the limit if your prompt is too long.

By using -t you can see how your prompt is being tokenized which helps prompt crafting.
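
To make the truncation behaviour concrete, here is a small sketch that colors kept tokens green and discarded tokens red. It takes an already-tokenized list as input; the whitespace split in the demo is only a stand-in for the real tokenizer, which produces sub-word tokens and may count start and end markers toward the limit. The helper names are hypothetical.

```python
MAX_TOKENS = 77  # limit quoted in the commit message above


def split_at_limit(tokens, limit=MAX_TOKENS):
    """Return (kept, discarded) token lists for a given limit."""
    return tokens[:limit], tokens[limit:]


def colorize(tokens, limit=MAX_TOKENS):
    """Render kept tokens in green and discarded tokens in red (ANSI codes)."""
    kept, dropped = split_at_limit(tokens, limit)
    green, red, reset = "\033[32m", "\033[31m", "\033[0m"
    parts = [f"{green}{t}{reset}" for t in kept]
    parts += [f"{red}{t}{reset}" for t in dropped]
    return " ".join(parts)


# Whitespace splitting is an assumption for the demo, not real tokenization.
demo_tokens = "a photo of an astronaut riding a horse".split()
print(colorize(demo_tokens, limit=5))
```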

- Quenched tokenizer warnings during model initialization.

- Changed "batch" to "iterations" for generating multiple images in

order to conserve vram.

- Updated README.

- Moved static folder from under scripts to top level. Can store other

static content there in future.

- Added screenshot of web server in action (to static folder).

example: "an apple: a banana:0 a watermelon:0.5"

the above example turns into 3 sub-prompts:

"an apple" 1.0 (default if no value)

"a banana" 0.0

"a watermelon" 0.5

The weights are added and normalized

The resulting image will be: apple 66%, banana 0%, watermelon 33%
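
The parsing and normalization described above can be sketched as follows. This is an illustrative parser under simple assumptions (a sub-prompt is text up to a colon, optionally followed by a number); the name `parse_weighted_prompt` is hypothetical and this is not necessarily how the project implements it.

```python
import re

# Text up to a colon, optionally followed by a numeric weight.
_SUBPROMPT = re.compile(
    r"\s*(?P<text>[^:]+?)\s*:\s*(?P<weight>-?\d+(?:\.\d+)?)?\s*"
)


def parse_weighted_prompt(prompt, default_weight=1.0):
    """Split a prompt into (text, normalized_weight) pairs."""
    parts = []
    pos = 0
    for match in _SUBPROMPT.finditer(prompt):
        raw = match.group("weight")
        weight = float(raw) if raw is not None else default_weight
        parts.append((match.group("text"), weight))
        pos = match.end()
    # Any trailing text without a colon gets the default weight.
    tail = prompt[pos:].strip()
    if tail:
        parts.append((tail, default_weight))
    total = sum(weight for _, weight in parts)
    if total == 0:
        # All weights zero: fall back to equal shares.
        return [(text, 1.0 / len(parts)) for text, _ in parts]
    return [(text, weight / total) for text, weight in parts]


for text, weight in parse_weighted_prompt("an apple: a banana:0 a watermelon:0.5"):
    print(f"{text!r}: {weight:.0%}")
```

The raw weights 1.0, 0.0 and 0.5 sum to 1.5, so dividing each by the total gives roughly two thirds for the apple, nothing for the banana, and one third for the watermelon.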

This allows users with 6 GB and 8 GB cards to run at 512x512, and allows even larger resolutions on bigger GPUs.

I compared the output in Beyond Compare and there are minor differences detected at tolerance 3, but side by side the differences are not perceptible.

You must not distribute the weights provided to you directly or indirectly without explicit consent of the authors.

You must not distribute harmful, offensive, dehumanizing content or otherwise harmful representations of people or their environments, cultures, religions, etc. produced with the model weights

or other generated content described in the "Misuse and Malicious Use" section in the model card.

The model weights are provided for research purposes only.

MIT License

Copyright (c) 2022 Lincoln D. Stein (https://github.com/lstein)

This software is derived from a fork of the source code available from

https://github.com/pesser/stable-diffusion and

https://github.com/CompVis/stable-diffusion. They carry the following

copyrights:

Copyright (c) 2022 Machine Vision and Learning Group, LMU Munich

Copyright (c) 2022 Robin Rombach and Patrick Esser and contributors

Please see individual source code files for copyright and authorship

attributions.

Permission is hereby granted, free of charge, to any person obtaining a copy

of this software and associated documentation files (the "Software"), to deal

in the Software without restriction, including without limitation the rights

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

copies of the Software, and to permit persons to whom the Software is

furnished to do so, subject to the following conditions:

The above copyright notice and this permission notice shall be included in all

copies or substantial portions of the Software.

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

SOFTWARE.

There is now a command-line script, located in scripts/dream.py, which

provides an interactive interface to image generation similar to

the "dream mothership" bot that Stability AI provided on its Discord

server. The advantage of this is that the lengthy model

initialization only happens once. After that, image generation is

fast.

[![CI checks on main badge]][CI checks on main link] [![CI checks on dev badge]][CI checks on dev link] [![latest commit to dev badge]][latest commit to dev link]

Note that this has only been tested in the Linux environment!

[![github open issues badge]][github open issues link] [![github open prs badge]][github open prs link]

~~~~

(ldm) ~/stable-diffusion$ ./scripts/dream.py

* Initializing, be patient...

Loading model from models/ldm/text2img-large/model.ckpt

LatentDiffusion: Running in eps-prediction mode

DiffusionWrapper has 872.30 M params.

making attention of type 'vanilla' with 512 in_channels

Working with z of shape (1, 4, 32, 32) = 4096 dimensions.

making attention of type 'vanilla' with 512 in_channels

Loading Bert tokenizer from "models/bert"

setting sampler to plms

[CI checks on dev badge]: https://flat.badgen.net/github/checks/invoke-ai/InvokeAI/development?label=CI%20status%20on%20dev&cache=900&icon=github

[CI checks on dev link]: https://github.com/invoke-ai/InvokeAI/actions?query=branch%3Adevelopment

[CI checks on main badge]: https://flat.badgen.net/github/checks/invoke-ai/InvokeAI/main?label=CI%20status%20on%20main&cache=900&icon=github

[CI checks on main link]: https://github.com/invoke-ai/InvokeAI/actions/workflows/test-dream-conda.yml

[latest commit to dev badge]: https://flat.badgen.net/github/last-commit/invoke-ai/InvokeAI/development?icon=github&color=yellow&label=last%20dev%20commit&cache=900

[latest commit to dev link]: https://github.com/invoke-ai/InvokeAI/commits/development

Downloading: "https://github.com/DagnyT/hardnet/raw/master/pretrained/train_liberty_with_aug/checkpoint_liberty_with_aug.pth" to /u/lstein/.cache/torch/hub/checkpoints/checkpoint_liberty_with_aug.pth

I added the requirement for torchmetrics to environment.yaml.

### Hardware Requirements

## Installation and support

#### System

Follow the directions from the original README, which starts below, to

configure the environment and install requirements. For support,

please use this repository's GitHub Issues tracking service. Feel free

to send me an email if you use and like the script.

You will need one of the following:

*Author:* Lincoln D. Stein <lincoln.stein@gmail.com>

- An NVIDIA-based graphics card with 4 GB or more of VRAM.

- An Apple computer with an M1 chip.

# Original README from CompVis/stable-diffusion

*Stable Diffusion was made possible thanks to a collaboration with [Stability AI](https://stability.ai/) and [Runway](https://runwayml.com/) and builds upon our previous work:*

#### Memory

[**High-Resolution Image Synthesis with Latent Diffusion Models**](https://arxiv.org/abs/2112.10752)<br/>

[Stable Diffusion](#stable-diffusion-v1) is a latent text-to-image diffusion

model.

Thanks to a generous compute donation from [Stability AI](https://stability.ai/) and support from [LAION](https://laion.ai/), we were able to train a Latent Diffusion Model on 512x512 images from a subset of the [LAION-5B](https://laion.ai/blog/laion-5b/) database.

Similar to Google's [Imagen](https://arxiv.org/abs/2205.11487),

this model uses a frozen CLIP ViT-L/14 text encoder to condition the model on text prompts.

With its 860M UNet and 123M text encoder, the model is relatively lightweight and runs on a GPU with at least 10GB VRAM.

See [this section](#stable-diffusion-v1) below and the [model card](https://huggingface.co/CompVis/stable-diffusion).

- At least 6 GB of free disk space for the machine learning model, Python, and all its dependencies.

## Requirements

A suitable [conda](https://conda.io/) environment named `ldm` can be created

and activated with:

#### Note

```
conda env create -f environment.yaml
conda activate ldm
```

Precision is auto configured based on the device. If however you encounter

errors like 'expected type Float but found Half' or 'not implemented for Half'

you can try starting `dream.py` with the `--precision=float32` flag:

*Note: Stable Diffusion v1 is a general text-to-image diffusion model and therefore mirrors biases and (mis-)conceptions that are present

in its training data.

Details on the training procedure and data, as well as the intended use of the model can be found in the corresponding [model card](https://huggingface.co/CompVis/stable-diffusion).

Research into the safe deployment of general text-to-image models is an ongoing effort. To prevent misuse and harm, we currently provide access to the checkpoints only for [academic research purposes upon request](https://stability.ai/academia-access-form).

**This is an experiment in safe and community-driven publication of a capable and general text-to-image model. We are working on a public release with a more permissive license that also incorporates ethical considerations.***

### Latest Changes

[Request access to Stable Diffusion v1 checkpoints for academic research](https://stability.ai/academia-access-form)

- vNEXT (TODO 2022)

### Weights

- Deprecated `--full_precision` / `-F`. Simply omit it and `dream.py` will auto

configure. To switch away from auto use the new flag like `--precision=float32`.

We currently provide three checkpoints, `sd-v1-1.ckpt`, `sd-v1-2.ckpt` and `sd-v1-3.ckpt`,

which were trained as follows,

- v1.14 (11 September 2022)

- `sd-v1-1.ckpt`: 237k steps at resolution `256x256` on [laion2B-en](https://huggingface.co/datasets/laion/laion2B-en).

194k steps at resolution `512x512` on [laion-high-resolution](https://huggingface.co/datasets/laion/laion-high-resolution) (170M examples from LAION-5B with resolution `>= 1024x1024`).

- `sd-v1-2.ckpt`: Resumed from `sd-v1-1.ckpt`.

515k steps at resolution `512x512` on "laion-improved-aesthetics" (a subset of laion2B-en,

filtered to images with an original size `>= 512x512`, estimated aesthetics score `> 5.0`, and an estimated watermark probability `< 0.5`. The watermark estimate is from the LAION-5B metadata, the aesthetics score is estimated using an [improved aesthetics estimator](https://github.com/christophschuhmann/improved-aesthetic-predictor)).

- `sd-v1-3.ckpt`: Resumed from `sd-v1-2.ckpt`. 195k steps at resolution `512x512` on "laion-improved-aesthetics" and 10% dropping of the text-conditioning to improve [classifier-free guidance sampling](https://arxiv.org/abs/2207.12598).

- Memory optimizations for small-RAM cards. 512x512 now possible on 4 GB GPUs.

- Full support for Apple hardware with M1 or M2 chips.

- Add "seamless mode" for circular tiling of image. Generates beautiful effects.

([prixt](https://github.com/prixt)).

- Inpainting support.

- Improved web server GUI.

- Lots of code and documentation cleanups.

Evaluations with different classifier-free guidance scales (1.5, 2.0, 3.0, 4.0,

5.0, 6.0, 7.0, 8.0) and 50 PLMS sampling

steps show the relative improvements of the checkpoints:

```
python scripts/txt2img.py --prompt "a photograph of an astronaut riding a horse" --plms
```

A full set of contribution guidelines, along with templates, is in progress, but for now the most

important thing is to **make your pull request against the "development" branch**, and not against

"main". This will help keep public breakage to a minimum and will allow you to propose more radical

changes.

By default, this uses a guidance scale of `--scale 7.5`, [Katherine Crowson's implementation](https://github.com/CompVis/latent-diffusion/pull/51) of the [PLMS](https://arxiv.org/abs/2202.09778) sampler,

and renders images of size 512x512 (which it was trained on) in 50 steps. All supported arguments are listed below (type `python scripts/txt2img.py --help`).
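The guidance scale mentioned above can be illustrated with a minimal sketch, assuming the standard classifier-free guidance formulation in which the unconditional noise prediction is pushed toward the text-conditioned one. The function name `apply_guidance` is hypothetical, and plain Python lists stand in for the tensors a real sampler would use.

```python
from typing import List, Sequence


def apply_guidance(
    eps_uncond: Sequence[float],
    eps_cond: Sequence[float],
    scale: float = 7.5,
) -> List[float]:
    """Blend unconditional and text-conditioned noise predictions.

    Hypothetical helper for illustration only: scale = 1.0 returns the
    conditioned prediction unchanged, larger values follow the prompt
    more strongly, and scale = 0.0 ignores the prompt entirely.
    """
    if len(eps_uncond) != len(eps_cond):
        raise ValueError("predictions must have the same length")
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


if __name__ == "__main__":
    # Toy two-element "noise predictions" instead of real model outputs.
    print(apply_guidance([0.0, 1.0], [1.0, 1.0]))
```

With the default `--scale 7.5`, the difference between the two predictions is amplified 7.5 times before it is added back to the unconditional prediction.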

```
  --config CONFIG       path to config which constructs model
  --ckpt CKPT           path to checkpoint of model
  --seed SEED           the seed (for reproducible sampling)
  --precision {full,autocast}
                        evaluate at this precision
```

### Support

Note: The inference config for all v1 versions is designed to be used with EMA-only checkpoints.

For this reason `use_ema=False` is set in the configuration, otherwise the code will try to switch from

non-EMA to EMA weights. If you want to examine the effect of EMA vs no EMA, we provide "full" checkpoints

which contain both types of weights. For these, `use_ema=False` will load and use the non-EMA weights.

For support, please use this repository's GitHub Issues tracking service. Feel free to send me an

email if you use and like the script.

Original portions of the software are Copyright (c) 2020

[Lincoln D. Stein](https://github.com/lstein)

#### Diffusers Integration

Another way to download and sample Stable Diffusion is by using the [diffusers library](https://github.com/huggingface/diffusers/tree/main#new--stable-diffusion-is-now-fully-compatible-with-diffusers):

```py

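# make sure you're logged in with `huggingface-cli login`
from torch import autocast
from diffusers import StableDiffusionPipeline

# the model ID is an assumption; substitute the checkpoint you have access to
pipe = StableDiffusionPipeline.from_pretrained("CompVis/stable-diffusion-v1-4", use_auth_token=True).to("cuda")

prompt = "a photo of an astronaut riding a horse on mars"
with autocast("cuda"):
    image = pipe(prompt)["sample"][0]

image.save("astronaut_rides_horse.png")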

```

### Image Modification with Stable Diffusion

By using a diffusion-denoising mechanism as first proposed by [SDEdit](https://arxiv.org/abs/2108.01073), the model can be used for different

tasks such as text-guided image-to-image translation and upscaling. Similar to the txt2img sampling script,

we provide a script to perform image modification with Stable Diffusion.

The following describes an example where a rough sketch made in [Pinta](https://www.pinta-project.com/) is converted into a detailed artwork.

```

python scripts/img2img.py --prompt "A fantasy landscape, trending on artstation" --init-img <path-to-img.jpg> --strength 0.8

```

Here, strength is a value between 0.0 and 1.0 that controls the amount of noise that is added to the input image.

Values that approach 1.0 allow for lots of variations but will also produce images that are not semantically consistent with the input. See the following example.

This procedure can, for example, also be used to upscale samples from the base model.
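As a rough illustration of how strength trades fidelity for variation, the sketch below assumes the SDEdit-style convention in which the input image is noised for a fraction of the sampling schedule and then denoised for the same number of steps. The helper name `noising_steps` is hypothetical and is not part of the scripts described here.

```python
def noising_steps(strength: float, total_steps: int = 50) -> int:
    """Return how many sampler steps the input image is noised for.

    Hypothetical helper, assuming the step count is strength * total_steps
    truncated to an integer. A result of 0 leaves the input untouched;
    a result equal to total_steps discards almost all of its structure.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    if total_steps < 0:
        raise ValueError("total_steps must be non-negative")
    return int(strength * total_steps)


if __name__ == "__main__":
    for s in (0.0, 0.5, 0.8, 1.0):
        print(f"strength={s}: {noising_steps(s)} of 50 steps")
```

Under this assumption, `--strength 0.8` with 50 steps noises the sketch for 40 steps, which leaves only its coarse layout to guide the result.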

## Comments

- Our codebase for the diffusion models builds heavily on [OpenAI's ADM codebase](https://github.com/openai/guided-diffusion)

and [https://github.com/lucidrains/denoising-diffusion-pytorch](https://github.com/lucidrains/denoising-diffusion-pytorch).

Thanks for open-sourcing!

- The implementation of the transformer encoder is from [x-transformers](https://github.com/lucidrains/x-transformers) by [lucidrains](https://github.com/lucidrains?tab=repositories).

## BibTeX

```

@misc{rombach2021highresolution,

title={High-Resolution Image Synthesis with Latent Diffusion Models},

author={Robin Rombach and Andreas Blattmann and Dominik Lorenz and Patrick Esser and Björn Ommer},

year={2021},

eprint={2112.10752},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

### Further Reading

Please see the original README for more information on this software and underlying algorithm,

located in the file [README-CompViz.md](docs/other/README-CompViz.md).

- Supports a Google Colab notebook for a standalone server running on Google hardware [Arturo Mendivil](https://github.com/artmen1516)

- WebUI supports GFPGAN/ESRGAN facial reconstruction and upscaling [Kevin Gibbons](https://github.com/bakkot)

- WebUI supports incremental display of in-progress images during generation [Kevin Gibbons](https://github.com/bakkot)

- Output directory can be specified on the dream> command line.