@@ -9,6 +9,10 @@ Enhancing Ethereum through cryptographic research and collective experimentation

|

||||

- For adding new features, please open PR and first merge to staging/dev for QA, or open issue for suggestion, bug report.

|

||||

- For any misc. update such as typo, PR to main and two approval is needed.

|

||||

|

||||

### Add/Edit article

|

||||

|

||||

- For updating/adding a new article [you can follow this guide](https://github.com/privacy-scaling-explorations/pse.dev/blob/main/articles/README.md)

|

||||

|

||||

### Add/Edit project list

|

||||

|

||||

- For updating/adding project detail [you can follow this guide](https://github.com/privacy-scaling-explorations/pse.dev/blob/main/data/projects/README.md)

|

||||

|

||||

92

app/[lang]/blog/[slug]/page.tsx

Normal file

@@ -0,0 +1,92 @@

|

||||

import { blogArticleCardTagCardVariants } from "@/components/blog/blog-article-card"

|

||||

import { BlogContent } from "@/components/blog/blog-content"

|

||||

import { AppContent } from "@/components/ui/app-content"

|

||||

import { Label } from "@/components/ui/label"

|

||||

import { Markdown } from "@/components/ui/markdown"

|

||||

import { getArticles, getArticleById } from "@/lib/blog"

|

||||

import { Metadata } from "next"

|

||||

|

||||

export const generateStaticParams = async () => {

|

||||

const articles = await getArticles()

|

||||

return articles.map(({ id }) => ({

|

||||

slug: id,

|

||||

}))

|

||||

}

|

||||

|

||||

export async function generateMetadata({ params }: any): Promise<Metadata> {

|

||||

const post = await getArticleById(params.slug)

|

||||

|

||||

const imageUrl =

|

||||

(post?.image ?? "")?.length > 0

|

||||

? `/articles/${post?.id}/${post?.image}`

|

||||

: "/og-image.png"

|

||||

|

||||

const metadata: Metadata = {

|

||||

title: post?.title,

|

||||

description: post?.tldr,

|

||||

openGraph: {

|

||||

images: [{ url: imageUrl, width: 1200, height: 630 }],

|

||||

},

|

||||

}

|

||||

|

||||

// Add canonical URL if post has canonical property

|

||||

if (post && "canonical" in post) {

|

||||

metadata.alternates = {

|

||||

canonical: post.canonical as string,

|

||||

}

|

||||

}

|

||||

|

||||

return metadata

|

||||

}

|

||||

|

||||

export default function BlogArticle({ params }: any) {

|

||||

const slug = params.slug

|

||||

const post = getArticleById(slug)

|

||||

|

||||

if (!post) return null

|

||||

return (

|

||||

<div className="flex flex-col">

|

||||

<div className="flex items-start justify-center background-gradient z-0">

|

||||

<div className="w-full bg-cover-gradient border-b border-tuatara-300">

|

||||

<AppContent className="flex flex-col gap-8 py-10 max-w-[978px]">

|

||||

<Label.PageTitle label={post?.title} />

|

||||

{post?.date || post?.tldr ? (

|

||||

<div className="flex flex-col gap-2">

|

||||

{post?.date && (

|

||||

<div

|

||||

className={blogArticleCardTagCardVariants({

|

||||

variant: "secondary",

|

||||

})}

|

||||

>

|

||||

{new Date(post?.date).toLocaleDateString("en-US", {

|

||||

month: "long",

|

||||

day: "numeric",

|

||||

year: "numeric",

|

||||

})}

|

||||

</div>

|

||||

)}

|

||||

{post?.canonical && (

|

||||

<div className="text-sm italic text-gray-500 mt-1">

|

||||

This post was originally posted in{" "}

|

||||

<a

|

||||

href={post.canonical}

|

||||

target="_blank"

|

||||

rel="noopener noreferrer canonical"

|

||||

className="text-primary hover:underline"

|

||||

>

|

||||

{new URL(post.canonical).hostname.replace(/^www\./, "")}

|

||||

</a>

|

||||

</div>

|

||||

)}

|

||||

{post?.tldr && <Markdown>{post?.tldr}</Markdown>}

|

||||

</div>

|

||||

) : null}

|

||||

</AppContent>

|

||||

</div>

|

||||

</div>

|

||||

<div className="pt-10 md:pt-16 pb-32">

|

||||

<BlogContent post={post} />

|

||||

</div>

|

||||

</div>

|

||||

)

|

||||

}

|

||||

33

app/[lang]/blog/page.tsx

Normal file

@@ -0,0 +1,33 @@

|

||||

import { useTranslation } from "@/app/i18n"

|

||||

import { BlogArticles } from "@/components/blog/blog-articles"

|

||||

import { AppContent } from "@/components/ui/app-content"

|

||||

import { Label } from "@/components/ui/label"

|

||||

import { Metadata } from "next"

|

||||

|

||||

export const metadata: Metadata = {

|

||||

title: "Blog",

|

||||

description: "",

|

||||

}

|

||||

|

||||

const BlogPage = async ({ params: { lang } }: any) => {

|

||||

const { t } = await useTranslation(lang, "blog-page")

|

||||

|

||||

return (

|

||||

<div className="flex flex-col">

|

||||

<div className="w-full bg-cover-gradient border-b border-tuatara-300">

|

||||

<AppContent className="flex flex-col gap-4 py-10 w-full">

|

||||

<Label.PageTitle label={t("title")} />

|

||||

<h6 className="font-sans text-base font-normal text-tuatara-950 md:text-[18px] md:leading-[27px] md:max-w-[700px]">

|

||||

{t("subtitle")}

|

||||

</h6>

|

||||

</AppContent>

|

||||

</div>

|

||||

|

||||

<AppContent className="flex flex-col gap-10 py-10">

|

||||

<BlogArticles />

|

||||

</AppContent>

|

||||

</div>

|

||||

)

|

||||

}

|

||||

|

||||

export default BlogPage

|

||||

@@ -1,152 +1,36 @@

|

||||

"use client"

|

||||

|

||||

import Image from "next/image"

|

||||

import Link from "next/link"

|

||||

import PSELogo from "@/public/icons/archstar.webp"

|

||||

import { motion } from "framer-motion"

|

||||

|

||||

import { siteConfig } from "@/config/site"

|

||||

import { Button } from "@/components/ui/button"

|

||||

import { Label } from "@/components/ui/label"

|

||||

import { Banner } from "@/components/banner"

|

||||

import { Divider } from "@/components/divider"

|

||||

import { Icons } from "@/components/icons"

|

||||

import { PageHeader } from "@/components/page-header"

|

||||

import { ConnectWithUs } from "@/components/sections/ConnectWithUs"

|

||||

import { NewsSection } from "@/components/sections/NewsSection"

|

||||

import { WhatWeDo } from "@/components/sections/WhatWeDo"

|

||||

|

||||

import { useTranslation } from "../i18n/client"

|

||||

import { BlogRecentArticles } from "@/components/blog/blog-recent-articles"

|

||||

import { HomepageHeader } from "@/components/sections/HomepageHeader"

|

||||

import { HomepageBanner } from "@/components/sections/HomepageBanner"

|

||||

import { Suspense } from "react"

|

||||

|

||||

/*

|

||||

const Devcon7Banner = () => {

|

||||

function BlogSection({ lang }: { lang: string }) {

|

||||

return (

|

||||

<div className="bg-[#FFDE17] relative py-6">

|

||||

<AppContent>

|

||||

<div className="flex flex-col lg:flex-row items-center gap-4 justify-between">

|

||||

<Image

|

||||

src="/images/devcon-7-banner-title-mobile.svg"

|

||||

alt="Devcon 7 Banner"

|

||||

className="block object-cover md:hidden"

|

||||

width={204}

|

||||

height={54}

|

||||

/>

|

||||

|

||||

<Image

|

||||

src="/images/devcon-7-banner-title-desktop.svg"

|

||||

alt="Devcon 7 Banner"

|

||||

width={559}

|

||||

height={38}

|

||||

className="hidden object-cover md:block"

|

||||

priority

|

||||

/>

|

||||

<span className="hidden lg:flex font-sans font-bold text-[#006838] tracking-[2.5px]">

|

||||

BANGKOK, THAILAND // NOVEMBER 2024

|

||||

</span>

|

||||

<Link

|

||||

href="/en/devcon-7"

|

||||

className="bg-[#EC008C] cursor-pointer hover:scale-105 duration-200 flex items-center py-0.5 px-4 min-h-8 gap-2 rounded-[6px] text-[#FFDE17] text-sm font-medium font-sans"

|

||||

>

|

||||

<span>SEE THE SCHEDULE</span>

|

||||

<Icons.arrowRight />

|

||||

</Link>

|

||||

</div>

|

||||

</AppContent>

|

||||

</div>

|

||||

<Suspense

|

||||

fallback={

|

||||

<div className="py-10 lg:py-16">Loading recent articles...</div>

|

||||

}

|

||||

>

|

||||

{/* @ts-expect-error - This is a valid server component pattern */}

|

||||

<BlogRecentArticles lang={lang} />

|

||||

</Suspense>

|

||||

)

|

||||

}

|

||||

*/

|

||||

|

||||

export default function IndexPage({ params: { lang } }: any) {

|

||||

const { t } = useTranslation(lang, "homepage")

|

||||

const { t: common } = useTranslation(lang, "common")

|

||||

|

||||

return (

|

||||

<section className="flex flex-col">

|

||||

<Divider.Section>

|

||||

<PageHeader

|

||||

title={

|

||||

<motion.h1

|

||||

initial={{ y: 16, opacity: 0 }}

|

||||

animate={{ y: 0, opacity: 1 }}

|

||||

transition={{ duration: 0.8, cubicBezier: "easeOut" }}

|

||||

>

|

||||

<Label.PageTitle label={t("headerTitle")} />

|

||||

</motion.h1>

|

||||

}

|

||||

subtitle={t("headerSubtitle")}

|

||||

image={

|

||||

<div className="m-auto flex h-[320px] w-full max-w-[280px] items-center justify-center md:m-0 md:h-full md:w-full lg:max-w-[380px]">

|

||||

<Image

|

||||

src={PSELogo}

|

||||

alt="pselogo"

|

||||

style={{ objectFit: "cover" }}

|

||||

/>

|

||||

</div>

|

||||

}

|

||||

actions={

|

||||

<div className="flex flex-col lg:flex-row gap-10">

|

||||

<Link

|

||||

href={"/research"}

|

||||

className="flex items-center gap-2 group"

|

||||

>

|

||||

<Button className="w-full sm:w-auto">

|

||||

<div className="flex items-center gap-1">

|

||||

<span className="text-base font-medium uppercase">

|

||||

{common("research")}

|

||||

</span>

|

||||

<Icons.arrowRight

|

||||

fill="white"

|

||||

className="h-5 duration-200 ease-in-out group-hover:translate-x-2"

|

||||

/>

|

||||

</div>

|

||||

</Button>

|

||||

</Link>

|

||||

<Link

|

||||

href={"/projects"}

|

||||

className="flex items-center gap-2 group"

|

||||

>

|

||||

<Button className="w-full sm:w-auto">

|

||||

<div className="flex items-center gap-1">

|

||||

<span className="text-base font-medium uppercase">

|

||||

{common("developmentProjects")}

|

||||

</span>

|

||||

<Icons.arrowRight

|

||||

fill="white"

|

||||

className="h-5 duration-200 ease-in-out group-hover:translate-x-2"

|

||||

/>

|

||||

</div>

|

||||

</Button>

|

||||

</Link>

|

||||

</div>

|

||||

}

|

||||

/>

|

||||

<HomepageHeader lang={lang} />

|

||||

|

||||

<NewsSection lang={lang} />

|

||||

<BlogSection lang={lang} />

|

||||

|

||||

<WhatWeDo lang={lang} />

|

||||

|

||||

<Banner

|

||||

title={common("connectWithUs")}

|

||||

subtitle={common("connectWithUsDescription")}

|

||||

>

|

||||

<Link

|

||||

href={siteConfig.links.discord}

|

||||

target="_blank"

|

||||

rel="noreferrer"

|

||||

passHref

|

||||

>

|

||||

<Button>

|

||||

<div className="flex items-center gap-2">

|

||||

<Icons.discord fill="white" className="h-4" />

|

||||

<span className="text-[14px] uppercase">

|

||||

{t("joinOurDiscord")}

|

||||

</span>

|

||||

<Icons.externalUrl fill="white" className="h-5" />

|

||||

</div>

|

||||

</Button>

|

||||

</Link>

|

||||

</Banner>

|

||||

<HomepageBanner lang={lang} />

|

||||

</Divider.Section>

|

||||

</section>

|

||||

)

|

||||

|

||||

6

app/i18n/locales/en/blog-page.json

Normal file

@@ -0,0 +1,6 @@

|

||||

{

|

||||

"title": "Blog",

|

||||

"subtitle": "Read our latest articles and stay updated on the latest news in the world of cryptography.",

|

||||

"recentArticles": "Recent",

|

||||

"seeMore": "See more"

|

||||

}

|

||||

72

articles/README.md

Normal file

@@ -0,0 +1,72 @@

|

||||

# Adding New Articles

|

||||

|

||||

This document explains how to add new articles to into pse.dev blog section.

|

||||

|

||||

## Step 1: Create the Article File

|

||||

|

||||

1. Duplicate the `_article-template.md` file in the `articles` directory

|

||||

2. Rename it to match your article's title using kebab-case (e.g., `my-new-article.md`)

|

||||

|

||||

## Step 2: Fill in the Article Information

|

||||

|

||||

Edit the frontmatter section at the top of the file:

|

||||

|

||||

```

|

||||

---

|

||||

authors: ["Your Name"] # Add your name or multiple authors in an array

|

||||

title: "Your Article Title" # The title of your article

|

||||

image: "/articles/my-new-article/cover.webp" # Image used as cover

|

||||

tldr: "A brief summary of your article" #Short summary

|

||||

date: "YYYY-MM-DD" # Publication date in ISO format

|

||||

canonical: "mirror.xyz/my-new-article" # (Optional) The original source URL, this tells search engines the primary version of the content

|

||||

---

|

||||

```

|

||||

|

||||

Write your article content using Markdown formatting:

|

||||

|

||||

- Use `#` for main headings (H1), `##` for subheadings (H2), etc.

|

||||

- Use `*italic*` for italic text and `**bold**` for bold text

|

||||

- For code blocks, use triple backticks with optional language specification:

|

||||

```javascript

|

||||

// Your code here

|

||||

```

|

||||

- For images, use the Markdown image syntax: ``

|

||||

- For LaTeX math formulas:

|

||||

- Use single dollar signs for inline math: `$E=mc^2$` will render as $E=mc^2$

|

||||

- Use double dollar signs for block math:

|

||||

```

|

||||

$$

|

||||

F(x) = \int_{-\infty}^{x} f(t) dt

|

||||

$$

|

||||

```

|

||||

Will render as a centered math equation block

|

||||

|

||||

## Step 3: Add Images

|

||||

|

||||

1. Create a new folder in the `/public/articles` directory with **exactly the same name** as your markdown file (without the .md extension)

|

||||

|

||||

- Example: If your article is named `my-new-article.md`, create a folder named `my-new-article`

|

||||

|

||||

2. Add your images to this folder:

|

||||

- Any additional images you want to use in your article should be placed in this folder

|

||||

- Reference images in your article using just the file name and the extensions of it

|

||||

|

||||

## Step 4: Preview Your Article

|

||||

|

||||

Before submitting, make sure to:

|

||||

|

||||

1. Check that your markdown formatting is correct

|

||||

2. Verify all images are displaying properly

|

||||

|

||||

## Step 5: PR Review process

|

||||

|

||||

Open Pull request following the previews step and for any help

|

||||

|

||||

- Suggest to tag: @kalidiagne, @b1gk4t, @AtHeartEngineer for PR review.

|

||||

- If question, please reach out in discord channel #website-pse

|

||||

|

||||

## Important Notes

|

||||

|

||||

- The folder name in `/public/articles` must **exactly match** your markdown filename (without the .md extension)

|

||||

- Use descriptive file names for your additional images

|

||||

- Optimize your images for web before adding them to keep page load times fast

|

||||

7

articles/_article-template.md

Normal file

@@ -0,0 +1,7 @@

|

||||

---

|

||||

authors: [""]

|

||||

title: "Examle Title"

|

||||

image: "cover.png"

|

||||

tldr: ""

|

||||

date: "2024-04-07"

|

||||

---

|

||||

@@ -0,0 +1,163 @@

|

||||

---

|

||||

authors: ["PSE Team"]

|

||||

title: "A Technical Introduction to Arbitrum's Optimistic Rollup"

|

||||

image: null

|

||||

tldr: ""

|

||||

date: "2022-08-29"

|

||||

canonical: "https://mirror.xyz/privacy-scaling-explorations.eth/UlHGv9KIk_2MOHr7POfwZAXP01k221hZwQsLCF63cLQ"

|

||||

---

|

||||

|

||||

|

||||

|

||||

Originally published on Sep 30, 2021:

|

||||

|

||||

Arbitrum is Offchain Labs’ optimistic rollup implementation that aims to greatly increase the throughput of the Ethereum network. This guide is an introduction to how the Arbitrum design works and is meant for anyone looking to get a somewhat technical overview on this layer 2 solution. This article assumes that the reader has some knowledge of Ethereum and optimistic rollups. The following links may be helpful to those who would like more info on optimistic rollups:

|

||||

|

||||

1. [Optimistic Rollups](https://docs.ethhub.io/ethereum-roadmap/layer-2-scaling/optimistic_rollups/)

|

||||

2. [An Incomplete Guide to Rollups](https://vitalik.ca/general/2021/01/05/rollup.html)

|

||||

3. [A Rollup-Centric Ethereum Roadmap](https://ethereum-magicians.org/t/a-rollup-centric-ethereum-roadmap/4698)

|

||||

4. [(Almost) Everything you need to know about the Optimistic Rollup](https://research.paradigm.xyz/rollups)

|

||||

|

||||

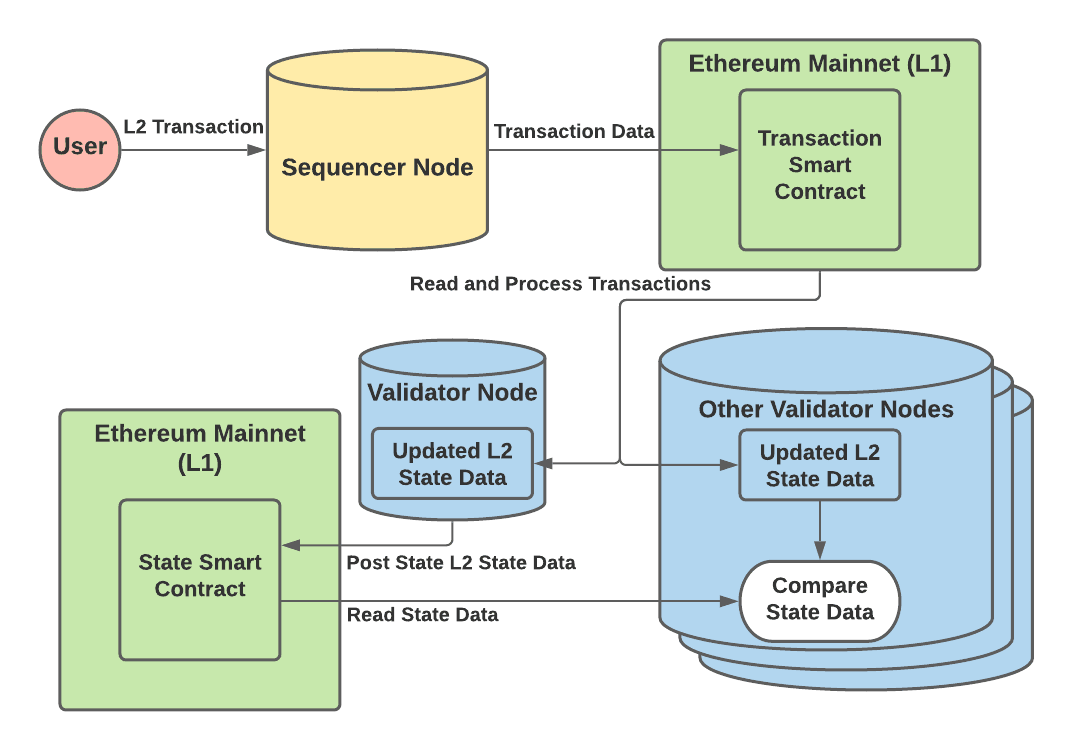

The Arbitrum network is run by two main types of nodes — batchers and validators. Together these nodes interact with Ethereum mainnet (layer 1, L1) in order to maintain a separate chain with its own state, known as layer 2 (L2). Batchers are responsible for taking user L2 transactions and submitting the transaction data onto L1. Validators on the other hand, are responsible for reading the transaction data on L1, processing the transaction and therefore updating the L2 state. Validators will then post the updated L2 state data to L1 so that anyone can verify the validity of this new state. The transaction and state data that is actually stored on L1 is described in more detail in the ‘Transaction and State Data Storage on L1’ section.

|

||||

|

||||

**Basic Workflow**

|

||||

|

||||

1. The basic workflow begins with users sending L2 transactions to a batcher node, usually the sequencer.

|

||||

2. Once the sequencer receives enough transactions, it will post them into an L1 smart contract as a batch.

|

||||

3. A validator node will read these transactions from the L1 smart contract and process them on their local copy of the L2 state.

|

||||

4. Once processed, a new L2 state is generated locally and the validator will post this new state root into an L1 smart contract.

|

||||

5. Then, all other validators will process the same transactions on their local copies of the L2 state.

|

||||

6. They will compare their resultant L2 state root with the original one posted to the L1 smart contract.

|

||||

7. If one of the validators gets a different state root than the one posted to L1, they will begin a challenge on L1 (explained in more detail in the ‘Challenges’ section).

|

||||

8. The challenge will require the challenger and the validator that posted the original state root to take turns proving what the correct state root should be.

|

||||

9. Whichever user loses the challenge, gets their initial deposit (stake) slashed. If the original L2 state root posted was invalid, it will be destroyed by future validators and will not be included in the L2 chain.

|

||||

|

||||

The following diagram illustrates this basic workflow for steps 1–6.

|

||||

|

||||

|

||||

|

||||

## Batcher Nodes and Submitting L2 Transaction Data

|

||||

|

||||

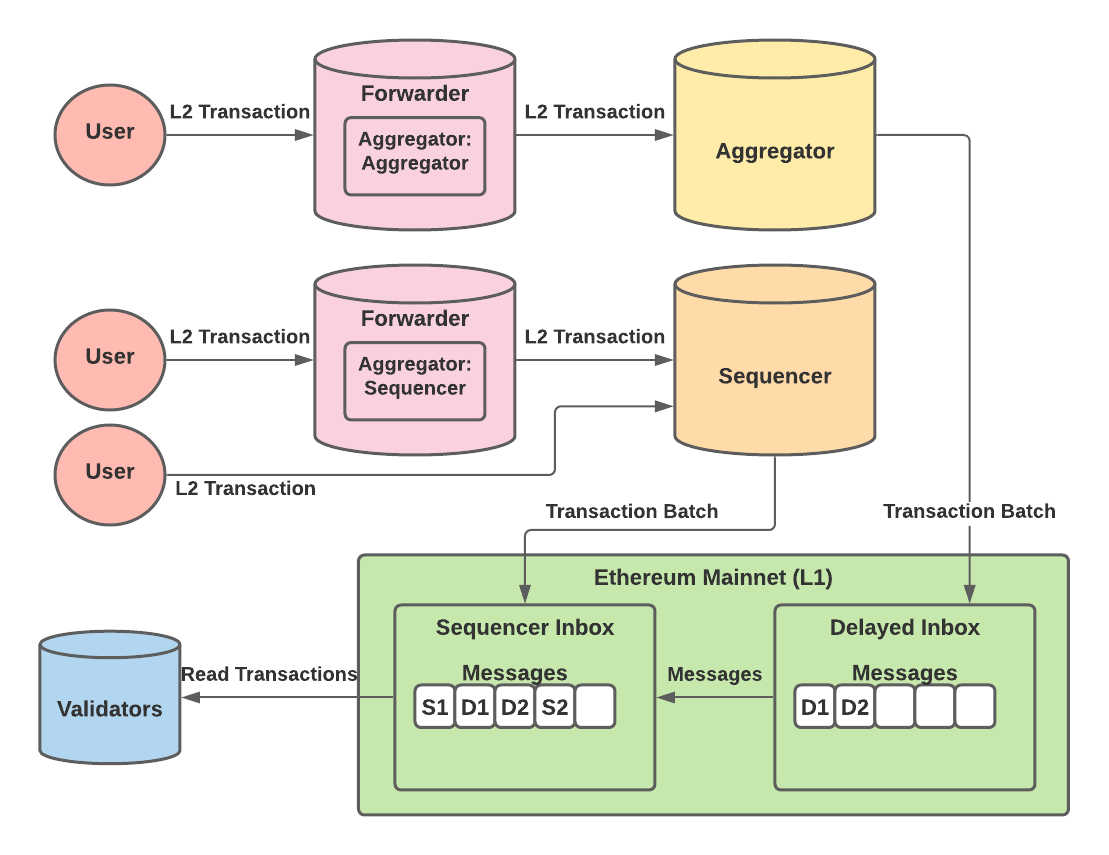

There are two different L1 smart contracts that batcher nodes will use to post the transaction data. One is known as the ‘delayed inbox’ while the other is known as the ‘sequencer inbox’. Anyone can send transactions to the delayed inbox, whereas only the sequencer can send transactions to the sequencer inbox. The sequencer inbox pulls in transaction data from the delayed inbox and interweaves it with the other L2 transactions submitted by the sequencer. Therefore, the sequencer inbox is the primary contract where every validator pulls in the latest L2 transaction data.

|

||||

|

||||

There are 3 types of batcher nodes — forwarders, aggregators, and sequencers. Users can send their L2 transactions to any of these 3 nodes. Forwarder nodes forward any L2 transactions to a designated address of another node. The designated node can be either a sequencer or an aggregator and is referred to as the aggregator address.

|

||||

|

||||

Aggregator nodes will take a group of incoming L2 transactions and batch them into a single message to the delayed inbox. The sequencer node will also take a group of incoming L2 transactions and batch them into a single message, but it will send the batch message to the sequencer inbox instead. If the sequencer node stops adding transactions to the sequencer inbox, anyone can force the sequencer inbox to include transactions from the delayed inbox via a smart contract function call. This allows the Arbitrum network to always be available and resistant to a malicious sequencer. Currently Arbitrum is running their own single sequencer for Arbitrum mainnet, but they have plans to decentralize the sequencer role in the future.

|

||||

|

||||

The different L2 transaction paths are shown below.

|

||||

|

||||

|

||||

|

||||

Essentially, batcher nodes are responsible for submitting any L2 transaction data onto L1. Once these transactions are processed on L2 and a new state is generated, a validator must submit that state data onto L1 as well. That process is covered in the next section.

|

||||

|

||||

## Validator Nodes and Submitting L2 State Data

|

||||

|

||||

The set of smart contracts that enable validators to submit and store L2 state data is known as the rollup. The rollup is essentially a chain of blocks, so in other words, the rollup is the L2 chain. Note that the Arbitrum codebase refers to these blocks as ‘nodes’. However, to prevent confusion with the terms ‘validator nodes’ and ‘batcher nodes’, I will continue to refer to these rollup nodes as blocks throughout the article.

|

||||

|

||||

Each block contains a hash of the L2 state data. So, validators will read and process transactions from the sequencer inbox, and then submit the updated L2 state data hash to the rollup smart contract. The rollup, which stores a chain of blocks, will create a new block with this data and add it as the latest block to the chain. When the validator submits the L2 state data to the rollup smart contract, they also specify which block in the current chain is the parent block to this new block.

|

||||

|

||||

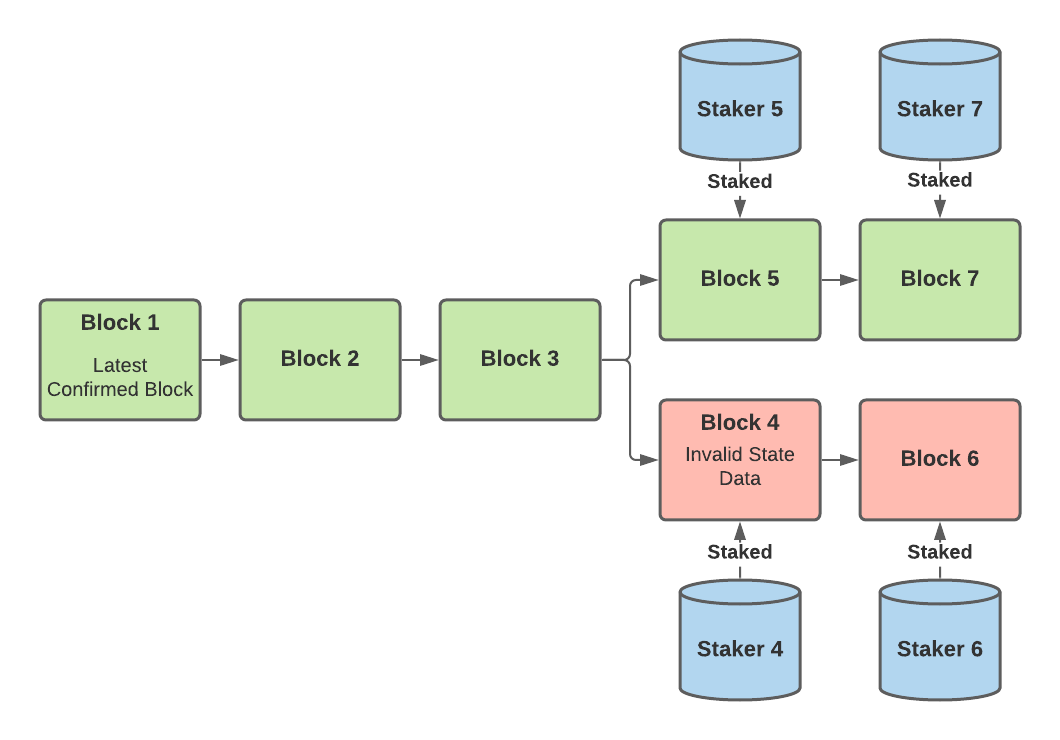

In order to penalize validators that submit invalid state data, a staking system has been implemented. In order to submit new L2 state data to the rollup, a validator must be a staker — they must have deposited a certain amount of Eth (or other tokens depending on the rollup). That way, if a malicious validator submits invalid state data, another validator can challenge that block and the malicious validator will lose their stake.

|

||||

|

||||

Once a validator becomes a staker, they can then stake on different blocks. Here are some of the important rules to staking:

|

||||

|

||||

- Stakers must stake on any block they create.

|

||||

- Multiple stakers can stake on the same block.

|

||||

- Stakers cannot stake on two separate block paths — when a staker stakes on a new block, it must be a descendant of the block they were previously staked on (unless this is the stakers first stake).

|

||||

- Stakers do not have to add an additional deposit anytime they stake on a new block.

|

||||

- If a block loses a challenge, all stakers staked on that block or any descendant of that block will lose their stake.

|

||||

|

||||

A block will be confirmed — permanently accepted in L1 and never reverted — if all of the following are true:

|

||||

|

||||

- The 7 day period has passed since the block’s creation

|

||||

- There are no existing challenging blocks

|

||||

- At least one staker is staked on it

|

||||

|

||||

A block can be rejected (destroyed) if all of the following are true:

|

||||

|

||||

- It’s parent block is older than the latest confirmed block (the latest confirmed block is on another branch)

|

||||

- There is a staker staked on a sibling block

|

||||

- There are no stakers staked on this block

|

||||

- The 7 day period has passed since the block’s creation

|

||||

|

||||

Take the following diagram as an example:

|

||||

|

||||

|

||||

|

||||

In this example, since blocks 4 and 5 share the same parent, staker 5 decides to challenge staker 4 and wins since block 4 contains invalid state data. Therefore, both staker 4 and staker 6 will lose their stakes. Stakers will continue adding new blocks to the chain that contains blocks 5 and 7. Blocks 4 and 6 will then be destroyed after the 7 day period has passed. Even though stakers are necessary for the system to work, not all validator nodes are stakers. The different types of validators are explained in the next section.

|

||||

|

||||

**Validator Types**

|

||||

|

||||

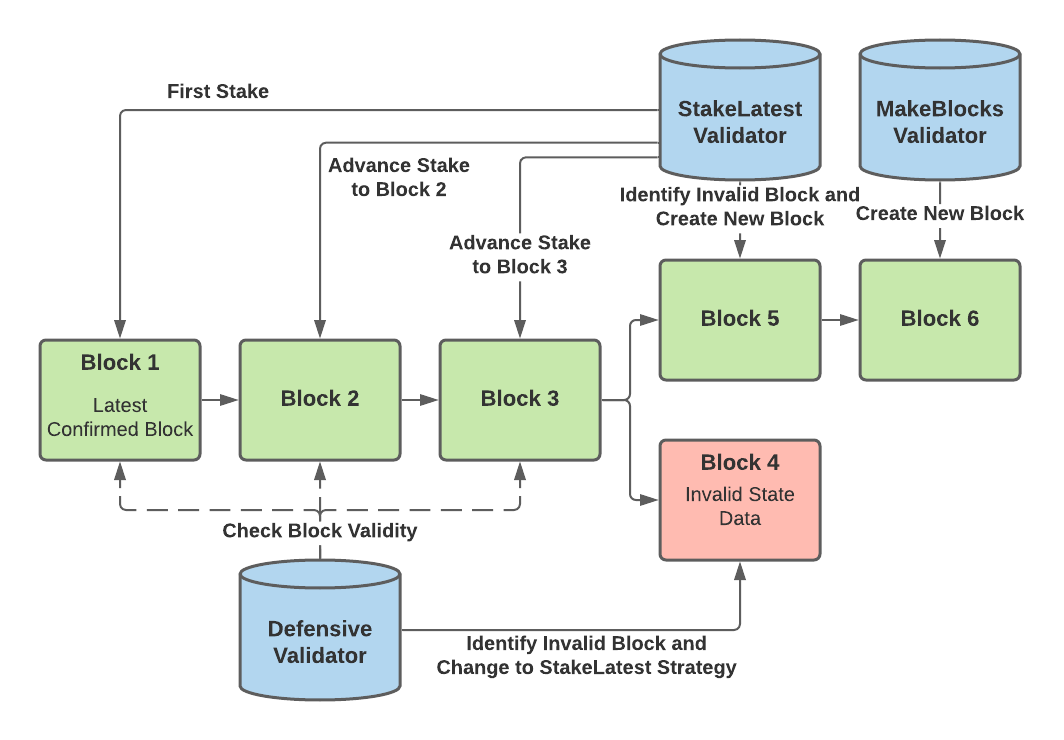

Each validator may use a different strategy to keep the network secure. Currently, there are three types of supported validating strategies — Defensive, StakeLatest, and MakeBlocks (known as MakeNodes in the codebase). Defensive validators will monitor the rollup chain of blocks and look out for any forks/conflicting blocks. If a fork is detected, that validator will switch to the StakeLatest strategy. Therefore, if there are no conflicting blocks, defensive validators will not have any stakes.

|

||||

|

||||

StakeLatest validators will stake on the existing blocks in the rollup if the blocks have valid L2 state data. They will advance their stake to the furthest correct block in the chain as possible. StakeLatest validators normally do not create new blocks unless they have identified a block with incorrect state data. In that case, the validators will create a new block with correct data and mandatorily stake on it.

|

||||

|

||||

MakeBlocks validators will stake on the furthest correct block in the rollup chain as well. However, even when there are no invalid blocks, MakeBlocks validators will create new blocks once they have advanced their stake to the end of the chain. These are the primary validators responsible for progressing the chain forward with new state data.

|

||||

|

||||

The following diagram illustrates the actions of the different validator strategies:

|

||||

|

||||

|

||||

|

||||

## Transaction and State Data Storage on L1

|

||||

|

||||

**Transaction Data Storage**

|

||||

|

||||

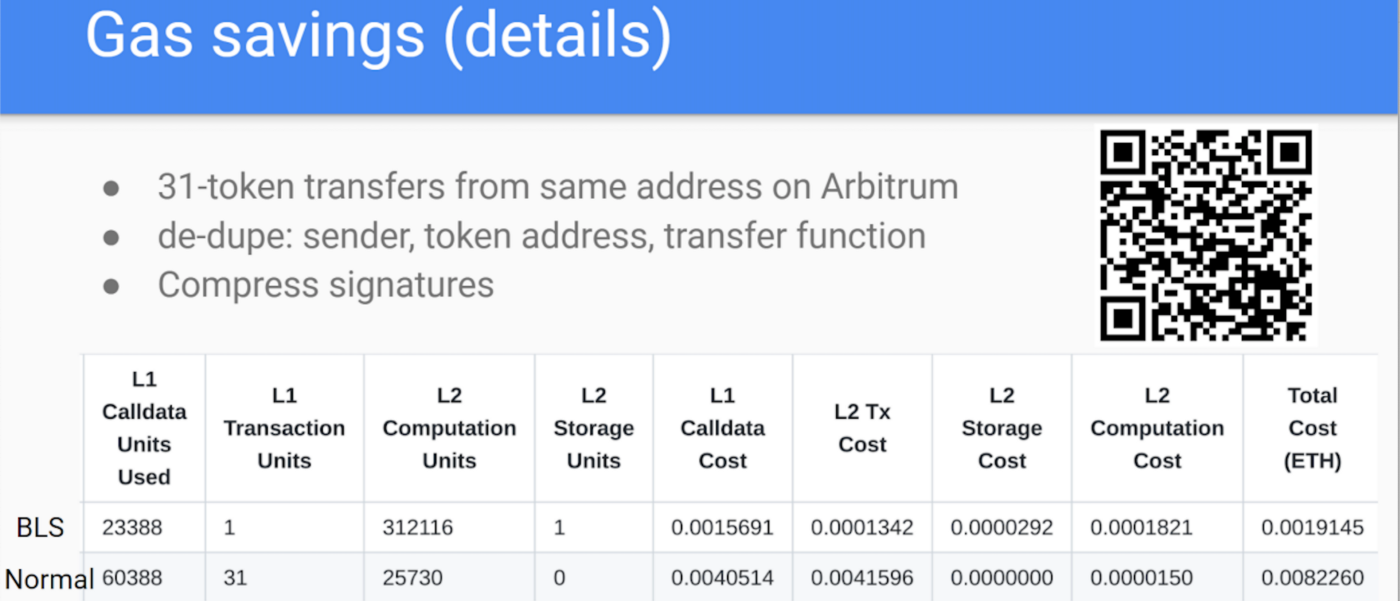

As explained above, aggregator and sequencer nodes receive L2 transactions and submit them to the L1 delayed and sequencer inboxes. Posting this data to L1 is where most of the expenses of L2 come from. Therefore, it is important to understand how this data is stored on L1 and the methods used to reduce this storage requirement as much as possible.

|

||||

|

||||

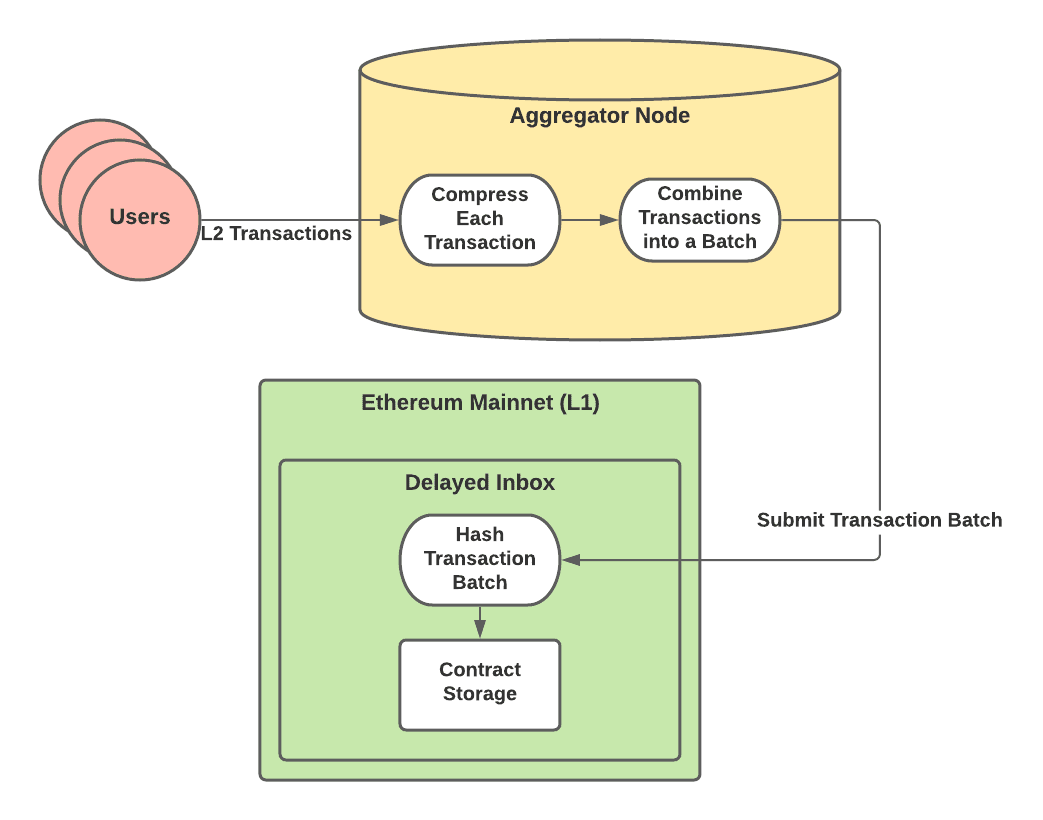

Aggregator nodes receive user L2 transactions, compress the calldata into a byte array, and then combine multiple of these compressed transactions into an array of byte arrays known as a transaction batch. Finally, they will submit the transaction batch to the delayed inbox. The inbox will then hash the transaction batch and store the hash in contract storage. Sequencer nodes follow a very similar pattern, but the sequencer inbox must also include data about the number of messages to include from the delayed inbox. This data will be part of the final hash that the sequencer inbox stores in contract storage.

|

||||

|

||||

|

||||

|

||||

**State Data Storage**

|

||||

|

||||

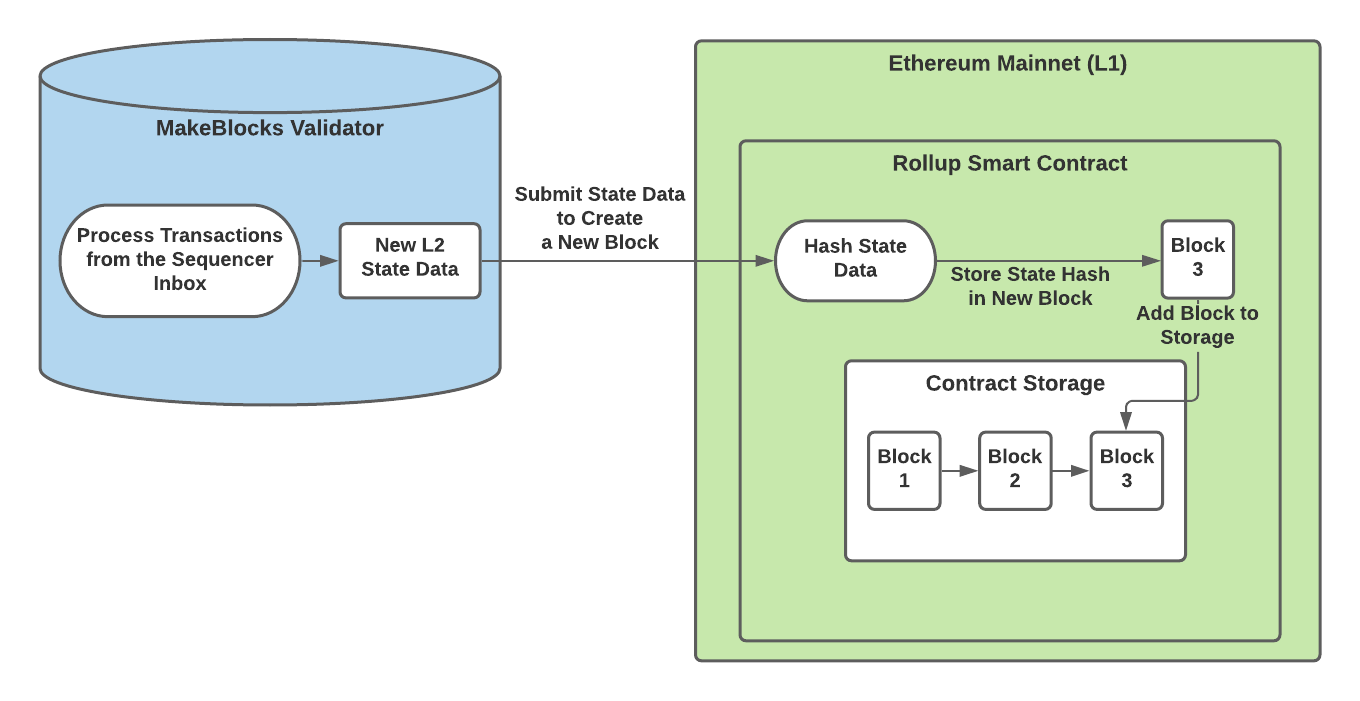

After MakeBlocks validators read and process L2 transactions from the sequencer inbox, they will submit their updated L2 state data to the rollup smart contract. The rollup smart contract then hashes the state data and stores the hash in contract storage.

|

||||

|

||||

|

||||

|

||||

**Retrieving Transaction and State Data**

|

||||

|

||||

Even though only the hash of the transaction and state data is stored in contract storage, other nodes can see the original data by retrieving the calldata of the transaction that submitted the data to L1 from an Ethereum full node. The calldata of transactions to the delayed or sequencer inboxes contain the data for every L2 transaction that the aggregator or sequencer batched. The calldata of transactions to the rollup contract contain all of the relevant state data — enough for other validators to determine if it is valid or invalid — for L2 at that time. To make looking up the transaction easier, the smart contracts emit an event to the Ethereum logs that allows anyone to easily search for either the L2 transaction data or the L2 state data.

|

||||

|

||||

Since the smart contracts only have to store hashes in their storage rather than the full transaction or state data, a lot of gas is saved. The primary cost of rollups comes from storing this data on L1. Therefore, this storage mechanism is able to reduce gas expenses even further.

|

||||

|

||||

## The AVM and ArbOS

|

||||

|

||||

**The Arbitrum Virtual Machine**

|

||||

|

||||

Since Arbitrum L2 transactions are not executed on L1, they don’t have to follow the same exact rules as the EVM for computation. Therefore, the Arbitrum team built their own virtual machine known as the Arbitrum Virtual Machine (AVM). The AVM is very similar to the EVM because a primary goal was to support compatibility with EVM compiled smart contracts. However, there are a few important differences.

|

||||

|

||||

A major difference between the AVM and EVM is that the AVM must support Arbitrum’s challenges. Challenges, covered in more detail in the next section, require that a step of transaction execution must be provable. Therefore, Arbitrum has introduced the use of CodePoints to their virtual machine. Normally, when code is executed, the instructions are stored in a linear array with a program counter (PC) pointing to the current instruction. Using the program counter to prove which instruction is being executed would take logarithmic time. In order to reduce this time complexity to constant time, the Arbitrum team implemented CodePoints — a pair of the current instruction and the hash of the next codepoint. Every instruction in the array has a codepoint and this allows the AVM to instantly prove which instruction was being executed at that program counter. CodePoints do add some complexity to the AVM, but the Arbitrum system only uses codepoints when it needs to make a proof about transaction execution. Normally, it will use the normal program counter architecture instead.

|

||||

|

||||

There are quite a few other important differences that are well documented on Arbitrum’s site — [Why AVM Differs from EVM](https://developer.offchainlabs.com/docs/inside_arbitrum#why-avm-differs-from-evm)

|

||||

|

||||

**ArbOS**

|

||||

|

||||

ArbOS is Arbitrum’s own operating system. It is responsible for managing and tracking the resources of smart contracts used during execution. So, ArbOS keeps an account table that keeps track of the state for each account. Additionally, it operates the funding model for validators participating in the rollup protocol.

|

||||

|

||||

The AVM has built in instructions to aid the execution of ArbOS and its ability to track resources. This support for ArbOS in the AVM allows ArbOS to implement certain rules of execution at layer 2 instead of in the rollup smart contracts on layer 1. Any computation moved from layer 1 to layer 2 saves gas and lowers expenses.

|

||||

|

||||

## Challenges

|

||||

|

||||

Optimistic rollup designs require there be a way to tell whether the L2 state data submitted to L1 is valid or invalid. Currently, there are two widely known methods — replayability and interactive proving. Arbitrum has implemented interactive proving as their choice for proving an invalid state.

|

||||

|

||||

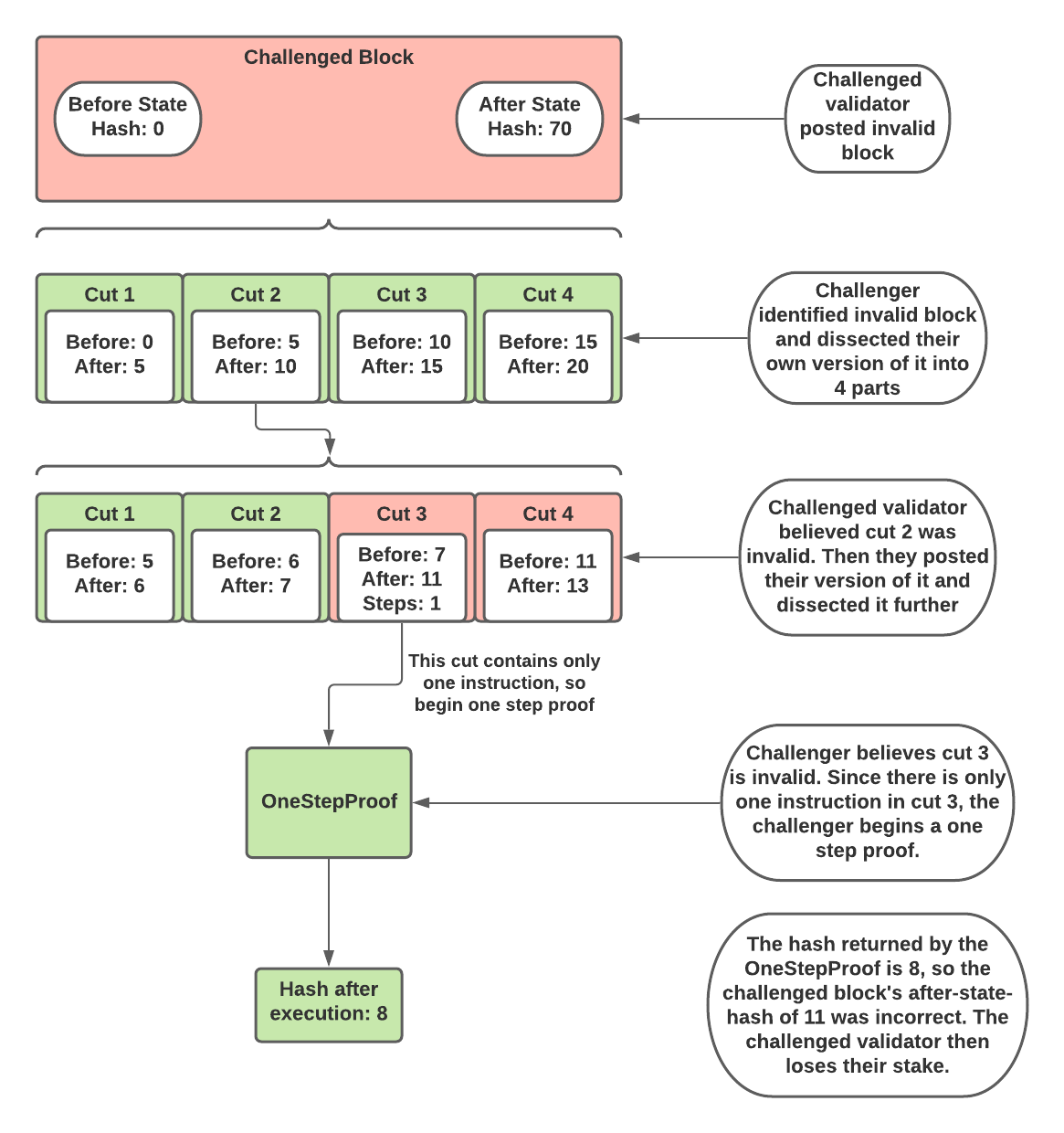

When a validator submits updated state data to the rollup, any staker can challenge that block and submit the correct version of it. When a challenge begins, the two stakers involved (challenger and challenged staker) must take turns dividing the execution into an equal number of parts and claim which of those parts is invalid. Then they must submit their version of that part. The other staker will then dissect that part into an equal number of parts as well, and select which part they believe is invalid. They will then submit their version of that part.

|

||||

|

||||

This process continues until the contested part of execution is only one instruction long. At this point, the smart contracts on L1 will perform a one step proof. Essentially, the one step proof executes that one instruction and returns an updated state. The block whose state matches that of the one step proof will win the challenge and the other block will lose their stake. The following diagram illustrates an example of a challenge.

|

||||

|

||||

|

||||

|

||||

## Conclusion

|

||||

|

||||

**How does this raise Ethereum’s transaction per second and lower transaction costs?**

|

||||

|

||||

Arbitrum, along with all optimistic rollups, greatly improves the scalability of the Ethereum network and therefore lowers the gas costs (holding throughput constant). In L1 every Ethereum full node in the network will process the transaction, and since the network contains so many nodes, computation becomes very expensive. With Arbitrum, transactions will only be processed by a small set of nodes — the sequencer, aggregators, and validators. So, the computation of each transaction has been moved off of L1 while only the transaction calldata remains on L1. This clears a lot of space on L1 and allows many more transactions to be processed. The greater throughput reduces the gas costs since the competition for getting a transaction added to a block is lower.

|

||||

|

||||

**Anything special about Abritrum’s implementation of optimistic rollups?**

|

||||

|

||||

Arbitrum’s design gives many advantages that other rollup implementations don’t have because of its use of interactive proving. Interactive proving provides a great number of benefits, such as no limits on contract size, that are outlined in good detail on Arbitrum’s site — [Why Interactive Proving is Better](https://developer.offchainlabs.com/docs/inside_arbitrum#why-interactive-proving-is-better). With Arbitrum’s successful mainnet launch (though still early), it’s clear that the project has achieved an incredible feat.

|

||||

|

||||

If you’re interested in reading more on Arbitrum’s optimistic rollup, their documentation covers a lot more ground and is easy to read.

|

||||

|

||||

- [Arbitrum Doc — Inside Arbitrum](https://developer.offchainlabs.com/docs/inside_arbitrum)

|

||||

- [Arbitrum Doc — Rollup Protocol](https://developer.offchainlabs.com/docs/rollup_protocol)

|

||||

- [Arbitrum Doc — AVM Design Rationale](https://developer.offchainlabs.com/docs/avm_design)

|

||||

- [Arbitrum Doc — Overview of Differences with Ethereum](https://developer.offchainlabs.com/docs/differences_overview)

|

||||

@@ -0,0 +1,205 @@

|

||||

---

|

||||

authors: ["PSE Team"]

|

||||

title: "A Technical Introduction to MACI 1.0 - Privacy & Scaling Explorations"

|

||||

image: null

|

||||

tldr: ""

|

||||

date: "2022-08-29"

|

||||

canonical: "https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4"

|

||||

---

|

||||

|

||||

|

||||

|

||||

Originally published on Jan 18, 2022:

|

||||

|

||||

1. [Introduction](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#5e4c)

|

||||

|

||||

a. [Background](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#2bcd)

|

||||

|

||||

2. [System Overview](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#6ca2)

|

||||

|

||||

a. [Roles](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#f130)

|

||||

|

||||

b. [Vote Overriding and Public Key Switching](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#0b6f)

|

||||

|

||||

c. [zk-SNARKs](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#aa08)

|

||||

|

||||

3. [Workflow](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#357c)

|

||||

|

||||

a. [Sign Up](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#a35f)

|

||||

|

||||

b. [Publish Message](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#37df)

|

||||

|

||||

c. [Process Messages](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#493a)

|

||||

|

||||

d. [Tally Votes](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#56b5)

|

||||

|

||||

4. [Conclusion](https://mirror.xyz/privacy-scaling-explorations.eth/IlWP_ITvmeZ2-elTJl44SCEGlBiemKt3uxXv2A6Dqy4#53c9)

|

||||

|

||||

MACI, which stands for Minimal Anti-Collusion Infrastructure, is an application that allows users to have an on-chain voting process with greatly increased collusion resistance. A common problem among today’s on-chain voting processes is how easy it is to bribe voters into voting for a particular option. Oftentimes this bribery takes the form of “join our pool (vote our way) and we will give you a cut of the rewards (the bribe)”. Since all transactions on the blockchain are public, without MACI, voters can easily prove to the briber which option they voted for and therefore receive the bribe rewards.

|

||||

|

||||

MACI counters this by using zk-SNARKs to essentially hide how each person voted while still revealing the final vote result. User’s cannot prove which option they voted for, and therefore bribers cannot reliably trust that a user voted for their preferred option. For example, a voter can tell a briber that they are voting for option A, but in reality they voted for option B. There is no reliable way to prove which option the voter actually voted for, so the briber does not have the incentive to pay voters to vote their way.

|

||||

|

||||

## a. Background

|

||||

|

||||

For a general overview, the history and the importance of MACI, see [Release Announcement: MACI 1.0](https://medium.com/privacy-scaling-explorations/release-announcement-maci-1-0-c032bddd2157) by Wei Jie, one of the creators. He also created a very helpful [youtube video](https://www.youtube.com/watch?v=sKuNj_IQVYI) on the overview of MACI. To see the origin of the idea of MACI, see Vitalik’s research post on [Minimal Anti-Collusion Infrastructure](https://ethresear.ch/t/minimal-anti-collusion-infrastructure/5413?u=weijiekoh). Lastly, it is recommended to understand the basic idea behind zk-SNARKs, as these are a core component of MACI. The following articles are great resources:

|

||||

|

||||

- [Introduction to zk-SNARKs](https://consensys.net/blog/developers/introduction-to-zk-snarks/) — Consensys

|

||||

- [What are zk-SNARKs](https://z.cash/technology/zksnarks/) — Zcash

|

||||

- [An approximate introduction to how zk-SNARKs are possible](https://vitalik.ca/general/2021/01/26/snarks.html) — Vitalik

|

||||

- [zkSNARKs in a nutshell](https://blog.ethereum.org/2016/12/05/zksnarks-in-a-nutshell/) — Ethereum.org

|

||||

|

||||

This article will go over the general workflow of MACI and how it is capable of providing the following tenets (taken word for word from Wei Jie’s article):

|

||||

|

||||

1. **Collusion Resistance**: No one except a trusted coordinator should be certain of the validity of a vote, reducing the effectiveness of bribery

|

||||

2. **Receipt-freeness**: No voter may prove (besides to the coordinator) which way they voted

|

||||

3. **Privacy**: No one except a trusted coordinator should be able to decrypt a vote

|

||||

4. **Uncensorability**: No one (not even the trusted coordinator) should be able to censor a vote

|

||||

5. **Unforgeability**: Only the owner of a user’s private key may cast a vote tied to its corresponding public key

|

||||

6. **Non-repudiation**: No one may modify or delete a vote after it is cast, although a user may cast another vote to nullify it

|

||||

7. **Correct execution**: No one (not even the trusted coordinator) should be able to produce a false tally of votes

|

||||

|

||||

## 2\. System Overview

|

||||

|

||||

## a. Roles

|

||||

|

||||

In the MACI workflow, there are two different roles: users (voters) and a single trusted coordinator. The users vote on the blockchain via MACI smart contracts, and the coordinator tallies up the votes and releases the final results.

|

||||

|

||||

The coordinators must use zk-SNARKs to prove that their final tally result is valid without releasing the vote of every individual. Therefore, even if a coordinator is corrupt, they are unable to change a user’s vote or add extra votes themselves. A corrupt coordinator can stop a vote by never publishing the results, but they can’t publish false results.

|

||||

|

||||

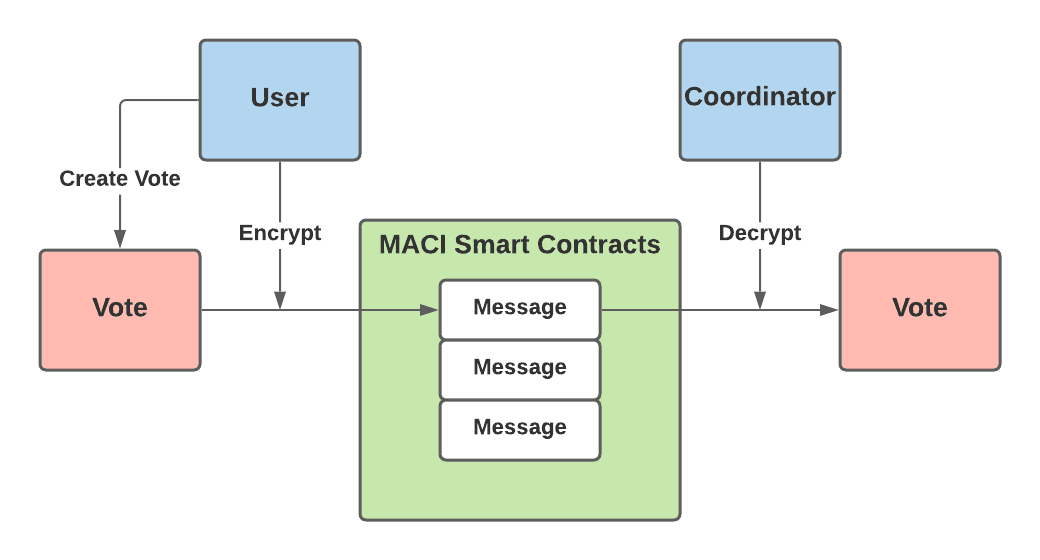

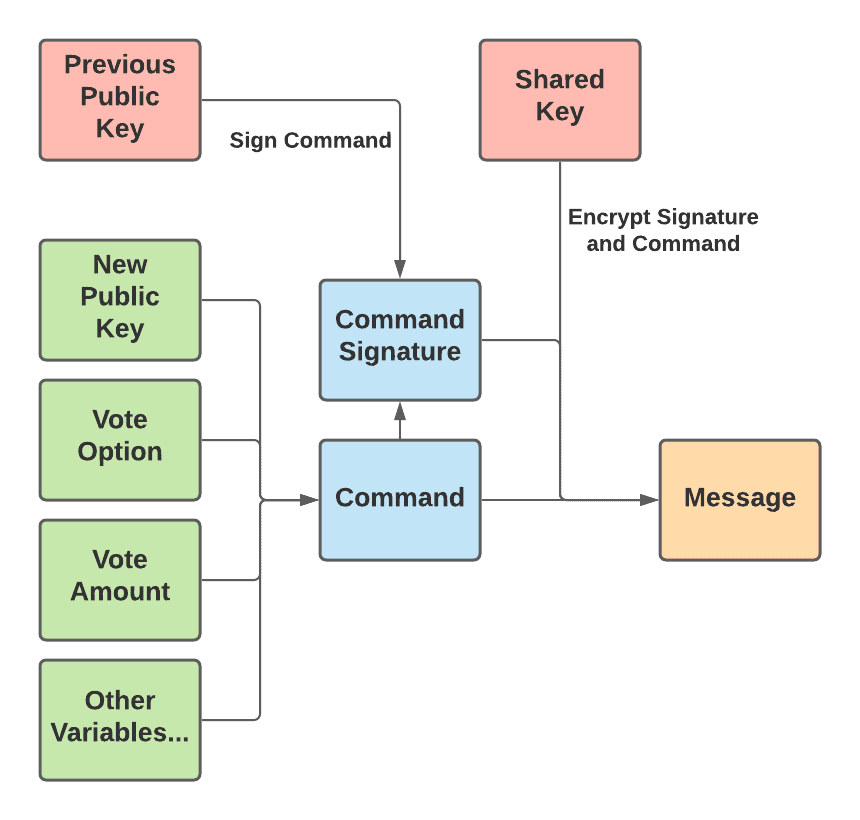

Before sending their vote on the blockchain, users encrypt their vote using a shared key that only the user and coordinator can know. This key scheme is designed so that every individual user shares a distinct key with the coordinator. This prevents any bribers from simply reading the transaction data to see which option a user voted for. The encrypted vote is now considered a “message” and the user sends this message to a MACI smart contract to be stored on-chain.

|

||||

|

||||

A very simplified illustration of this encryption can be seen below:

|

||||

|

||||

|

||||

|

||||

## b. Vote Overriding and Public Key Switching

|

||||

|

||||

Before a user can cast a vote, they must sign up by sending the public key they wish to use to vote to a MACI smart contract. This public key acts as their identity when voting. They can vote from any address, but their message must contain a signature from that public key. When casting an actual vote after signing up, a user will bundle a few variables — including a public key, their vote option, their vote amount, and a few others — into what is called a “command”. Then, the user signs the command with the public key they originally used to sign up. After that, the user encrypts the signature and command together so that it is now considered a message. This more complex description of how a message is constructed is illustrated below:

|

||||

|

||||

|

||||

|

||||

Users are able to override their previous vote as long as they sign their command with the previous public key. If the command is properly signed by the user’s previous public key, then the message is considered valid and the coordinator will count this as the correct vote. So, when a user provides a public key in their vote that is different than their previous public key, they may now submit a new vote signed by this new public key to override their previous vote. If the signature is not from the previous public key, the message will be marked as invalid and not counted toward the tally. Therefore, the public key can be thought of as the user’s voting username, and the signature is the voting password. If they provide the correct signature, they can submit a vote or change their public key — or both.

|

||||

|

||||

This feature, which I refer to as public key switching, is designed to counter the bribery attack where a user simply shows the briber their message, and then decrypts it for the briber to see which way the user voted. Public key switching allows users to change their public key and create invalid messages in favor of the bribers. The bribers have no way of telling if the user switched their public keys before sending in the vote shown to the bribers.

|

||||

|

||||

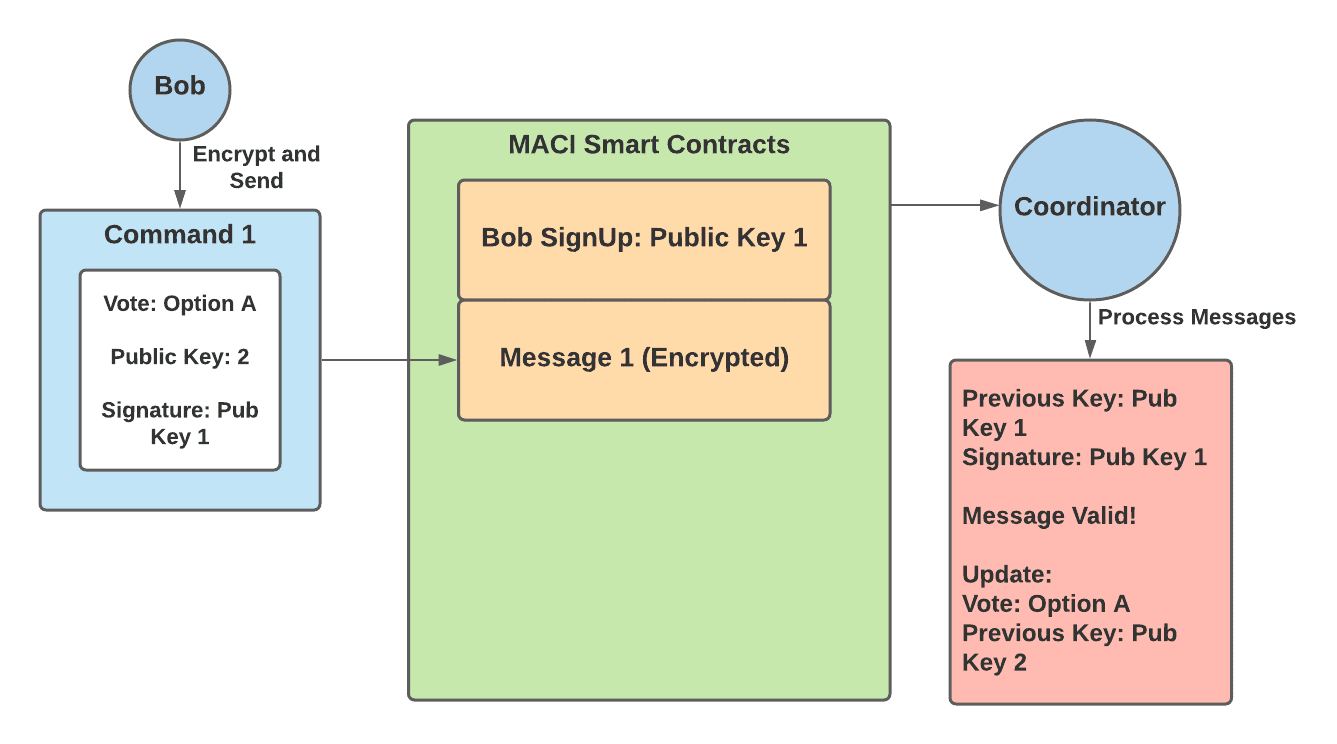

This can be quite confusing so here is an example:

|

||||

|

||||

1. Bob signs up with public key 1

|

||||

2. Bob then creates a command that contains — a vote for option A and public key 2

|

||||

3. Bob signs this command with public key 1, the key he used to sign up

|

||||

4. Bob encrypts this command into a message and submits it to the MACI smart contracts

|

||||

5. The coordinator decrypts this message, and checks to ensure that the command is signed by Bob’s previous key — public key 1. This message is valid.

|

||||

6. The coordinator then records Bob’s vote for option A and updates his public key to public key 2

|

||||

|

||||

|

||||

|

||||

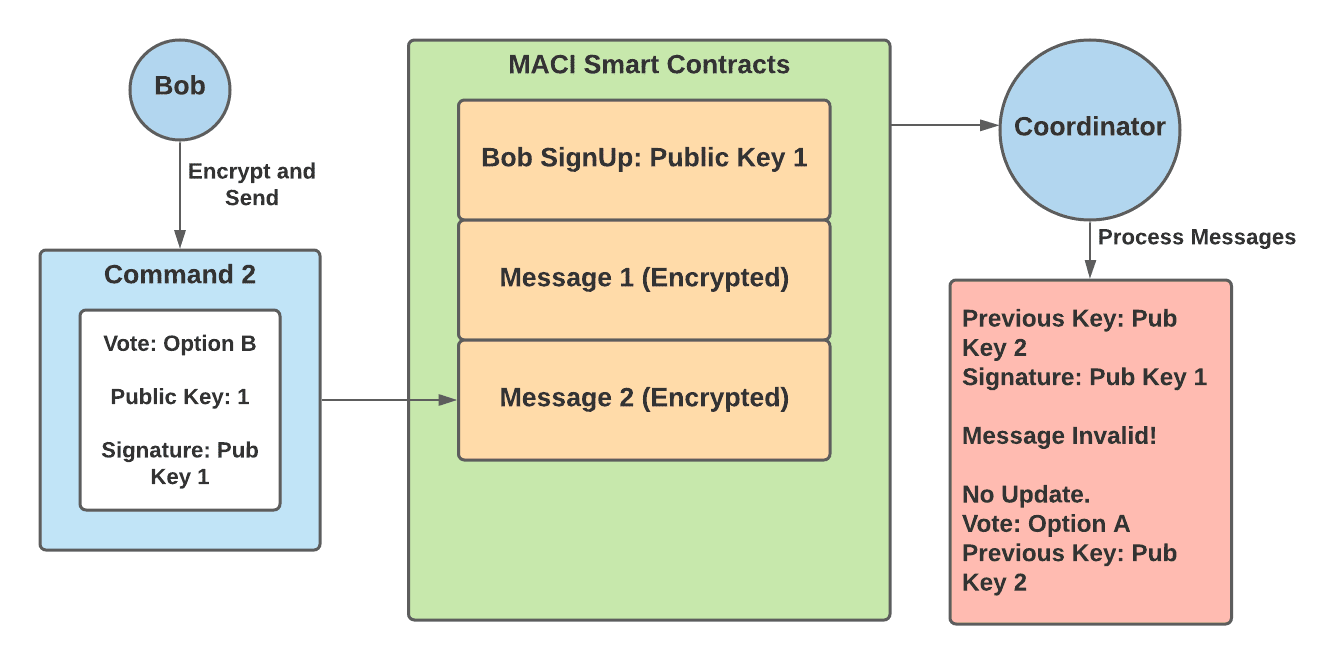

At this point, Bob has successfully voted for option A, and in order to override this vote must send in a new vote with a signature from public key 2. At this point, a briber now tries to get Bob to vote for option B:

|

||||

|

||||

1. Bob creates a command that contains — a vote for option B and public key 1

|

||||

2. Bob signs this command with public key 1, encrypts the message and submits it to the MACI smart contracts

|

||||

3. Bob shows the briber the decrypted message as proof of his vote for option B

|

||||

4. The coordinator decrypts Bob’s message and sees that the signature does not match up with public key 2 — Bob’s previous key added in his previous message. Therefore this message is invalid and this vote is not counted in the final tally.

|

||||

5. The briber has no way of knowing whether the vote was valid or invalid, and so is not incentivized to offer bribes to other users.

|

||||

|

||||

|

||||

|

||||

In order to get a good idea of how MACI works, it’s important to know how the zk-SNARKs are able to prove that the coordinator decrypted each message and tallied the votes properly. The next section gives a quick and much oversimplified overview of zk-SNARKs, although the readings listed in the introduction are much more helpful.

|

||||

|

||||

## c. zk-SNARKs

|

||||

|

||||

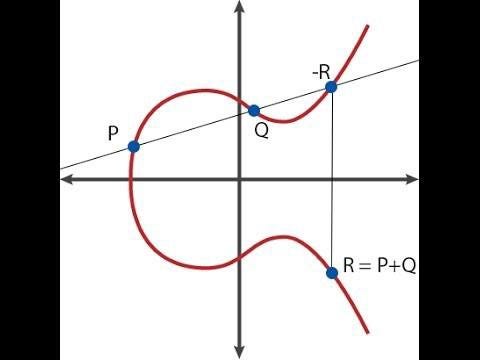

Essentially, zk-SNARKs allow users to prove they know an answer to a specific mathematical equation, without revealing what that answer is. Take the following equation for example,

|

||||

|

||||

> X + Y = 15

|

||||

|

||||

I can prove that I know 2 values, X and Y that satisfy the equation without revealing what those two values are. When I create a zk-SNARK for my answer, anyone can use the SNARK (a group of numbers) and validate it against the equation above to prove that I do know a solution to that equation. The user is unable to use the SNARK to find out my answers for X and Y.

|

||||

|

||||

For MACI, the equation is much more complicated but can be summarized as the following equations:

|

||||

|

||||

> encrypt(command1) = message1

|

||||

>

|

||||

> encrypt(command2) = message2

|

||||

>

|

||||

> encrypt(command3) = message3

|

||||

>

|

||||

> …

|

||||

>

|

||||

> Command1 from user1 + command2 from user2 + command3 from user3 + … = total tally result

|

||||

|

||||

Here, everyone is able to see the messages on the blockchain and the total tally result. Only the coordinator knows what the individual commands/votes are by decrypting the messages. So, the coordinator uses a zk-SNARK to prove they know all of the votes that:

|

||||

|

||||

1. Encrypt to the messages present on the blockchain

|

||||

2. Sum to the tally result

|

||||

|

||||

Users can then use the SNARK to prove that the tally result is correct, but cannot use it to prove any individual’s vote choices.

|

||||

|

||||

Now that the core components of MACI have been covered, it is helpful to dive deeper into the MACI workflow and specific smart contracts.

|

||||

|

||||

## 3\. Workflow

|

||||

|

||||

The general workflow process can be broken down into 4 different phases:

|

||||

|

||||

1. Sign Up

|

||||

2. Publish Message

|

||||

3. Process Messages

|

||||

4. Tally Results

|

||||

|

||||

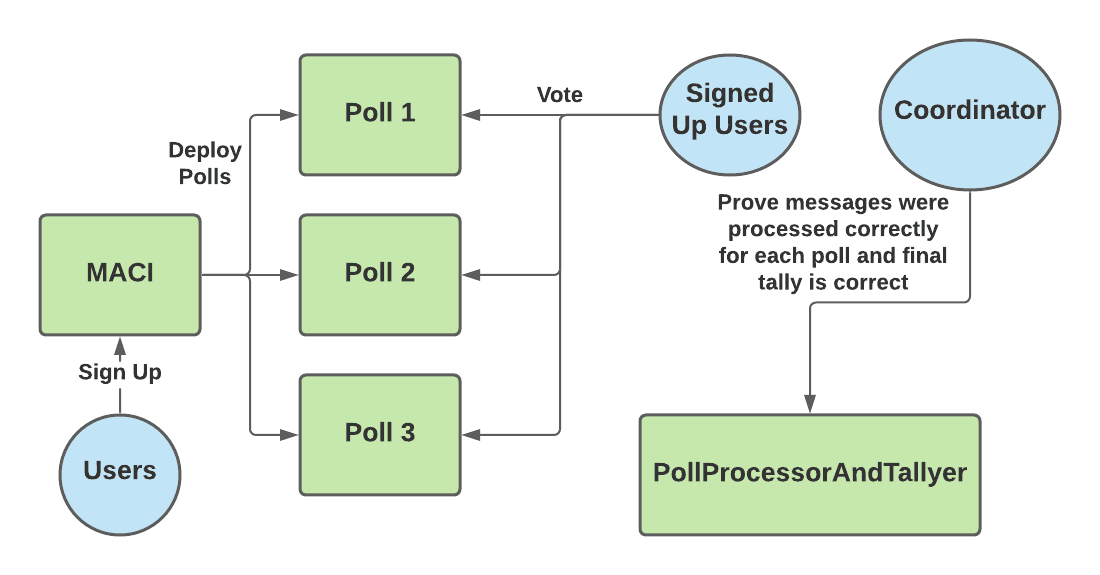

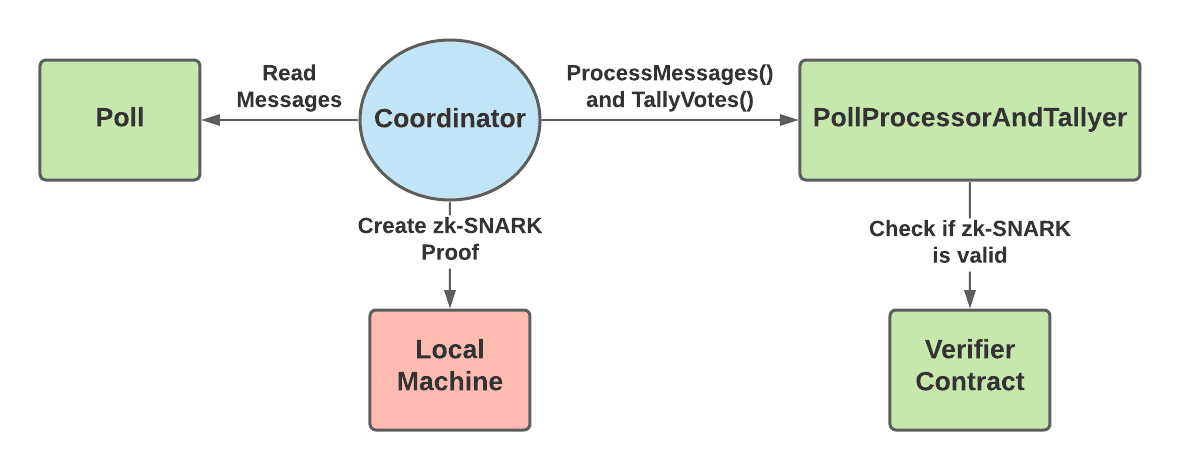

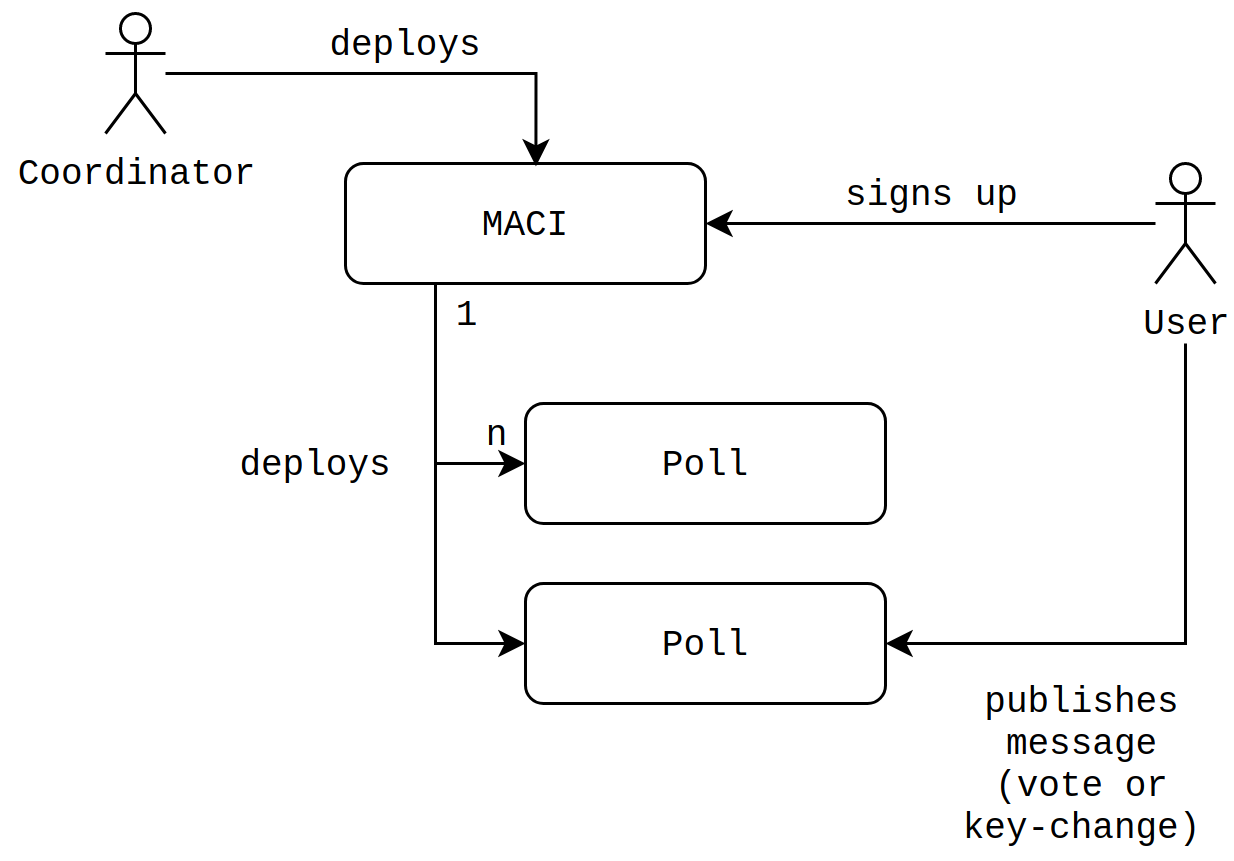

These phases make use of 3 main smart contracts — MACI, Poll and PollProcessorAndTallyer. These contracts can be found on the [MACI github page](https://github.com/appliedzkp/maci/tree/v1/contracts/contracts). The MACI contract is responsible for keeping track of all the user sign ups by recording the initial public key for each user. When a vote is going to take place, users can deploy a Poll smart contract via MACI.deployPoll().

|

||||

|

||||

The Poll smart contract is where users submit their messages. One MACI contract can be used for multiple polls. In other words, the users that signed up to the MACI contract can vote on multiple issues, with each issue represented by a distinct Poll contract.

|

||||

|

||||

Finally, the PollProcessorAndTallyer contract is used by the coordinator to prove on-chain that they are correctly tallying each vote. This process is explained in more detail in the Process Messages and Tally Results sections below.

|

||||

|

||||

|

||||

|

||||

## a. Sign Up

|

||||

|

||||

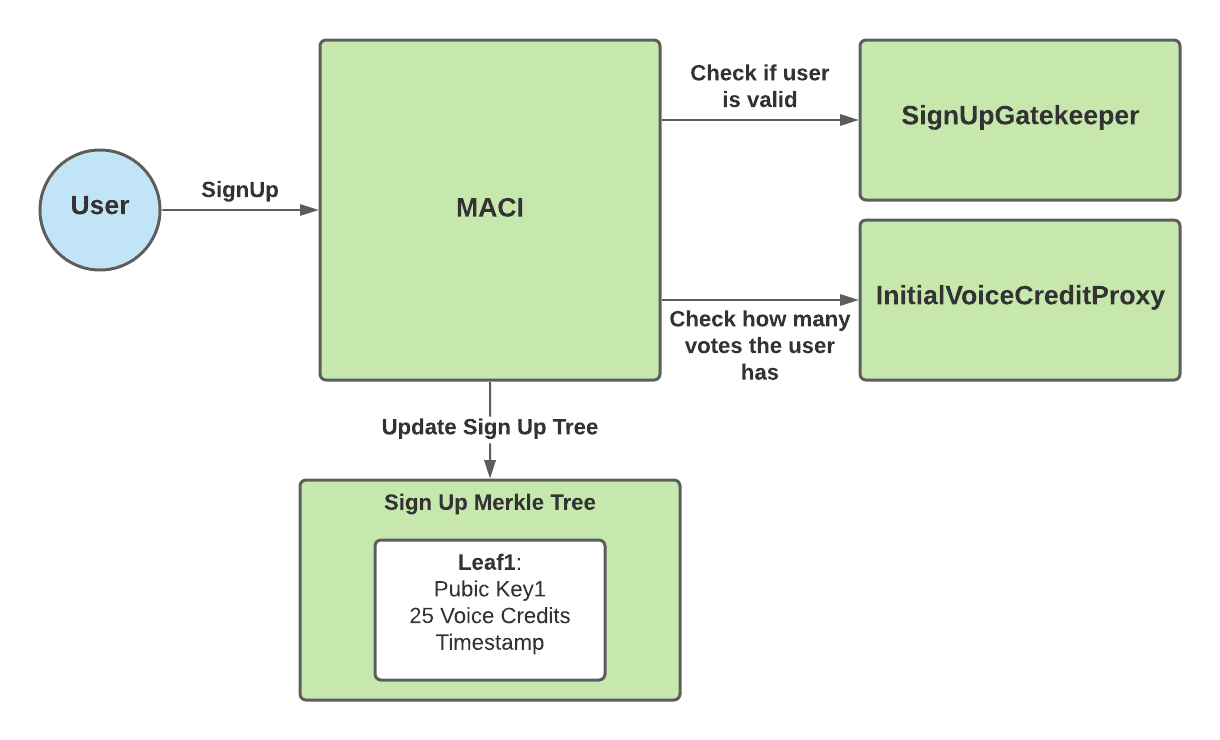

The sign up process for MACI is handled via the MACI.sol smart contract. Users need to send three pieces of information when calling MACI.signUp():

|

||||

|

||||

1. Public Key

|

||||

2. Sign Up Gatekeeper Data

|

||||

3. Initial Voice Credit Proxy Data

|

||||

|

||||

The public key is the original public key mentioned in above sections that the user will need to vote. As explained in earlier sections, they can change this public key later once voting starts. The user’s public key used to sign up is shared amongst every poll.

|

||||

|

||||

MACI allows the contract creator/owner to set a “signUpGateKeeper”. The sign up gatekeeper is meant to be the address of another smart contract that determines the rules to sign up. So, when a user calls MACI.signUp(), the function will call the sign up gatekeeper to check if this user is valid to sign up.

|

||||

|

||||

MACI also allows the contract creator/owner to set an “initialVoiceCreditProxy”. This represents the contract that determines how many votes a given user gets. So, when a user calls MACI.signUp(), the function will call the initial voice credit proxy to check how many votes they can spend. The user’s voice credit balance is reset to this number for every new poll.

|

||||

|

||||

Once MACI has checked that the user is valid and retrieved how many voice credits they have, MACI stores the following user info into the Sign Up Merkle Tree:

|

||||

|

||||

1. Public Key

|

||||

2. Voice Credits

|

||||

3. Timestamp

|

||||

|

||||

|

||||

|

||||

## b. Publish Message

|

||||

|

||||

Once it is time to vote, the MACI creator/owner will deploy a Poll smart contract. Then, users will call Poll.publishMessage() and send the following data:

|

||||

|

||||

1. Message

|

||||

2. Encryption Key

|

||||

|

||||

As explained in sections above, the coordinator will need to use the encryption key in order to derive a shared key. The coordinator can then use the shared key to decrypt the message into a command, which contains the vote.

|

||||

|

||||

Once a user publishes their message, the Poll contract will store the message and encryption key into the Message Merkle Tree.

|

||||

|

||||

## c. Process Messages

|

||||

|

||||

Once the voting is done for a specific poll, the coordinator will use the PollProcessAndTallyer contract to first prove that they have correctly decrypted each message and applied them to correctly create an updated state tree. This state tree keeps an account of all the valid votes that should be counted. So, when processing the messages, the coordinator will not keep messages that are later overridden by a newer message inside the state tree. For example, if a user votes for option A, but then later sends a new message to vote for option B, the coordinator will only count the vote for option B.

|

||||

|

||||

The coordinator must process messages in groups so that proving on chain does not exceed the data limit. The coordinator then creates a zk-SNARK proving their state tree correctly contains only the valid messages. Once the proof is ready, the coordinator calls PollProcessorAndTallyer.processMessages(), providing a hash of the state tree and the zk-SNARK proof as an input parameters.

|

||||

|

||||

The PollProcessorAndTallyer contract will send the proof to a separate verifier contract. The verifier contract is specifically built to read MACI zk-SNARK proofs and tell if they are valid or not. So, if the verifier contract returns true, then everyone can see on-chain that the coordinator correctly processed that batch of messages. The coordinator repeats this process until all messages have been processed.

|

||||

|

||||

## d. Tally Votes

|

||||

|

||||

Finally, once all messages have been processed, the coordinator tallies the votes of the valid messages. The coordinator creates a zk-SNARK proving that the valid messages in the state tree (proved in Process Messages step) contain votes that sum to the given tally result. Then, they call PollProcessorAndTallyer.tallyVotes() with a hash of the correct tally results and the zk-SNARK proof. Similarly to the processMessages function, the tallyVotes function will send the proof to a verifier contract to ensure that it is valid.

|

||||

|

||||

The tallyVotes function is only successful if the verifier contract returns that the proof is valid. Therefore, once the tallyVotes function succeeds, users can trust that the coordinator has correctly tallied all of the valid votes. After this step, anyone can see the final tally results and the proof that these results are a correct result of the messages sent to the Poll contract. The users won’t be able to see how any individual voted, but will be able to trust that these votes were properly processed and counted.

|

||||

|

||||

|

||||

|

||||

## 4\. Conclusion

|

||||

|

||||

MACI is a huge step forward in preventing collusion for on-chain votes. While it doesn’t prevent all possibilities of collusion, it does make it much harder. MACI can already be [seen](https://twitter.com/vitalikbuterin/status/1329012998585733120) to be in use by the [clr.fund](https://blog.clr.fund/round-4-review/), which has users vote on which projects to receive funding. When the possible funding amount becomes very large, users and organizations have a large incentive to collude to receive parts of these funds. This is where MACI can truly make a difference, to protect the fairness of such important voting processes such as those at clr.fund.

|

||||

64

articles/advancing-anon-aadhaar-whats-new-in-v100.md

Normal file

@@ -0,0 +1,64 @@

|

||||

---

|

||||

authors: ["Anon Aadhaar Team"]

|

||||

title: "Advancing Anon Aadhaar: what's new in v1.0.0"

|

||||

image: "cover.webp"

|

||||

tldr: "This post was written by the Anon Aadhaar team. If you’re new to Anon Aadhaar make sure to read our [initial announcement post](https://mirror.xyz/privacy-scaling-explorations.eth/6R8kACTYp9mF3eIpLZMXs8JAQmTyb6Uy8KnZqzmDFZI)."

|

||||

date: "2024-02-14"

|

||||

canonical: "https://mirror.xyz/privacy-scaling-explorations.eth/YnqHAxpjoWl4e_K2opKPN4OAy5EU4sIJYYYHFCjkNOE"

|

||||

---

|

||||

|

||||

### **Introducing Anon Aadhaar v1.0.0**

|

||||

|

||||

[Anon Aadhaar](https://github.com/anon-aadhaar/anon-aadhaar) is a protocol that enables [Aadhaar](https://en.wikipedia.org/wiki/Aadhaar) holders to prove their identity anonymously. It works by verifying the Aadhaar card's issuer signature, which is issued by the Indian government in formats like *PDF*, _XML_, and _Secure QR_ code. These digital versions are signed using RSA, involving a pair of keys: a private key for signing data and a public key for verification.

|

||||

|

||||

Our protocol leverages the [UIDAI's](https://uidai.gov.in/en/about-uidai.html) (government authority) RSA signature, enabling us to verify the documents as anyone could. The novelty of our approach is the use of a SNARK proof in the verification process, which hides sensitive data from the verifier, maintaining the same level of verification while enhancing privacy.

|

||||

|

||||

**Recap of Previous Version Developments**

|

||||

|

||||

In the previous version, we implemented RSA verification in Circom using Groth16. We used the eAadhaar PDF, which is easily downloadable by Aadhaar residents, for signature verification.

|

||||

|

||||

However, we encountered two major issues:

|

||||

|

||||

1. The PDF's size was too large for circuit input

|

||||

2. A changing timestamp in the document made it impossible to have a consistent identity hash

|

||||

|

||||

To overcome these obstacles, we transitioned to use the [Aadhaar secure QR code](https://uidai.gov.in/en/ecosystem/authentication-devices-documents/qr-code-reader.html) for verification purposes.

|

||||

|

||||

This method is not only broadly adopted but also readily accessible through the [mAadhaar](https://uidai.gov.in/en/contact-support/have-any-question/285-english-uk/faqs/your-aadhaar/maadhaar-faqs.html) mobile application or via the printed version of the e-Aadhaar PDF. This adjustment enhances the efficiency of verifying signed identity data and streamlines the process of document parsing within our system.

|

||||

|

||||

**Key Features in v1.0.0**

|

||||

|

||||

1. **SHA-256 Hash Verification**: leveraging [zk-email](https://github.com/zkemail) implementation, we've integrated SHA-256 hash verification alongside RSA verification, allowing us to work effectively with the signed data.

|

||||

2. **Extractor**: with verified data, our new Circom extractor implementation enables selective extraction of identity fields from the document.

|

||||

3. **Nullifiers**: we're now computing two types of nullifiers:

|

||||

|

||||

- **userNullifier**: this nullifier serves as a high-entropy, unique identifier for each user, virtually eliminating the possibility of collisions between the identifiers of different individuals. It is generated by hashing the combination of the last four digits of a user's Aadhaar number and their identity photo. The unique byte data of the photo enhances the identifier's uniqueness, ensuring distinctness even in the vast pool of users. This approach is particularly useful for app interactions, where collision avoidance is crucial.

|

||||

|

||||

```jsx

|

||||

userNullifier = Hash(last4, photo)

|

||||

```

|

||||

|

||||

- **identityNullifier**: This nullifier is constructed from a hash of various personal identity elements, including the last four digits of the Aadhaar number, date of birth (DOB), name, gender, and PIN code. The design of the identity nullifier allows for its easy recomputation by users, should there be any changes to their identity data. This feature is particularly valuable for maintaining continuity of identity within the system. For instance, if a user updates their photo—thus altering their user nullifier—they can still be linked to their historical interactions by recalculating the identity nullifier using readily available personal information.

|

||||

|

||||

```jsx

|

||||

identityNullifier=Hash(last4,name,DOB,gender,pin code)

|

||||

```

|

||||

|

||||

The dual nullifier system ensures both robust identity verification and the flexibility to accommodate changes in user data, maintaining a seamless user experience while safeguarding against identity collisions and enhancing privacy and security.

|

||||

|

||||

4. **Timestamp Check**: our circuit extracts the IST signature timestamp, captured at the moment the QR code is signed, and convert it to UNIX UTC timestamp. This serves as a real-time indicator of document issuance and user access to their UIDAI portal, functioning akin to a Time-based One-Time Password (TOTP) system. It ensures document freshness and validates recent user interaction, requiring proofs to be signed within a specified timeframe (e.g., less than 1 hour ago) for verification purposes.

|

||||

5. **Signal Signing**: this functionality empowers both applications and users to securely sign any data during the proof generation process, a critical component for implementing ERC-4337 standards. It facilitates the creation of Aadhaar-linked transaction hash signatures, offering a robust mechanism to deter front-running in on-chain transactions by anchoring the _msg.sender_ identity within smart contract interactions. This advancement paves the way for the development of Account Abstraction Wallets, enabling users to authenticate and execute transactions directly with their identity, streamlining the user experience while enhancing security.

|

||||

6. **Improved On-chain Verification Gas Cost**: outputting the issuer's public key hash from the circuit allows us to store this value in the AnonAadhaar smart contract, reducing on-chain verification costs.

|

||||

|

||||

**Looking Forward**

|

||||

|

||||

We are incredibly excited to see what developers will build using Anon Aadhaar v1! And invite you to join the [Anon Aadhaar Community](https://t.me/anon_aadhaar) to continue the conversation. To support and inspire your innovative projects, we prepared a variety of resources for you to try:

|

||||

|

||||

- **[GitHub Repository](https://github.com/anon-aadhaar/anon-aadhaar)**: dive into the codebase and explore the inner workings of our protocol.

|

||||

- **[Project ideas to Build with Anon Aadhaar:](https://github.com/anon-aadhaar/anon-aadhaar/discussions/155)** looking for inspiration? Here are some ideas we’ve compiled.

|

||||

- **[On-chain voting Example App](https://github.com/anon-aadhaar/boilerplate)**: get hands-on with a practical implementation to see how Anon Aadhaar can be integrated into real-world applications.

|

||||

- **[Quick Setup Repository](https://github.com/anon-aadhaar/quick-setup)**: for those eager to get started, this repository provides a streamlined Nextjs setup process.

|

||||

- **[Documentation](https://anon-aadhaar-documentation.vercel.app/)**: comprehensive and detailed, our documentation covers everything from basic setup to advanced features, ensuring you have the information needed at your fingertips.

|

||||

- **[Roadmap](https://github.com/privacy-scaling-explorations/bandada/discussions/350)**: get an idea about how we’re thinking of evolving the protocol.

|

||||

|

||||

We're eager to witness the creative and impactful ways in which the developer community will utilize Anon Aadhaar, pushing the boundaries of privacy and security in digital identity verification. Happy coding!

|

||||

85

articles/announcing-anon-aadhaar.md

Normal file

@@ -0,0 +1,85 @@

|

||||

---

|

||||

authors: ["Anon Aadhaar team"]

|

||||

title: "Announcing Anon Aadhaar"

|

||||

image: "cover.webp"

|

||||

tldr: "_This post was written by the Anon Aadhaar team._ /n/n _We’re excited to announce the public release of Anon Aadhaar!_"

|

||||

date: "2023-09-21"

|

||||

canonical: "https://mirror.xyz/privacy-scaling-explorations.eth/6R8kACTYp9mF3eIpLZMXs8JAQmTyb6Uy8KnZqzmDFZI"

|

||||

---

|

||||

|

||||

### What is Anon Aadhaar?

|

||||

|

||||

Anon Aadhaar is a protocol that lets users anonymously prove their Aadhaar (Indian) identity, in a very fast and simple way. The core of the protocol is the [circuits](https://github.com/privacy-scaling-explorations/anon-aadhaar/tree/main/packages/anon-aadhaar-pcd/circuits), but we also provide a SDK to let any app use the protocol.

|

||||

|

||||

[Try our demo](https://anon-aadhaar-example.vercel.app/) with your Aadhaar card or example files ([signed pdf](https://anon-aadhaar-documentation.vercel.app/assets/files/signed-66a64f9f9b3da47ff19b81f6510e26fe.pdf), [certificate file](https://anon-aadhaar-documentation.vercel.app/assets/files/certificate-8bda87cda7bd74771f70cc0df28fc400.cer)). Follow our tutorial by [building a voting app with Anon Aadhaar](https://anon-aadhaar-documentation.vercel.app/blog), fork our [example app](https://github.com/anon-aadhaar-private/anon-aadhaar-example) and build your own.

|

||||

|

||||

### Why Aadhaar cards?

|

||||

|

||||

The [Aadhaar program](https://en.wikipedia.org/wiki/Aadhaar) is among the largest digital identity schemes in the world. There are 1.2 billion people enrolled, accounting for around 90% of India’s population.

|

||||

|

||||

Aadhaar cards carry both demographic and biometric data, including the holder’s date of birth and its fingerprint. They are used in a variety of contexts such as loan agreements or housing applications. Bring this onchain in a privacy preserving way opens the possibility for many more applications on Ethereum.

|

||||

|

||||

Anon Aadhaar is one instantiation of the broader “Anonymous Credentials" with the goals of “[proof of citizenship](https://discord.com/channels/943612659163602974/1141757600568971304/1141759379578822707)”, “proof of identity”, “proof of passport”, “proof of personhood”, among others. Our approach leverages government identities, in this case Aadhaar Cards, to enhance digital interactions.

|

||||

|

||||

### Importance of Anonymity

|

||||

|

||||

A healthy society enables people to voice their concerns, opinions and ideas without fear or reprimands. Although there are many protocols that provide anonymity, anonymity without context lowers the value of the interactions. How can I be sure the opinions shared are not part of a bot network, campaign, or external influence for my country/DAO/company?

|

||||

|

||||

**Contextual anonymity is key to build trust** and enhance the value of noise to signal.

|

||||

|

||||

In the broader context, Anon Aadhaar supports [proof of personhood](https://vitalik.ca/general/2023/07/24/biometric.html) by adding a convenient privacy layer. We can talk about a “forth column” that leverages existing PKI and public government ID programs to enhance digital interactions.

|

||||

|

||||

|

||||

|

||||

_Table modified from [https://vitalik.ca/general/2023/07/24/biometric.html](https://vitalik.ca/general/2023/07/24/biometric.html)_

|

||||

|

||||

\*Low decentralization in regard to the Government being the single issuer of the IDs. But high decentralization in the verification and permissionless applications that can be built on top of them.

|

||||

|

||||

## Highlight Features

|

||||

|

||||

- SDK to directly integrate with your dapp

|

||||

- PCD package to leverage this framework

|

||||

- React package to quickly integrate your front-end

|

||||

- Example app to try it and and fork

|

||||

- Proving time ~30s (avg on browser)

|

||||

|

||||

### What it contains

|

||||

|

||||

- **[anon-aadhaar-contracts:](https://github.com/privacy-scaling-explorations/anon-aadhaar/tree/main/packages/anon-aadhaar-contracts)** import it directly in your smart contract to check on-chain that a user has a valid anon Aadhaar identity proof.