mirror of https://github.com/invoke-ai/InvokeAI.git

synced 2026-01-22 07:08:01 -05:00

Compare commits: ryan/sd35 ... ryan/gguf- (15 commits)

| SHA1 |
|---|
| bc300bc498 |
| c95a8d2a3c |
| b31ba61d23 |
| 579eb8718e |
| b4c5210902 |
| 1f58b26e74 |
| 2eba5457da |
| b1ac6f986e |
| 7213fceaa6 |
| faae144b35 |
| 17bf03ab7f |
| cc24a0e39f |
| 1a40b486e4 |
| 12f5247caa |
| d43dd97016 |

@@ -105,7 +105,7 @@ Invoke features an organized gallery system for easily storing, accessing, and r

### Other features

- Support for both ckpt and diffusers models

- SD1.5, SD2.0, SDXL, and FLUX support

- SD1.5, SD2.0, and SDXL support

- Upscaling Tools

- Embedding Manager & Support

- Model Manager & Support

@@ -38,9 +38,9 @@ RUN --mount=type=cache,target=/root/.cache/pip \

if [ "$TARGETPLATFORM" = "linux/arm64" ] || [ "$GPU_DRIVER" = "cpu" ]; then \

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/cpu"; \

elif [ "$GPU_DRIVER" = "rocm" ]; then \

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/rocm6.1"; \

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/rocm5.6"; \

else \

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/cu124"; \

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/cu121"; \

fi &&\

# xformers + triton fails to install on arm64
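The shell branch above picks a PyTorch wheel index from `TARGETPLATFORM` and `GPU_DRIVER`. A minimal Python sketch of the same decision, for illustration only: it hard-codes the `rocm6.1`/`cu124` URLs from the first line of each pair (the other side of this comparison uses `rocm5.6`/`cu121`), and the helper name `torch_extra_index_url` is hypothetical.

```python
def torch_extra_index_url(target_platform: str, gpu_driver: str) -> str:
    """Mirror the Dockerfile's if/elif/else chain (hypothetical helper)."""
    base = "https://download.pytorch.org/whl"
    # arm64 images and explicit CPU builds both fall back to CPU-only wheels
    if target_platform == "linux/arm64" or gpu_driver == "cpu":
        return f"{base}/cpu"
    if gpu_driver == "rocm":
        return f"{base}/rocm6.1"
    # Any other driver value (e.g. "cuda") gets the CUDA 12.4 wheels
    return f"{base}/cu124"
```

The Dockerfile then hands the result to pip as `--extra-index-url <url>`, so packages not found there still resolve from the default PyPI index.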

@@ -1,7 +1,7 @@

# Copyright (c) 2023 Eugene Brodsky https://github.com/ebr

x-invokeai: &invokeai

image: "ghcr.io/invoke-ai/invokeai:latest"

image: "local/invokeai:latest"

build:

context: ..

dockerfile: docker/Dockerfile

@@ -144,7 +144,7 @@ As you might have noticed, we added two new arguments to the `InputField`

definition for `width` and `height`, called `gt` and `le`. They stand for

_greater than or equal to_ and _less than or equal to_.

These impose constraints on those fields, and will raise an exception if the

These impose contraints on those fields, and will raise an exception if the

values do not meet the constraints. Field constraints are provided by

**pydantic**, so anything you see in the **pydantic docs** will work.
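In pydantic, `gt` means strictly greater than and `ge` means greater than or equal to (likewise `lt` and `le`), so "greater than or equal to" in the text above corresponds to `ge`. A stdlib-only sketch of how the four bound keywords behave; `check_bounds` is a hypothetical helper, not pydantic's implementation, and a real node would simply pass the keywords to `InputField` and let pydantic raise its own validation error.

```python
from typing import Optional


def check_bounds(
    value: float,
    gt: Optional[float] = None,
    ge: Optional[float] = None,
    lt: Optional[float] = None,
    le: Optional[float] = None,
) -> float:
    """Raise ValueError if value violates any of the given bounds (illustrative only)."""
    if gt is not None and not value > gt:
        raise ValueError(f"{value} must be greater than {gt}")
    if ge is not None and not value >= ge:
        raise ValueError(f"{value} must be greater than or equal to {ge}")
    if lt is not None and not value < lt:
        raise ValueError(f"{value} must be less than {lt}")
    if le is not None and not value <= le:
        raise ValueError(f"{value} must be less than or equal to {le}")
    return value
```

With these semantics `check_bounds(64, ge=64)` returns 64, while `check_bounds(64, gt=64)` raises, which is the practical difference between the two keywords at the boundary.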

@@ -239,7 +239,7 @@ Consult the

get it set up.

Suggest using VSCode's included settings sync so that your remote dev host has

all the same app settings and extensions automatically.

all the same app settings and extensions automagically.

##### One remote dev gotcha

@@ -2,7 +2,7 @@

## **What do I need to know to help?**

If you are looking to help with a code contribution, InvokeAI uses several different technologies under the hood: Python (Pydantic, FastAPI, diffusers) and Typescript (React, Redux Toolkit, ChakraUI, Mantine, Konva). Familiarity with StableDiffusion and image generation concepts is helpful, but not essential.

If you are looking to help to with a code contribution, InvokeAI uses several different technologies under the hood: Python (Pydantic, FastAPI, diffusers) and Typescript (React, Redux Toolkit, ChakraUI, Mantine, Konva). Familiarity with StableDiffusion and image generation concepts is helpful, but not essential.

## **Get Started**

@@ -1,6 +1,6 @@

# Tutorials

Tutorials help new & existing users expand their ability to use InvokeAI to the full extent of our features and services.

Tutorials help new & existing users expand their abilty to use InvokeAI to the full extent of our features and services.

Currently, we have a set of tutorials available on our [YouTube channel](https://www.youtube.com/@invokeai), but as InvokeAI continues to evolve with new updates, we want to ensure that we are giving our users the resources they need to succeed.

@@ -8,4 +8,4 @@ Tutorials can be in the form of videos or article walkthroughs on a subject of y

## Contributing

Please reach out to @imic or @hipsterusername on [Discord](https://discord.gg/ZmtBAhwWhy) to help create tutorials for InvokeAI.

Please reach out to @imic or @hipsterusername on [Discord](https://discord.gg/ZmtBAhwWhy) to help create tutorials for InvokeAI.

@@ -21,7 +21,6 @@ To use a community workflow, download the `.json` node graph file and load it in

+ [Clothing Mask](#clothing-mask)

+ [Contrast Limited Adaptive Histogram Equalization](#contrast-limited-adaptive-histogram-equalization)

+ [Depth Map from Wavefront OBJ](#depth-map-from-wavefront-obj)

+ [Enhance Detail](#enhance-detail)

+ [Film Grain](#film-grain)

+ [Generative Grammar-Based Prompt Nodes](#generative-grammar-based-prompt-nodes)

+ [GPT2RandomPromptMaker](#gpt2randompromptmaker)

@@ -41,7 +40,6 @@ To use a community workflow, download the `.json` node graph file and load it in

+ [Metadata-Linked](#metadata-linked-nodes)

+ [Negative Image](#negative-image)

+ [Nightmare Promptgen](#nightmare-promptgen)

+ [Ollama](#ollama-node)

+ [One Button Prompt](#one-button-prompt)

+ [Oobabooga](#oobabooga)

+ [Prompt Tools](#prompt-tools)

@@ -82,7 +80,7 @@ Note: These are inherited from the core nodes so any update to the core nodes sh

**Example Usage:**

</br>

<img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider.png" width="200" /> -> <img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider-depth.png" width="200" /> -> <img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider-dots.png" width="200" /> <img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider-pattern.png" width="200" />

<img src="https://github.com/skunkworxdark/autostereogram_nodes/blob/main/images/spider.png" width="200" /> -> <img src="https://github.com/skunkworxdark/autostereogram_nodes/blob/main/images/spider-depth.png" width="200" /> -> <img src="https://github.com/skunkworxdark/autostereogram_nodes/raw/main/images/spider-dots.png" width="200" /> <img src="https://github.com/skunkworxdark/autostereogram_nodes/raw/main/images/spider-pattern.png" width="200" />

--------------------------------

### Average Images

@@ -143,17 +141,6 @@ To be imported, an .obj must use triangulated meshes, so make sure to enable tha

**Example Usage:**

</br><img src="https://raw.githubusercontent.com/dwringer/depth-from-obj-node/main/depth_from_obj_usage.jpg" width="500" />

--------------------------------

### Enhance Detail

**Description:** A single node that can enhance the detail in an image. Increase or decrease details in an image using a guided filter (as opposed to the typical Gaussian blur used by most sharpening filters.) Based on the `Enhance Detail` ComfyUI node from https://github.com/spacepxl/ComfyUI-Image-Filters

**Node Link:** https://github.com/skunkworxdark/enhance-detail-node

**Example Usage:**

</br>

<img src="https://raw.githubusercontent.com/skunkworxdark/enhance-detail-node/refs/heads/main/images/Comparison.png" />

--------------------------------

### Film Grain

@@ -320,7 +307,7 @@ View:

**Node Link:** https://github.com/helix4u/load_video_frame

**Output Example:**

<img src="https://raw.githubusercontent.com/helix4u/load_video_frame/refs/heads/main/_git_assets/dance1736978273.gif" width="500" />

<img src="https://raw.githubusercontent.com/helix4u/load_video_frame/main/_git_assets/testmp4_embed_converted.gif" width="500" />

--------------------------------

### Make 3D

@@ -361,7 +348,7 @@ See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/mai

**Output Examples**

<img src="https://github.com/skunkworxdark/match_histogram/assets/21961335/ed12f329-a0ef-444a-9bae-129ed60d6097" />

<img src="https://github.com/skunkworxdark/match_histogram/assets/21961335/ed12f329-a0ef-444a-9bae-129ed60d6097" width="300" />

--------------------------------

### Metadata Linked Nodes

@@ -403,23 +390,10 @@ View:

|

||||

|

||||

**Node Link:** [https://github.com/gogurtenjoyer/nightmare-promptgen](https://github.com/gogurtenjoyer/nightmare-promptgen)

|

||||

|

||||

--------------------------------

|

||||

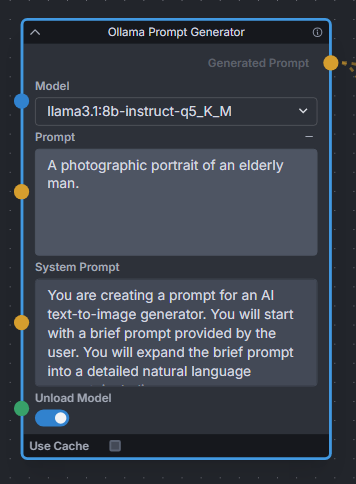

### Ollama Node

|

||||

|

||||

**Description:** Uses Ollama API to expand text prompts for text-to-image generation using local LLMs. Works great for expanding basic prompts into detailed natural language prompts for Flux. Also provides a toggle to unload the LLM model immediately after expanding, to free up VRAM for Invoke to continue the image generation workflow.

|

||||

|

||||

**Node Link:** https://github.com/Jonseed/Ollama-Node

|

||||

|

||||

**Example Node Graph:** https://github.com/Jonseed/Ollama-Node/blob/main/Ollama-Node-Flux-example.json

|

||||

|

||||

**View:**

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

### One Button Prompt

|

||||

|

||||

<img src="https://raw.githubusercontent.com/AIrjen/OneButtonPrompt_X_InvokeAI/refs/heads/main/images/background.png" width="800" />

|

||||

<img src="https://github.com/AIrjen/OneButtonPrompt_X_InvokeAI/blob/main/images/background.png" width="800" />

|

||||

|

||||

**Description:** an extensive suite of auto prompt generation and prompt helper nodes based on extensive logic. Get creative with the best prompt generator in the world.

|

||||

|

||||

@@ -429,7 +403,7 @@ The main node generates interesting prompts based on a set of parameters. There

**Nodes:**

<img src="https://raw.githubusercontent.com/AIrjen/OneButtonPrompt_X_InvokeAI/refs/heads/main/images/OBP_nodes_invokeai.png" width="800" />

<img src="https://github.com/AIrjen/OneButtonPrompt_X_InvokeAI/blob/main/images/OBP_nodes_invokeai.png" width="800" />

--------------------------------

### Oobabooga

@@ -482,7 +456,7 @@ See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/mai

**Workflow Examples**

<img src="https://raw.githubusercontent.com/skunkworxdark/prompt-tools/refs/heads/main/images/CSVToIndexStringNode.png"/>

<img src="https://github.com/skunkworxdark/prompt-tools/blob/main/images/CSVToIndexStringNode.png" width="300" />

--------------------------------

### Remote Image

@@ -620,7 +594,7 @@ See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/READ

**Output Examples**

<img src="https://raw.githubusercontent.com/skunkworxdark/XYGrid_nodes/refs/heads/main/images/collage.png" />

<img src="https://github.com/skunkworxdark/XYGrid_nodes/blob/main/images/collage.png" width="300" />

--------------------------------

6

flake.lock

generated


@@ -2,11 +2,11 @@

"nodes": {

"nixpkgs": {

"locked": {

"lastModified": 1727955264,

"narHash": "sha256-lrd+7mmb5NauRoMa8+J1jFKYVa+rc8aq2qc9+CxPDKc=",

"lastModified": 1690630721,

"narHash": "sha256-Y04onHyBQT4Erfr2fc82dbJTfXGYrf4V0ysLUYnPOP8=",

"owner": "NixOS",

"repo": "nixpkgs",

"rev": "71cd616696bd199ef18de62524f3df3ffe8b9333",

"rev": "d2b52322f35597c62abf56de91b0236746b2a03d",

"type": "github"

},

"original": {

@@ -34,7 +34,7 @@

cudaPackages.cudnn

cudaPackages.cuda_nvrtc

cudatoolkit

pkg-config

pkgconfig

libconfig

cmake

blas

@@ -66,7 +66,7 @@

black

# Frontend.

pnpm_8

yarn

nodejs

];

LD_LIBRARY_PATH = pkgs.lib.makeLibraryPath buildInputs;

@@ -12,7 +12,7 @@ MINIMUM_PYTHON_VERSION=3.10.0

MAXIMUM_PYTHON_VERSION=3.11.100

PYTHON=""

for candidate in python3.11 python3.10 python3 python ; do

if ppath=`which $candidate 2>/dev/null`; then

if ppath=`which $candidate`; then

# when using `pyenv`, the executable for an inactive Python version will exist but will not be operational

# we check that this found executable can actually run

if [ $($candidate --version &>/dev/null; echo ${PIPESTATUS}) -gt 0 ]; then continue; fi
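The loop above skips candidates that exist on `PATH` but cannot run (the inactive `pyenv` shim case). The comparison against `MINIMUM_PYTHON_VERSION` and `MAXIMUM_PYTHON_VERSION` is outside this hunk, so the Python sketch below only illustrates the idea under an assumed numeric tuple comparison; `find_python`, `version_in_range`, `parse_version` and `interpreter_version` are hypothetical names. Under such a comparison, a maximum of `3.11.100` admits every 3.11.x patch release.

```python
import re
import shutil
import subprocess
from typing import Callable, Iterable, Optional


def parse_version(text: str) -> tuple[int, ...]:
    """Extract (major, minor, patch) from '3.11.9' or 'Python 3.11.9'."""
    match = re.search(r"(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"no version found in {text!r}")
    return tuple(int(group) for group in match.groups())


def version_in_range(version: str, minimum: str = "3.10.0", maximum: str = "3.11.100") -> bool:
    # Numeric tuple comparison: (3, 11, 9) <= (3, 11, 100), so every 3.11.x passes
    return parse_version(minimum) <= parse_version(version) <= parse_version(maximum)


def interpreter_version(path: str) -> Optional[str]:
    """Return the '--version' output, or None if the executable cannot run."""
    try:
        result = subprocess.run([path, "--version"], capture_output=True, text=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return None
    if result.returncode != 0:
        return None  # e.g. an inactive pyenv shim exits with an error
    return (result.stdout or result.stderr).strip()


def find_python(
    candidates: Iterable[str] = ("python3.11", "python3.10", "python3", "python"),
    which: Callable[[str], Optional[str]] = shutil.which,
    get_version: Callable[[str], Optional[str]] = interpreter_version,
) -> Optional[str]:
    """Return the first candidate that is on PATH, runs, and is in range."""
    for candidate in candidates:
        path = which(candidate)
        if path is None:
            continue  # not on PATH
        version = get_version(path)
        if version is None:
            continue  # present but not operational
        if version_in_range(version):
            return path
    return None
```

Injecting `which` and `get_version` keeps the search order testable without depending on whichever interpreters happen to be installed.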

@@ -30,11 +30,10 @@ done

if [ -z "$PYTHON" ]; then

echo "A suitable Python interpreter could not be found"

echo "Please install Python $MINIMUM_PYTHON_VERSION or higher (maximum $MAXIMUM_PYTHON_VERSION) before running this script. See instructions at $INSTRUCTIONS for help."

echo "For the best user experience we suggest enlarging or maximizing this window now."

read -p "Press any key to exit"

exit -1

fi

echo "For the best user experience we suggest enlarging or maximizing this window now."

exec $PYTHON ./lib/main.py ${@}

read -p "Press any key to exit"

@@ -245,9 +245,6 @@ class InvokeAiInstance:

pip = local[self.pip]

# Uninstall xformers if it is present; the correct version of it will be reinstalled if needed

_ = pip["uninstall", "-yqq", "xformers"] & FG

pipeline = pip[

"install",

"--require-virtualenv",

@@ -285,6 +282,12 @@ class InvokeAiInstance:

shutil.copy(src, dest)

os.chmod(dest, 0o0755)

def update(self):

pass

def remove(self):

pass

### Utility functions ###

@@ -399,7 +402,7 @@ def get_torch_source() -> Tuple[str | None, str | None]:

:rtype: list

"""

from messages import GpuType, select_gpu

from messages import select_gpu

# device can be one of: "cuda", "rocm", "cpu", "cuda_and_dml, autodetect"

device = select_gpu()

@@ -409,22 +412,16 @@ def get_torch_source() -> Tuple[str | None, str | None]:

url = None

optional_modules: str | None = None

if OS == "Linux":

if device == GpuType.ROCM:

url = "https://download.pytorch.org/whl/rocm6.1"

elif device == GpuType.CPU:

if device.value == "rocm":

url = "https://download.pytorch.org/whl/rocm5.6"

elif device.value == "cpu":

url = "https://download.pytorch.org/whl/cpu"

elif device == GpuType.CUDA:

url = "https://download.pytorch.org/whl/cu124"

optional_modules = "[onnx-cuda]"

elif device == GpuType.CUDA_WITH_XFORMERS:

url = "https://download.pytorch.org/whl/cu124"

elif device.value == "cuda":

# CUDA uses the default PyPi index

optional_modules = "[xformers,onnx-cuda]"

elif OS == "Windows":

if device == GpuType.CUDA:

url = "https://download.pytorch.org/whl/cu124"

optional_modules = "[onnx-cuda]"

elif device == GpuType.CUDA_WITH_XFORMERS:

url = "https://download.pytorch.org/whl/cu124"

if device.value == "cuda":

url = "https://download.pytorch.org/whl/cu121"

optional_modules = "[xformers,onnx-cuda]"

elif device.value == "cpu":

# CPU uses the default PyPi index, no optional modules
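The `GpuType`-based lines in this hunk amount to a lookup from (OS, device) to a wheel index URL plus optional pip extras. A table-driven Python sketch of only the cases visible here; the hunk is cut off, so any combination not shown (macOS, for instance) falls through to `(None, None)`, which is an assumption of this sketch rather than documented installer behaviour. `TORCH_SOURCES` and `torch_source` are hypothetical names; the enum copies the `GpuType` values from this comparison.

```python
from enum import Enum
from typing import Optional, Tuple


class GpuType(Enum):
    CUDA_WITH_XFORMERS = "xformers"
    CUDA = "cuda"
    ROCM = "rocm"
    CPU = "cpu"


WHL = "https://download.pytorch.org/whl"

# (OS, device) -> (extra index URL, pip extras); None means "default PyPI index" / "no extras"
TORCH_SOURCES: dict[tuple[str, GpuType], Tuple[Optional[str], Optional[str]]] = {
    ("Linux", GpuType.ROCM): (f"{WHL}/rocm6.1", None),
    ("Linux", GpuType.CPU): (f"{WHL}/cpu", None),
    ("Linux", GpuType.CUDA): (f"{WHL}/cu124", "[onnx-cuda]"),
    ("Linux", GpuType.CUDA_WITH_XFORMERS): (f"{WHL}/cu124", "[xformers,onnx-cuda]"),
    ("Windows", GpuType.CUDA): (f"{WHL}/cu124", "[onnx-cuda]"),
    ("Windows", GpuType.CUDA_WITH_XFORMERS): (f"{WHL}/cu124", "[xformers,onnx-cuda]"),
    # "CPU uses the default PyPi index, no optional modules"
    ("Windows", GpuType.CPU): (None, None),
}


def torch_source(os_name: str, device: GpuType) -> Tuple[Optional[str], Optional[str]]:
    """Look up the index URL and extras; unknown combinations get (None, None)."""
    return TORCH_SOURCES.get((os_name, device), (None, None))
```

A table keeps each platform/device decision on one line, so bumping a CUDA version becomes a one-line edit; the installer itself expresses the same mapping as an if/elif chain.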

@@ -206,7 +206,6 @@ def dest_path(dest: Optional[str | Path] = None) -> Path | None:

class GpuType(Enum):

CUDA_WITH_XFORMERS = "xformers"

CUDA = "cuda"

ROCM = "rocm"

CPU = "cpu"

@@ -222,15 +221,11 @@ def select_gpu() -> GpuType:

return GpuType.CPU

nvidia = (

"an [gold1 b]NVIDIA[/] RTX 3060 or newer GPU using CUDA",

"an [gold1 b]NVIDIA[/] GPU (using CUDA™)",

GpuType.CUDA,

)

vintage_nvidia = (

"an [gold1 b]NVIDIA[/] RTX 20xx or older GPU using CUDA+xFormers",

GpuType.CUDA_WITH_XFORMERS,

)

amd = (

"an [gold1 b]AMD[/] GPU using ROCm",

"an [gold1 b]AMD[/] GPU (using ROCm™)",

GpuType.ROCM,

)

cpu = (

@@ -240,13 +235,14 @@ def select_gpu() -> GpuType:

options = []

if OS == "Windows":

options = [nvidia, vintage_nvidia, cpu]

options = [nvidia, cpu]

if OS == "Linux":

options = [nvidia, vintage_nvidia, amd, cpu]

options = [nvidia, amd, cpu]

elif OS == "Darwin":

options = [cpu]

if len(options) == 1:

print(f'Your platform [gold1]{OS}-{ARCH}[/] only supports the "{options[0][1]}" driver. Proceeding with that.')

return options[0][1]

options = {str(i): opt for i, opt in enumerate(options, 1)}
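`select_gpu` builds a per-OS list of `(label, GpuType)` tuples, returns immediately when only one option exists, and otherwise numbers the options from 1 for a prompt. A simplified, non-interactive sketch of that flow using the option lists that include `vintage_nvidia`; the labels are shortened placeholders (the real `cpu` label is cut off in this hunk), the `choose` callback is a hypothetical stand-in for the real prompt, the OS is passed in rather than read from a global, and the empty-list fallback to CPU is an assumption.

```python
from enum import Enum
from typing import Callable


class GpuType(Enum):
    CUDA_WITH_XFORMERS = "xformers"
    CUDA = "cuda"
    ROCM = "rocm"
    CPU = "cpu"


# (label, value) pairs; labels are simplified placeholders
NVIDIA = ("NVIDIA RTX 3060 or newer (CUDA)", GpuType.CUDA)
VINTAGE_NVIDIA = ("NVIDIA RTX 20xx or older (CUDA+xFormers)", GpuType.CUDA_WITH_XFORMERS)
AMD = ("AMD (ROCm)", GpuType.ROCM)
CPU = ("CPU only", GpuType.CPU)

Menu = dict[str, tuple[str, GpuType]]


def select_gpu(os_name: str, choose: Callable[[Menu], str]) -> GpuType:
    options: list[tuple[str, GpuType]] = []
    if os_name == "Windows":
        options = [NVIDIA, VINTAGE_NVIDIA, CPU]
    elif os_name == "Linux":
        options = [NVIDIA, VINTAGE_NVIDIA, AMD, CPU]
    elif os_name == "Darwin":
        options = [CPU]

    if not options:
        return GpuType.CPU  # assumption: unknown platforms fall back to CPU
    if len(options) == 1:
        return options[0][1]  # nothing to ask

    # Number the options from 1, matching the enumerate(options, 1) above
    menu: Menu = {str(i): opt for i, opt in enumerate(options, 1)}
    return menu[choose(menu)][1]
```

Passing the chooser in keeps the menu logic separate from terminal I/O; an answer that is not a menu key raises `KeyError` in this sketch.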

@@ -5,10 +5,9 @@ from fastapi.routing import APIRouter

from pydantic import BaseModel, Field

from invokeai.app.api.dependencies import ApiDependencies

from invokeai.app.services.board_records.board_records_common import BoardChanges, BoardRecordOrderBy

from invokeai.app.services.board_records.board_records_common import BoardChanges

from invokeai.app.services.boards.boards_common import BoardDTO

from invokeai.app.services.shared.pagination import OffsetPaginatedResults

from invokeai.app.services.shared.sqlite.sqlite_common import SQLiteDirection

boards_router = APIRouter(prefix="/v1/boards", tags=["boards"])

@@ -116,8 +115,6 @@ async def delete_board(

response_model=Union[OffsetPaginatedResults[BoardDTO], list[BoardDTO]],

)

async def list_boards(

order_by: BoardRecordOrderBy = Query(default=BoardRecordOrderBy.CreatedAt, description="The attribute to order by"),

direction: SQLiteDirection = Query(default=SQLiteDirection.Descending, description="The direction to order by"),

all: Optional[bool] = Query(default=None, description="Whether to list all boards"),

offset: Optional[int] = Query(default=None, description="The page offset"),

limit: Optional[int] = Query(default=None, description="The number of boards per page"),

@@ -125,9 +122,9 @@ async def list_boards(

) -> Union[OffsetPaginatedResults[BoardDTO], list[BoardDTO]]:

"""Gets a list of boards"""

if all:

return ApiDependencies.invoker.services.boards.get_all(order_by, direction, include_archived)

return ApiDependencies.invoker.services.boards.get_all(include_archived)

elif offset is not None and limit is not None:

return ApiDependencies.invoker.services.boards.get_many(order_by, direction, offset, limit, include_archived)

return ApiDependencies.invoker.services.boards.get_many(offset, limit, include_archived)

else:

raise HTTPException(

status_code=400,
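`list_boards` either returns every board (`all`) or one page addressed by `offset` and `limit`, and answers HTTP 400 when neither is supplied; `order_by`/`direction` only exist on one side of this comparison. A framework-free sketch of that dispatch; `Board`, `Page` and this `list_boards` are hypothetical stand-ins (`Page` is only loosely modelled on `OffsetPaginatedResults`), `offset` is treated as an item offset like SQL `OFFSET` (an assumption), and `ValueError` stands in for `HTTPException`.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass
class Board:
    name: str
    created_at: int  # e.g. a Unix timestamp


@dataclass
class Page:
    items: list[Board]
    offset: int
    limit: int
    total: int


def list_boards(
    boards: list[Board],
    order_by: str = "created_at",
    descending: bool = True,
    all: Optional[bool] = None,  # name kept to mirror the endpoint's query parameter
    offset: Optional[int] = None,
    limit: Optional[int] = None,
) -> Union[Page, list[Board]]:
    ordered = sorted(boards, key=lambda b: getattr(b, order_by), reverse=descending)
    if all:
        return ordered
    elif offset is not None and limit is not None:
        return Page(items=ordered[offset : offset + limit], offset=offset, limit=limit, total=len(boards))
    else:
        # The endpoint raises HTTPException(status_code=400) at this point
        raise ValueError("pass all=True, or both offset and limit")
```

Sorting before either branch means both the "all" list and each page honour the same ordering, which is what threading `order_by` and `direction` through `get_all` and `get_many` achieves.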

@@ -38,12 +38,7 @@ from invokeai.backend.model_manager.load.model_cache.model_cache_base import Cac

from invokeai.backend.model_manager.metadata.fetch.huggingface import HuggingFaceMetadataFetch

from invokeai.backend.model_manager.metadata.metadata_base import ModelMetadataWithFiles, UnknownMetadataException

from invokeai.backend.model_manager.search import ModelSearch

from invokeai.backend.model_manager.starter_models import (

STARTER_BUNDLES,

STARTER_MODELS,

StarterModel,

StarterModelWithoutDependencies,

)

from invokeai.backend.model_manager.starter_models import STARTER_MODELS, StarterModel, StarterModelWithoutDependencies

model_manager_router = APIRouter(prefix="/v2/models", tags=["model_manager"])

@@ -797,48 +792,22 @@ async def convert_model(

return new_config

class StarterModelResponse(BaseModel):

starter_models: list[StarterModel]

starter_bundles: dict[str, list[StarterModel]]

def get_is_installed(

starter_model: StarterModel | StarterModelWithoutDependencies, installed_models: list[AnyModelConfig]

) -> bool:

for model in installed_models:

if model.source == starter_model.source:

return True

if model.name == starter_model.name and model.base == starter_model.base and model.type == starter_model.type:

return True

return False

@model_manager_router.get("/starter_models", operation_id="get_starter_models", response_model=StarterModelResponse)

async def get_starter_models() -> StarterModelResponse:

@model_manager_router.get("/starter_models", operation_id="get_starter_models", response_model=list[StarterModel])

async def get_starter_models() -> list[StarterModel]:

installed_models = ApiDependencies.invoker.services.model_manager.store.search_by_attr()

installed_model_sources = {m.source for m in installed_models}

starter_models = deepcopy(STARTER_MODELS)

starter_bundles = deepcopy(STARTER_BUNDLES)

for model in starter_models:

model.is_installed = get_is_installed(model, installed_models)

if model.source in installed_model_sources:

model.is_installed = True

# Remove already-installed dependencies

missing_deps: list[StarterModelWithoutDependencies] = []

for dep in model.dependencies or []:

if not get_is_installed(dep, installed_models):

if dep.source not in installed_model_sources:

missing_deps.append(dep)

model.dependencies = missing_deps

for bundle in starter_bundles.values():

for model in bundle:

model.is_installed = get_is_installed(model, installed_models)

# Remove already-installed dependencies

missing_deps: list[StarterModelWithoutDependencies] = []

for dep in model.dependencies or []:

if not get_is_installed(dep, installed_models):

missing_deps.append(dep)

model.dependencies = missing_deps

return StarterModelResponse(starter_models=starter_models, starter_bundles=starter_bundles)

return starter_models

@model_manager_router.get(
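`get_is_installed` treats a starter model as installed if any installed model shares its `source`, or matches on all of `name`, `base` and `type`; the other side of the comparison checks `source` alone. A self-contained sketch of that rule and of the dependency pruning loop, using a hypothetical minimal `ModelRef` dataclass in place of `StarterModel` and `AnyModelConfig`; `prune_installed_deps` is likewise a hypothetical name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRef:
    # Hypothetical stand-in carrying only the attributes get_is_installed reads
    source: str
    name: str
    base: str
    type: str


def get_is_installed(starter: ModelRef, installed: list[ModelRef]) -> bool:
    for model in installed:
        # Same source: the starter model itself was installed
        if model.source == starter.source:
            return True
        # Same (name, base, type): treated as installed even if fetched from elsewhere
        if model.name == starter.name and model.base == starter.base and model.type == starter.type:
            return True
    return False


def prune_installed_deps(deps: list[ModelRef], installed: list[ModelRef]) -> list[ModelRef]:
    """Keep only dependencies that still need installing, like the missing_deps loop."""
    return [dep for dep in deps if not get_is_installed(dep, installed)]
```

The second check is what lets a model added from a different source still count as installed; with a `source`-only check it would be offered again.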

@@ -83,7 +83,7 @@ async def create_workflow(

)

async def list_workflows(

page: int = Query(default=0, description="The page to get"),

per_page: Optional[int] = Query(default=None, description="The number of workflows per page"),

per_page: int = Query(default=10, description="The number of workflows per page"),

order_by: WorkflowRecordOrderBy = Query(

default=WorkflowRecordOrderBy.Name, description="The attribute to order by"

),

@@ -93,5 +93,5 @@ async def list_workflows(

) -> PaginatedResults[WorkflowRecordListItemDTO]:

"""Gets a page of workflows"""

return ApiDependencies.invoker.services.workflow_records.get_many(

order_by=order_by, direction=direction, page=page, per_page=per_page, query=query, category=category

page=page, per_page=per_page, order_by=order_by, direction=direction, query=query, category=category

)

@@ -547,9 +547,7 @@ class DenoiseLatentsInvocation(BaseInvocation):

if not isinstance(single_ipa_image_fields, list):

single_ipa_image_fields = [single_ipa_image_fields]

single_ipa_images = [

context.images.get_pil(image.image_name, mode="RGB") for image in single_ipa_image_fields

]

single_ipa_images = [context.images.get_pil(image.image_name) for image in single_ipa_image_fields]

with image_encoder_model_info as image_encoder_model:

assert isinstance(image_encoder_model, CLIPVisionModelWithProjection)

# Get image embeddings from CLIP and ImageProjModel.

@@ -133,7 +133,6 @@ class FieldDescriptions:

clip_embed_model = "CLIP Embed loader"

unet = "UNet (scheduler, LoRAs)"

transformer = "Transformer"

mmditx = "MMDiTX"

vae = "VAE"

cond = "Conditioning tensor"

controlnet_model = "ControlNet model to load"

@@ -141,7 +140,6 @@ class FieldDescriptions:

lora_model = "LoRA model to load"

main_model = "Main model (UNet, VAE, CLIP) to load"

flux_model = "Flux model (Transformer) to load"

sd3_model = "SD3 model (MMDiTX) to load"

sdxl_main_model = "SDXL Main model (UNet, VAE, CLIP1, CLIP2) to load"

sdxl_refiner_model = "SDXL Refiner Main Modde (UNet, VAE, CLIP2) to load"

onnx_main_model = "ONNX Main model (UNet, VAE, CLIP) to load"

@@ -194,7 +192,6 @@ class FieldDescriptions:

freeu_s2 = 'Scaling factor for stage 2 to attenuate the contributions of the skip features. This is done to mitigate the "oversmoothing effect" in the enhanced denoising process.'

freeu_b1 = "Scaling factor for stage 1 to amplify the contributions of backbone features."

freeu_b2 = "Scaling factor for stage 2 to amplify the contributions of backbone features."

instantx_control_mode = "The control mode for InstantX ControlNet union models. Ignored for other ControlNet models. The standard mapping is: canny (0), tile (1), depth (2), blur (3), pose (4), gray (5), low quality (6). Negative values will be treated as 'None'."

class ImageField(BaseModel):

@@ -1,99 +0,0 @@

|

||||

from pydantic import BaseModel, Field, field_validator, model_validator

|

||||

|

||||

from invokeai.app.invocations.baseinvocation import (

|

||||

BaseInvocation,

|

||||

BaseInvocationOutput,

|

||||

Classification,

|

||||

invocation,

|

||||

invocation_output,

|

||||

)

|

||||

from invokeai.app.invocations.fields import FieldDescriptions, ImageField, InputField, OutputField, UIType

|

||||

from invokeai.app.invocations.model import ModelIdentifierField

|

||||

from invokeai.app.invocations.util import validate_begin_end_step, validate_weights

|

||||

from invokeai.app.services.shared.invocation_context import InvocationContext

|

||||

from invokeai.app.util.controlnet_utils import CONTROLNET_RESIZE_VALUES

|

||||

|

||||

|

||||

class FluxControlNetField(BaseModel):

|

||||

image: ImageField = Field(description="The control image")

|

||||

control_model: ModelIdentifierField = Field(description="The ControlNet model to use")

|

||||

control_weight: float | list[float] = Field(default=1, description="The weight given to the ControlNet")

|

||||

begin_step_percent: float = Field(

|

||||

default=0, ge=0, le=1, description="When the ControlNet is first applied (% of total steps)"

|

||||

)

|

||||

end_step_percent: float = Field(

|

||||

default=1, ge=0, le=1, description="When the ControlNet is last applied (% of total steps)"

|

||||

)

|

||||

resize_mode: CONTROLNET_RESIZE_VALUES = Field(default="just_resize", description="The resize mode to use")

|

||||

instantx_control_mode: int | None = Field(default=-1, description=FieldDescriptions.instantx_control_mode)

|

||||

|

||||

@field_validator("control_weight")

|

||||

@classmethod

|

||||

def validate_control_weight(cls, v: float | list[float]) -> float | list[float]:

|

||||

validate_weights(v)

|

||||

return v

|

||||

|

||||

@model_validator(mode="after")

|

||||

def validate_begin_end_step_percent(self):

|

||||

validate_begin_end_step(self.begin_step_percent, self.end_step_percent)

|

||||

return self

|

||||

|

||||

|

||||

@invocation_output("flux_controlnet_output")

|

||||

class FluxControlNetOutput(BaseInvocationOutput):

|

||||

"""FLUX ControlNet info"""

|

||||

|

||||

control: FluxControlNetField = OutputField(description=FieldDescriptions.control)

|

||||

|

||||

|

||||

@invocation(

|

||||

"flux_controlnet",

|

||||

title="FLUX ControlNet",

|

||||

tags=["controlnet", "flux"],

|

||||

category="controlnet",

|

||||

version="1.0.0",

|

||||

classification=Classification.Prototype,

|

||||

)

|

||||

class FluxControlNetInvocation(BaseInvocation):

|

||||

"""Collect FLUX ControlNet info to pass to other nodes."""

    image: ImageField = InputField(description="The control image")
    control_model: ModelIdentifierField = InputField(
        description=FieldDescriptions.controlnet_model, ui_type=UIType.ControlNetModel
    )
    control_weight: float | list[float] = InputField(
        default=1.0, ge=-1, le=2, description="The weight given to the ControlNet"
    )
    begin_step_percent: float = InputField(
        default=0, ge=0, le=1, description="When the ControlNet is first applied (% of total steps)"
    )
    end_step_percent: float = InputField(
        default=1, ge=0, le=1, description="When the ControlNet is last applied (% of total steps)"
    )
    resize_mode: CONTROLNET_RESIZE_VALUES = InputField(default="just_resize", description="The resize mode used")
    # Note: We default to -1 instead of None, because in the workflow editor UI None is not currently supported.
    instantx_control_mode: int | None = InputField(default=-1, description=FieldDescriptions.instantx_control_mode)

    @field_validator("control_weight")
    @classmethod
    def validate_control_weight(cls, v: float | list[float]) -> float | list[float]:
        validate_weights(v)
        return v

    @model_validator(mode="after")
    def validate_begin_end_step_percent(self):
        validate_begin_end_step(self.begin_step_percent, self.end_step_percent)
        return self

    def invoke(self, context: InvocationContext) -> FluxControlNetOutput:
        return FluxControlNetOutput(
            control=FluxControlNetField(
                image=self.image,
                control_model=self.control_model,
                control_weight=self.control_weight,
                begin_step_percent=self.begin_step_percent,
                end_step_percent=self.end_step_percent,
                resize_mode=self.resize_mode,
                instantx_control_mode=self.instantx_control_mode,
            ),
        )
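The two validators above delegate to `validate_weights` and `validate_begin_end_step` from `invokeai.app.invocations.util`; their bodies are not part of this diff. As a rough sketch of the checks they are assumed to perform, the stdlib functions below reject any weight outside the `[-1, 2]` range declared by `ge=-1, le=2` on `control_weight`, and any begin percent that comes after the end percent. The names `check_weights` and `check_begin_end_step` are hypothetical stand-ins, not InvokeAI code.

```python
from __future__ import annotations


def check_weights(weights: float | list[float]) -> None:
    """Hypothetical stand-in for validate_weights: every weight must lie in [-1, 2]."""
    values = weights if isinstance(weights, list) else [weights]
    for w in values:
        if not -1 <= w <= 2:
            raise ValueError(f"Control weight {w} is outside the [-1, 2] range")


def check_begin_end_step(begin_step_percent: float, end_step_percent: float) -> None:
    """Hypothetical stand-in for validate_begin_end_step: begin must not come after end."""
    if begin_step_percent > end_step_percent:
        raise ValueError(
            f"begin_step_percent ({begin_step_percent}) must be <= end_step_percent ({end_step_percent})"
        )


# A scalar weight and a per-step weight schedule both pass.
check_weights(1.0)
check_weights([0.0, 0.5, 2.0])
check_begin_end_step(0.0, 1.0)
```

Accepting both a scalar and a list mirrors the `float | list[float]` type of `control_weight`, where a list acts as a per-step schedule.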

@@ -1,38 +1,26 @@
from contextlib import ExitStack
from typing import Callable, Iterator, Optional, Tuple

import numpy as np
import numpy.typing as npt
import torch
import torchvision.transforms as tv_transforms
from torchvision.transforms.functional import resize as tv_resize
from transformers import CLIPImageProcessor, CLIPVisionModelWithProjection

from invokeai.app.invocations.baseinvocation import BaseInvocation, Classification, invocation
from invokeai.app.invocations.fields import (
    DenoiseMaskField,
    FieldDescriptions,
    FluxConditioningField,
    ImageField,
    Input,
    InputField,
    LatentsField,
    WithBoard,
    WithMetadata,
)
from invokeai.app.invocations.flux_controlnet import FluxControlNetField
from invokeai.app.invocations.ip_adapter import IPAdapterField
from invokeai.app.invocations.model import TransformerField, VAEField
from invokeai.app.invocations.model import TransformerField
from invokeai.app.invocations.primitives import LatentsOutput
from invokeai.app.services.shared.invocation_context import InvocationContext
from invokeai.backend.flux.controlnet.instantx_controlnet_flux import InstantXControlNetFlux
from invokeai.backend.flux.controlnet.xlabs_controlnet_flux import XLabsControlNetFlux
from invokeai.backend.flux.denoise import denoise
from invokeai.backend.flux.extensions.inpaint_extension import InpaintExtension
from invokeai.backend.flux.extensions.instantx_controlnet_extension import InstantXControlNetExtension
from invokeai.backend.flux.extensions.xlabs_controlnet_extension import XLabsControlNetExtension
from invokeai.backend.flux.extensions.xlabs_ip_adapter_extension import XLabsIPAdapterExtension
from invokeai.backend.flux.ip_adapter.xlabs_ip_adapter_flux import XlabsIpAdapterFlux
from invokeai.backend.flux.inpaint_extension import InpaintExtension
from invokeai.backend.flux.model import Flux
from invokeai.backend.flux.sampling_utils import (
    clip_timestep_schedule_fractional,
@@ -42,7 +30,7 @@ from invokeai.backend.flux.sampling_utils import (
    pack,
    unpack,
)
from invokeai.backend.lora.conversions.flux_lora_constants import FLUX_LORA_TRANSFORMER_PREFIX
from invokeai.backend.lora.conversions.flux_kohya_lora_conversion_utils import FLUX_KOHYA_TRANFORMER_PREFIX
from invokeai.backend.lora.lora_model_raw import LoRAModelRaw
from invokeai.backend.lora.lora_patcher import LoRAPatcher
from invokeai.backend.model_manager.config import ModelFormat
@@ -56,7 +44,7 @@ from invokeai.backend.util.devices import TorchDevice
    title="FLUX Denoise",
    tags=["image", "flux"],
    category="image",
    version="3.2.0",
    version="3.0.0",
    classification=Classification.Prototype,
)
class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
@@ -89,24 +77,6 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
    positive_text_conditioning: FluxConditioningField = InputField(
        description=FieldDescriptions.positive_cond, input=Input.Connection
    )
    negative_text_conditioning: FluxConditioningField | None = InputField(
        default=None,
        description="Negative conditioning tensor. Can be None if cfg_scale is 1.0.",
        input=Input.Connection,
    )
    cfg_scale: float | list[float] = InputField(default=1.0, description=FieldDescriptions.cfg_scale, title="CFG Scale")
    cfg_scale_start_step: int = InputField(
        default=0,
        title="CFG Scale Start Step",
        description="Index of the first step to apply cfg_scale. Negative indices count backwards from the "
        + "the last step (e.g. a value of -1 refers to the final step).",
    )
    cfg_scale_end_step: int = InputField(
        default=-1,
        title="CFG Scale End Step",
        description="Index of the last step to apply cfg_scale. Negative indices count backwards from the "
        + "last step (e.g. a value of -1 refers to the final step).",
    )
    width: int = InputField(default=1024, multiple_of=16, description="Width of the generated image.")
    height: int = InputField(default=1024, multiple_of=16, description="Height of the generated image.")
    num_steps: int = InputField(
@@ -117,18 +87,6 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
        description="The guidance strength. Higher values adhere more strictly to the prompt, and will produce less diverse images. FLUX dev only, ignored for schnell.",
    )
    seed: int = InputField(default=0, description="Randomness seed for reproducibility.")
    control: FluxControlNetField | list[FluxControlNetField] | None = InputField(
        default=None, input=Input.Connection, description="ControlNet models."
    )
    controlnet_vae: VAEField | None = InputField(
        default=None,
        description=FieldDescriptions.vae,
        input=Input.Connection,
    )

    ip_adapter: IPAdapterField | list[IPAdapterField] | None = InputField(
        description=FieldDescriptions.ip_adapter, title="IP-Adapter", default=None, input=Input.Connection
    )

    @torch.no_grad()
    def invoke(self, context: InvocationContext) -> LatentsOutput:
@@ -138,19 +96,6 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
        name = context.tensors.save(tensor=latents)
        return LatentsOutput.build(latents_name=name, latents=latents, seed=None)

    def _load_text_conditioning(
        self, context: InvocationContext, conditioning_name: str, dtype: torch.dtype
    ) -> Tuple[torch.Tensor, torch.Tensor]:
        # Load the conditioning data.
        cond_data = context.conditioning.load(conditioning_name)
        assert len(cond_data.conditionings) == 1
        flux_conditioning = cond_data.conditionings[0]
        assert isinstance(flux_conditioning, FLUXConditioningInfo)
        flux_conditioning = flux_conditioning.to(dtype=dtype)
        t5_embeddings = flux_conditioning.t5_embeds
        clip_embeddings = flux_conditioning.clip_embeds
        return t5_embeddings, clip_embeddings

    def _run_diffusion(
        self,
        context: InvocationContext,
@@ -158,15 +103,13 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
        inference_dtype = torch.bfloat16

        # Load the conditioning data.
        pos_t5_embeddings, pos_clip_embeddings = self._load_text_conditioning(
            context, self.positive_text_conditioning.conditioning_name, inference_dtype
        )
        neg_t5_embeddings: torch.Tensor | None = None
        neg_clip_embeddings: torch.Tensor | None = None
        if self.negative_text_conditioning is not None:
            neg_t5_embeddings, neg_clip_embeddings = self._load_text_conditioning(
                context, self.negative_text_conditioning.conditioning_name, inference_dtype
            )
        cond_data = context.conditioning.load(self.positive_text_conditioning.conditioning_name)
        assert len(cond_data.conditionings) == 1
        flux_conditioning = cond_data.conditionings[0]
        assert isinstance(flux_conditioning, FLUXConditioningInfo)
        flux_conditioning = flux_conditioning.to(dtype=inference_dtype)
        t5_embeddings = flux_conditioning.t5_embeds
        clip_embeddings = flux_conditioning.clip_embeds

        # Load the input latents, if provided.
        init_latents = context.tensors.load(self.latents.latents_name) if self.latents else None
@@ -224,19 +167,11 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):

        inpaint_mask = self._prep_inpaint_mask(context, x)

        b, _c, latent_h, latent_w = x.shape
        img_ids = generate_img_ids(h=latent_h, w=latent_w, batch_size=b, device=x.device, dtype=x.dtype)
        b, _c, h, w = x.shape
        img_ids = generate_img_ids(h=h, w=w, batch_size=b, device=x.device, dtype=x.dtype)

        pos_bs, pos_t5_seq_len, _ = pos_t5_embeddings.shape
        pos_txt_ids = torch.zeros(
            pos_bs, pos_t5_seq_len, 3, dtype=inference_dtype, device=TorchDevice.choose_torch_device()
        )
        neg_txt_ids: torch.Tensor | None = None
        if neg_t5_embeddings is not None:
            neg_bs, neg_t5_seq_len, _ = neg_t5_embeddings.shape
            neg_txt_ids = torch.zeros(
                neg_bs, neg_t5_seq_len, 3, dtype=inference_dtype, device=TorchDevice.choose_torch_device()
            )
        bs, t5_seq_len, _ = t5_embeddings.shape
        txt_ids = torch.zeros(bs, t5_seq_len, 3, dtype=inference_dtype, device=TorchDevice.choose_torch_device())

        # Pack all latent tensors.
        init_latents = pack(init_latents) if init_latents is not None else None
@@ -257,36 +192,12 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
            noise=noise,
        )

        # Compute the IP-Adapter image prompt clip embeddings.
        # We do this before loading other models to minimize peak memory.
        # TODO(ryand): We should really do this in a separate invocation to benefit from caching.
        ip_adapter_fields = self._normalize_ip_adapter_fields()
        pos_image_prompt_clip_embeds, neg_image_prompt_clip_embeds = self._prep_ip_adapter_image_prompt_clip_embeds(
            ip_adapter_fields, context
        )

        cfg_scale = self.prep_cfg_scale(
            cfg_scale=self.cfg_scale,
            timesteps=timesteps,
            cfg_scale_start_step=self.cfg_scale_start_step,
            cfg_scale_end_step=self.cfg_scale_end_step,
        )

        with ExitStack() as exit_stack:
            # Prepare ControlNet extensions.
            # Note: We do this before loading the transformer model to minimize peak memory (see implementation).
            controlnet_extensions = self._prep_controlnet_extensions(
                context=context,
                exit_stack=exit_stack,
                latent_height=latent_h,
                latent_width=latent_w,
                dtype=inference_dtype,
                device=x.device,
            )

            # Load the transformer model.
            (cached_weights, transformer) = exit_stack.enter_context(transformer_info.model_on_device())
        with (
            transformer_info.model_on_device() as (cached_weights, transformer),
            ExitStack() as exit_stack,
        ):
            assert isinstance(transformer, Flux)

            config = transformer_info.config
            assert config is not None
@@ -298,7 +209,7 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
                    LoRAPatcher.apply_lora_patches(
                        model=transformer,
                        patches=self._lora_iterator(context),
                        prefix=FLUX_LORA_TRANSFORMER_PREFIX,
                        prefix=FLUX_KOHYA_TRANFORMER_PREFIX,
                        cached_weights=cached_weights,
                    )
                )
@@ -313,95 +224,29 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
                    LoRAPatcher.apply_lora_sidecar_patches(
                        model=transformer,
                        patches=self._lora_iterator(context),
                        prefix=FLUX_LORA_TRANSFORMER_PREFIX,
                        prefix=FLUX_KOHYA_TRANFORMER_PREFIX,
                        dtype=inference_dtype,
                    )
                )
            else:
                raise ValueError(f"Unsupported model format: {config.format}")

            # Prepare IP-Adapter extensions.
            pos_ip_adapter_extensions, neg_ip_adapter_extensions = self._prep_ip_adapter_extensions(
                pos_image_prompt_clip_embeds=pos_image_prompt_clip_embeds,
                neg_image_prompt_clip_embeds=neg_image_prompt_clip_embeds,
                ip_adapter_fields=ip_adapter_fields,
                context=context,
                exit_stack=exit_stack,
                dtype=inference_dtype,
            )

            x = denoise(
                model=transformer,
                img=x,
                img_ids=img_ids,
                txt=pos_t5_embeddings,
                txt_ids=pos_txt_ids,
                vec=pos_clip_embeddings,
                neg_txt=neg_t5_embeddings,
                neg_txt_ids=neg_txt_ids,
                neg_vec=neg_clip_embeddings,
                txt=t5_embeddings,
                txt_ids=txt_ids,
                vec=clip_embeddings,
                timesteps=timesteps,
                step_callback=self._build_step_callback(context),
                guidance=self.guidance,
                cfg_scale=cfg_scale,
                inpaint_extension=inpaint_extension,
                controlnet_extensions=controlnet_extensions,
                pos_ip_adapter_extensions=pos_ip_adapter_extensions,
                neg_ip_adapter_extensions=neg_ip_adapter_extensions,
            )

        x = unpack(x.float(), self.height, self.width)
        return x
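The `denoise` call above passes both positive and negative text embeddings along with the per-step `cfg_scale` list. The body of `denoise` is not shown in this diff, so the following is only a sketch of the usual classifier-free guidance combination, `neg + scale * (pos - neg)`, applied element-wise to plain Python lists standing in for the model's predictions; the function name `apply_cfg` is hypothetical. With a scale of 1.0 the expression reduces mathematically to the positive prediction, which matches the comment in `prep_cfg_scale` that a value of 1.0 disables cfg_scale for a step.

```python
def apply_cfg(pos_pred: list[float], neg_pred: list[float], cfg_scale: float) -> list[float]:
    """Classifier-free guidance: move from the negative prediction toward (and past) the positive one."""
    if len(pos_pred) != len(neg_pred):
        raise ValueError("pos_pred and neg_pred must have the same length")
    return [neg + cfg_scale * (pos - neg) for pos, neg in zip(pos_pred, neg_pred)]


pos = [1.0, 2.0]
neg = [0.0, 1.0]
print(apply_cfg(pos, neg, 1.0))  # [1.0, 2.0]: scale 1.0 keeps the positive prediction
print(apply_cfg(pos, neg, 2.0))  # [2.0, 3.0]: scale 2.0 extrapolates away from the negative prediction
```

Because a scale of 1.0 makes the negative branch irrelevant, a real implementation can skip the negative forward pass on those steps to save compute; whether FLUX's `denoise` does so is not visible here.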

    @classmethod
    def prep_cfg_scale(
        cls, cfg_scale: float | list[float], timesteps: list[float], cfg_scale_start_step: int, cfg_scale_end_step: int
    ) -> list[float]:
        """Prepare the cfg_scale schedule.

        - Clips the cfg_scale schedule based on cfg_scale_start_step and cfg_scale_end_step.
        - If cfg_scale is a list, then it is assumed to be a schedule and is returned as-is.
        - If cfg_scale is a scalar, then a linear schedule is created from cfg_scale_start_step to cfg_scale_end_step.
        """
        # num_steps is the number of denoising steps, which is one less than the number of timesteps.
        num_steps = len(timesteps) - 1

        # Normalize cfg_scale to a list if it is a scalar.
        cfg_scale_list: list[float]
        if isinstance(cfg_scale, float):
            cfg_scale_list = [cfg_scale] * num_steps
        elif isinstance(cfg_scale, list):
            cfg_scale_list = cfg_scale
        else:
            raise ValueError(f"Unsupported cfg_scale type: {type(cfg_scale)}")
        assert len(cfg_scale_list) == num_steps

        # Handle negative indices for cfg_scale_start_step and cfg_scale_end_step.
        start_step_index = cfg_scale_start_step
        if start_step_index < 0:
            start_step_index = num_steps + start_step_index
        end_step_index = cfg_scale_end_step
        if end_step_index < 0:
            end_step_index = num_steps + end_step_index

        # Validate the start and end step indices.
        if not (0 <= start_step_index < num_steps):
            raise ValueError(f"Invalid cfg_scale_start_step. Out of range: {cfg_scale_start_step}.")
        if not (0 <= end_step_index < num_steps):
            raise ValueError(f"Invalid cfg_scale_end_step. Out of range: {cfg_scale_end_step}.")
        if start_step_index > end_step_index:
            raise ValueError(
                f"cfg_scale_start_step ({cfg_scale_start_step}) must be before cfg_scale_end_step "
                + f"({cfg_scale_end_step})."
            )

        # Set values outside the start and end step indices to 1.0. This is equivalent to disabling cfg_scale for those
        # steps.
        clipped_cfg_scale = [1.0] * num_steps
        clipped_cfg_scale[start_step_index : end_step_index + 1] = cfg_scale_list[start_step_index : end_step_index + 1]

        return clipped_cfg_scale
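To make the schedule concrete, here is the logic of `prep_cfg_scale` lifted into a standalone function. The name `clip_cfg_schedule` is hypothetical, and the length check raises `ValueError` instead of using `assert`. Note that a scalar `cfg_scale` produces a constant value across the active window rather than the linear schedule the docstring mentions, and every step outside the inclusive `[start, end]` window falls back to 1.0.

```python
from __future__ import annotations


def clip_cfg_schedule(
    cfg_scale: float | list[float], timesteps: list[float], start_step: int, end_step: int
) -> list[float]:
    # There is one denoising step between each consecutive pair of timesteps.
    num_steps = len(timesteps) - 1

    # Expand a scalar into a constant per-step schedule.
    if isinstance(cfg_scale, float):
        cfg_list = [cfg_scale] * num_steps
    elif isinstance(cfg_scale, list):
        cfg_list = cfg_scale
    else:
        raise ValueError(f"Unsupported cfg_scale type: {type(cfg_scale)}")
    if len(cfg_list) != num_steps:
        raise ValueError(f"Expected {num_steps} cfg_scale values, got {len(cfg_list)}")

    # Negative indices count back from the last step, as with Python list indexing.
    start = start_step + num_steps if start_step < 0 else start_step
    end = end_step + num_steps if end_step < 0 else end_step
    if not 0 <= start < num_steps:
        raise ValueError(f"start_step out of range: {start_step}")
    if not 0 <= end < num_steps:
        raise ValueError(f"end_step out of range: {end_step}")
    if start > end:
        raise ValueError(f"start_step ({start_step}) must not be after end_step ({end_step})")

    # 1.0 disables guidance, so only the inclusive [start, end] window keeps its scale.
    clipped = [1.0] * num_steps
    clipped[start : end + 1] = cfg_list[start : end + 1]
    return clipped


timesteps = [1.0, 0.75, 0.5, 0.25, 0.0]  # 5 timesteps -> 4 denoising steps
print(clip_cfg_schedule(3.5, timesteps, 0, -1))  # [3.5, 3.5, 3.5, 3.5]
print(clip_cfg_schedule(3.5, timesteps, 1, -2))  # [1.0, 3.5, 3.5, 1.0]
```

With the field defaults (`cfg_scale_start_step=0`, `cfg_scale_end_step=-1`) every step keeps its scale; narrowing the window is how guidance can be limited to, say, the early steps only.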

    def _prep_inpaint_mask(self, context: InvocationContext, latents: torch.Tensor) -> torch.Tensor | None:
        """Prepare the inpaint mask.

@@ -443,210 +288,6 @@ class FluxDenoiseInvocation(BaseInvocation, WithMetadata, WithBoard):
        # `latents`.
        return mask.expand_as(latents)

    def _prep_controlnet_extensions(
        self,
        context: InvocationContext,
        exit_stack: ExitStack,
        latent_height: int,
        latent_width: int,
        dtype: torch.dtype,
        device: torch.device,
    ) -> list[XLabsControlNetExtension | InstantXControlNetExtension]:
        # Normalize the controlnet input to list[ControlField].
        controlnets: list[FluxControlNetField]
        if self.control is None:
            controlnets = []
        elif isinstance(self.control, FluxControlNetField):
            controlnets = [self.control]
        elif isinstance(self.control, list):
            controlnets = self.control
        else:
            raise ValueError(f"Unsupported controlnet type: {type(self.control)}")

        # TODO(ryand): Add a field to the model config so that we can distinguish between XLabs and InstantX ControlNets
        # before loading the models. Then make sure that all VAE encoding is done before loading the ControlNets to
        # minimize peak memory.

        # First, load the ControlNet models so that we can determine the ControlNet types.
        controlnet_models = [context.models.load(controlnet.control_model) for controlnet in controlnets]

        # Calculate the controlnet conditioning tensors.
        # We do this before loading the ControlNet models because it may require running the VAE, and we are trying to
        # keep peak memory down.
        controlnet_conds: list[torch.Tensor] = []
        for controlnet, controlnet_model in zip(controlnets, controlnet_models, strict=True):
            image = context.images.get_pil(controlnet.image.image_name)
            if isinstance(controlnet_model.model, InstantXControlNetFlux):
                if self.controlnet_vae is None:
                    raise ValueError("A ControlNet VAE is required when using an InstantX FLUX ControlNet.")
                vae_info = context.models.load(self.controlnet_vae.vae)
                controlnet_conds.append(
                    InstantXControlNetExtension.prepare_controlnet_cond(
                        controlnet_image=image,
                        vae_info=vae_info,
                        latent_height=latent_height,
                        latent_width=latent_width,
                        dtype=dtype,
                        device=device,
                        resize_mode=controlnet.resize_mode,
                    )
                )
            elif isinstance(controlnet_model.model, XLabsControlNetFlux):
                controlnet_conds.append(
                    XLabsControlNetExtension.prepare_controlnet_cond(
                        controlnet_image=image,
                        latent_height=latent_height,
                        latent_width=latent_width,
                        dtype=dtype,
                        device=device,
                        resize_mode=controlnet.resize_mode,
                    )
                )

        # Finally, load the ControlNet models and initialize the ControlNet extensions.
        controlnet_extensions: list[XLabsControlNetExtension | InstantXControlNetExtension] = []
        for controlnet, controlnet_cond, controlnet_model in zip(
            controlnets, controlnet_conds, controlnet_models, strict=True
        ):
            model = exit_stack.enter_context(controlnet_model)

            if isinstance(model, XLabsControlNetFlux):
                controlnet_extensions.append(
                    XLabsControlNetExtension(
                        model=model,
                        controlnet_cond=controlnet_cond,
                        weight=controlnet.control_weight,
                        begin_step_percent=controlnet.begin_step_percent,
                        end_step_percent=controlnet.end_step_percent,
                    )
                )
            elif isinstance(model, InstantXControlNetFlux):
                instantx_control_mode: torch.Tensor | None = None
                if controlnet.instantx_control_mode is not None and controlnet.instantx_control_mode >= 0:
                    instantx_control_mode = torch.tensor(controlnet.instantx_control_mode, dtype=torch.long)
                    instantx_control_mode = instantx_control_mode.reshape([-1, 1])

                controlnet_extensions.append(
                    InstantXControlNetExtension(
                        model=model,
                        controlnet_cond=controlnet_cond,
                        instantx_control_mode=instantx_control_mode,
                        weight=controlnet.control_weight,
                        begin_step_percent=controlnet.begin_step_percent,
                        end_step_percent=controlnet.end_step_percent,
                    )
                )
            else:
                raise ValueError(f"Unsupported ControlNet model type: {type(model)}")

        return controlnet_extensions
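Each ControlNet extension receives `weight`, `begin_step_percent`, and `end_step_percent`, but how the extensions turn those into a per-step weight is outside this diff. One plausible mapping, written here as an assumption rather than a copy of the extension code, converts the percentages to an inclusive step window (floor for the start, ceil for the end) and returns zero outside it. `controlnet_weight_at_step` is a hypothetical name.

```python
from __future__ import annotations

import math


def controlnet_weight_at_step(
    weight: float | list[float],
    begin_step_percent: float,
    end_step_percent: float,
    step_index: int,
    total_num_steps: int,
) -> float:
    # Convert the fractional window into step indices (assumed rounding: floor start, ceil end).
    first_step = math.floor(begin_step_percent * total_num_steps)
    last_step = math.ceil(end_step_percent * total_num_steps)
    # Outside the window the ControlNet contributes nothing.
    if step_index < first_step or step_index > last_step:
        return 0.0
    # A list is treated as a per-step schedule; a scalar applies to every active step.
    if isinstance(weight, list):
        return weight[step_index]
    return weight


print([controlnet_weight_at_step(0.8, 0.2, 0.5, i, 10) for i in range(10)])
# [0.0, 0.0, 0.8, 0.8, 0.8, 0.8, 0.0, 0.0, 0.0, 0.0]
```

Returning 0.0 rather than skipping the extension keeps the calling loop uniform: every extension is queried on every step and simply contributes nothing outside its window.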

    def _normalize_ip_adapter_fields(self) -> list[IPAdapterField]:
        if self.ip_adapter is None:
            return []
        elif isinstance(self.ip_adapter, IPAdapterField):
            return [self.ip_adapter]
        elif isinstance(self.ip_adapter, list):
            return self.ip_adapter
        else:
            raise ValueError(f"Unsupported IP-Adapter type: {type(self.ip_adapter)}")
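`_prep_controlnet_extensions` and `_normalize_ip_adapter_fields` both flatten a field typed `X | list[X] | None` into `list[X]`. A generic version of that pattern might look like the sketch below; `normalize_to_list` is a hypothetical helper, not something InvokeAI defines. As in the originals, a list input is returned as-is rather than copied.

```python
from __future__ import annotations

from typing import TypeVar

T = TypeVar("T")


def normalize_to_list(value: T | list[T] | None, item_type: type[T]) -> list[T]:
    """Flatten an optional single item or list of items into a list."""
    if value is None:
        return []
    if isinstance(value, item_type):
        return [value]
    if isinstance(value, list):
        return value
    raise ValueError(f"Unsupported type: {type(value)}")


print(normalize_to_list(None, int))    # []
print(normalize_to_list(7, int))       # [7]
print(normalize_to_list([1, 2], int))  # [1, 2]
```

Passing `item_type` explicitly is needed because a `TypeVar` cannot be used in an `isinstance` check at runtime.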

    def _prep_ip_adapter_image_prompt_clip_embeds(
        self,
        ip_adapter_fields: list[IPAdapterField],
        context: InvocationContext,
    ) -> tuple[list[torch.Tensor], list[torch.Tensor]]:
        """Run the IPAdapter CLIPVisionModel, returning image prompt embeddings."""
        clip_image_processor = CLIPImageProcessor()

        pos_image_prompt_clip_embeds: list[torch.Tensor] = []
        neg_image_prompt_clip_embeds: list[torch.Tensor] = []
        for ip_adapter_field in ip_adapter_fields:
            # `ip_adapter_field.image` could be a list or a single ImageField. Normalize to a list here.
            ipa_image_fields: list[ImageField]
            if isinstance(ip_adapter_field.image, ImageField):
                ipa_image_fields = [ip_adapter_field.image]
            elif isinstance(ip_adapter_field.image, list):
                ipa_image_fields = ip_adapter_field.image
            else:
                raise ValueError(f"Unsupported IP-Adapter image type: {type(ip_adapter_field.image)}")

            if len(ipa_image_fields) != 1:
                raise ValueError(
                    f"FLUX IP-Adapter only supports a single image prompt (received {len(ipa_image_fields)})."
                )

            ipa_images = [context.images.get_pil(image.image_name, mode="RGB") for image in ipa_image_fields]

            pos_images: list[npt.NDArray[np.uint8]] = []
            neg_images: list[npt.NDArray[np.uint8]] = []
            for ipa_image in ipa_images:
                assert ipa_image.mode == "RGB"
                pos_image = np.array(ipa_image)
                # We use a black image as the negative image prompt for parity with
                # https://github.com/XLabs-AI/x-flux-comfyui/blob/45c834727dd2141aebc505ae4b01f193a8414e38/nodes.py#L592-L593
                # An alternative scheme would be to apply zeros_like() after calling the clip_image_processor.
                neg_image = np.zeros_like(pos_image)
                pos_images.append(pos_image)
                neg_images.append(neg_image)

            with context.models.load(ip_adapter_field.image_encoder_model) as image_encoder_model:
                assert isinstance(image_encoder_model, CLIPVisionModelWithProjection)

                clip_image: torch.Tensor = clip_image_processor(images=pos_images, return_tensors="pt").pixel_values
                clip_image = clip_image.to(device=image_encoder_model.device, dtype=image_encoder_model.dtype)
                pos_clip_image_embeds = image_encoder_model(clip_image).image_embeds

                clip_image = clip_image_processor(images=neg_images, return_tensors="pt").pixel_values
                clip_image = clip_image.to(device=image_encoder_model.device, dtype=image_encoder_model.dtype)
                neg_clip_image_embeds = image_encoder_model(clip_image).image_embeds

            pos_image_prompt_clip_embeds.append(pos_clip_image_embeds)
            neg_image_prompt_clip_embeds.append(neg_clip_image_embeds)

        return pos_image_prompt_clip_embeds, neg_image_prompt_clip_embeds

    def _prep_ip_adapter_extensions(
        self,
        ip_adapter_fields: list[IPAdapterField],
        pos_image_prompt_clip_embeds: list[torch.Tensor],
        neg_image_prompt_clip_embeds: list[torch.Tensor],
        context: InvocationContext,
        exit_stack: ExitStack,
        dtype: torch.dtype,
    ) -> tuple[list[XLabsIPAdapterExtension], list[XLabsIPAdapterExtension]]:
        pos_ip_adapter_extensions: list[XLabsIPAdapterExtension] = []
        neg_ip_adapter_extensions: list[XLabsIPAdapterExtension] = []
        for ip_adapter_field, pos_image_prompt_clip_embed, neg_image_prompt_clip_embed in zip(
            ip_adapter_fields, pos_image_prompt_clip_embeds, neg_image_prompt_clip_embeds, strict=True
        ):
            ip_adapter_model = exit_stack.enter_context(context.models.load(ip_adapter_field.ip_adapter_model))
            assert isinstance(ip_adapter_model, XlabsIpAdapterFlux)
            ip_adapter_model = ip_adapter_model.to(dtype=dtype)
            if ip_adapter_field.mask is not None:
                raise ValueError("IP-Adapter masks are not yet supported in Flux.")
            ip_adapter_extension = XLabsIPAdapterExtension(
                model=ip_adapter_model,
                image_prompt_clip_embed=pos_image_prompt_clip_embed,
                weight=ip_adapter_field.weight,
                begin_step_percent=ip_adapter_field.begin_step_percent,
                end_step_percent=ip_adapter_field.end_step_percent,
            )
            ip_adapter_extension.run_image_proj(dtype=dtype)
            pos_ip_adapter_extensions.append(ip_adapter_extension)

            ip_adapter_extension = XLabsIPAdapterExtension(
                model=ip_adapter_model,
                image_prompt_clip_embed=neg_image_prompt_clip_embed,
                weight=ip_adapter_field.weight,
                begin_step_percent=ip_adapter_field.begin_step_percent,
                end_step_percent=ip_adapter_field.end_step_percent,
            )
            ip_adapter_extension.run_image_proj(dtype=dtype)
            neg_ip_adapter_extensions.append(ip_adapter_extension)

        return pos_ip_adapter_extensions, neg_ip_adapter_extensions

    def _lora_iterator(self, context: InvocationContext) -> Iterator[Tuple[LoRAModelRaw, float]]:
        for lora in self.transformer.loras:
            lora_info = context.models.load(lora.lora)
@@ -1,89 +0,0 @@
from builtins import float
from typing import List, Literal, Union

from pydantic import field_validator, model_validator
from typing_extensions import Self

from invokeai.app.invocations.baseinvocation import BaseInvocation, Classification, invocation
from invokeai.app.invocations.fields import InputField, UIType
from invokeai.app.invocations.ip_adapter import (
    CLIP_VISION_MODEL_MAP,
    IPAdapterField,
    IPAdapterInvocation,
    IPAdapterOutput,
)
from invokeai.app.invocations.model import ModelIdentifierField
from invokeai.app.invocations.primitives import ImageField
from invokeai.app.invocations.util import validate_begin_end_step, validate_weights
from invokeai.app.services.shared.invocation_context import InvocationContext
from invokeai.backend.model_manager.config import (
    IPAdapterCheckpointConfig,
    IPAdapterInvokeAIConfig,
)


@invocation(
    "flux_ip_adapter",
    title="FLUX IP-Adapter",
    tags=["ip_adapter", "control"],
    category="ip_adapter",
    version="1.0.0",
    classification=Classification.Prototype,
)
class FluxIPAdapterInvocation(BaseInvocation):
    """Collects FLUX IP-Adapter info to pass to other nodes."""

    # FLUXIPAdapterInvocation is based closely on IPAdapterInvocation, but with some unsupported features removed.

    image: ImageField = InputField(description="The IP-Adapter image prompt(s).")
    ip_adapter_model: ModelIdentifierField = InputField(