mirror of

https://github.com/invoke-ai/InvokeAI.git

synced 2026-01-19 15:58:03 -05:00

Compare commits

5 Commits

v3.1.1

...

feat/dynam

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

3985c16183 | ||

|

|

751fe68d16 | ||

|

|

877348af49 | ||

|

|

3dbfee23e6 | ||

|

|

17314ea82d |

38

.github/CODEOWNERS

vendored

38

.github/CODEOWNERS

vendored

@@ -1,34 +1,34 @@

|

||||

# continuous integration

|

||||

/.github/workflows/ @lstein @blessedcoolant @hipsterusername

|

||||

/.github/workflows/ @lstein @blessedcoolant

|

||||

|

||||

# documentation

|

||||

/docs/ @lstein @blessedcoolant @hipsterusername @Millu

|

||||

/mkdocs.yml @lstein @blessedcoolant @hipsterusername @Millu

|

||||

/docs/ @lstein @blessedcoolant @hipsterusername

|

||||

/mkdocs.yml @lstein @blessedcoolant

|

||||

|

||||

# nodes

|

||||

/invokeai/app/ @Kyle0654 @blessedcoolant @psychedelicious @brandonrising @hipsterusername

|

||||

/invokeai/app/ @Kyle0654 @blessedcoolant @psychedelicious @brandonrising

|

||||

|

||||

# installation and configuration

|

||||

/pyproject.toml @lstein @blessedcoolant @hipsterusername

|

||||

/docker/ @lstein @blessedcoolant @hipsterusername

|

||||

/scripts/ @ebr @lstein @hipsterusername

|

||||

/installer/ @lstein @ebr @hipsterusername

|

||||

/invokeai/assets @lstein @ebr @hipsterusername

|

||||

/invokeai/configs @lstein @hipsterusername

|

||||

/invokeai/version @lstein @blessedcoolant @hipsterusername

|

||||

/pyproject.toml @lstein @blessedcoolant

|

||||

/docker/ @lstein @blessedcoolant

|

||||

/scripts/ @ebr @lstein

|

||||

/installer/ @lstein @ebr

|

||||

/invokeai/assets @lstein @ebr

|

||||

/invokeai/configs @lstein

|

||||

/invokeai/version @lstein @blessedcoolant

|

||||

|

||||

# web ui

|

||||

/invokeai/frontend @blessedcoolant @psychedelicious @lstein @maryhipp @hipsterusername

|

||||

/invokeai/backend @blessedcoolant @psychedelicious @lstein @maryhipp @hipsterusername

|

||||

/invokeai/frontend @blessedcoolant @psychedelicious @lstein @maryhipp

|

||||

/invokeai/backend @blessedcoolant @psychedelicious @lstein @maryhipp

|

||||

|

||||

# generation, model management, postprocessing

|

||||

/invokeai/backend @damian0815 @lstein @blessedcoolant @gregghelt2 @StAlKeR7779 @brandonrising @ryanjdick @hipsterusername

|

||||

/invokeai/backend @damian0815 @lstein @blessedcoolant @gregghelt2 @StAlKeR7779 @brandonrising

|

||||

|

||||

# front ends

|

||||

/invokeai/frontend/CLI @lstein @hipsterusername

|

||||

/invokeai/frontend/install @lstein @ebr @hipsterusername

|

||||

/invokeai/frontend/merge @lstein @blessedcoolant @hipsterusername

|

||||

/invokeai/frontend/training @lstein @blessedcoolant @hipsterusername

|

||||

/invokeai/frontend/web @psychedelicious @blessedcoolant @maryhipp @hipsterusername

|

||||

/invokeai/frontend/CLI @lstein

|

||||

/invokeai/frontend/install @lstein @ebr

|

||||

/invokeai/frontend/merge @lstein @blessedcoolant

|

||||

/invokeai/frontend/training @lstein @blessedcoolant

|

||||

/invokeai/frontend/web @psychedelicious @blessedcoolant @maryhipp

|

||||

|

||||

|

||||

|

||||

12

.github/ISSUE_TEMPLATE/FEATURE_REQUEST.yml

vendored

12

.github/ISSUE_TEMPLATE/FEATURE_REQUEST.yml

vendored

@@ -1,5 +1,5 @@

|

||||

name: Feature Request

|

||||

description: Contribute a idea or request a new feature

|

||||

description: Commit a idea or Request a new feature

|

||||

title: '[enhancement]: '

|

||||

labels: ['enhancement']

|

||||

# assignees:

|

||||

@@ -9,14 +9,14 @@ body:

|

||||

- type: markdown

|

||||

attributes:

|

||||

value: |

|

||||

Thanks for taking the time to fill out this feature request!

|

||||

Thanks for taking the time to fill out this Feature request!

|

||||

|

||||

- type: checkboxes

|

||||

attributes:

|

||||

label: Is there an existing issue for this?

|

||||

description: |

|

||||

Please make use of the [search function](https://github.com/invoke-ai/InvokeAI/labels/enhancement)

|

||||

to see if a similar issue already exists for the feature you want to request

|

||||

to see if a simmilar issue already exists for the feature you want to request

|

||||

options:

|

||||

- label: I have searched the existing issues

|

||||

required: true

|

||||

@@ -36,7 +36,7 @@ body:

|

||||

label: What should this feature add?

|

||||

description: Please try to explain the functionality this feature should add

|

||||

placeholder: |

|

||||

Instead of one huge text field, it would be nice to have forms for bug-reports, feature-requests, ...

|

||||

Instead of one huge textfield, it would be nice to have forms for bug-reports, feature-requests, ...

|

||||

Great benefits with automatic labeling, assigning and other functionalitys not available in that form

|

||||

via old-fashioned markdown-templates. I would also love to see the use of a moderator bot 🤖 like

|

||||

https://github.com/marketplace/actions/issue-moderator-with-commands to auto close old issues and other things

|

||||

@@ -51,6 +51,6 @@ body:

|

||||

|

||||

- type: textarea

|

||||

attributes:

|

||||

label: Additional Content

|

||||

label: Aditional Content

|

||||

description: Add any other context or screenshots about the feature request here.

|

||||

placeholder: This is a mockup of the design how I imagine it <screenshot>

|

||||

placeholder: This is a Mockup of the design how I imagine it <screenshot>

|

||||

|

||||

@@ -29,13 +29,12 @@ The first set of things we need to do when creating a new Invocation are -

|

||||

|

||||

- Create a new class that derives from a predefined parent class called

|

||||

`BaseInvocation`.

|

||||

- The name of every Invocation must end with the word `Invocation` in order for

|

||||

it to be recognized as an Invocation.

|

||||

- Every Invocation must have a `docstring` that describes what this Invocation

|

||||

does.

|

||||

- While not strictly required, we suggest every invocation class name ends in

|

||||

"Invocation", eg "CropImageInvocation".

|

||||

- Every Invocation must use the `@invocation` decorator to provide its unique

|

||||

invocation type. You may also provide its title, tags and category using the

|

||||

decorator.

|

||||

- Every Invocation must have a unique `type` field defined which becomes its

|

||||

indentifier.

|

||||

- Invocations are strictly typed. We make use of the native

|

||||

[typing](https://docs.python.org/3/library/typing.html) library and the

|

||||

installed [pydantic](https://pydantic-docs.helpmanual.io/) library for

|

||||

@@ -44,11 +43,12 @@ The first set of things we need to do when creating a new Invocation are -

|

||||

So let us do that.

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocation, invocation

|

||||

from typing import Literal

|

||||

from .baseinvocation import BaseInvocation

|

||||

|

||||

@invocation('resize')

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

```

|

||||

|

||||

That's great.

|

||||

@@ -62,10 +62,8 @@ our Invocation takes.

|

||||

|

||||

### **Inputs**

|

||||

|

||||

Every Invocation input must be defined using the `InputField` function. This is

|

||||

a wrapper around the pydantic `Field` function, which handles a few extra things

|

||||

and provides type hints. Like everything else, this should be strictly typed and

|

||||

defined.

|

||||

Every Invocation input is a pydantic `Field` and like everything else should be

|

||||

strictly typed and defined.

|

||||

|

||||

So let us create these inputs for our Invocation. First up, the `image` input we

|

||||

need. Generally, we can use standard variable types in Python but InvokeAI

|

||||

@@ -78,51 +76,55 @@ create your own custom field types later in this guide. For now, let's go ahead

|

||||

and use it.

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocation, InputField, invocation

|

||||

from .primitives import ImageField

|

||||

from typing import Literal, Union

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocation

|

||||

from ..models.image import ImageField

|

||||

|

||||

@invocation('resize')

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

|

||||

# Inputs

|

||||

image: ImageField = InputField(description="The input image")

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

```

|

||||

|

||||

Let us break down our input code.

|

||||

|

||||

```python

|

||||

image: ImageField = InputField(description="The input image")

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

```

|

||||

|

||||

| Part | Value | Description |

|

||||

| --------- | ------------------------------------------- | ------------------------------------------------------------------------------- |

|

||||

| Name | `image` | The variable that will hold our image |

|

||||

| Type Hint | `ImageField` | The types for our field. Indicates that the image must be an `ImageField` type. |

|

||||

| Field | `InputField(description="The input image")` | The image variable is an `InputField` which needs a description. |

|

||||

| Part | Value | Description |

|

||||

| --------- | ---------------------------------------------------- | -------------------------------------------------------------------------------------------------- |

|

||||

| Name | `image` | The variable that will hold our image |

|

||||

| Type Hint | `Union[ImageField, None]` | The types for our field. Indicates that the image can either be an `ImageField` type or `None` |

|

||||

| Field | `Field(description="The input image", default=None)` | The image variable is a field which needs a description and a default value that we set to `None`. |

|

||||

|

||||

Great. Now let us create our other inputs for `width` and `height`

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocation, InputField, invocation

|

||||

from .primitives import ImageField

|

||||

from typing import Literal, Union

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocation

|

||||

from ..models.image import ImageField

|

||||

|

||||

@invocation('resize')

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

|

||||

# Inputs

|

||||

image: ImageField = InputField(description="The input image")

|

||||

width: int = InputField(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = InputField(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

width: int = Field(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = Field(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

```

|

||||

|

||||

As you might have noticed, we added two new arguments to the `InputField`

|

||||

definition for `width` and `height`, called `gt` and `le`. They stand for

|

||||

_greater than or equal to_ and _less than or equal to_.

|

||||

|

||||

These impose contraints on those fields, and will raise an exception if the

|

||||

values do not meet the constraints. Field constraints are provided by

|

||||

**pydantic**, so anything you see in the **pydantic docs** will work.

|

||||

As you might have noticed, we added two new parameters to the field type for

|

||||

`width` and `height` called `gt` and `le`. These basically stand for _greater

|

||||

than or equal to_ and _less than or equal to_. There are various other param

|

||||

types for field that you can find on the **pydantic** documentation.

|

||||

|

||||

**Note:** _Any time it is possible to define constraints for our field, we

|

||||

should do it so the frontend has more information on how to parse this field._

|

||||

@@ -139,17 +141,20 @@ that are provided by it by InvokeAI.

|

||||

Let us create this function first.

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocation, InputField, invocation

|

||||

from .primitives import ImageField

|

||||

from typing import Literal, Union

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocation, InvocationContext

|

||||

from ..models.image import ImageField

|

||||

|

||||

@invocation('resize')

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

|

||||

# Inputs

|

||||

image: ImageField = InputField(description="The input image")

|

||||

width: int = InputField(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = InputField(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

width: int = Field(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = Field(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

|

||||

def invoke(self, context: InvocationContext):

|

||||

pass

|

||||

@@ -168,18 +173,21 @@ all the necessary info related to image outputs. So let us use that.

|

||||

We will cover how to create your own output types later in this guide.

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocation, InputField, invocation

|

||||

from .primitives import ImageField

|

||||

from typing import Literal, Union

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocation, InvocationContext

|

||||

from ..models.image import ImageField

|

||||

from .image import ImageOutput

|

||||

|

||||

@invocation('resize')

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

|

||||

# Inputs

|

||||

image: ImageField = InputField(description="The input image")

|

||||

width: int = InputField(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = InputField(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

width: int = Field(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = Field(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

|

||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||

pass

|

||||

@@ -187,34 +195,39 @@ class ResizeInvocation(BaseInvocation):

|

||||

|

||||

Perfect. Now that we have our Invocation setup, let us do what we want to do.

|

||||

|

||||

- We will first load the image using one of the services provided by InvokeAI to

|

||||

load the image.

|

||||

- We will first load the image. Generally we do this using the `PIL` library but

|

||||

we can use one of the services provided by InvokeAI to load the image.

|

||||

- We will resize the image using `PIL` to our input data.

|

||||

- We will output this image in the format we set above.

|

||||

|

||||

So let's do that.

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocation, InputField, invocation

|

||||

from .primitives import ImageField

|

||||

from typing import Literal, Union

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocation, InvocationContext

|

||||

from ..models.image import ImageField, ResourceOrigin, ImageCategory

|

||||

from .image import ImageOutput

|

||||

|

||||

@invocation("resize")

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

"""Resizes an image"""

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

|

||||

image: ImageField = InputField(description="The input image")

|

||||

width: int = InputField(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = InputField(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

# Inputs

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

width: int = Field(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = Field(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

|

||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||

# Load the image using InvokeAI's predefined Image Service. Returns the PIL image.

|

||||

image = context.services.images.get_pil_image(self.image.image_name)

|

||||

# Load the image using InvokeAI's predefined Image Service.

|

||||

image = context.services.images.get_pil_image(self.image.image_origin, self.image.image_name)

|

||||

|

||||

# Resizing the image

|

||||

# Because we used the above service, we already have a PIL image. So we can simply resize.

|

||||

resized_image = image.resize((self.width, self.height))

|

||||

|

||||

# Save the image using InvokeAI's predefined Image Service. Returns the prepared PIL image.

|

||||

# Preparing the image for output using InvokeAI's predefined Image Service.

|

||||

output_image = context.services.images.create(

|

||||

image=resized_image,

|

||||

image_origin=ResourceOrigin.INTERNAL,

|

||||

@@ -228,6 +241,7 @@ class ResizeInvocation(BaseInvocation):

|

||||

return ImageOutput(

|

||||

image=ImageField(

|

||||

image_name=output_image.image_name,

|

||||

image_origin=output_image.image_origin,

|

||||

),

|

||||

width=output_image.width,

|

||||

height=output_image.height,

|

||||

@@ -239,24 +253,6 @@ certain way that the images need to be dispatched in order to be stored and read

|

||||

correctly. In 99% of the cases when dealing with an image output, you can simply

|

||||

copy-paste the template above.

|

||||

|

||||

### Customization

|

||||

|

||||

We can use the `@invocation` decorator to provide some additional info to the

|

||||

UI, like a custom title, tags and category.

|

||||

|

||||

We also encourage providing a version. This must be a

|

||||

[semver](https://semver.org/) version string ("$MAJOR.$MINOR.$PATCH"). The UI

|

||||

will let users know if their workflow is using a mismatched version of the node.

|

||||

|

||||

```python

|

||||

@invocation("resize", title="My Resizer", tags=["resize", "image"], category="My Invocations", version="1.0.0")

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

"""Resizes an image"""

|

||||

|

||||

image: ImageField = InputField(description="The input image")

|

||||

...

|

||||

```

|

||||

|

||||

That's it. You made your own **Resize Invocation**.

|

||||

|

||||

## Result

|

||||

@@ -275,55 +271,10 @@ new Invocation ready to be used.

|

||||

|

||||

|

||||

## Contributing Nodes

|

||||

|

||||

Once you've created a Node, the next step is to share it with the community! The

|

||||

best way to do this is to submit a Pull Request to add the Node to the

|

||||

[Community Nodes](nodes/communityNodes) list. If you're not sure how to do that,

|

||||

take a look a at our [contributing nodes overview](contributingNodes).

|

||||

Once you've created a Node, the next step is to share it with the community! The best way to do this is to submit a Pull Request to add the Node to the [Community Nodes](nodes/communityNodes) list. If you're not sure how to do that, take a look a at our [contributing nodes overview](contributingNodes).

|

||||

|

||||

## Advanced

|

||||

|

||||

### Custom Output Types

|

||||

|

||||

Like with custom inputs, sometimes you might find yourself needing custom

|

||||

outputs that InvokeAI does not provide. We can easily set one up.

|

||||

|

||||

Now that you are familiar with Invocations and Inputs, let us use that knowledge

|

||||

to create an output that has an `image` field, a `color` field and a `string`

|

||||

field.

|

||||

|

||||

- An invocation output is a class that derives from the parent class of

|

||||

`BaseInvocationOutput`.

|

||||

- All invocation outputs must use the `@invocation_output` decorator to provide

|

||||

their unique output type.

|

||||

- Output fields must use the provided `OutputField` function. This is very

|

||||

similar to the `InputField` function described earlier - it's a wrapper around

|

||||

`pydantic`'s `Field()`.

|

||||

- It is not mandatory but we recommend using names ending with `Output` for

|

||||

output types.

|

||||

- It is not mandatory but we highly recommend adding a `docstring` to describe

|

||||

what your output type is for.

|

||||

|

||||

Now that we know the basic rules for creating a new output type, let us go ahead

|

||||

and make it.

|

||||

|

||||

```python

|

||||

from .baseinvocation import BaseInvocationOutput, OutputField, invocation_output

|

||||

from .primitives import ImageField, ColorField

|

||||

|

||||

@invocation_output('image_color_string_output')

|

||||

class ImageColorStringOutput(BaseInvocationOutput):

|

||||

'''Base class for nodes that output a single image'''

|

||||

|

||||

image: ImageField = OutputField(description="The image")

|

||||

color: ColorField = OutputField(description="The color")

|

||||

text: str = OutputField(description="The string")

|

||||

```

|

||||

|

||||

That's all there is to it.

|

||||

|

||||

<!-- TODO: DANGER - we probably do not want people to create their own field types, because this requires a lot of work on the frontend to accomodate.

|

||||

|

||||

### Custom Input Fields

|

||||

|

||||

Now that you know how to create your own Invocations, let us dive into slightly

|

||||

@@ -378,6 +329,172 @@ like this.

|

||||

color: ColorField = Field(default=ColorField(r=0, g=0, b=0, a=0), description='Background color of an image')

|

||||

```

|

||||

|

||||

**Extra Config**

|

||||

|

||||

All input fields also take an additional `Config` class that you can use to do

|

||||

various advanced things like setting required parameters and etc.

|

||||

|

||||

Let us do that for our _ColorField_ and enforce all the values because we did

|

||||

not define any defaults for our fields.

|

||||

|

||||

```python

|

||||

class ColorField(BaseModel):

|

||||

'''A field that holds the rgba values of a color'''

|

||||

r: int = Field(ge=0, le=255, description="The red channel")

|

||||

g: int = Field(ge=0, le=255, description="The green channel")

|

||||

b: int = Field(ge=0, le=255, description="The blue channel")

|

||||

a: int = Field(ge=0, le=255, description="The alpha channel")

|

||||

|

||||

class Config:

|

||||

schema_extra = {"required": ["r", "g", "b", "a"]}

|

||||

```

|

||||

|

||||

Now it becomes mandatory for the user to supply all the values required by our

|

||||

input field.

|

||||

|

||||

We will discuss the `Config` class in extra detail later in this guide and how

|

||||

you can use it to make your Invocations more robust.

|

||||

|

||||

### Custom Output Types

|

||||

|

||||

Like with custom inputs, sometimes you might find yourself needing custom

|

||||

outputs that InvokeAI does not provide. We can easily set one up.

|

||||

|

||||

Now that you are familiar with Invocations and Inputs, let us use that knowledge

|

||||

to put together a custom output type for an Invocation that returns _width_,

|

||||

_height_ and _background_color_ that we need to create a blank image.

|

||||

|

||||

- A custom output type is a class that derives from the parent class of

|

||||

`BaseInvocationOutput`.

|

||||

- It is not mandatory but we recommend using names ending with `Output` for

|

||||

output types. So we'll call our class `BlankImageOutput`

|

||||

- It is not mandatory but we highly recommend adding a `docstring` to describe

|

||||

what your output type is for.

|

||||

- Like Invocations, each output type should have a `type` variable that is

|

||||

**unique**

|

||||

|

||||

Now that we know the basic rules for creating a new output type, let us go ahead

|

||||

and make it.

|

||||

|

||||

```python

|

||||

from typing import Literal

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocationOutput

|

||||

|

||||

class BlankImageOutput(BaseInvocationOutput):

|

||||

'''Base output type for creating a blank image'''

|

||||

type: Literal['blank_image_output'] = 'blank_image_output'

|

||||

|

||||

# Inputs

|

||||

width: int = Field(description='Width of blank image')

|

||||

height: int = Field(description='Height of blank image')

|

||||

bg_color: ColorField = Field(description='Background color of blank image')

|

||||

|

||||

class Config:

|

||||

schema_extra = {"required": ["type", "width", "height", "bg_color"]}

|

||||

```

|

||||

|

||||

All set. We now have an output type that requires what we need to create a

|

||||

blank_image. And if you noticed it, we even used the `Config` class to ensure

|

||||

the fields are required.

|

||||

|

||||

### Custom Configuration

|

||||

|

||||

As you might have noticed when making inputs and outputs, we used a class called

|

||||

`Config` from _pydantic_ to further customize them. Because our inputs and

|

||||

outputs essentially inherit from _pydantic_'s `BaseModel` class, all

|

||||

[configuration options](https://docs.pydantic.dev/latest/usage/schema/#schema-customization)

|

||||

that are valid for _pydantic_ classes are also valid for our inputs and outputs.

|

||||

You can do the same for your Invocations too but InvokeAI makes our life a

|

||||

little bit easier on that end.

|

||||

|

||||

InvokeAI provides a custom configuration class called `InvocationConfig`

|

||||

particularly for configuring Invocations. This is exactly the same as the raw

|

||||

`Config` class from _pydantic_ with some extra stuff on top to help faciliate

|

||||

parsing of the scheme in the frontend UI.

|

||||

|

||||

At the current moment, tihs `InvocationConfig` class is further improved with

|

||||

the following features related the `ui`.

|

||||

|

||||

| Config Option | Field Type | Example |

|

||||

| ------------- | ------------------------------------------------------------------------------------------------------------- | --------------------------------------------------------------------------------------------------------------------- |

|

||||

| type_hints | `Dict[str, Literal["integer", "float", "boolean", "string", "enum", "image", "latents", "model", "control"]]` | `type_hint: "model"` provides type hints related to the model like displaying a list of available models |

|

||||

| tags | `List[str]` | `tags: ['resize', 'image']` will classify your invocation under the tags of resize and image. |

|

||||

| title | `str` | `title: 'Resize Image` will rename your to this custom title rather than infer from the name of the Invocation class. |

|

||||

|

||||

So let us update your `ResizeInvocation` with some extra configuration and see

|

||||

how that works.

|

||||

|

||||

```python

|

||||

from typing import Literal, Union

|

||||

from pydantic import Field

|

||||

|

||||

from .baseinvocation import BaseInvocation, InvocationContext, InvocationConfig

|

||||

from ..models.image import ImageField, ResourceOrigin, ImageCategory

|

||||

from .image import ImageOutput

|

||||

|

||||

class ResizeInvocation(BaseInvocation):

|

||||

'''Resizes an image'''

|

||||

type: Literal['resize'] = 'resize'

|

||||

|

||||

# Inputs

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

width: int = Field(default=512, ge=64, le=2048, description="Width of the new image")

|

||||

height: int = Field(default=512, ge=64, le=2048, description="Height of the new image")

|

||||

|

||||

class Config(InvocationConfig):

|

||||

schema_extra: {

|

||||

ui: {

|

||||

tags: ['resize', 'image'],

|

||||

title: ['My Custom Resize']

|

||||

}

|

||||

}

|

||||

|

||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||

# Load the image using InvokeAI's predefined Image Service.

|

||||

image = context.services.images.get_pil_image(self.image.image_origin, self.image.image_name)

|

||||

|

||||

# Resizing the image

|

||||

# Because we used the above service, we already have a PIL image. So we can simply resize.

|

||||

resized_image = image.resize((self.width, self.height))

|

||||

|

||||

# Preparing the image for output using InvokeAI's predefined Image Service.

|

||||

output_image = context.services.images.create(

|

||||

image=resized_image,

|

||||

image_origin=ResourceOrigin.INTERNAL,

|

||||

image_category=ImageCategory.GENERAL,

|

||||

node_id=self.id,

|

||||

session_id=context.graph_execution_state_id,

|

||||

is_intermediate=self.is_intermediate,

|

||||

)

|

||||

|

||||

# Returning the Image

|

||||

return ImageOutput(

|

||||

image=ImageField(

|

||||

image_name=output_image.image_name,

|

||||

image_origin=output_image.image_origin,

|

||||

),

|

||||

width=output_image.width,

|

||||

height=output_image.height,

|

||||

)

|

||||

```

|

||||

|

||||

We now customized our code to let the frontend know that our Invocation falls

|

||||

under `resize` and `image` categories. So when the user searches for these

|

||||

particular words, our Invocation will show up too.

|

||||

|

||||

We also set a custom title for our Invocation. So instead of being called

|

||||

`Resize`, it will be called `My Custom Resize`.

|

||||

|

||||

As simple as that.

|

||||

|

||||

As time goes by, InvokeAI will further improve and add more customizability for

|

||||

Invocation configuration. We will have more documentation regarding this at a

|

||||

later time.

|

||||

|

||||

# **[TODO]**

|

||||

|

||||

### Custom Components For Frontend

|

||||

|

||||

Every backend input type should have a corresponding frontend component so the

|

||||

@@ -396,4 +513,282 @@ Let us create a new component for our custom color field we created above. When

|

||||

we use a color field, let us say we want the UI to display a color picker for

|

||||

the user to pick from rather than entering values. That is what we will build

|

||||

now.

|

||||

-->

|

||||

|

||||

---

|

||||

|

||||

<!-- # OLD -- TO BE DELETED OR MOVED LATER

|

||||

|

||||

---

|

||||

|

||||

## Creating a new invocation

|

||||

|

||||

To create a new invocation, either find the appropriate module file in

|

||||

`/ldm/invoke/app/invocations` to add your invocation to, or create a new one in

|

||||

that folder. All invocations in that folder will be discovered and made

|

||||

available to the CLI and API automatically. Invocations make use of

|

||||

[typing](https://docs.python.org/3/library/typing.html) and

|

||||

[pydantic](https://pydantic-docs.helpmanual.io/) for validation and integration

|

||||

into the CLI and API.

|

||||

|

||||

An invocation looks like this:

|

||||

|

||||

```py

|

||||

class UpscaleInvocation(BaseInvocation):

|

||||

"""Upscales an image."""

|

||||

|

||||

# fmt: off

|

||||

type: Literal["upscale"] = "upscale"

|

||||

|

||||

# Inputs

|

||||

image: Union[ImageField, None] = Field(description="The input image", default=None)

|

||||

strength: float = Field(default=0.75, gt=0, le=1, description="The strength")

|

||||

level: Literal[2, 4] = Field(default=2, description="The upscale level")

|

||||

# fmt: on

|

||||

|

||||

# Schema customisation

|

||||

class Config(InvocationConfig):

|

||||

schema_extra = {

|

||||

"ui": {

|

||||

"tags": ["upscaling", "image"],

|

||||

},

|

||||

}

|

||||

|

||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||

image = context.services.images.get_pil_image(

|

||||

self.image.image_origin, self.image.image_name

|

||||

)

|

||||

results = context.services.restoration.upscale_and_reconstruct(

|

||||

image_list=[[image, 0]],

|

||||

upscale=(self.level, self.strength),

|

||||

strength=0.0, # GFPGAN strength

|

||||

save_original=False,

|

||||

image_callback=None,

|

||||

)

|

||||

|

||||

# Results are image and seed, unwrap for now

|

||||

# TODO: can this return multiple results?

|

||||

image_dto = context.services.images.create(

|

||||

image=results[0][0],

|

||||

image_origin=ResourceOrigin.INTERNAL,

|

||||

image_category=ImageCategory.GENERAL,

|

||||

node_id=self.id,

|

||||

session_id=context.graph_execution_state_id,

|

||||

is_intermediate=self.is_intermediate,

|

||||

)

|

||||

|

||||

return ImageOutput(

|

||||

image=ImageField(

|

||||

image_name=image_dto.image_name,

|

||||

image_origin=image_dto.image_origin,

|

||||

),

|

||||

width=image_dto.width,

|

||||

height=image_dto.height,

|

||||

)

|

||||

|

||||

```

|

||||

|

||||

Each portion is important to implement correctly.

|

||||

|

||||

### Class definition and type

|

||||

|

||||

```py

|

||||

class UpscaleInvocation(BaseInvocation):

|

||||

"""Upscales an image."""

|

||||

type: Literal['upscale'] = 'upscale'

|

||||

```

|

||||

|

||||

All invocations must derive from `BaseInvocation`. They should have a docstring

|

||||

that declares what they do in a single, short line. They should also have a

|

||||

`type` with a type hint that's `Literal["command_name"]`, where `command_name`

|

||||

is what the user will type on the CLI or use in the API to create this

|

||||

invocation. The `command_name` must be unique. The `type` must be assigned to

|

||||

the value of the literal in the type hint.

|

||||

|

||||

### Inputs

|

||||

|

||||

```py

|

||||

# Inputs

|

||||

image: Union[ImageField,None] = Field(description="The input image")

|

||||

strength: float = Field(default=0.75, gt=0, le=1, description="The strength")

|

||||

level: Literal[2,4] = Field(default=2, description="The upscale level")

|

||||

```

|

||||

|

||||

Inputs consist of three parts: a name, a type hint, and a `Field` with default,

|

||||

description, and validation information. For example:

|

||||

|

||||

| Part | Value | Description |

|

||||

| --------- | ------------------------------------------------------------- | ------------------------------------------------------------------------------------------------------------------------- |

|

||||

| Name | `strength` | This field is referred to as `strength` |

|

||||

| Type Hint | `float` | This field must be of type `float` |

|

||||

| Field | `Field(default=0.75, gt=0, le=1, description="The strength")` | The default value is `0.75`, the value must be in the range (0,1], and help text will show "The strength" for this field. |

|

||||

|

||||

Notice that `image` has type `Union[ImageField,None]`. The `Union` allows this

|

||||

field to be parsed with `None` as a value, which enables linking to previous

|

||||

invocations. All fields should either provide a default value or allow `None` as

|

||||

a value, so that they can be overwritten with a linked output from another

|

||||

invocation.

|

||||

|

||||

The special type `ImageField` is also used here. All images are passed as

|

||||

`ImageField`, which protects them from pydantic validation errors (since images

|

||||

only ever come from links).

|

||||

|

||||

Finally, note that for all linking, the `type` of the linked fields must match.

|

||||

If the `name` also matches, then the field can be **automatically linked** to a

|

||||

previous invocation by name and matching.

|

||||

|

||||

### Config

|

||||

|

||||

```py

|

||||

# Schema customisation

|

||||

class Config(InvocationConfig):

|

||||

schema_extra = {

|

||||

"ui": {

|

||||

"tags": ["upscaling", "image"],

|

||||

},

|

||||

}

|

||||

```

|

||||

|

||||

This is an optional configuration for the invocation. It inherits from

|

||||

pydantic's model `Config` class, and it used primarily to customize the

|

||||

autogenerated OpenAPI schema.

|

||||

|

||||

The UI relies on the OpenAPI schema in two ways:

|

||||

|

||||

- An API client & Typescript types are generated from it. This happens at build

|

||||

time.

|

||||

- The node editor parses the schema into a template used by the UI to create the

|

||||

node editor UI. This parsing happens at runtime.

|

||||

|

||||

In this example, a `ui` key has been added to the `schema_extra` dict to provide

|

||||

some tags for the UI, to facilitate filtering nodes.

|

||||

|

||||

See the Schema Generation section below for more information.

|

||||

|

||||

### Invoke Function

|

||||

|

||||

```py

|

||||

def invoke(self, context: InvocationContext) -> ImageOutput:

|

||||

image = context.services.images.get_pil_image(

|

||||

self.image.image_origin, self.image.image_name

|

||||

)

|

||||

results = context.services.restoration.upscale_and_reconstruct(

|

||||

image_list=[[image, 0]],

|

||||

upscale=(self.level, self.strength),

|

||||

strength=0.0, # GFPGAN strength

|

||||

save_original=False,

|

||||

image_callback=None,

|

||||

)

|

||||

|

||||

# Results are image and seed, unwrap for now

|

||||

# TODO: can this return multiple results?

|

||||

image_dto = context.services.images.create(

|

||||

image=results[0][0],

|

||||

image_origin=ResourceOrigin.INTERNAL,

|

||||

image_category=ImageCategory.GENERAL,

|

||||

node_id=self.id,

|

||||

session_id=context.graph_execution_state_id,

|

||||

is_intermediate=self.is_intermediate,

|

||||

)

|

||||

|

||||

return ImageOutput(

|

||||

image=ImageField(

|

||||

image_name=image_dto.image_name,

|

||||

image_origin=image_dto.image_origin,

|

||||

),

|

||||

width=image_dto.width,

|

||||

height=image_dto.height,

|

||||

)

|

||||

```

|

||||

|

||||

The `invoke` function is the last portion of an invocation. It is provided an

|

||||

`InvocationContext` which contains services to perform work as well as a

|

||||

`session_id` for use as needed. It should return a class with output values that

|

||||

derives from `BaseInvocationOutput`.

|

||||

|

||||

Before being called, the invocation will have all of its fields set from

|

||||

defaults, inputs, and finally links (overriding in that order).

|

||||

|

||||

Assume that this invocation may be running simultaneously with other

|

||||

invocations, may be running on another machine, or in other interesting

|

||||

scenarios. If you need functionality, please provide it as a service in the

|

||||

`InvocationServices` class, and make sure it can be overridden.

|

||||

|

||||

### Outputs

|

||||

|

||||

```py

|

||||

class ImageOutput(BaseInvocationOutput):

|

||||

"""Base class for invocations that output an image"""

|

||||

|

||||

# fmt: off

|

||||

type: Literal["image_output"] = "image_output"

|

||||

image: ImageField = Field(default=None, description="The output image")

|

||||

width: int = Field(description="The width of the image in pixels")

|

||||

height: int = Field(description="The height of the image in pixels")

|

||||

# fmt: on

|

||||

|

||||

class Config:

|

||||

schema_extra = {"required": ["type", "image", "width", "height"]}

|

||||

```

|

||||

|

||||

Output classes look like an invocation class without the invoke method. Prefer

|

||||

to use an existing output class if available, and prefer to name inputs the same

|

||||

as outputs when possible, to promote automatic invocation linking.

|

||||

|

||||

## Schema Generation

|

||||

|

||||

Invocation, output and related classes are used to generate an OpenAPI schema.

|

||||

|

||||

### Required Properties

|

||||

|

||||

The schema generation treat all properties with default values as optional. This

|

||||

makes sense internally, but when when using these classes via the generated

|

||||

schema, we end up with e.g. the `ImageOutput` class having its `image` property

|

||||

marked as optional.

|

||||

|

||||

We know that this property will always be present, so the additional logic

|

||||

needed to always check if the property exists adds a lot of extraneous cruft.

|

||||

|

||||

To fix this, we can leverage `pydantic`'s

|

||||

[schema customisation](https://docs.pydantic.dev/usage/schema/#schema-customization)

|

||||

to mark properties that we know will always be present as required.

|

||||

|

||||

Here's that `ImageOutput` class, without the needed schema customisation:

|

||||

|

||||

```python

|

||||

class ImageOutput(BaseInvocationOutput):

|

||||

"""Base class for invocations that output an image"""

|

||||

|

||||

# fmt: off

|

||||

type: Literal["image_output"] = "image_output"

|

||||

image: ImageField = Field(default=None, description="The output image")

|

||||

width: int = Field(description="The width of the image in pixels")

|

||||

height: int = Field(description="The height of the image in pixels")

|

||||

# fmt: on

|

||||

```

|

||||

|

||||

The OpenAPI schema that results from this `ImageOutput` will have the `type`,

|

||||

`image`, `width` and `height` properties marked as optional, even though we know

|

||||

they will always have a value.

|

||||

|

||||

```python

|

||||

class ImageOutput(BaseInvocationOutput):

|

||||

"""Base class for invocations that output an image"""

|

||||

|

||||

# fmt: off

|

||||

type: Literal["image_output"] = "image_output"

|

||||

image: ImageField = Field(default=None, description="The output image")

|

||||

width: int = Field(description="The width of the image in pixels")

|

||||

height: int = Field(description="The height of the image in pixels")

|

||||

# fmt: on

|

||||

|

||||

# Add schema customization

|

||||

class Config:

|

||||

schema_extra = {"required": ["type", "image", "width", "height"]}

|

||||

```

|

||||

|

||||

With the customization in place, the schema will now show these properties as

|

||||

required, obviating the need for extensive null checks in client code.

|

||||

|

||||

See this `pydantic` issue for discussion on this solution:

|

||||

<https://github.com/pydantic/pydantic/discussions/4577> -->

|

||||

|

||||

|

||||

@@ -57,30 +57,6 @@ familiar with containerization technologies such as Docker.

|

||||

For downloads and instructions, visit the [NVIDIA CUDA Container

|

||||

Runtime Site](https://developer.nvidia.com/nvidia-container-runtime)

|

||||

|

||||

### cuDNN Installation for 40/30 Series Optimization* (Optional)

|

||||

|

||||

1. Find the InvokeAI folder

|

||||

2. Click on .venv folder - e.g., YourInvokeFolderHere\\.venv

|

||||

3. Click on Lib folder - e.g., YourInvokeFolderHere\\.venv\Lib

|

||||

4. Click on site-packages folder - e.g., YourInvokeFolderHere\\.venv\Lib\site-packages

|

||||

5. Click on Torch directory - e.g., YourInvokeFolderHere\InvokeAI\\.venv\Lib\site-packages\torch

|

||||

6. Click on the lib folder - e.g., YourInvokeFolderHere\\.venv\Lib\site-packages\torch\lib

|

||||

7. Copy everything inside the folder and save it elsewhere as a backup.

|

||||

8. Go to __https://developer.nvidia.com/cudnn__

|

||||

9. Login or create an Account.

|

||||

10. Choose the newer version of cuDNN. **Note:**

|

||||

There are two versions, 11.x or 12.x for the differents architectures(Turing,Maxwell Etc...) of GPUs.

|

||||

You can find which version you should download from [this link](https://docs.nvidia.com/deeplearning/cudnn/support-matrix/index.html).

|

||||

13. Download the latest version and extract it from the download location

|

||||

14. Find the bin folder E\cudnn-windows-x86_64-__Whatever Version__\bin

|

||||

15. Copy and paste the .dll files into YourInvokeFolderHere\\.venv\Lib\site-packages\torch\lib **Make sure to copy, and not move the files**

|

||||

16. If prompted, replace any existing files

|

||||

|

||||

**Notes:**

|

||||

* If no change is seen or any issues are encountered, follow the same steps as above and paste the torch/lib backup folder you made earlier and replace it. If you didn't make a backup, you can also uninstall and reinstall torch through the command line to repair this folder.

|

||||

* This optimization is intended for the newer version of graphics card (40/30 series) but results have been seen with older graphics card.

|

||||

|

||||

|

||||

### Torch Installation

|

||||

|

||||

When installing torch and torchvision manually with `pip`, remember to provide

|

||||

|

||||

@@ -22,28 +22,16 @@ To use a community node graph, download the the `.json` node graph file and load

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

<hr>

|

||||

|

||||

### Ideal Size

|

||||

|

||||

**Description:** This node calculates an ideal image size for a first pass of a multi-pass upscaling. The aim is to avoid duplication that results from choosing a size larger than the model is capable of.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/ideal-size-node

|

||||

|

||||

--------------------------------

|

||||

### Film Grain

|

||||

<hr>

|

||||

|

||||

**Description:** This node adds a film grain effect to the input image based on the weights, seeds, and blur radii parameters. It works with RGB input images only.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/film-grain-node

|

||||

|

||||

--------------------------------

|

||||

### Image Picker

|

||||

|

||||

**Description:** This InvokeAI node takes in a collection of images and randomly chooses one. This can be useful when you have a number of poses to choose from for a ControlNet node, or a number of input images for another purpose.

|

||||

|

||||

**Node Link:** https://github.com/JPPhoto/image-picker-node

|

||||

|

||||

--------------------------------

|

||||

### Retroize

|

||||

|

||||

**Description:** Retroize is a collection of nodes for InvokeAI to "Retroize" images. Any image can be given a fresh coat of retro paint with these nodes, either from your gallery or from within the graph itself. It includes nodes to pixelize, quantize, palettize, and ditherize images; as well as to retrieve palettes from existing images.

|

||||

@@ -67,135 +55,9 @@ Generated Prompt: An enchanted weapon will be usable by any character regardless

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

### Load Video Frame

|

||||

|

||||

**Description:** This is a video frame image provider + indexer/video creation nodes for hooking up to iterators and ranges and ControlNets and such for invokeAI node experimentation. Think animation + ControlNet outputs.

|

||||

|

||||

**Node Link:** https://github.com/helix4u/load_video_frame

|

||||

|

||||

**Example Node Graph:** https://github.com/helix4u/load_video_frame/blob/main/Example_Workflow.json

|

||||

|

||||

**Output Example:**

|

||||

=======

|

||||

|

||||

[Full mp4 of Example Output test.mp4](https://github.com/helix4u/load_video_frame/blob/main/test.mp4)

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Oobabooga

|

||||

|

||||

**Description:** asks a local LLM running in Oobabooga's Text-Generation-Webui to write a prompt based on the user input.

|

||||

|

||||

**Link:** https://github.com/sammyf/oobabooga-node

|

||||

|

||||

|

||||

**Example:**

|

||||

|

||||

"describe a new mystical creature in its natural environment"

|

||||

|

||||

*can return*

|

||||

|

||||

"The mystical creature I am describing to you is called the "Glimmerwing". It is a majestic, iridescent being that inhabits the depths of the most enchanted forests and glimmering lakes. Its body is covered in shimmering scales that reflect every color of the rainbow, and it has delicate, translucent wings that sparkle like diamonds in the sunlight. The Glimmerwing's home is a crystal-clear lake, surrounded by towering trees with leaves that shimmer like jewels. In this serene environment, the Glimmerwing spends its days swimming gracefully through the water, chasing schools of glittering fish and playing with the gentle ripples of the lake's surface.

|

||||

As the sun sets, the Glimmerwing perches on a branch of one of the trees, spreading its wings to catch the last rays of light. The creature's scales glow softly, casting a rainbow of colors across the forest floor. The Glimmerwing sings a haunting melody, its voice echoing through the stillness of the night air. Its song is said to have the power to heal the sick and bring peace to troubled souls. Those who are lucky enough to hear the Glimmerwing's song are forever changed by its beauty and grace."

|

||||

|

||||

|

||||

|

||||

**Requirement**

|

||||

|

||||

a Text-Generation-Webui instance (might work remotely too, but I never tried it) and obviously InvokeAI 3.x

|

||||

|

||||

**Note**

|

||||

|

||||

This node works best with SDXL models, especially as the style can be described independantly of the LLM's output.

|

||||

|

||||

--------------------------------

|

||||

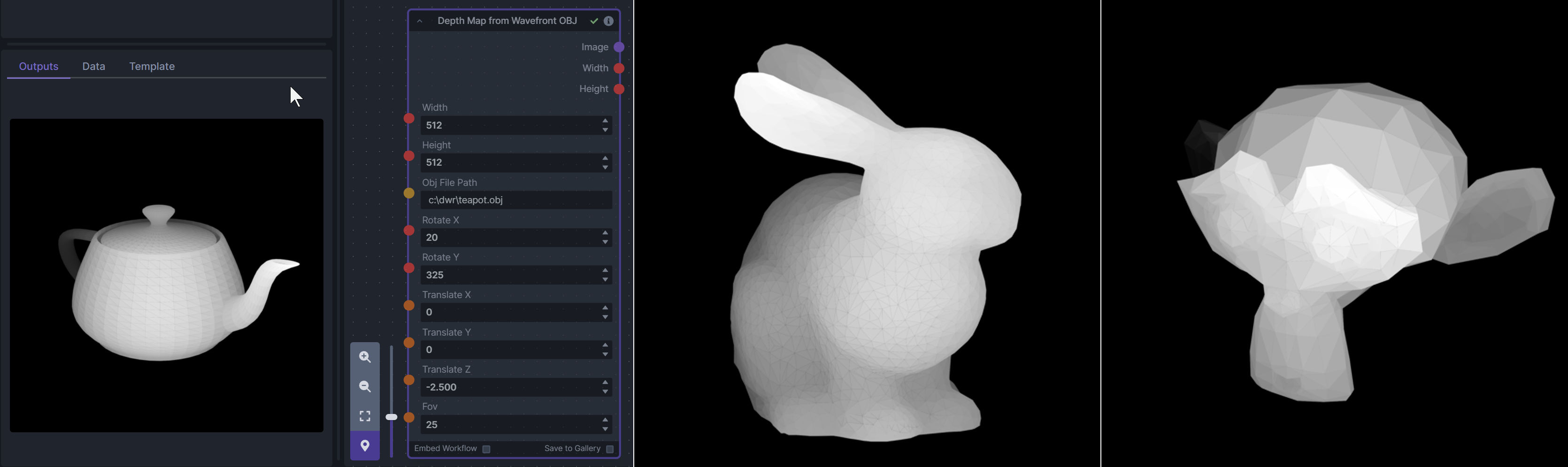

### Depth Map from Wavefront OBJ

|

||||

|

||||

**Description:** Render depth maps from Wavefront .obj files (triangulated) using this simple 3D renderer utilizing numpy and matplotlib to compute and color the scene. There are simple parameters to change the FOV, camera position, and model orientation.

|

||||

|

||||

To be imported, an .obj must use triangulated meshes, so make sure to enable that option if exporting from a 3D modeling program. This renderer makes each triangle a solid color based on its average depth, so it will cause anomalies if your .obj has large triangles. In Blender, the Remesh modifier can be helpful to subdivide a mesh into small pieces that work well given these limitations.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/depth-from-obj-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

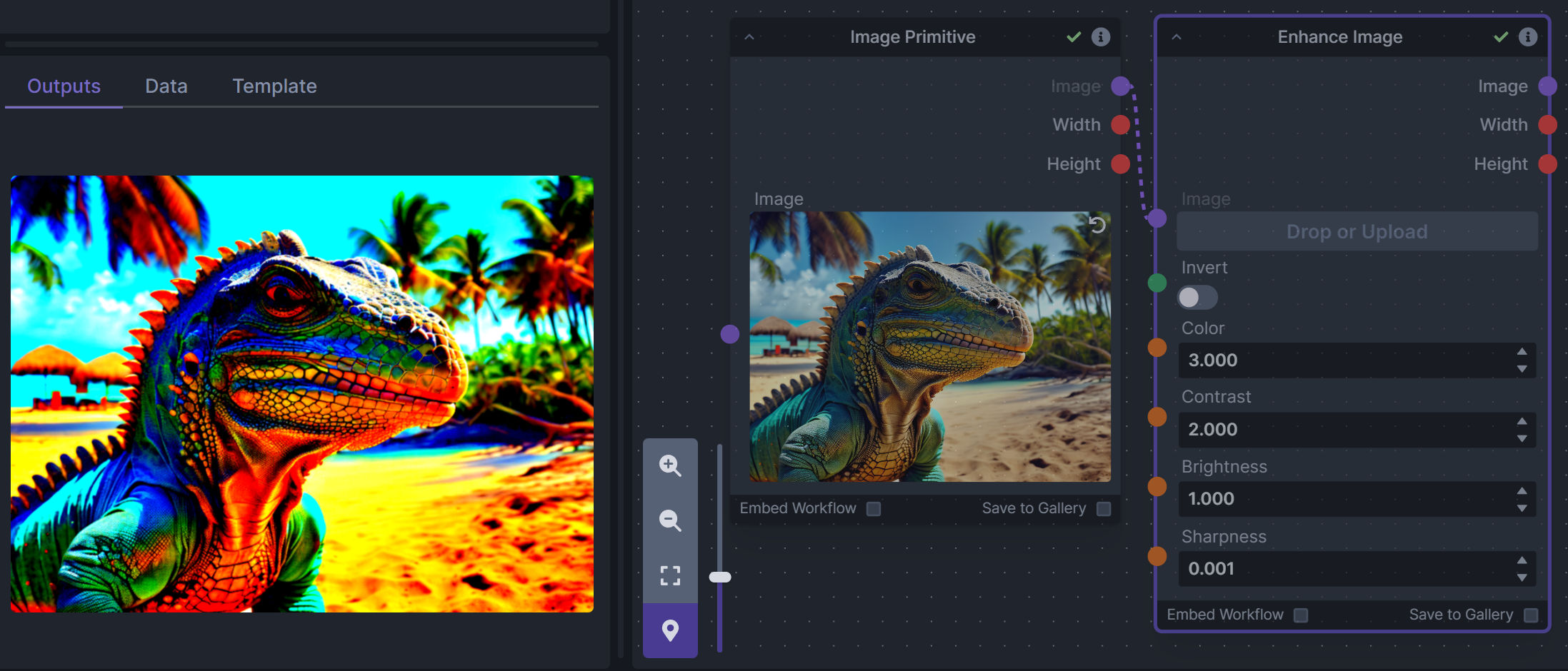

### Enhance Image (simple adjustments)

|

||||

|

||||

**Description:** Boost or reduce color saturation, contrast, brightness, sharpness, or invert colors of any image at any stage with this simple wrapper for pillow [PIL]'s ImageEnhance module.

|

||||

|

||||

Color inversion is toggled with a simple switch, while each of the four enhancer modes are activated by entering a value other than 1 in each corresponding input field. Values less than 1 will reduce the corresponding property, while values greater than 1 will enhance it.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/image-enhance-node

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

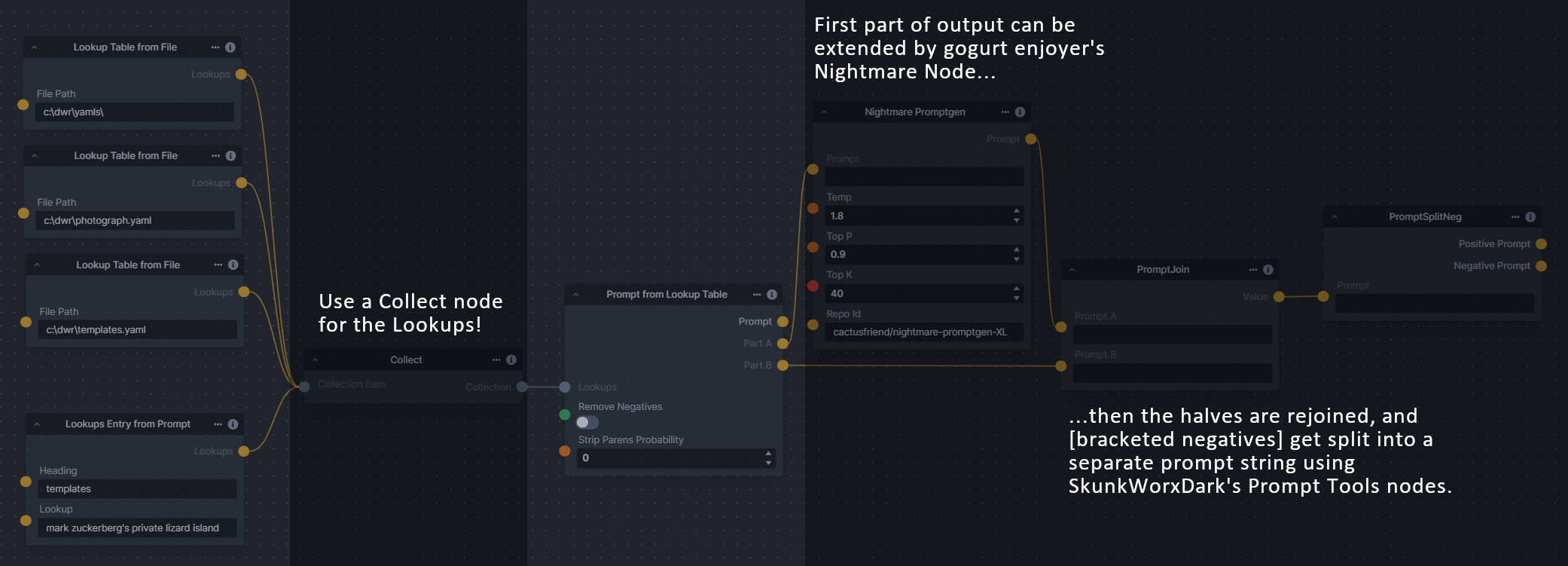

### Generative Grammar-Based Prompt Nodes

|

||||

|

||||

**Description:** This set of 3 nodes generates prompts from simple user-defined grammar rules (loaded from custom files - examples provided below). The prompts are made by recursively expanding a special template string, replacing nonterminal "parts-of-speech" until no more nonterminal terms remain in the string.

|

||||

|

||||

This includes 3 Nodes:

|

||||

- *Lookup Table from File* - loads a YAML file "prompt" section (or of a whole folder of YAML's) into a JSON-ified dictionary (Lookups output)

|

||||

- *Lookups Entry from Prompt* - places a single entry in a new Lookups output under the specified heading

|

||||

- *Prompt from Lookup Table* - uses a Collection of Lookups as grammar rules from which to randomly generate prompts.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/generative-grammar-prompt-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

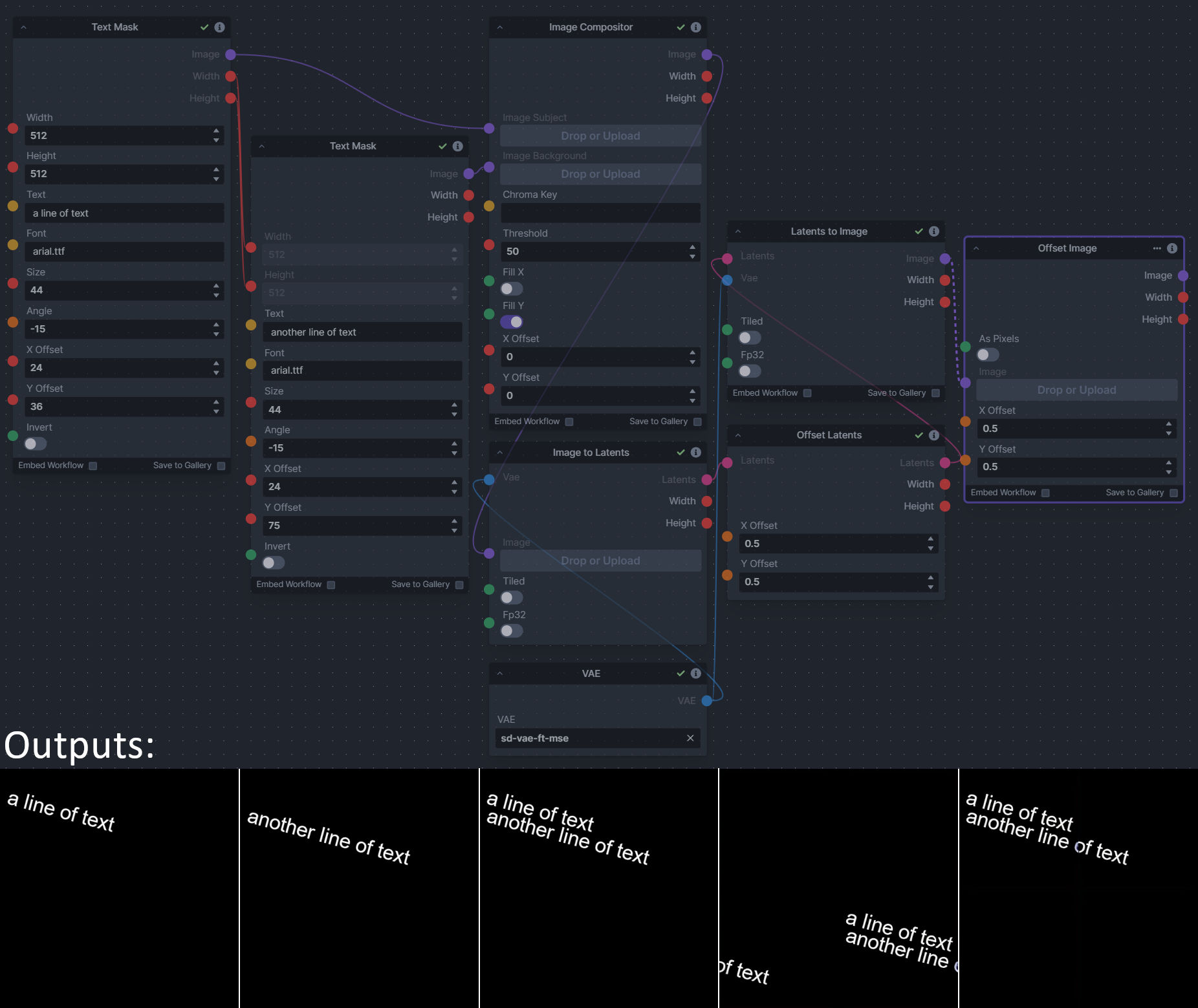

### Image and Mask Composition Pack

|

||||

|

||||

**Description:** This is a pack of nodes for composing masks and images, including a simple text mask creator and both image and latent offset nodes. The offsets wrap around, so these can be used in conjunction with the Seamless node to progressively generate centered on different parts of the seamless tiling.

|

||||

|

||||

This includes 4 Nodes:

|

||||

- *Text Mask (simple 2D)* - create and position a white on black (or black on white) line of text using any font locally available to Invoke.

|

||||

- *Image Compositor* - Take a subject from an image with a flat backdrop and layer it on another image using a chroma key or flood select background removal.

|

||||

- *Offset Latents* - Offset a latents tensor in the vertical and/or horizontal dimensions, wrapping it around.

|

||||

- *Offset Image* - Offset an image in the vertical and/or horizontal dimensions, wrapping it around.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/composition-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

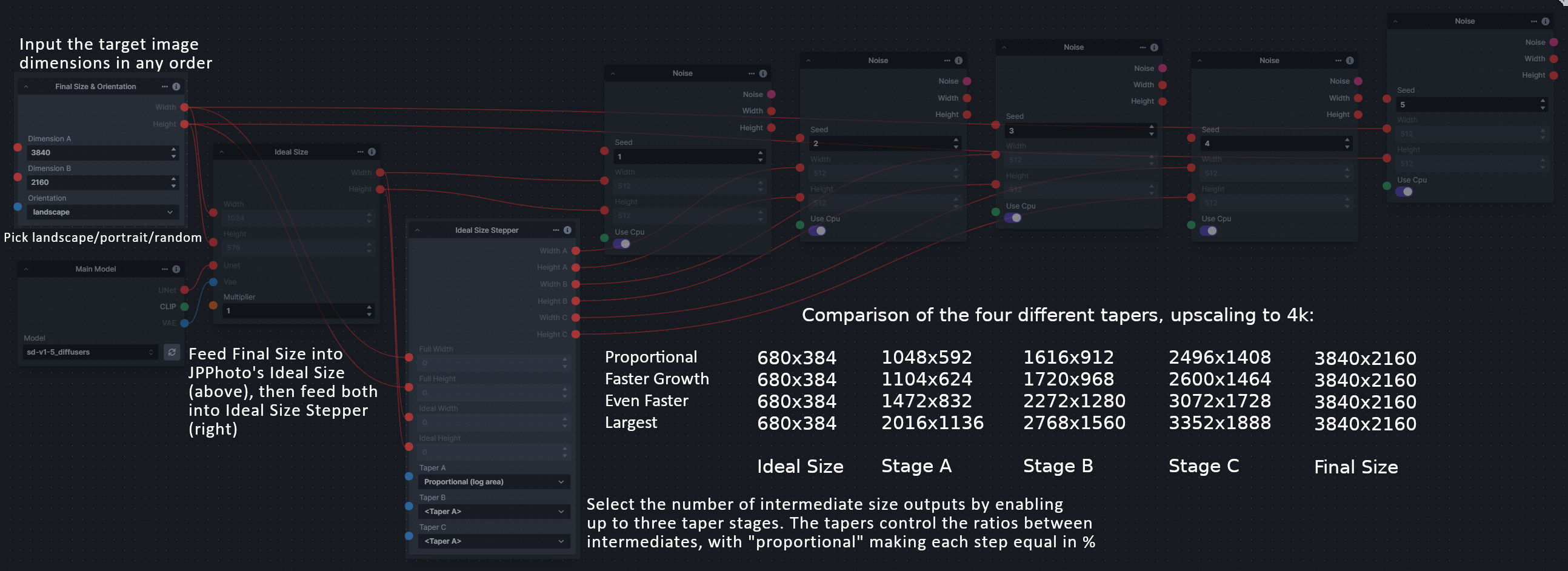

### Size Stepper Nodes

|

||||

|

||||

**Description:** This is a set of nodes for calculating the necessary size increments for doing upscaling workflows. Use the *Final Size & Orientation* node to enter your full size dimensions and orientation (portrait/landscape/random), then plug that and your initial generation dimensions into the *Ideal Size Stepper* and get 1, 2, or 3 intermediate pairs of dimensions for upscaling. Note this does not output the initial size or full size dimensions: the 1, 2, or 3 outputs of this node are only the intermediate sizes.

|

||||

|

||||

A third node is included, *Random Switch (Integers)*, which is just a generic version of Final Size with no orientation selection.

|

||||

|

||||

**Node Link:** https://github.com/dwringer/size-stepper-nodes

|

||||

|

||||

**Example Usage:**

|

||||

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Text font to Image

|

||||

|

||||

**Description:** text font to text image node for InvokeAI, download a font to use (or if in font cache uses it from there), the text is always resized to the image size, but can control that with padding, optional 2nd line

|

||||

|

||||

**Node Link:** https://github.com/mickr777/textfontimage

|

||||

|

||||

**Output Examples**

|

||||

|

||||

|

||||

|

||||

Results after using the depth controlnet

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

|

||||

### Example Node Template

|

||||

|

||||

**Description:** This node allows you to do super cool things with InvokeAI.

|

||||

|

||||

@@ -35,13 +35,13 @@ The table below contains a list of the default nodes shipped with InvokeAI and t

|

||||

|Inverse Lerp Image | Inverse linear interpolation of all pixels of an image|

|

||||

|Image Primitive | An image primitive value|

|

||||

|Lerp Image | Linear interpolation of all pixels of an image|

|

||||

|Offset Image Channel | Add to or subtract from an image color channel by a uniform value.|

|

||||

|Multiply Image Channel | Multiply or Invert an image color channel by a scalar value.|

|

||||

|Image Luminosity Adjustment | Adjusts the Luminosity (Value) of an image.|

|

||||

|Multiply Images | Multiplies two images together using `PIL.ImageChops.multiply()`.|

|

||||

|Blur NSFW Image | Add blur to NSFW-flagged images|

|

||||

|Paste Image | Pastes an image into another image.|

|

||||

|ImageProcessor | Base class for invocations that preprocess images for ControlNet|

|

||||

|Resize Image | Resizes an image to specific dimensions|

|

||||

|Image Saturation Adjustment | Adjusts the Saturation of an image.|

|

||||

|Scale Image | Scales an image by a factor|

|

||||

|Image to Latents | Encodes an image into latents.|

|

||||

|Add Invisible Watermark | Add an invisible watermark to an image|

|

||||

|

||||

@@ -14,7 +14,7 @@ fi

|

||||

VERSION=$(cd ..; python -c "from invokeai.version import __version__ as version; print(version)")

|

||||

PATCH=""

|

||||

VERSION="v${VERSION}${PATCH}"

|

||||

LATEST_TAG="v3-latest"

|

||||

LATEST_TAG="v3.0-latest"

|

||||

|

||||

echo Building installer for version $VERSION

|

||||

echo "Be certain that you're in the 'installer' directory before continuing."

|

||||

@@ -46,7 +46,6 @@ if [[ $(python -c 'from importlib.util import find_spec; print(find_spec("build"

|

||||

pip install --user build

|

||||

fi

|

||||

|

||||

rm -r ../build

|

||||

python -m build --wheel --outdir dist/ ../.

|

||||

|

||||

# ----------------------

|

||||

|

||||

@@ -1,19 +1,19 @@

|

||||

import typing

|

||||

from enum import Enum

|

||||

from pathlib import Path

|

||||

|

||||

from fastapi import Body

|

||||

from fastapi.routing import APIRouter

|

||||

from pathlib import Path

|

||||

from pydantic import BaseModel, Field

|

||||

|

||||

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

||||

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

||||

from invokeai.backend.image_util.patchmatch import PatchMatch

|

||||

from invokeai.backend.image_util.safety_checker import SafetyChecker

|

||||

from invokeai.backend.util.logging import logging

|

||||

from invokeai.backend.image_util.invisible_watermark import InvisibleWatermark

|

||||

from invokeai.app.invocations.upscale import ESRGAN_MODELS

|

||||

|

||||

from invokeai.version import __version__

|

||||

|

||||

from ..dependencies import ApiDependencies

|

||||

from invokeai.backend.util.logging import logging

|

||||

|

||||

|

||||

class LogLevel(int, Enum):

|

||||

@@ -55,7 +55,7 @@ async def get_version() -> AppVersion:

|

||||

|

||||

@app_router.get("/config", operation_id="get_config", status_code=200, response_model=AppConfig)

|

||||

async def get_config() -> AppConfig:

|

||||

infill_methods = ["tile", "lama", "cv2"]

|

||||

infill_methods = ["tile", "lama"]

|

||||

if PatchMatch.patchmatch_available():

|

||||

infill_methods.append("patchmatch")

|

||||

|

||||

|

||||

@@ -1,22 +1,24 @@

|

||||

# Copyright (c) 2022 Kyle Schouviller (https://github.com/kyle0654)

|

||||

|

||||

from typing import Annotated, Optional, Union

|

||||

from typing import Annotated, Literal, Optional, Union

|

||||

|

||||

from fastapi import Body, HTTPException, Path, Query, Response

|

||||

from fastapi.routing import APIRouter

|

||||

from pydantic.fields import Field

|

||||

|

||||

from invokeai.app.services.item_storage import PaginatedResults

|

||||

|

||||

# Importing * is bad karma but needed here for node detection

|

||||

from ...invocations import * # noqa: F401 F403

|

||||

from ...invocations.baseinvocation import BaseInvocation

|

||||

from ...invocations.baseinvocation import BaseInvocation, BaseInvocationOutput

|

||||

from ...services.graph import (

|

||||

Edge,

|

||||

EdgeConnection,

|

||||

Graph,

|

||||

GraphExecutionState,

|

||||

NodeAlreadyExecutedError,

|

||||

update_invocations_union,

|

||||

)

|

||||

from ...services.item_storage import PaginatedResults

|

||||

from ..dependencies import ApiDependencies

|

||||

|

||||

session_router = APIRouter(prefix="/v1/sessions", tags=["sessions"])

|

||||

@@ -38,6 +40,24 @@ async def create_session(

|

||||

return session

|

||||

|

||||

|

||||

@session_router.post(

|

||||

"/update_nodes",

|

||||

operation_id="update_nodes",

|

||||

)

|

||||

async def update_nodes() -> None:

|

||||

class TestFromRouterOutput(BaseInvocationOutput):

|

||||

type: Literal["test_from_router"] = "test_from_router"

|

||||

|

||||

class TestInvocationFromRouter(BaseInvocation):

|

||||

type: Literal["test_from_router_output"] = "test_from_router_output"

|

||||

|

||||

def invoke(self, context) -> TestFromRouterOutput:

|

||||

return TestFromRouterOutput()

|

||||

|

||||

# doesn't work from here... hmm...

|

||||

update_invocations_union()

|

||||

|

||||

|

||||