mirror of

https://github.com/invoke-ai/InvokeAI.git

synced 2026-01-15 10:48:12 -05:00

Compare commits

935 Commits

| Author | SHA1 | Date | |

|---|---|---|---|

|

|

4ffe831958 | ||

|

|

481423d678 | ||

|

|

89ede0aef3 | ||

|

|

359bdee9c6 | ||

|

|

0e6fba3763 | ||

|

|

652502d7a6 | ||

|

|

91d981a49e | ||

|

|

24f61d21b2 | ||

|

|

eb9a4177c5 | ||

|

|

3c43351a5b | ||

|

|

b1359b6dff | ||

|

|

bddccf6d2f | ||

|

|

21ffaab2a2 | ||

|

|

1e969f938f | ||

|

|

9c6c86ee4f | ||

|

|

6b53a48b48 | ||

|

|

c813fa3fc0 | ||

|

|

a08e61184a | ||

|

|

a0d62a5f41 | ||

|

|

616c0f11e1 | ||

|

|

e1626a4e49 | ||

|

|

6ab891a319 | ||

|

|

492de41316 | ||

|

|

c064efc866 | ||

|

|

1a0885bfb1 | ||

|

|

e8b202d0a5 | ||

|

|

c6fc82f756 | ||

|

|

9a77e951d2 | ||

|

|

8bd4207a27 | ||

|

|

0bb601aaf7 | ||

|

|

2da25a0043 | ||

|

|

51d0931898 | ||

|

|

357b68d1ba | ||

|

|

d9ddb6c32e | ||

|

|

ad02a99a83 | ||

|

|

b707dafc7b | ||

|

|

02906c8f5d | ||

|

|

8538e508f1 | ||

|

|

8c333ffd14 | ||

|

|

72ace5fdff | ||

|

|

9b7583fc84 | ||

|

|

989eee338e | ||

|

|

acc3d7b91b | ||

|

|

49de868658 | ||

|

|

b1702c7d90 | ||

|

|

e49e19ea13 | ||

|

|

c9f91f391e | ||

|

|

4cb6b2b701 | ||

|

|

7d132ea148 | ||

|

|

1088accd91 | ||

|

|

8d237d8f8b | ||

|

|

0c86a3232d | ||

|

|

dbfb0359cb | ||

|

|

b4c2aa596b | ||

|

|

87e89b7995 | ||

|

|

9b089430e2 | ||

|

|

f2b0025958 | ||

|

|

4b390906bc | ||

|

|

c5b8efe03b | ||

|

|

4d08d00ad8 | ||

|

|

9b0130262b | ||

|

|

878093f64e | ||

|

|

d5ff7ef250 | ||

|

|

f36583f866 | ||

|

|

829bc1bc7d | ||

|

|

17c7b57145 | ||

|

|

6a12189542 | ||

|

|

96a31a5563 | ||

|

|

067747eca9 | ||

|

|

c7878fddc6 | ||

|

|

54c51e0a06 | ||

|

|

1640ea0298 | ||

|

|

0c32ae9775 | ||

|

|

fdb8ca5165 | ||

|

|

571faf6d7c | ||

|

|

bdbdb22b74 | ||

|

|

9bbb5644af | ||

|

|

e90ad19f22 | ||

|

|

0ba11e8f73 | ||

|

|

1cf7600f5b | ||

|

|

4f9d12b872 | ||

|

|

68c3b0649b | ||

|

|

8ef8bd4261 | ||

|

|

50897ba066 | ||

|

|

3510643870 | ||

|

|

ca9cb1c9ef | ||

|

|

b89caa02bd | ||

|

|

eaf4e08c44 | ||

|

|

fb19621361 | ||

|

|

9179619077 | ||

|

|

13cb5f0ba2 | ||

|

|

7e52fc1c17 | ||

|

|

7f60a4a282 | ||

|

|

3f880496f7 | ||

|

|

f05efd3270 | ||

|

|

79eb8172b6 | ||

|

|

7732b5d478 | ||

|

|

a2a1934b66 | ||

|

|

dff6570078 | ||

|

|

04e4fb63af | ||

|

|

83609d5008 | ||

|

|

2618ed0ae7 | ||

|

|

bb3cedddd5 | ||

|

|

5b3e1593ca | ||

|

|

2d08078a7d | ||

|

|

75acece1f1 | ||

|

|

a9db2ffefd | ||

|

|

cdd148b4d1 | ||

|

|

730fabe2de | ||

|

|

6c59790a7f | ||

|

|

0e6cb91863 | ||

|

|

a0fefcd43f | ||

|

|

c37251d6f7 | ||

|

|

2854210162 | ||

|

|

5545b980af | ||

|

|

0c9434c464 | ||

|

|

8771de917d | ||

|

|

122946ef4c | ||

|

|

2d974f670c | ||

|

|

75f0da9c35 | ||

|

|

5df3c00e28 | ||

|

|

b049880502 | ||

|

|

e5293fdd1a | ||

|

|

8883775762 | ||

|

|

cfadb313d2 | ||

|

|

b5cadd9a1a | ||

|

|

5361b6e014 | ||

|

|

ff346172af | ||

|

|

92f660018b | ||

|

|

1afc2cba4e | ||

|

|

ee8359242c | ||

|

|

f0c80a8d7a | ||

|

|

8da9e7c1f6 | ||

|

|

6d7a486e5b | ||

|

|

57122c6aa3 | ||

|

|

54abd8d4d1 | ||

|

|

06283cffed | ||

|

|

27fa0e1140 | ||

|

|

533d48abdb | ||

|

|

6845cae4c9 | ||

|

|

31c9acb1fa | ||

|

|

fb5e462300 | ||

|

|

2f3abc29b1 | ||

|

|

c5c071f285 | ||

|

|

93a3ed56e7 | ||

|

|

406fc58889 | ||

|

|

cf67d084fd | ||

|

|

d4a95af14f | ||

|

|

8c8e7102c2 | ||

|

|

b6b9ea9d70 | ||

|

|

63126950bc | ||

|

|

29d63d5dea | ||

|

|

a5f8c23dee | ||

|

|

7bb4ea57c6 | ||

|

|

75dc961bcb | ||

|

|

a9a1f6ef21 | ||

|

|

aa40161f26 | ||

|

|

6efa812874 | ||

|

|

8a683f5a3c | ||

|

|

f4b0b6a93d | ||

|

|

1337c33ad3 | ||

|

|

2f6b035138 | ||

|

|

4f9ae44472 | ||

|

|

c682330852 | ||

|

|

c064257759 | ||

|

|

8a4c629576 | ||

|

|

496b02a3bc | ||

|

|

7b5efc2203 | ||

|

|

a01d44f813 | ||

|

|

63fb3a15e9 | ||

|

|

4d0837541b | ||

|

|

999809b4c7 | ||

|

|

c452edfb9f | ||

|

|

ad2cdbd8a2 | ||

|

|

f15c24bfa7 | ||

|

|

d1f653f28c | ||

|

|

244465d3a6 | ||

|

|

c6236ab70c | ||

|

|

644d5cb411 | ||

|

|

bb0a630416 | ||

|

|

2148ae9287 | ||

|

|

42d242609c | ||

|

|

fd0a52392b | ||

|

|

e64415d59a | ||

|

|

1871e0bdbf | ||

|

|

3ae9a965c2 | ||

|

|

85932e35a7 | ||

|

|

41b07a56cc | ||

|

|

54064c0cb8 | ||

|

|

68284b37fa | ||

|

|

ae5bc6f5d6 | ||

|

|

6dc16c9f54 | ||

|

|

faa9ac4e15 | ||

|

|

d0460849b0 | ||

|

|

bed3c2dd77 | ||

|

|

916ddd17d7 | ||

|

|

accfa7407f | ||

|

|

908db31e48 | ||

|

|

b70f632b26 | ||

|

|

d07a6385ab | ||

|

|

68df612fa1 | ||

|

|

3b96c79461 | ||

|

|

89bda5b983 | ||

|

|

22bff1fb22 | ||

|

|

55ba6488d1 | ||

|

|

2d78859171 | ||

|

|

3a661bac34 | ||

|

|

bb8a02de18 | ||

|

|

78155344f6 | ||

|

|

391a24b0f6 | ||

|

|

e75903389f | ||

|

|

27567052f2 | ||

|

|

6f447f7169 | ||

|

|

8b370cc182 | ||

|

|

af583d2971 | ||

|

|

0ebe8fb1bd | ||

|

|

befb629f46 | ||

|

|

874d67cb37 | ||

|

|

19f7a1295a | ||

|

|

78bd605617 | ||

|

|

b87f4e59a5 | ||

|

|

1eca4f12c8 | ||

|

|

f1de11d6bf | ||

|

|

9361ed9d70 | ||

|

|

ebabf4f7a8 | ||

|

|

606f3321f5 | ||

|

|

3970aa30fb | ||

|

|

678436e07c | ||

|

|

c620581699 | ||

|

|

c331d42ce4 | ||

|

|

1ac9b502f1 | ||

|

|

3fa478a12f | ||

|

|

2d86298b7f | ||

|

|

009cdb714c | ||

|

|

9d3f5427b4 | ||

|

|

e4b17f019a | ||

|

|

586c00bc02 | ||

|

|

0f11fda65a | ||

|

|

3e75331ef7 | ||

|

|

be133408ac | ||

|

|

7e1e0d6928 | ||

|

|

cd3d8df5a8 | ||

|

|

24d3c22017 | ||

|

|

b0d37f4e51 | ||

|

|

3559124674 | ||

|

|

6c33e02141 | ||

|

|

8cf94d602f | ||

|

|

016a6f182f | ||

|

|

6fbc019142 | ||

|

|

26f95d6a97 | ||

|

|

40f7b0d171 | ||

|

|

4904700751 | ||

|

|

83538c4b2b | ||

|

|

eb7b559529 | ||

|

|

4945465cf0 | ||

|

|

7eed7282a9 | ||

|

|

47f0781822 | ||

|

|

88b8e3e3d5 | ||

|

|

47c3ab9214 | ||

|

|

d6d436b59c | ||

|

|

6ff7057967 | ||

|

|

e032ab1179 | ||

|

|

65bddfcd93 | ||

|

|

2d3ce418dd | ||

|

|

548d72f7b9 | ||

|

|

19837a0f29 | ||

|

|

483b65a1dc | ||

|

|

b85931c7ab | ||

|

|

9225f47338 | ||

|

|

bccac5e4a6 | ||

|

|

7cb07fdc04 | ||

|

|

b137450026 | ||

|

|

dc5090469a | ||

|

|

e0ae2ace89 | ||

|

|

269faae04b | ||

|

|

e282acd41c | ||

|

|

a266668348 | ||

|

|

3bb3e142fc | ||

|

|

6ac6d70a22 | ||

|

|

b0acf33ba5 | ||

|

|

b3eb64b64c | ||

|

|

95f8ab1a29 | ||

|

|

4e043384db | ||

|

|

0f5df8ba17 | ||

|

|

2826ab48a2 | ||

|

|

7ff1b635c8 | ||

|

|

dfb5e8b5e5 | ||

|

|

7259da799c | ||

|

|

965069fce1 | ||

|

|

90232806d9 | ||

|

|

81bc153399 | ||

|

|

c63e526f99 | ||

|

|

2b74263007 | ||

|

|

d3a82f7119 | ||

|

|

291c5a0341 | ||

|

|

bcb41399ca | ||

|

|

6f0f53849b | ||

|

|

4e7d63761a | ||

|

|

198c84105d | ||

|

|

2453b9f443 | ||

|

|

b091aca986 | ||

|

|

8f02ce54a0 | ||

|

|

f4b7c63002 | ||

|

|

a4629280b5 | ||

|

|

855fb007da | ||

|

|

d805b52c1f | ||

|

|

2ea55685bb | ||

|

|

bd6ff3deaa | ||

|

|

82dd53ec88 | ||

|

|

71d749541d | ||

|

|

48a57fc4b9 | ||

|

|

530e0910fc | ||

|

|

2fdf8fc0a2 | ||

|

|

91db9c9300 | ||

|

|

bc42205593 | ||

|

|

2e3cba6416 | ||

|

|

7852aacd11 | ||

|

|

6cccd67ecd | ||

|

|

a7a89c9de1 | ||

|

|

5ca8eed89e | ||

|

|

c885c3c9a6 | ||

|

|

d81c38c350 | ||

|

|

92d5b73215 | ||

|

|

097e92db6a | ||

|

|

84c6209a45 | ||

|

|

107e48808a | ||

|

|

47168b5505 | ||

|

|

58152ec981 | ||

|

|

c74afbf332 | ||

|

|

7cdda00a54 | ||

|

|

a74282bce6 | ||

|

|

107f048c7a | ||

|

|

a2486a5f06 | ||

|

|

07ab116efb | ||

|

|

1a13af3c7a | ||

|

|

f2966a2594 | ||

|

|

58bb97e3c6 | ||

|

|

34569a2410 | ||

|

|

a84aa5c049 | ||

|

|

acfa9c87ef | ||

|

|

f245d8e429 | ||

|

|

62cf0f54e0 | ||

|

|

5f015e76ba | ||

|

|

aebcec28e0 | ||

|

|

db1c5a94f7 | ||

|

|

56222a8493 | ||

|

|

b7510ce709 | ||

|

|

5739799e2e | ||

|

|

813cf87920 | ||

|

|

c95b151daf | ||

|

|

a0f823a3cf | ||

|

|

64e0f6d688 | ||

|

|

ddd5b1087c | ||

|

|

008be9b846 | ||

|

|

8e7cabdc04 | ||

|

|

a4c4237f99 | ||

|

|

bda3740dcd | ||

|

|

5b4633baa9 | ||

|

|

96351181cb | ||

|

|

957d591d99 | ||

|

|

75f605ba1a | ||

|

|

ab898a7180 | ||

|

|

c9a4516ab1 | ||

|

|

fe97c0d5eb | ||

|

|

6056764840 | ||

|

|

8747c0dbb0 | ||

|

|

c5cdd5f9c6 | ||

|

|

abc5d53159 | ||

|

|

2f76019a89 | ||

|

|

3f45beb1ed | ||

|

|

bc1126a85b | ||

|

|

380017041e | ||

|

|

ab7cdbb7e0 | ||

|

|

e5b78d0221 | ||

|

|

1acaa6c486 | ||

|

|

b0381076b7 | ||

|

|

ffff2d6dbb | ||

|

|

afa9f07649 | ||

|

|

addb5c49ea | ||

|

|

a112d2d55b | ||

|

|

619a271c8a | ||

|

|

909f2ee36d | ||

|

|

b4cf3d9d03 | ||

|

|

e6ab6e0293 | ||

|

|

66d9c7c631 | ||

|

|

fec45f3eb6 | ||

|

|

7211d1a6fc | ||

|

|

f3069754a9 | ||

|

|

4f43152aeb | ||

|

|

7125055d02 | ||

|

|

c91a9ce390 | ||

|

|

3e7b73da2c | ||

|

|

61ac50c00d | ||

|

|

c1201f0bce | ||

|

|

acdffac5ad | ||

|

|

e420300fa4 | ||

|

|

260a5a4f9a | ||

|

|

ed0c2006fe | ||

|

|

9ffd888c86 | ||

|

|

175a9dc28d | ||

|

|

5764e4f7f2 | ||

|

|

4275a494b9 | ||

|

|

a3deb8d30d | ||

|

|

aafdb0a37b | ||

|

|

56a815719a | ||

|

|

4db26bfa3a | ||

|

|

8d84ccb12b | ||

|

|

3321d14997 | ||

|

|

43cc4684e1 | ||

|

|

afa5a4b17c | ||

|

|

33c433fe59 | ||

|

|

9cd47fa857 | ||

|

|

32d9abe802 | ||

|

|

3947d4a165 | ||

|

|

3583d03b70 | ||

|

|

bc954b9996 | ||

|

|

c08075946a | ||

|

|

df8df914e8 | ||

|

|

33924e8491 | ||

|

|

7e5ce1d69d | ||

|

|

6a24594140 | ||

|

|

61d26cffe6 | ||

|

|

fdbc244dbe | ||

|

|

0eea84c90d | ||

|

|

e079a91800 | ||

|

|

eb20173487 | ||

|

|

20dd0779b5 | ||

|

|

b384a92f5c | ||

|

|

116d32fbbe | ||

|

|

b044f31a61 | ||

|

|

6c3c24403b | ||

|

|

591f48bb95 | ||

|

|

dc6e45485c | ||

|

|

829820479d | ||

|

|

48a471bfb8 | ||

|

|

ff72315db2 | ||

|

|

790846297a | ||

|

|

230b455a13 | ||

|

|

71f0fff55b | ||

|

|

7f2c83b9e6 | ||

|

|

bc85bd4bd4 | ||

|

|

38b09d73e4 | ||

|

|

606c4ae88c | ||

|

|

f666bac77f | ||

|

|

c9bf7da23a | ||

|

|

dfc65b93e9 | ||

|

|

9ca40b4cf5 | ||

|

|

d571e71d5e | ||

|

|

ad1e6c3fe6 | ||

|

|

21d02911dd | ||

|

|

43afe0bd9a | ||

|

|

e7a68c446d | ||

|

|

b9c68a2e7e | ||

|

|

371a1b1af3 | ||

|

|

dae4591de6 | ||

|

|

8ccb2e30ce | ||

|

|

b8106a4613 | ||

|

|

ce51e9582a | ||

|

|

00848eb631 | ||

|

|

b48430a892 | ||

|

|

f94a218561 | ||

|

|

9b6ed40875 | ||

|

|

26553dbb0e | ||

|

|

9eb695d0b4 | ||

|

|

babab17e1d | ||

|

|

d0a80f3347 | ||

|

|

9b30363177 | ||

|

|

89bde36b0c | ||

|

|

86a8476d97 | ||

|

|

afa0661e55 | ||

|

|

ba09c1277f | ||

|

|

80bf9ddb71 | ||

|

|

1dbc98d747 | ||

|

|

0698188ea2 | ||

|

|

59d0ad4505 | ||

|

|

074a5692dd | ||

|

|

bb0741146a | ||

|

|

1845d9a87a | ||

|

|

748c393e71 | ||

|

|

9bd17ea02f | ||

|

|

24f9b46fbc | ||

|

|

54b3aa1d01 | ||

|

|

d85733f22b | ||

|

|

aff6ad0316 | ||

|

|

61496fdcbc | ||

|

|

ee8975401a | ||

|

|

bf3260446d | ||

|

|

f53823b45e | ||

|

|

5cbe89afdd | ||

|

|

c466d50c3d | ||

|

|

d20b894a61 | ||

|

|

20362448b9 | ||

|

|

5df10cc494 | ||

|

|

da171114ea | ||

|

|

62919a443c | ||

|

|

ffcec91d87 | ||

|

|

0a96466b60 | ||

|

|

e48cab0276 | ||

|

|

740f6eb19f | ||

|

|

d1bb4c2c70 | ||

|

|

e545f18a45 | ||

|

|

e8cd1bb3d8 | ||

|

|

90a906e203 | ||

|

|

5546110127 | ||

|

|

73bbb12f7a | ||

|

|

dde54740c5 | ||

|

|

f70a8e2c1a | ||

|

|

fdccdd52d5 | ||

|

|

31ffd73423 | ||

|

|

3fa1012879 | ||

|

|

c2a8fbd8d6 | ||

|

|

d6643d7263 | ||

|

|

412e79d8e6 | ||

|

|

f939dbdc33 | ||

|

|

24a0ca86f5 | ||

|

|

95c30f6a8b | ||

|

|

ac7441e606 | ||

|

|

9c9af312fe | ||

|

|

7bf5927c43 | ||

|

|

32c7cdd856 | ||

|

|

bbd89d54b4 | ||

|

|

ee61006a49 | ||

|

|

0b43f5fd64 | ||

|

|

6c61266990 | ||

|

|

2d5afe8094 | ||

|

|

2430137d19 | ||

|

|

6df4ee5fc8 | ||

|

|

371742d8f9 | ||

|

|

5440c03767 | ||

|

|

358dbdbf84 | ||

|

|

5ec2d71be0 | ||

|

|

8f28903c81 | ||

|

|

73d4c4d56d | ||

|

|

a071f2788a | ||

|

|

d9a257ef8a | ||

|

|

23fada3eea | ||

|

|

2917e59c38 | ||

|

|

c691855a67 | ||

|

|

a00347379b | ||

|

|

ad1a8fbb8d | ||

|

|

f03b77e882 | ||

|

|

2b000cb006 | ||

|

|

af636f08b8 | ||

|

|

f8150f46a5 | ||

|

|

b613be0f5d | ||

|

|

a833d74913 | ||

|

|

02df055e8a | ||

|

|

add31ce596 | ||

|

|

7d7ad3052e | ||

|

|

3b16dbffb2 | ||

|

|

d8b0648766 | ||

|

|

ae64ee224f | ||

|

|

1251dfd7f6 | ||

|

|

804ee3a7fb | ||

|

|

fc5f9047c2 | ||

|

|

0b208220e5 | ||

|

|

916b9f7741 | ||

|

|

0947a006cc | ||

|

|

2c2df6423e | ||

|

|

c3df9d38c0 | ||

|

|

3790c254f5 | ||

|

|

abf46eaacd | ||

|

|

166548246d | ||

|

|

985dcd9862 | ||

|

|

b1df592506 | ||

|

|

a09a0eff69 | ||

|

|

e73bd09d93 | ||

|

|

6f5477a3f0 | ||

|

|

f78a542401 | ||

|

|

8613efb03a | ||

|

|

d8347d856d | ||

|

|

336e6e0c19 | ||

|

|

5bd87ca89b | ||

|

|

fe87c198eb | ||

|

|

69a4a88925 | ||

|

|

6e7491b086 | ||

|

|

3da8076a2b | ||

|

|

80360a8abb | ||

|

|

acfeb4a276 | ||

|

|

b33dbfc95f | ||

|

|

f9bc29203b | ||

|

|

cbe7717409 | ||

|

|

d6add93901 | ||

|

|

ea45dce9dc | ||

|

|

8d44363d49 | ||

|

|

9933cdb6b7 | ||

|

|

e3e9d1f27c | ||

|

|

bb59ad438a | ||

|

|

e38f5b1576 | ||

|

|

1bb49b698f | ||

|

|

fa1fbd89fe | ||

|

|

190ef6732c | ||

|

|

947cd4694b | ||

|

|

ee32d0666d | ||

|

|

bc8ad9ccbf | ||

|

|

e96b290fa9 | ||

|

|

b9f83eae6a | ||

|

|

9868e23235 | ||

|

|

0060cae17c | ||

|

|

56f0845552 | ||

|

|

da3f85dd8b | ||

|

|

7185363f17 | ||

|

|

ac08c31fbc | ||

|

|

ea54a2655a | ||

|

|

cc83dede9f | ||

|

|

8464fd2ced | ||

|

|

c3316368d9 | ||

|

|

8b2d5ab28a | ||

|

|

3f6acdc2d3 | ||

|

|

4aa20a95b2 | ||

|

|

2d82e69a33 | ||

|

|

683f9a70e7 | ||

|

|

bb6d073828 | ||

|

|

7f7d8e5177 | ||

|

|

f37c5011f4 | ||

|

|

bb947c6162 | ||

|

|

a654dad20f | ||

|

|

2bd44662f3 | ||

|

|

e7f9086006 | ||

|

|

5141be8009 | ||

|

|

eacdfc660b | ||

|

|

5fd3c39431 | ||

|

|

7daf3b7d4a | ||

|

|

908f65698d | ||

|

|

63c4ac58e9 | ||

|

|

8c125681ea | ||

|

|

118f0ba3bf | ||

|

|

b3b7d084d0 | ||

|

|

812940eb95 | ||

|

|

0559480dd6 | ||

|

|

d99e7dd4e4 | ||

|

|

e854181417 | ||

|

|

de414c09fd | ||

|

|

ce4624f72b | ||

|

|

47c7df3476 | ||

|

|

4289b5e6c3 | ||

|

|

c8d1d14662 | ||

|

|

44c588d778 | ||

|

|

d75ac56d00 | ||

|

|

714dd5f0be | ||

|

|

2f4d3cb5e6 | ||

|

|

b76555bda9 | ||

|

|

1cdd501a0a | ||

|

|

1125218bc5 | ||

|

|

683504bfb5 | ||

|

|

03cf953398 | ||

|

|

24c115663d | ||

|

|

a9e7ecad49 | ||

|

|

76f4766324 | ||

|

|

3dfc242f77 | ||

|

|

1e43389cb4 | ||

|

|

cb33de34f7 | ||

|

|

7562ea48dc | ||

|

|

83f4700f5a | ||

|

|

704e7479b2 | ||

|

|

5f44559f30 | ||

|

|

7a22819100 | ||

|

|

70495665c5 | ||

|

|

ca30acc5b4 | ||

|

|

8121843d86 | ||

|

|

bc0ded0a23 | ||

|

|

30f6034f88 | ||

|

|

7d56a8ce54 | ||

|

|

e7dc439006 | ||

|

|

bce5a93eb1 | ||

|

|

93e98a1f63 | ||

|

|

0f93deab3b | ||

|

|

3f3aba8b10 | ||

|

|

0b84f567f1 | ||

|

|

69c0d7dcc9 | ||

|

|

5307248fcf | ||

|

|

2efaea8f79 | ||

|

|

c1dfd9b7d9 | ||

|

|

c594ef89d2 | ||

|

|

563db67b80 | ||

|

|

236c065edd | ||

|

|

1f5d744d01 | ||

|

|

b36c6af0ae | ||

|

|

4e431a9d5f | ||

|

|

48a8232285 | ||

|

|

94007fef5b | ||

|

|

9e6fb3bd3f | ||

|

|

8522129639 | ||

|

|

15033b1a9d | ||

|

|

743d78f82b | ||

|

|

06a434b0a2 | ||

|

|

7f2fdae870 | ||

|

|

00be03b5b9 | ||

|

|

0f98806a25 | ||

|

|

0f1541d091 | ||

|

|

c49bbb22e5 | ||

|

|

7bd4b586a6 | ||

|

|

754f049f54 | ||

|

|

883beb90eb | ||

|

|

ad76399702 | ||

|

|

69773a791d | ||

|

|

99e88e601d | ||

|

|

4050f7deae | ||

|

|

0399b04f29 | ||

|

|

3b349b2686 | ||

|

|

aa34dbe1e1 | ||

|

|

ac2476c63c | ||

|

|

f16489f1ce | ||

|

|

3b38b69192 | ||

|

|

2c601438eb | ||

|

|

5d6a2a3709 | ||

|

|

1d7a264050 | ||

|

|

c494e0642a | ||

|

|

849b9e8d86 | ||

|

|

4a66b7ac83 | ||

|

|

751eb59afa | ||

|

|

f537cf1916 | ||

|

|

0cc6f67bb1 | ||

|

|

b2bf03fd37 | ||

|

|

14bc06ab66 | ||

|

|

9c82cc7fcb | ||

|

|

c60cab97a7 | ||

|

|

eda979341a | ||

|

|

b6c7949bb7 | ||

|

|

d691f672a2 | ||

|

|

8deeac1372 | ||

|

|

4aace24f1f | ||

|

|

b1567fe0e4 | ||

|

|

3953e60a4f | ||

|

|

3c46522595 | ||

|

|

63a2e17f6b | ||

|

|

8b1ef4b902 | ||

|

|

5f2279c984 | ||

|

|

e82d67849c | ||

|

|

3977ffaa3e | ||

|

|

9a8a858fe4 | ||

|

|

859944f848 | ||

|

|

8d1a45863c | ||

|

|

6798bbab26 | ||

|

|

2c92e8a495 | ||

|

|

216b36c75d | ||

|

|

8bf8742984 | ||

|

|

c78eeb1645 | ||

|

|

cd88723a80 | ||

|

|

dea6cbd599 | ||

|

|

0dd9f1f772 | ||

|

|

5d11c30ce6 | ||

|

|

a783539cd2 | ||

|

|

2f8f30b497 | ||

|

|

f878e5e74e | ||

|

|

bfc460a5c6 | ||

|

|

a24581ede2 | ||

|

|

56731766ca | ||

|

|

80bc4ebee3 | ||

|

|

745b6dbd5d | ||

|

|

c7628945c4 | ||

|

|

728927ecff | ||

|

|

1a7eece695 | ||

|

|

2cd14dd066 | ||

|

|

5872f05342 | ||

|

|

4ad135c6ae | ||

|

|

c72c2770fe | ||

|

|

e733a1f30e | ||

|

|

4be3a33744 | ||

|

|

1751c380db | ||

|

|

16cda33025 | ||

|

|

8308e7d186 | ||

|

|

c0aab56d08 | ||

|

|

1795f4f8a2 | ||

|

|

5bfd2ec6b7 | ||

|

|

a35b229a9d | ||

|

|

e93da5d4b2 | ||

|

|

a17ea9bfad | ||

|

|

3578010ba4 | ||

|

|

459cf52043 | ||

|

|

9bcb93f575 | ||

|

|

d1a0e99701 | ||

|

|

92b1515d9d | ||

|

|

36515e1e2a | ||

|

|

c81bb761ed | ||

|

|

1d4a58e52b | ||

|

|

62d12e6468 | ||

|

|

9541156ce5 | ||

|

|

eb5b6625ea | ||

|

|

9758e5a622 | ||

|

|

58eba8bdbd | ||

|

|

2821ba8967 | ||

|

|

2cc72b19bc | ||

|

|

8544ba3798 | ||

|

|

65fe79fa0e | ||

|

|

c99852657e | ||

|

|

ed54b89e9e | ||

|

|

d56c80af8e | ||

|

|

0a65a01db8 | ||

|

|

5f416ee4fa | ||

|

|

115c82231b | ||

|

|

ccc1d4417e | ||

|

|

5806a4bc73 | ||

|

|

734631bfe4 | ||

|

|

8d6996cdf0 | ||

|

|

965d6be1f4 | ||

|

|

e31f253b90 | ||

|

|

5a94575603 | ||

|

|

1c3d06dc83 | ||

|

|

09b19e3640 | ||

|

|

1e0a4dfa3c | ||

|

|

5a1ab4aa9c | ||

|

|

d5c872292f | ||

|

|

0d7edbce25 | ||

|

|

e20d964b59 | ||

|

|

ee95321801 | ||

|

|

179c6d206c | ||

|

|

ffecd83815 | ||

|

|

f1c538fafc | ||

|

|

ed88b096f3 | ||

|

|

a28cabdf97 | ||

|

|

db25be3ba2 | ||

|

|

3b9d1e8218 | ||

|

|

05d9ba8fa0 | ||

|

|

3eee1ba113 | ||

|

|

7882e9beae | ||

|

|

7c9779b496 | ||

|

|

5832228fea | ||

|

|

1d32e70a75 | ||

|

|

9092280583 | ||

|

|

96dd1d5102 | ||

|

|

969f8b8e8d | ||

|

|

ccb5f90556 | ||

|

|

4770d9895d | ||

|

|

aeb2275bd8 | ||

|

|

aff5524457 | ||

|

|

825c564089 | ||

|

|

9b97c57f00 | ||

|

|

4b3a201790 | ||

|

|

7e1b9567c1 | ||

|

|

56ef754292 | ||

|

|

2de99ec32d | ||

|

|

889e63d585 | ||

|

|

56de2b3a51 | ||

|

|

eb40bdb810 | ||

|

|

0840e5fa65 | ||

|

|

b79f2a4e4f | ||

|

|

76a533e67e | ||

|

|

188974988c | ||

|

|

b47aae2165 | ||

|

|

7105a22e0f | ||

|

|

eee4175e4d | ||

|

|

e0b63559d0 | ||

|

|

aa54c1f969 | ||

|

|

87fdea4cc6 | ||

|

|

53443084c5 | ||

|

|

8d2e5bfd77 | ||

|

|

05e285c95a | ||

|

|

25f19a35d7 | ||

|

|

01bbd32598 | ||

|

|

0e2761d5c6 | ||

|

|

d5b51cca56 | ||

|

|

a303777777 | ||

|

|

e90b3de706 | ||

|

|

3ce94e5b84 | ||

|

|

42e5ec3916 | ||

|

|

ffa00d1d9a | ||

|

|

1648a2af6e | ||

|

|

852e9e280a | ||

|

|

af72412d3f | ||

|

|

72f715e688 | ||

|

|

3b567bef3d | ||

|

|

3d867db315 | ||

|

|

a8c7dd74d0 | ||

|

|

2dc069d759 | ||

|

|

2a90f4f59e | ||

|

|

af5f342347 | ||

|

|

6dd53b6a32 | ||

|

|

0ca8351911 | ||

|

|

b14cbfde13 | ||

|

|

46dc633df9 | ||

|

|

d4a981fc1c | ||

|

|

e0474ce822 | ||

|

|

9e5ce6b2d4 | ||

|

|

98fa946f77 | ||

|

|

ef80d40b63 | ||

|

|

7a9f923d35 | ||

|

|

fd982fa7c2 | ||

|

|

df86ed653a | ||

|

|

0be8aacee6 | ||

|

|

4f993a4f32 | ||

|

|

0158320940 | ||

|

|

bb2dc6c78b | ||

|

|

80d7d69c2f | ||

|

|

1010c9877c | ||

|

|

8fd8994ee8 | ||

|

|

262c2f1fc7 | ||

|

|

150d3239e3 | ||

|

|

e49e5e9782 | ||

|

|

2d1e745594 | ||

|

|

b793328edd | ||

|

|

e79b316645 | ||

|

|

8297e7964c | ||

|

|

26832c1a0e | ||

|

|

c29259ccdb | ||

|

|

3d4bd71098 | ||

|

|

814be44cd7 | ||

|

|

d328eaf743 | ||

|

|

b502c05009 | ||

|

|

0f333388bb | ||

|

|

bc63e2acc5 | ||

|

|

ec7e771942 | ||

|

|

fe84013392 | ||

|

|

710f81266b | ||

|

|

446e2884bc | ||

|

|

7d9f125232 | ||

|

|

66bbd62758 | ||

|

|

0875e861f5 | ||

|

|

0267d73dfc | ||

|

|

c9ab7c5233 | ||

|

|

f06765dfba | ||

|

|

f347b26999 | ||

|

|

c665cf3525 | ||

|

|

8cf19c4124 | ||

|

|

f7112ae57b | ||

|

|

2bfb0ddff5 | ||

|

|

950c9f5d0c | ||

|

|

db283d21f9 | ||

|

|

70cca7a431 | ||

|

|

3c3938cfc8 | ||

|

|

4455fc4092 | ||

|

|

4b7e920612 | ||

|

|

433146d08f | ||

|

|

324a46d0c8 | ||

|

|

c4421241f6 | ||

|

|

43b417be6b | ||

|

|

4a135c1017 | ||

|

|

dd591abc2b | ||

|

|

0e65f295ac | ||

|

|

ab7fbb7b30 | ||

|

|

92aed5e4fc | ||

|

|

d9b0697d1f | ||

|

|

34a9409bc1 | ||

|

|

319d82751a | ||

|

|

9b90834248 |

1

.github/pull_request_template.md

vendored

1

.github/pull_request_template.md

vendored

@@ -19,3 +19,4 @@

|

||||

- [ ] _The PR has a short but descriptive title, suitable for a changelog_

|

||||

- [ ] _Tests added / updated (if applicable)_

|

||||

- [ ] _Documentation added / updated (if applicable)_

|

||||

- [ ] _Updated `What's New` copy (if doing a release after this PR)_

|

||||

|

||||

@@ -105,7 +105,7 @@ Invoke features an organized gallery system for easily storing, accessing, and r

|

||||

### Other features

|

||||

|

||||

- Support for both ckpt and diffusers models

|

||||

- SD1.5, SD2.0, and SDXL support

|

||||

- SD1.5, SD2.0, SDXL, and FLUX support

|

||||

- Upscaling Tools

|

||||

- Embedding Manager & Support

|

||||

- Model Manager & Support

|

||||

|

||||

@@ -38,9 +38,9 @@ RUN --mount=type=cache,target=/root/.cache/pip \

|

||||

if [ "$TARGETPLATFORM" = "linux/arm64" ] || [ "$GPU_DRIVER" = "cpu" ]; then \

|

||||

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/cpu"; \

|

||||

elif [ "$GPU_DRIVER" = "rocm" ]; then \

|

||||

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/rocm5.6"; \

|

||||

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/rocm6.1"; \

|

||||

else \

|

||||

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/cu121"; \

|

||||

extra_index_url_arg="--extra-index-url https://download.pytorch.org/whl/cu124"; \

|

||||

fi &&\

|

||||

|

||||

# xformers + triton fails to install on arm64

|

||||

|

||||

@@ -1,7 +1,7 @@

|

||||

# Copyright (c) 2023 Eugene Brodsky https://github.com/ebr

|

||||

|

||||

x-invokeai: &invokeai

|

||||

image: "local/invokeai:latest"

|

||||

image: "ghcr.io/invoke-ai/invokeai:latest"

|

||||

build:

|

||||

context: ..

|

||||

dockerfile: docker/Dockerfile

|

||||

|

||||

@@ -144,7 +144,7 @@ As you might have noticed, we added two new arguments to the `InputField`

|

||||

definition for `width` and `height`, called `gt` and `le`. They stand for

|

||||

_greater than or equal to_ and _less than or equal to_.

|

||||

|

||||

These impose contraints on those fields, and will raise an exception if the

|

||||

These impose constraints on those fields, and will raise an exception if the

|

||||

values do not meet the constraints. Field constraints are provided by

|

||||

**pydantic**, so anything you see in the **pydantic docs** will work.

|

||||

|

||||

|

||||

@@ -239,7 +239,7 @@ Consult the

|

||||

get it set up.

|

||||

|

||||

Suggest using VSCode's included settings sync so that your remote dev host has

|

||||

all the same app settings and extensions automagically.

|

||||

all the same app settings and extensions automatically.

|

||||

|

||||

##### One remote dev gotcha

|

||||

|

||||

|

||||

@@ -2,7 +2,7 @@

|

||||

|

||||

## **What do I need to know to help?**

|

||||

|

||||

If you are looking to help to with a code contribution, InvokeAI uses several different technologies under the hood: Python (Pydantic, FastAPI, diffusers) and Typescript (React, Redux Toolkit, ChakraUI, Mantine, Konva). Familiarity with StableDiffusion and image generation concepts is helpful, but not essential.

|

||||

If you are looking to help with a code contribution, InvokeAI uses several different technologies under the hood: Python (Pydantic, FastAPI, diffusers) and Typescript (React, Redux Toolkit, ChakraUI, Mantine, Konva). Familiarity with StableDiffusion and image generation concepts is helpful, but not essential.

|

||||

|

||||

|

||||

## **Get Started**

|

||||

|

||||

@@ -5,7 +5,7 @@ If you're a new contributor to InvokeAI or Open Source Projects, this is the gui

|

||||

## New Contributor Checklist

|

||||

|

||||

- [x] Set up your local development environment & fork of InvokAI by following [the steps outlined here](../dev-environment.md)

|

||||

- [x] Set up your local tooling with [this guide](InvokeAI/contributing/LOCAL_DEVELOPMENT/#developing-invokeai-in-vscode). Feel free to skip this step if you already have tooling you're comfortable with.

|

||||

- [x] Set up your local tooling with [this guide](../LOCAL_DEVELOPMENT.md). Feel free to skip this step if you already have tooling you're comfortable with.

|

||||

- [x] Familiarize yourself with [Git](https://www.atlassian.com/git) & our project structure by reading through the [development documentation](development.md)

|

||||

- [x] Join the [#dev-chat](https://discord.com/channels/1020123559063990373/1049495067846524939) channel of the Discord

|

||||

- [x] Choose an issue to work on! This can be achieved by asking in the #dev-chat channel, tackling a [good first issue](https://github.com/invoke-ai/InvokeAI/contribute) or finding an item on the [roadmap](https://github.com/orgs/invoke-ai/projects/7). If nothing in any of those places catches your eye, feel free to work on something of interest to you!

|

||||

|

||||

@@ -1,6 +1,6 @@

|

||||

# Tutorials

|

||||

|

||||

Tutorials help new & existing users expand their abilty to use InvokeAI to the full extent of our features and services.

|

||||

Tutorials help new & existing users expand their ability to use InvokeAI to the full extent of our features and services.

|

||||

|

||||

Currently, we have a set of tutorials available on our [YouTube channel](https://www.youtube.com/@invokeai), but as InvokeAI continues to evolve with new updates, we want to ensure that we are giving our users the resources they need to succeed.

|

||||

|

||||

@@ -8,4 +8,4 @@ Tutorials can be in the form of videos or article walkthroughs on a subject of y

|

||||

|

||||

## Contributing

|

||||

|

||||

Please reach out to @imic or @hipsterusername on [Discord](https://discord.gg/ZmtBAhwWhy) to help create tutorials for InvokeAI.

|

||||

Please reach out to @imic or @hipsterusername on [Discord](https://discord.gg/ZmtBAhwWhy) to help create tutorials for InvokeAI.

|

||||

|

||||

@@ -17,46 +17,49 @@ If you just want to use Invoke, you should use the [installer][installer link].

|

||||

## Setup

|

||||

|

||||

1. Run through the [requirements][requirements link].

|

||||

1. [Fork and clone][forking link] the [InvokeAI repo][repo link].

|

||||

1. Create an directory for user data (images, models, db, etc). This is typically at `~/invokeai`, but if you already have a non-dev install, you may want to create a separate directory for the dev install.

|

||||

1. Create a python virtual environment inside the directory you just created:

|

||||

2. [Fork and clone][forking link] the [InvokeAI repo][repo link].

|

||||

3. Create an directory for user data (images, models, db, etc). This is typically at `~/invokeai`, but if you already have a non-dev install, you may want to create a separate directory for the dev install.

|

||||

4. Create a python virtual environment inside the directory you just created:

|

||||

|

||||

```sh

|

||||

python3 -m venv .venv --prompt InvokeAI-Dev

|

||||

```

|

||||

```sh

|

||||

python3 -m venv .venv --prompt InvokeAI-Dev

|

||||

```

|

||||

|

||||

1. Activate the venv (you'll need to do this every time you want to run the app):

|

||||

5. Activate the venv (you'll need to do this every time you want to run the app):

|

||||

|

||||

```sh

|

||||

source .venv/bin/activate

|

||||

```

|

||||

```sh

|

||||

source .venv/bin/activate

|

||||

```

|

||||

|

||||

1. Install the repo as an [editable install][editable install link]:

|

||||

6. Install the repo as an [editable install][editable install link]:

|

||||

|

||||

```sh

|

||||

pip install -e ".[dev,test,xformers]" --use-pep517 --extra-index-url https://download.pytorch.org/whl/cu121

|

||||

```

|

||||

```sh

|

||||

pip install -e ".[dev,test,xformers]" --use-pep517 --extra-index-url https://download.pytorch.org/whl/cu121

|

||||

```

|

||||

|

||||

Refer to the [manual installation][manual install link]] instructions for more determining the correct install options. `xformers` is optional, but `dev` and `test` are not.

|

||||

Refer to the [manual installation][manual install link]] instructions for more determining the correct install options. `xformers` is optional, but `dev` and `test` are not.

|

||||

|

||||

1. Install the frontend dev toolchain:

|

||||

7. Install the frontend dev toolchain:

|

||||

|

||||

- [`nodejs`](https://nodejs.org/) (recommend v20 LTS)

|

||||

- [`pnpm`](https://pnpm.io/installation#installing-a-specific-version) (must be v8 - not v9!)

|

||||

- [`pnpm`](https://pnpm.io/8.x/installation) (must be v8 - not v9!)

|

||||

|

||||

1. Do a production build of the frontend:

|

||||

8. Do a production build of the frontend:

|

||||

|

||||

```sh

|

||||

pnpm build

|

||||

```

|

||||

```sh

|

||||

cd PATH_TO_INVOKEAI_REPO/invokeai/frontend/web

|

||||

pnpm i

|

||||

pnpm build

|

||||

```

|

||||

|

||||

1. Start the application:

|

||||

9. Start the application:

|

||||

|

||||

```sh

|

||||

python scripts/invokeai-web.py

|

||||

```

|

||||

```sh

|

||||

cd PATH_TO_INVOKEAI_REPO

|

||||

python scripts/invokeai-web.py

|

||||

```

|

||||

|

||||

1. Access the UI at `localhost:9090`.

|

||||

10. Access the UI at `localhost:9090`.

|

||||

|

||||

## Updating the UI

|

||||

|

||||

|

||||

@@ -209,7 +209,7 @@ checkpoint models.

|

||||

|

||||

To solve this, go to the Model Manager tab (the cube), select the

|

||||

checkpoint model that's giving you trouble, and press the "Convert"

|

||||

button in the upper right of your browser window. This will conver the

|

||||

button in the upper right of your browser window. This will convert the

|

||||

checkpoint into a diffusers model, after which loading should be

|

||||

faster and less memory-intensive.

|

||||

|

||||

|

||||

@@ -97,16 +97,16 @@ Prior to installing PyPatchMatch, you need to take the following steps:

|

||||

sudo pacman -S --needed base-devel

|

||||

```

|

||||

|

||||

2. Install `opencv` and `blas`:

|

||||

2. Install `opencv`, `blas`, and required dependencies:

|

||||

|

||||

```sh

|

||||

sudo pacman -S opencv blas

|

||||

sudo pacman -S opencv blas fmt glew vtk hdf5

|

||||

```

|

||||

|

||||

or for CUDA support

|

||||

|

||||

```sh

|

||||

sudo pacman -S opencv-cuda blas

|

||||

sudo pacman -S opencv-cuda blas fmt glew vtk hdf5

|

||||

```

|

||||

|

||||

3. Fix the naming of the `opencv` package configuration file:

|

||||

|

||||

@@ -21,6 +21,7 @@ To use a community workflow, download the `.json` node graph file and load it in

|

||||

+ [Clothing Mask](#clothing-mask)

|

||||

+ [Contrast Limited Adaptive Histogram Equalization](#contrast-limited-adaptive-histogram-equalization)

|

||||

+ [Depth Map from Wavefront OBJ](#depth-map-from-wavefront-obj)

|

||||

+ [Enhance Detail](#enhance-detail)

|

||||

+ [Film Grain](#film-grain)

|

||||

+ [Generative Grammar-Based Prompt Nodes](#generative-grammar-based-prompt-nodes)

|

||||

+ [GPT2RandomPromptMaker](#gpt2randompromptmaker)

|

||||

@@ -40,6 +41,7 @@ To use a community workflow, download the `.json` node graph file and load it in

|

||||

+ [Metadata-Linked](#metadata-linked-nodes)

|

||||

+ [Negative Image](#negative-image)

|

||||

+ [Nightmare Promptgen](#nightmare-promptgen)

|

||||

+ [Ollama](#ollama-node)

|

||||

+ [One Button Prompt](#one-button-prompt)

|

||||

+ [Oobabooga](#oobabooga)

|

||||

+ [Prompt Tools](#prompt-tools)

|

||||

@@ -80,7 +82,7 @@ Note: These are inherited from the core nodes so any update to the core nodes sh

|

||||

|

||||

**Example Usage:**

|

||||

</br>

|

||||

<img src="https://github.com/skunkworxdark/autostereogram_nodes/blob/main/images/spider.png" width="200" /> -> <img src="https://github.com/skunkworxdark/autostereogram_nodes/blob/main/images/spider-depth.png" width="200" /> -> <img src="https://github.com/skunkworxdark/autostereogram_nodes/raw/main/images/spider-dots.png" width="200" /> <img src="https://github.com/skunkworxdark/autostereogram_nodes/raw/main/images/spider-pattern.png" width="200" />

|

||||

<img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider.png" width="200" /> -> <img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider-depth.png" width="200" /> -> <img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider-dots.png" width="200" /> <img src="https://raw.githubusercontent.com/skunkworxdark/autostereogram_nodes/refs/heads/main/images/spider-pattern.png" width="200" />

|

||||

|

||||

--------------------------------

|

||||

### Average Images

|

||||

@@ -141,6 +143,17 @@ To be imported, an .obj must use triangulated meshes, so make sure to enable tha

|

||||

**Example Usage:**

|

||||

</br><img src="https://raw.githubusercontent.com/dwringer/depth-from-obj-node/main/depth_from_obj_usage.jpg" width="500" />

|

||||

|

||||

--------------------------------

|

||||

### Enhance Detail

|

||||

|

||||

**Description:** A single node that can enhance the detail in an image. Increase or decrease details in an image using a guided filter (as opposed to the typical Gaussian blur used by most sharpening filters.) Based on the `Enhance Detail` ComfyUI node from https://github.com/spacepxl/ComfyUI-Image-Filters

|

||||

|

||||

**Node Link:** https://github.com/skunkworxdark/enhance-detail-node

|

||||

|

||||

**Example Usage:**

|

||||

</br>

|

||||

<img src="https://raw.githubusercontent.com/skunkworxdark/enhance-detail-node/refs/heads/main/images/Comparison.png" />

|

||||

|

||||

--------------------------------

|

||||

### Film Grain

|

||||

|

||||

@@ -307,7 +320,7 @@ View:

|

||||

**Node Link:** https://github.com/helix4u/load_video_frame

|

||||

|

||||

**Output Example:**

|

||||

<img src="https://raw.githubusercontent.com/helix4u/load_video_frame/main/_git_assets/testmp4_embed_converted.gif" width="500" />

|

||||

<img src="https://raw.githubusercontent.com/helix4u/load_video_frame/refs/heads/main/_git_assets/dance1736978273.gif" width="500" />

|

||||

|

||||

--------------------------------

|

||||

### Make 3D

|

||||

@@ -348,7 +361,7 @@ See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/mai

|

||||

|

||||

**Output Examples**

|

||||

|

||||

<img src="https://github.com/skunkworxdark/match_histogram/assets/21961335/ed12f329-a0ef-444a-9bae-129ed60d6097" width="300" />

|

||||

<img src="https://github.com/skunkworxdark/match_histogram/assets/21961335/ed12f329-a0ef-444a-9bae-129ed60d6097" />

|

||||

|

||||

--------------------------------

|

||||

### Metadata Linked Nodes

|

||||

@@ -390,10 +403,23 @@ View:

|

||||

|

||||

**Node Link:** [https://github.com/gogurtenjoyer/nightmare-promptgen](https://github.com/gogurtenjoyer/nightmare-promptgen)

|

||||

|

||||

--------------------------------

|

||||

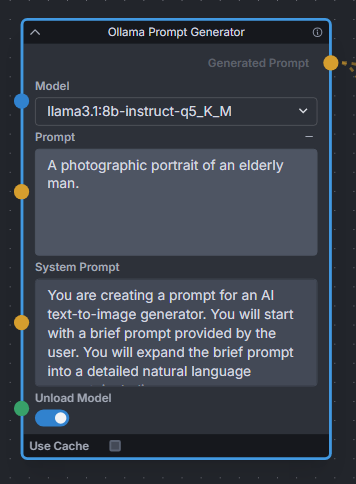

### Ollama Node

|

||||

|

||||

**Description:** Uses Ollama API to expand text prompts for text-to-image generation using local LLMs. Works great for expanding basic prompts into detailed natural language prompts for Flux. Also provides a toggle to unload the LLM model immediately after expanding, to free up VRAM for Invoke to continue the image generation workflow.

|

||||

|

||||

**Node Link:** https://github.com/Jonseed/Ollama-Node

|

||||

|

||||

**Example Node Graph:** https://github.com/Jonseed/Ollama-Node/blob/main/Ollama-Node-Flux-example.json

|

||||

|

||||

**View:**

|

||||

|

||||

|

||||

|

||||

--------------------------------

|

||||

### One Button Prompt

|

||||

|

||||

<img src="https://github.com/AIrjen/OneButtonPrompt_X_InvokeAI/blob/main/images/background.png" width="800" />

|

||||

<img src="https://raw.githubusercontent.com/AIrjen/OneButtonPrompt_X_InvokeAI/refs/heads/main/images/background.png" width="800" />

|

||||

|

||||

**Description:** an extensive suite of auto prompt generation and prompt helper nodes based on extensive logic. Get creative with the best prompt generator in the world.

|

||||

|

||||

@@ -403,7 +429,7 @@ The main node generates interesting prompts based on a set of parameters. There

|

||||

|

||||

**Nodes:**

|

||||

|

||||

<img src="https://github.com/AIrjen/OneButtonPrompt_X_InvokeAI/blob/main/images/OBP_nodes_invokeai.png" width="800" />

|

||||

<img src="https://raw.githubusercontent.com/AIrjen/OneButtonPrompt_X_InvokeAI/refs/heads/main/images/OBP_nodes_invokeai.png" width="800" />

|

||||

|

||||

--------------------------------

|

||||

### Oobabooga

|

||||

@@ -456,7 +482,7 @@ See full docs here: https://github.com/skunkworxdark/Prompt-tools-nodes/edit/mai

|

||||

|

||||

**Workflow Examples**

|

||||

|

||||

<img src="https://github.com/skunkworxdark/prompt-tools/blob/main/images/CSVToIndexStringNode.png" width="300" />

|

||||

<img src="https://raw.githubusercontent.com/skunkworxdark/prompt-tools/refs/heads/main/images/CSVToIndexStringNode.png"/>

|

||||

|

||||

--------------------------------

|

||||

### Remote Image

|

||||

@@ -594,7 +620,7 @@ See full docs here: https://github.com/skunkworxdark/XYGrid_nodes/edit/main/READ

|

||||

|

||||

**Output Examples**

|

||||

|

||||

<img src="https://github.com/skunkworxdark/XYGrid_nodes/blob/main/images/collage.png" width="300" />

|

||||

<img src="https://raw.githubusercontent.com/skunkworxdark/XYGrid_nodes/refs/heads/main/images/collage.png" />

|

||||

|

||||

|

||||

--------------------------------

|

||||

|

||||

@@ -99,7 +99,6 @@ their descriptions.

|

||||

| Scale Latents | Scales latents by a given factor. |

|

||||

| Segment Anything Processor | Applies segment anything processing to image |

|

||||

| Show Image | Displays a provided image, and passes it forward in the pipeline. |

|

||||

| Step Param Easing | Experimental per-step parameter easing for denoising steps |

|

||||

| String Primitive Collection | A collection of string primitive values |

|

||||

| String Primitive | A string primitive value |

|

||||

| Subtract Integers | Subtracts two numbers |

|

||||

|

||||

6

flake.lock

generated

6

flake.lock

generated

@@ -2,11 +2,11 @@

|

||||

"nodes": {

|

||||

"nixpkgs": {

|

||||

"locked": {

|

||||

"lastModified": 1690630721,

|

||||

"narHash": "sha256-Y04onHyBQT4Erfr2fc82dbJTfXGYrf4V0ysLUYnPOP8=",

|

||||

"lastModified": 1727955264,

|

||||

"narHash": "sha256-lrd+7mmb5NauRoMa8+J1jFKYVa+rc8aq2qc9+CxPDKc=",

|

||||

"owner": "NixOS",

|

||||

"repo": "nixpkgs",

|

||||

"rev": "d2b52322f35597c62abf56de91b0236746b2a03d",

|

||||

"rev": "71cd616696bd199ef18de62524f3df3ffe8b9333",

|

||||

"type": "github"

|

||||

},

|

||||

"original": {

|

||||

|

||||

@@ -34,7 +34,7 @@

|

||||

cudaPackages.cudnn

|

||||

cudaPackages.cuda_nvrtc

|

||||

cudatoolkit

|

||||

pkgconfig

|

||||

pkg-config

|

||||

libconfig

|

||||

cmake

|

||||

blas

|

||||

@@ -66,7 +66,7 @@

|

||||

black

|

||||

|

||||

# Frontend.

|

||||

yarn

|

||||

pnpm_8

|

||||

nodejs

|

||||

];

|

||||

LD_LIBRARY_PATH = pkgs.lib.makeLibraryPath buildInputs;

|

||||

|

||||

@@ -12,7 +12,7 @@ MINIMUM_PYTHON_VERSION=3.10.0

|

||||

MAXIMUM_PYTHON_VERSION=3.11.100

|

||||

PYTHON=""

|

||||

for candidate in python3.11 python3.10 python3 python ; do

|

||||

if ppath=`which $candidate`; then

|

||||

if ppath=`which $candidate 2>/dev/null`; then

|

||||

# when using `pyenv`, the executable for an inactive Python version will exist but will not be operational

|

||||

# we check that this found executable can actually run

|

||||

if [ $($candidate --version &>/dev/null; echo ${PIPESTATUS}) -gt 0 ]; then continue; fi

|

||||

@@ -30,10 +30,11 @@ done

|

||||

if [ -z "$PYTHON" ]; then

|

||||

echo "A suitable Python interpreter could not be found"

|

||||

echo "Please install Python $MINIMUM_PYTHON_VERSION or higher (maximum $MAXIMUM_PYTHON_VERSION) before running this script. See instructions at $INSTRUCTIONS for help."

|

||||

echo "For the best user experience we suggest enlarging or maximizing this window now."

|

||||

read -p "Press any key to exit"

|

||||

exit -1

|

||||

fi

|

||||

|

||||

echo "For the best user experience we suggest enlarging or maximizing this window now."

|

||||

|

||||

exec $PYTHON ./lib/main.py ${@}

|

||||

read -p "Press any key to exit"

|

||||

|

||||

@@ -245,6 +245,9 @@ class InvokeAiInstance:

|

||||

|

||||

pip = local[self.pip]

|

||||

|

||||

# Uninstall xformers if it is present; the correct version of it will be reinstalled if needed

|

||||

_ = pip["uninstall", "-yqq", "xformers"] & FG

|

||||

|

||||

pipeline = pip[

|

||||

"install",

|

||||

"--require-virtualenv",

|

||||

@@ -282,12 +285,6 @@ class InvokeAiInstance:

|

||||

shutil.copy(src, dest)

|

||||

os.chmod(dest, 0o0755)

|

||||

|

||||

def update(self):

|

||||

pass

|

||||

|

||||

def remove(self):

|

||||

pass

|

||||

|

||||

|

||||

### Utility functions ###

|

||||

|

||||

@@ -402,7 +399,7 @@ def get_torch_source() -> Tuple[str | None, str | None]:

|

||||

:rtype: list

|

||||

"""

|

||||

|

||||

from messages import select_gpu

|

||||

from messages import GpuType, select_gpu

|

||||

|

||||

# device can be one of: "cuda", "rocm", "cpu", "cuda_and_dml, autodetect"

|

||||

device = select_gpu()

|

||||

@@ -412,16 +409,22 @@ def get_torch_source() -> Tuple[str | None, str | None]:

|

||||

url = None

|

||||

optional_modules: str | None = None

|

||||

if OS == "Linux":

|

||||

if device.value == "rocm":

|

||||

url = "https://download.pytorch.org/whl/rocm5.6"

|

||||

elif device.value == "cpu":

|

||||

if device == GpuType.ROCM:

|

||||

url = "https://download.pytorch.org/whl/rocm6.1"

|

||||

elif device == GpuType.CPU:

|

||||

url = "https://download.pytorch.org/whl/cpu"

|

||||

elif device.value == "cuda":

|

||||

# CUDA uses the default PyPi index

|

||||

elif device == GpuType.CUDA:

|

||||

url = "https://download.pytorch.org/whl/cu124"

|

||||

optional_modules = "[onnx-cuda]"

|

||||

elif device == GpuType.CUDA_WITH_XFORMERS:

|

||||

url = "https://download.pytorch.org/whl/cu124"

|

||||

optional_modules = "[xformers,onnx-cuda]"

|

||||

elif OS == "Windows":

|

||||

if device.value == "cuda":

|

||||

url = "https://download.pytorch.org/whl/cu121"

|

||||

if device == GpuType.CUDA:

|

||||

url = "https://download.pytorch.org/whl/cu124"

|

||||

optional_modules = "[onnx-cuda]"

|

||||

elif device == GpuType.CUDA_WITH_XFORMERS:

|

||||

url = "https://download.pytorch.org/whl/cu124"

|

||||

optional_modules = "[xformers,onnx-cuda]"

|

||||

elif device.value == "cpu":

|

||||

# CPU uses the default PyPi index, no optional modules

|

||||

|

||||

@@ -206,6 +206,7 @@ def dest_path(dest: Optional[str | Path] = None) -> Path | None:

|

||||

|

||||

|

||||

class GpuType(Enum):

|

||||

CUDA_WITH_XFORMERS = "xformers"

|

||||

CUDA = "cuda"

|

||||

ROCM = "rocm"

|

||||

CPU = "cpu"

|

||||

@@ -221,11 +222,15 @@ def select_gpu() -> GpuType:

|

||||

return GpuType.CPU

|

||||

|

||||

nvidia = (

|

||||

"an [gold1 b]NVIDIA[/] GPU (using CUDA™)",

|

||||

"an [gold1 b]NVIDIA[/] RTX 3060 or newer GPU using CUDA",

|

||||

GpuType.CUDA,

|

||||

)

|

||||

vintage_nvidia = (

|

||||

"an [gold1 b]NVIDIA[/] RTX 20xx or older GPU using CUDA+xFormers",

|

||||

GpuType.CUDA_WITH_XFORMERS,

|

||||

)

|

||||

amd = (

|

||||

"an [gold1 b]AMD[/] GPU (using ROCm™)",

|

||||

"an [gold1 b]AMD[/] GPU using ROCm",

|

||||

GpuType.ROCM,

|

||||

)

|

||||

cpu = (

|

||||

@@ -235,14 +240,13 @@ def select_gpu() -> GpuType:

|

||||

|

||||

options = []

|

||||

if OS == "Windows":

|

||||

options = [nvidia, cpu]

|

||||

options = [nvidia, vintage_nvidia, cpu]

|

||||

if OS == "Linux":

|

||||

options = [nvidia, amd, cpu]

|

||||

options = [nvidia, vintage_nvidia, amd, cpu]

|

||||

elif OS == "Darwin":

|

||||

options = [cpu]

|

||||

|

||||

if len(options) == 1:

|

||||

print(f'Your platform [gold1]{OS}-{ARCH}[/] only supports the "{options[0][1]}" driver. Proceeding with that.')

|

||||

return options[0][1]

|

||||

|

||||

options = {str(i): opt for i, opt in enumerate(options, 1)}

|

||||

@@ -255,7 +259,7 @@ def select_gpu() -> GpuType:

|

||||

[

|

||||

f"Detected the [gold1]{OS}-{ARCH}[/] platform",

|

||||

"",

|

||||

"See [deep_sky_blue1]https://invoke-ai.github.io/InvokeAI/#system[/] to ensure your system meets the minimum requirements.",

|

||||

"See [deep_sky_blue1]https://invoke-ai.github.io/InvokeAI/installation/requirements/[/] to ensure your system meets the minimum requirements.",

|

||||

"",

|

||||

"[red3]🠶[/] [b]Your GPU drivers must be correctly installed before using InvokeAI![/] [red3]🠴[/]",

|

||||

]

|

||||

|

||||

@@ -68,7 +68,7 @@ do_line_input() {

|

||||

printf "2: Open the developer console\n"

|

||||

printf "3: Command-line help\n"

|

||||

printf "Q: Quit\n\n"

|

||||

printf "To update, download and run the installer from https://github.com/invoke-ai/InvokeAI/releases/latest.\n\n"

|

||||

printf "To update, download and run the installer from https://github.com/invoke-ai/InvokeAI/releases/latest\n\n"

|

||||

read -p "Please enter 1-4, Q: [1] " yn

|

||||

choice=${yn:='1'}

|

||||

do_choice $choice

|

||||

|

||||

@@ -40,6 +40,8 @@ class AppVersion(BaseModel):

|

||||

|

||||

version: str = Field(description="App version")

|

||||

|

||||

highlights: Optional[list[str]] = Field(default=None, description="Highlights of release")

|

||||

|

||||

|

||||

class AppDependencyVersions(BaseModel):

|

||||

"""App depencency Versions Response"""

|

||||

|

||||

@@ -5,9 +5,10 @@ from fastapi.routing import APIRouter

|

||||

from pydantic import BaseModel, Field

|

||||

|

||||

from invokeai.app.api.dependencies import ApiDependencies

|

||||

from invokeai.app.services.board_records.board_records_common import BoardChanges

|

||||

from invokeai.app.services.board_records.board_records_common import BoardChanges, BoardRecordOrderBy

|

||||

from invokeai.app.services.boards.boards_common import BoardDTO

|

||||

from invokeai.app.services.shared.pagination import OffsetPaginatedResults

|

||||

from invokeai.app.services.shared.sqlite.sqlite_common import SQLiteDirection

|

||||

|

||||

boards_router = APIRouter(prefix="/v1/boards", tags=["boards"])

|

||||

|

||||

@@ -115,6 +116,8 @@ async def delete_board(

|

||||

response_model=Union[OffsetPaginatedResults[BoardDTO], list[BoardDTO]],

|

||||

)

|

||||

async def list_boards(

|

||||

order_by: BoardRecordOrderBy = Query(default=BoardRecordOrderBy.CreatedAt, description="The attribute to order by"),

|

||||

direction: SQLiteDirection = Query(default=SQLiteDirection.Descending, description="The direction to order by"),

|

||||

all: Optional[bool] = Query(default=None, description="Whether to list all boards"),

|

||||

offset: Optional[int] = Query(default=None, description="The page offset"),

|

||||

limit: Optional[int] = Query(default=None, description="The number of boards per page"),

|

||||

@@ -122,9 +125,9 @@ async def list_boards(

|

||||

) -> Union[OffsetPaginatedResults[BoardDTO], list[BoardDTO]]:

|

||||

"""Gets a list of boards"""

|

||||

if all:

|

||||

return ApiDependencies.invoker.services.boards.get_all(include_archived)

|

||||

return ApiDependencies.invoker.services.boards.get_all(order_by, direction, include_archived)

|

||||

elif offset is not None and limit is not None:

|

||||

return ApiDependencies.invoker.services.boards.get_many(offset, limit, include_archived)

|

||||

return ApiDependencies.invoker.services.boards.get_many(order_by, direction, offset, limit, include_archived)

|

||||

else:

|

||||

raise HTTPException(

|

||||

status_code=400,

|

||||

|

||||

@@ -1,6 +1,7 @@

|

||||

# Copyright (c) 2023 Lincoln D. Stein

|

||||

"""FastAPI route for model configuration records."""

|

||||

|

||||

import contextlib

|

||||

import io

|

||||

import pathlib

|

||||

import shutil

|

||||

@@ -10,6 +11,7 @@ from enum import Enum

|

||||

from tempfile import TemporaryDirectory

|

||||

from typing import List, Optional, Type

|

||||

|

||||

import huggingface_hub

|

||||

from fastapi import Body, Path, Query, Response, UploadFile

|

||||

from fastapi.responses import FileResponse, HTMLResponse

|

||||

from fastapi.routing import APIRouter

|

||||

@@ -27,6 +29,7 @@ from invokeai.app.services.model_records import (

|

||||

ModelRecordChanges,

|

||||

UnknownModelException,

|

||||

)

|

||||

from invokeai.app.util.suppress_output import SuppressOutput

|

||||

from invokeai.backend.model_manager.config import (

|

||||

AnyModelConfig,

|

||||

BaseModelType,

|

||||

@@ -38,7 +41,12 @@ from invokeai.backend.model_manager.load.model_cache.model_cache_base import Cac

|

||||

from invokeai.backend.model_manager.metadata.fetch.huggingface import HuggingFaceMetadataFetch

|

||||

from invokeai.backend.model_manager.metadata.metadata_base import ModelMetadataWithFiles, UnknownMetadataException

|

||||

from invokeai.backend.model_manager.search import ModelSearch

|

||||

from invokeai.backend.model_manager.starter_models import STARTER_MODELS, StarterModel, StarterModelWithoutDependencies

|

||||

from invokeai.backend.model_manager.starter_models import (

|

||||

STARTER_BUNDLES,

|

||||

STARTER_MODELS,

|

||||

StarterModel,

|

||||

StarterModelWithoutDependencies,

|

||||

)

|

||||

|

||||

model_manager_router = APIRouter(prefix="/v2/models", tags=["model_manager"])

|

||||

|

||||

@@ -792,22 +800,52 @@ async def convert_model(

|

||||

return new_config

|

||||

|

||||

|

||||

@model_manager_router.get("/starter_models", operation_id="get_starter_models", response_model=list[StarterModel])

|

||||

async def get_starter_models() -> list[StarterModel]:

|

||||

class StarterModelResponse(BaseModel):

|

||||

starter_models: list[StarterModel]

|

||||

starter_bundles: dict[str, list[StarterModel]]

|

||||

|

||||

|

||||

def get_is_installed(

|

||||

starter_model: StarterModel | StarterModelWithoutDependencies, installed_models: list[AnyModelConfig]

|

||||

) -> bool:

|

||||

for model in installed_models:

|

||||

if model.source == starter_model.source:

|

||||

return True

|

||||

if (

|

||||

(model.name == starter_model.name or model.name in starter_model.previous_names)

|

||||

and model.base == starter_model.base

|

||||

and model.type == starter_model.type

|

||||

):

|

||||

return True

|

||||

return False

|

||||

|

||||

|

||||

@model_manager_router.get("/starter_models", operation_id="get_starter_models", response_model=StarterModelResponse)

|

||||

async def get_starter_models() -> StarterModelResponse:

|

||||

installed_models = ApiDependencies.invoker.services.model_manager.store.search_by_attr()

|

||||

installed_model_sources = {m.source for m in installed_models}

|

||||

starter_models = deepcopy(STARTER_MODELS)

|

||||

starter_bundles = deepcopy(STARTER_BUNDLES)

|

||||

for model in starter_models:

|

||||

if model.source in installed_model_sources:

|

||||

model.is_installed = True

|

||||

model.is_installed = get_is_installed(model, installed_models)

|

||||

# Remove already-installed dependencies

|

||||

missing_deps: list[StarterModelWithoutDependencies] = []

|

||||

|